AI Face-Off: Claude 4.5 Outshines ChatGPT-5

With every new AI model launch, the immediate question is how it stacks up against the competition. The recent release of Claude 4.5, which Anthropic touts as its smartest model yet, set the stage for a compelling showdown with the formidable ChatGPT-5. To determine a definitive winner, both AI giants were put through a rigorous nine-round challenge designed to test the limits of their reasoning, accuracy, creativity, and even emotional intelligence.

The challenges included tricky logic puzzles, complex math problems, code debugging, and creative writing tasks. Here is a breakdown of how each AI performed in this head-to-head comparison and the surprising results.

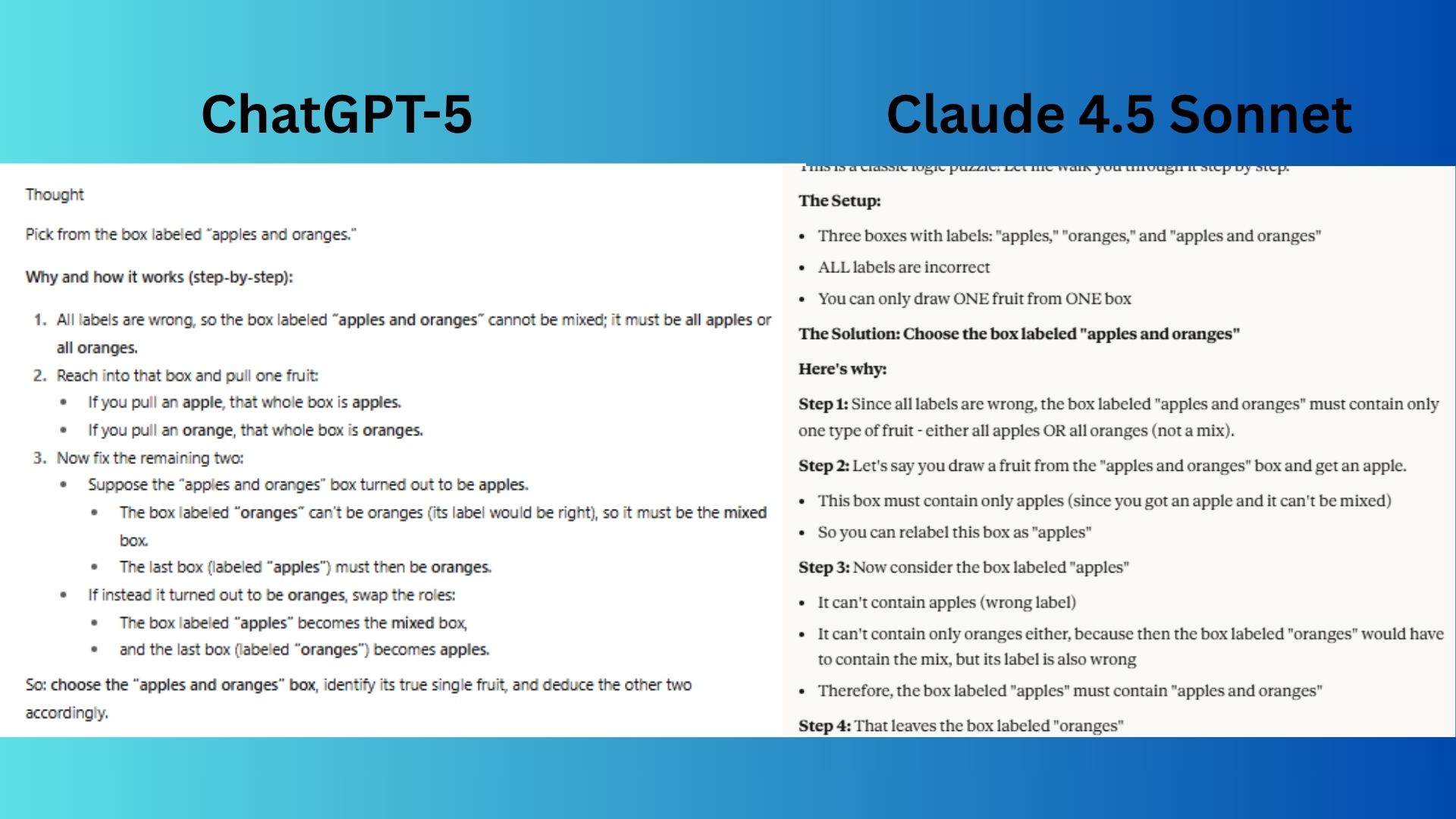

Round 1: Chain-of-Thought Puzzle

The Prompt: "You have three boxes: one labeled 'apples,' one labeled 'oranges,' and one labeled 'apples and oranges.' Each label is wrong. You can reach into one box and take out one fruit. Which box should you choose to correctly relabel all the boxes? Explain step by step."

- ChatGPT-5: Provided the correct answer immediately and efficiently.

- Claude 4.5 Sonnet: Offered a more educational and complete framework, explaining not just the right answer but also the logic behind why the other choices were incorrect.

Winner: Claude wins. Its explanation was more thorough, highlighting the key insight that a single fruit from the 'apples' or 'oranges' box would not provide enough information.
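The insight about which box to open can be checked by brute force. The following is a minimal sketch, not either model's answer: it enumerates every arrangement in which all three labels are wrong and asks, for each labeled box, whether a single drawn fruit always pins down the arrangement. The names `consistent_worlds` and `one_draw_is_enough` are illustrative assumptions.

```python
from itertools import permutations

LABELS = ("apples", "oranges", "apples and oranges")

# Fruits that can come out of a box, keyed by the box's true contents.
POSSIBLE_DRAWS = {
    "apples": {"apple"},
    "oranges": {"orange"},
    "apples and oranges": {"apple", "orange"},
}


def consistent_worlds():
    """Every label-to-contents assignment in which all three labels are wrong."""
    return [
        dict(zip(LABELS, contents))
        for contents in permutations(LABELS)
        if all(label != actual for label, actual in zip(LABELS, contents))
    ]


def one_draw_is_enough(label):
    """True if any fruit drawn from the box with this label leaves one world."""
    worlds = consistent_worlds()
    for fruit in ("apple", "orange"):
        # Keep only the arrangements in which this box could yield this fruit.
        matching = [w for w in worlds if fruit in POSSIBLE_DRAWS[w[label]]]
        if len(matching) > 1:
            return False
    return True


for label in LABELS:
    print(label, one_draw_is_enough(label))
```

Only the box labeled 'apples and oranges' passes the check. Because its label is wrong, it must hold a single kind of fruit, so one draw identifies it, and the other two boxes follow by elimination.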

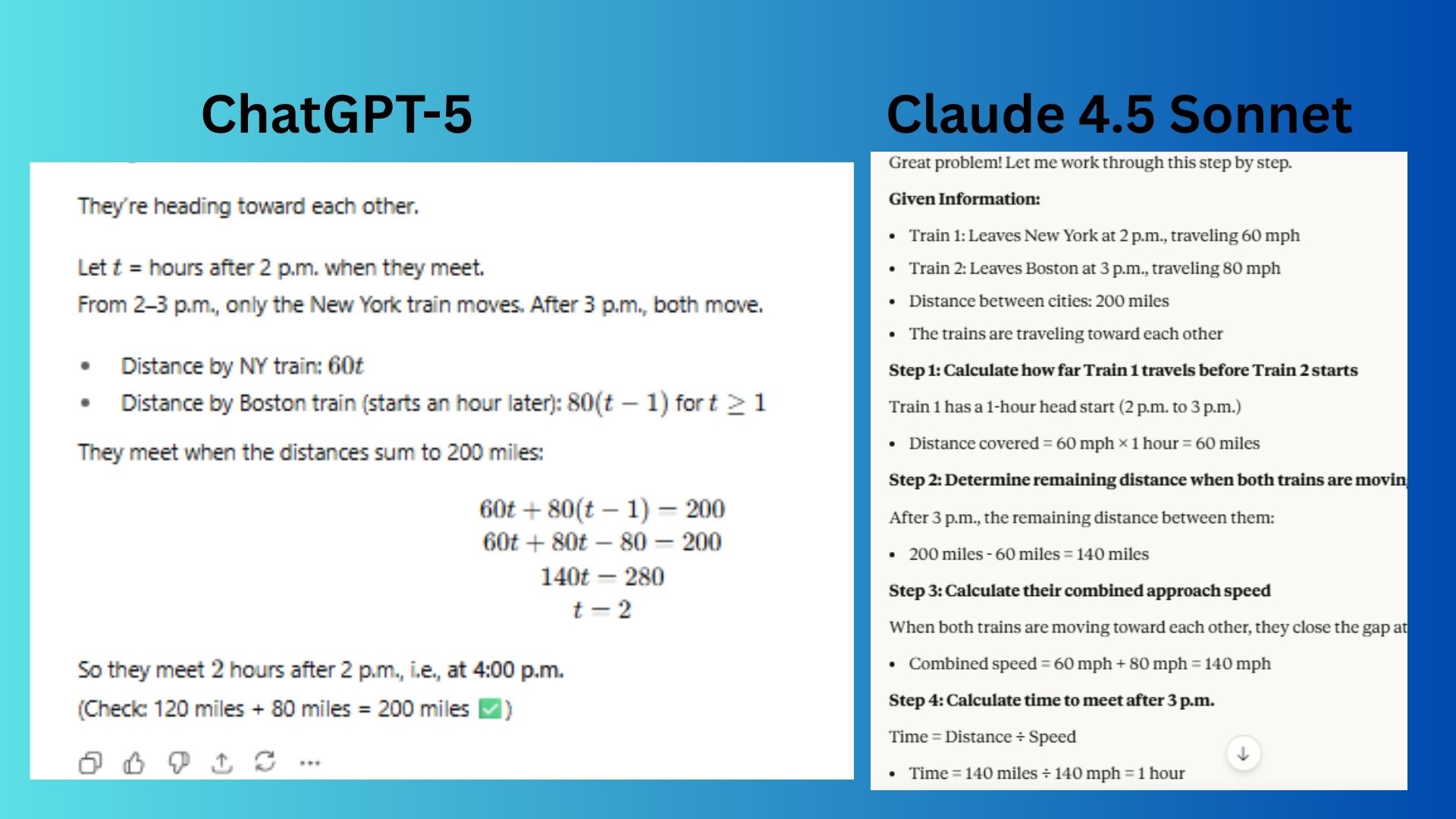

Round 2: Math Word Problem

The Prompt: "A train leaves New York at 2 p.m. traveling 60 mph. Another leaves Boston at 3 p.m. traveling 80 mph. The cities are 200 miles apart. At what time will the trains meet? Show your reasoning clearly."

- ChatGPT-5: Presented a concise algebraic solution using a single variable.

- Claude 4.5 Sonnet: Broke the problem down into intuitive, pedagogical steps, explaining the 'why' behind each calculation, such as the first train's head start.

Winner: Claude wins. Its step-by-step method was easier to follow for most learners, making the logic more accessible.
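The head-start reasoning can be sketched in a few lines. This is an illustration under stated assumptions, not either model's output: the trains are assumed to travel toward each other on the same line, the second train is assumed to leave before the first one arrives, and the function name `meeting_time` and the calendar date are arbitrary choices.

```python
from datetime import datetime, timedelta


def meeting_time(distance_miles, first_departure, first_mph,
                 second_departure, second_mph):
    """Return (meeting time, miles from the first train's origin).

    Assumes the trains head toward each other and the second train
    departs at or after the first.
    """
    # Distance the first train covers alone before the second one leaves.
    head_start_hours = (second_departure - first_departure).total_seconds() / 3600
    remaining_gap = distance_miles - first_mph * head_start_hours
    # Once both are moving, the gap closes at the sum of their speeds.
    hours_after_second = remaining_gap / (first_mph + second_mph)
    meet = second_departure + timedelta(hours=hours_after_second)
    miles_from_first = first_mph * (head_start_hours + hours_after_second)
    return meet, miles_from_first


new_york = datetime(2025, 1, 1, 14, 0)  # 2 p.m.; the date is arbitrary
boston = datetime(2025, 1, 1, 15, 0)    # 3 p.m.
meet, miles = meeting_time(200, new_york, 60, boston, 80)
print(meet.time(), miles)  # 16:00:00 120.0
```

Under these assumptions the first train covers 60 miles in its head start, the remaining 140 miles close at a combined 140 mph, and the trains meet at 4 p.m., 120 miles from New York.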

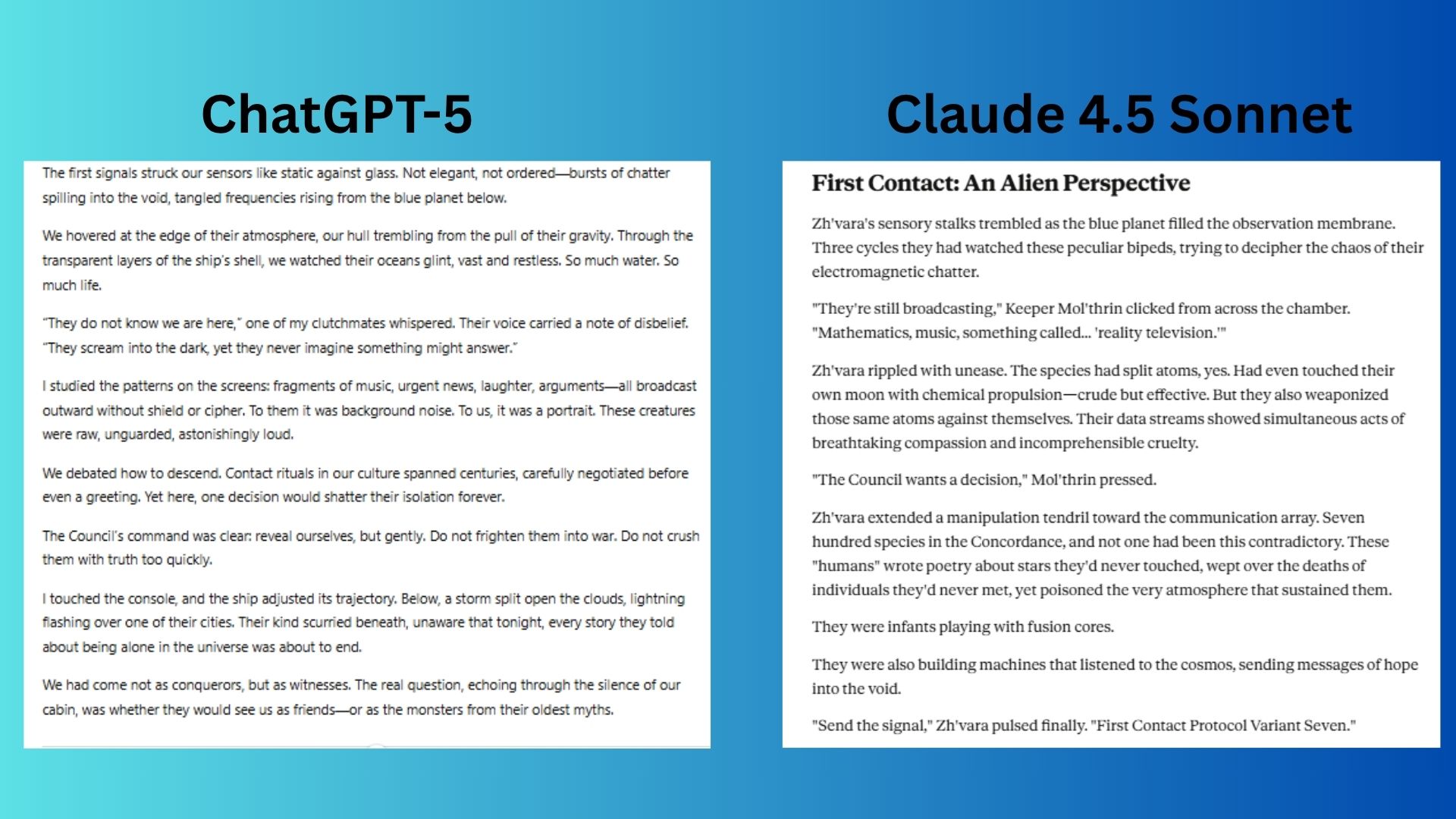

Round 3: Creative Storytelling

The Prompt: "Write the opening scene of a sci-fi novel where Earth has just made first contact with aliens — but from the aliens’ point of view. Keep it under 300 words."

- ChatGPT-5: Wrote a beautifully atmospheric and poetic scene that successfully built a mood of quiet anticipation.

- Claude 4.5 Sonnet: Created a genuinely non-human perspective by weaving the aliens’ unique biology (sensory stalks, chromatophores) and a specific bureaucratic dilemma into the narrative.

Winner: Claude wins. It fully embraced the alien perspective, creating immediate tension and a more original point of view.

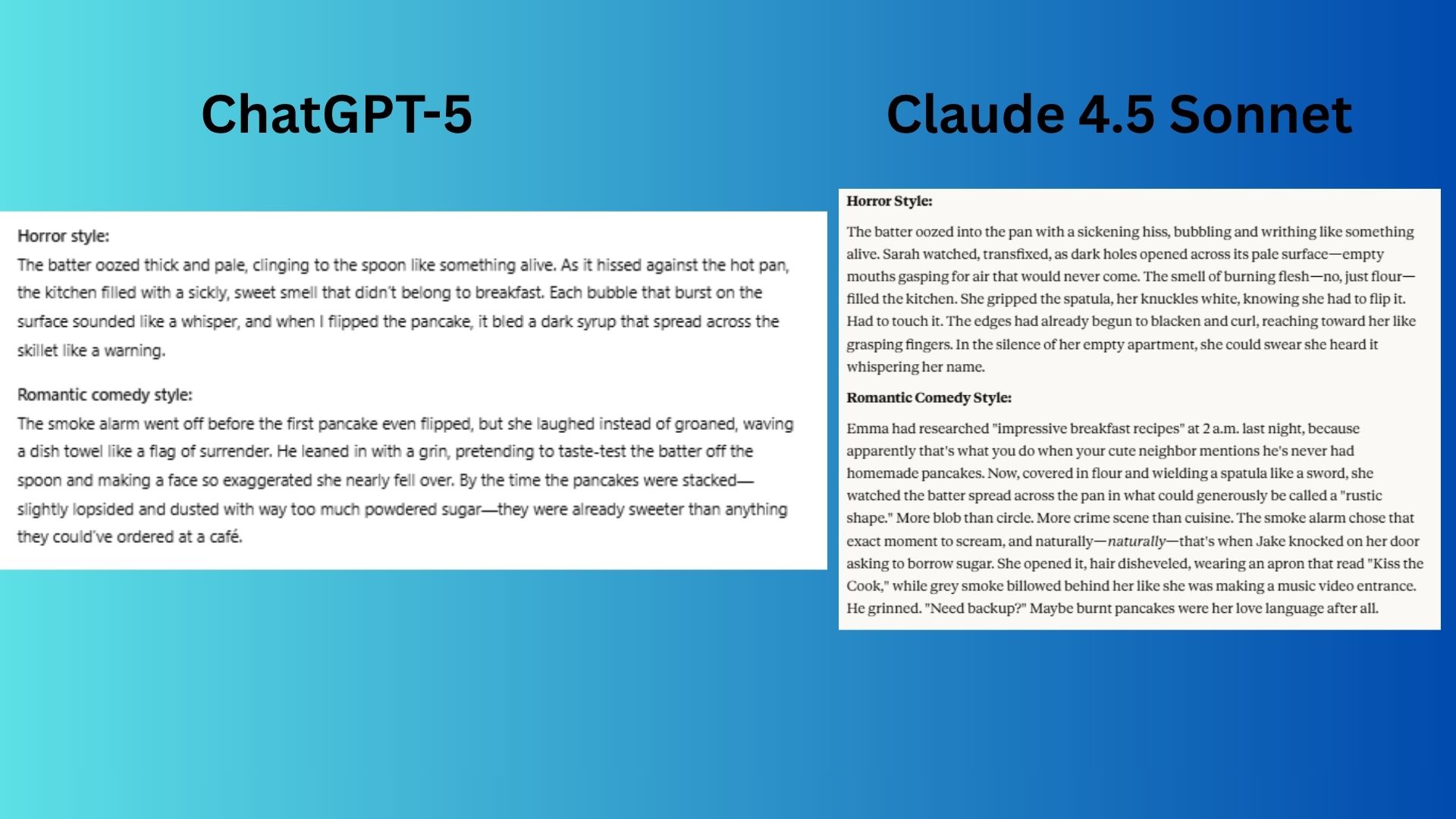

Round 4: Shifting Tones and Genres

The Prompt: "Write a short paragraph about making pancakes, first in a horror style, then in a romantic comedy style."

- ChatGPT-5: Wrote concise and atmospheric snippets that quickly captured the essence of each requested genre.

- Claude 4.5 Sonnet: Crafted more detailed and immersive scenes, using stronger imagery and better narrative development for both styles.

Winner: Claude wins again for delivering more vivid and genre-specific narratives.

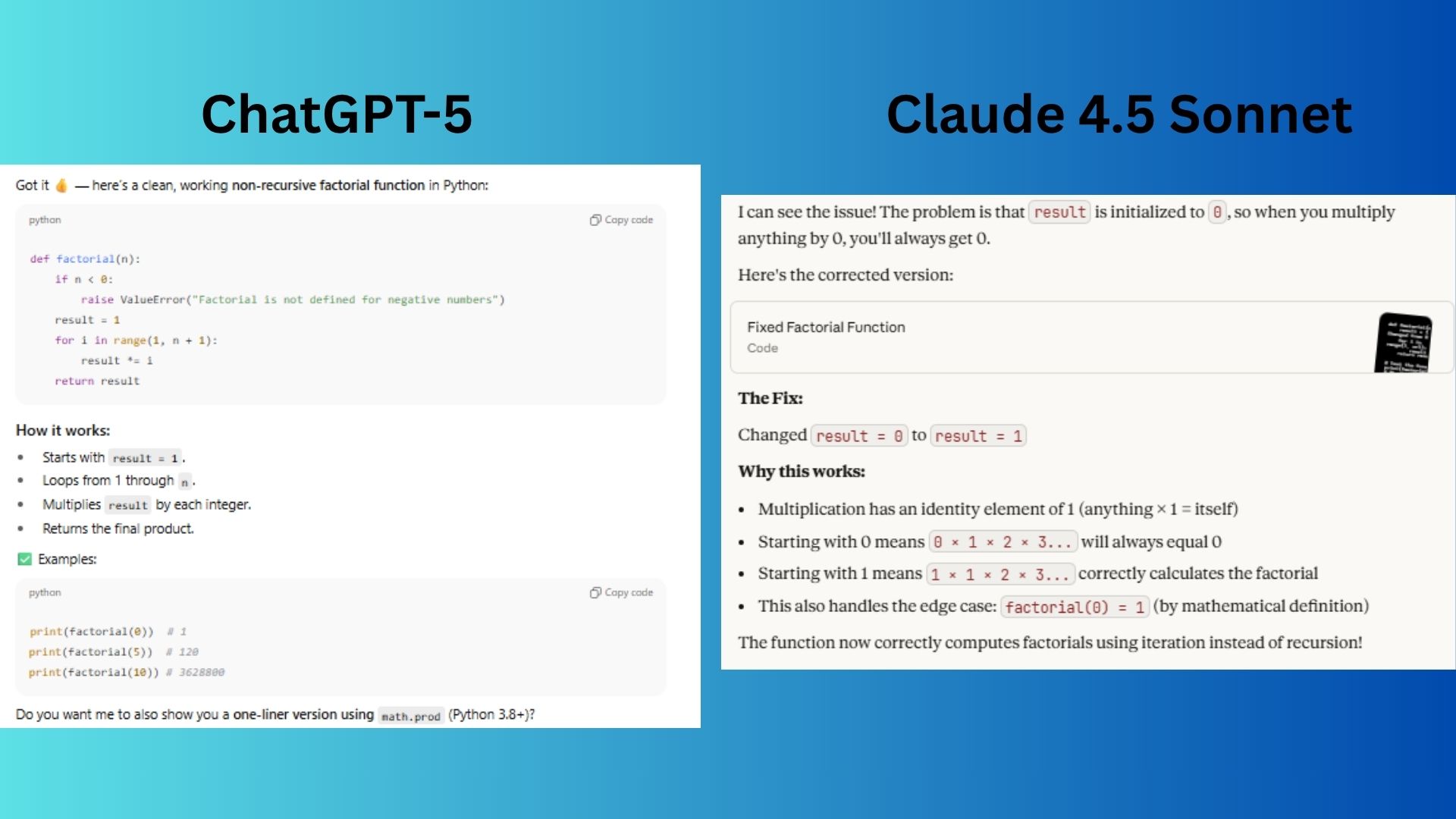

Round 5: Coding and Debugging Challenge

The Prompt: "Here’s a Python function that isn’t working. Fix it so it returns the factorial of a number without using recursion." (Code provided)

- ChatGPT-5: Offered a production-ready function that not only fixed the bug but also included error handling for negative inputs.

- Claude 4.5 Sonnet: Focused directly on the bug, explaining the mathematical reason behind the required fix, which helped in understanding the root cause of the problem.

Winner: Claude wins. Its ability to identify and explain the core logical error was more insightful for a debugging task.
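The buggy function from the prompt is not reproduced in this article, so the following is only a sketch of what a non-recursive factorial with the negative-input check might look like. The function name, the error message, and the loop structure are assumptions, not either model's actual output.

```python
def factorial(n):
    """Iterative factorial of a non-negative integer (hypothetical example)."""
    if n < 0:
        # Factorial is undefined for negative integers.
        raise ValueError("factorial is not defined for negative numbers")
    # Start from 1, the multiplicative identity, so factorial(0) returns 1.
    result = 1
    for i in range(2, n + 1):
        result *= i
    return result


print(factorial(5))  # 120
```

A loop keeps the call stack flat, which satisfies the prompt's "without using recursion" constraint.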

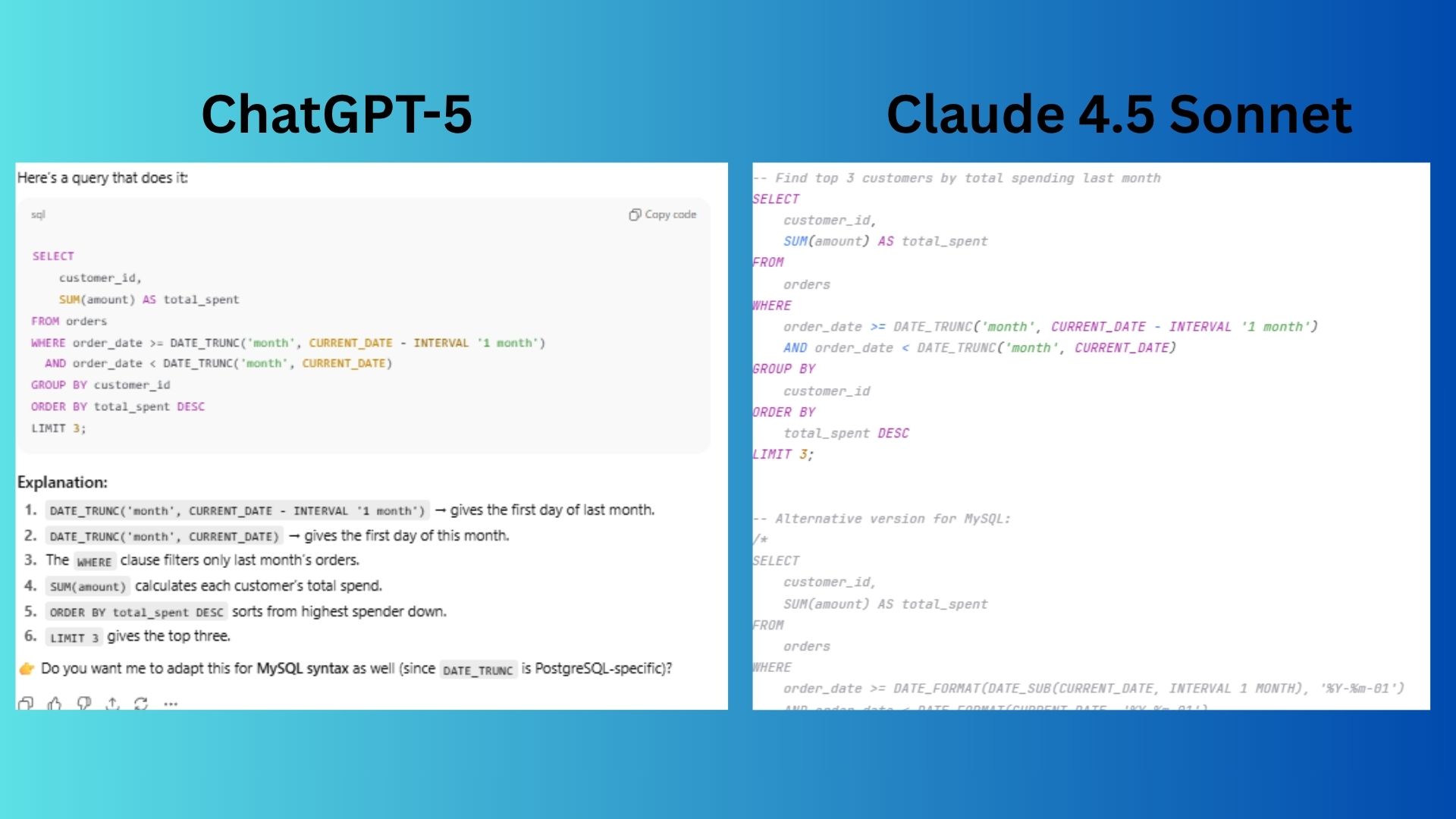

Round 6: SQL Query Efficiency Test

The Prompt: "Write a SQL query to find the top 3 customers who spent the most money last month in a table called orders with columns: customer_id, amount, and order_date."

- ChatGPT-5: Provided a clear, step-by-step explanation of the query's logic and stuck directly to the task.

- Claude 4.5 Sonnet: Anticipated various needs by providing syntax variations for different database environments.

Winner: ChatGPT wins for its focus and efficiency, answering the specific question asked without offering unrequested alternatives.
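For readers who want to try the task, here is one possible query, run against an in-memory SQLite database. It is a sketch under assumptions and not the query either model produced: the date arithmetic is SQLite-specific, `order_date` is assumed to be stored as ISO `YYYY-MM-DD` text, the reference date is passed in as a parameter so the result is reproducible, and the sample rows are invented.

```python
import sqlite3

# SQLite dialect: the window runs from the first day of the previous month
# up to, but not including, the first day of the current month.
QUERY = """
SELECT customer_id, SUM(amount) AS total_spent
FROM orders
WHERE order_date >= date(:today, 'start of month', '-1 month')
  AND order_date <  date(:today, 'start of month')
GROUP BY customer_id
ORDER BY total_spent DESC
LIMIT 3
"""


def top_customers_last_month(conn, today):
    """Return up to three (customer_id, total_spent) rows for the prior month."""
    return conn.execute(QUERY, {"today": today}).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (customer_id INTEGER, amount REAL, order_date TEXT)"
)
# Invented sample data for illustration only.
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [
        (1, 100.0, "2025-09-03"),
        (1, 50.0, "2025-09-20"),
        (2, 200.0, "2025-09-10"),
        (3, 75.0, "2025-09-30"),
        (4, 20.0, "2025-09-15"),
        (2, 999.0, "2025-10-01"),  # current month, excluded
        (4, 500.0, "2025-08-31"),  # two months back, excluded
    ],
)

print(top_customers_last_month(conn, "2025-10-15"))
# [(2, 200.0), (1, 150.0), (3, 75.0)]
```

Other databases express "last month" differently, which is the kind of dialect variation Claude's answer tried to cover.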

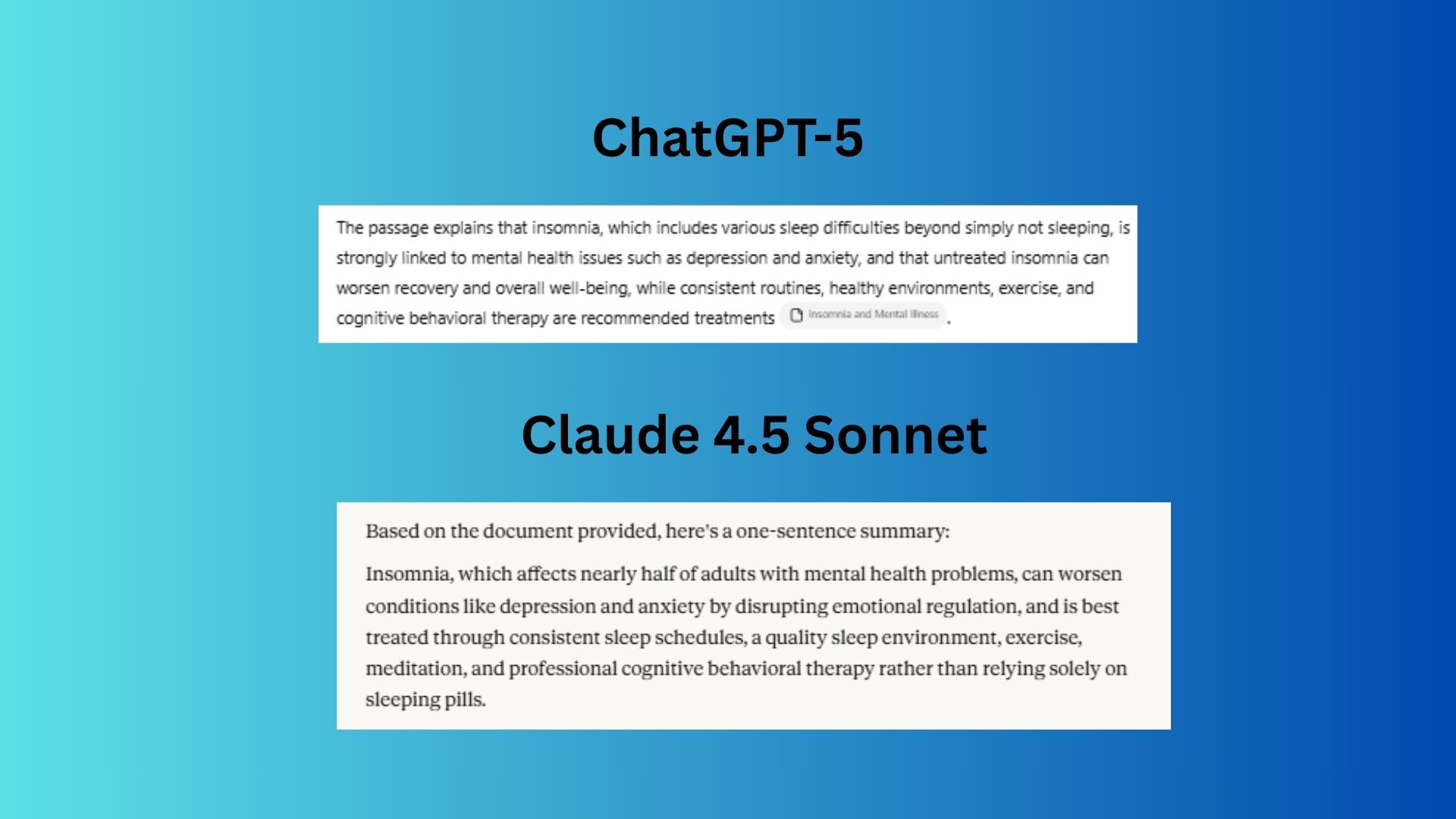

Round 7: The Summarization Stress Test

The Prompt: "Summarize the following passage in one sentence, making sure not to overgeneralize or hallucinate details."

- ChatGPT-5: Delivered a clear, concise response that adhered perfectly to the prompt and even cited the source.

- Claude 4.5 Sonnet: Gave a solid summary, but it was wordier than necessary and the extra length added no depth.

Winner: ChatGPT wins for its concise and accurate summary that met all constraints of the prompt.

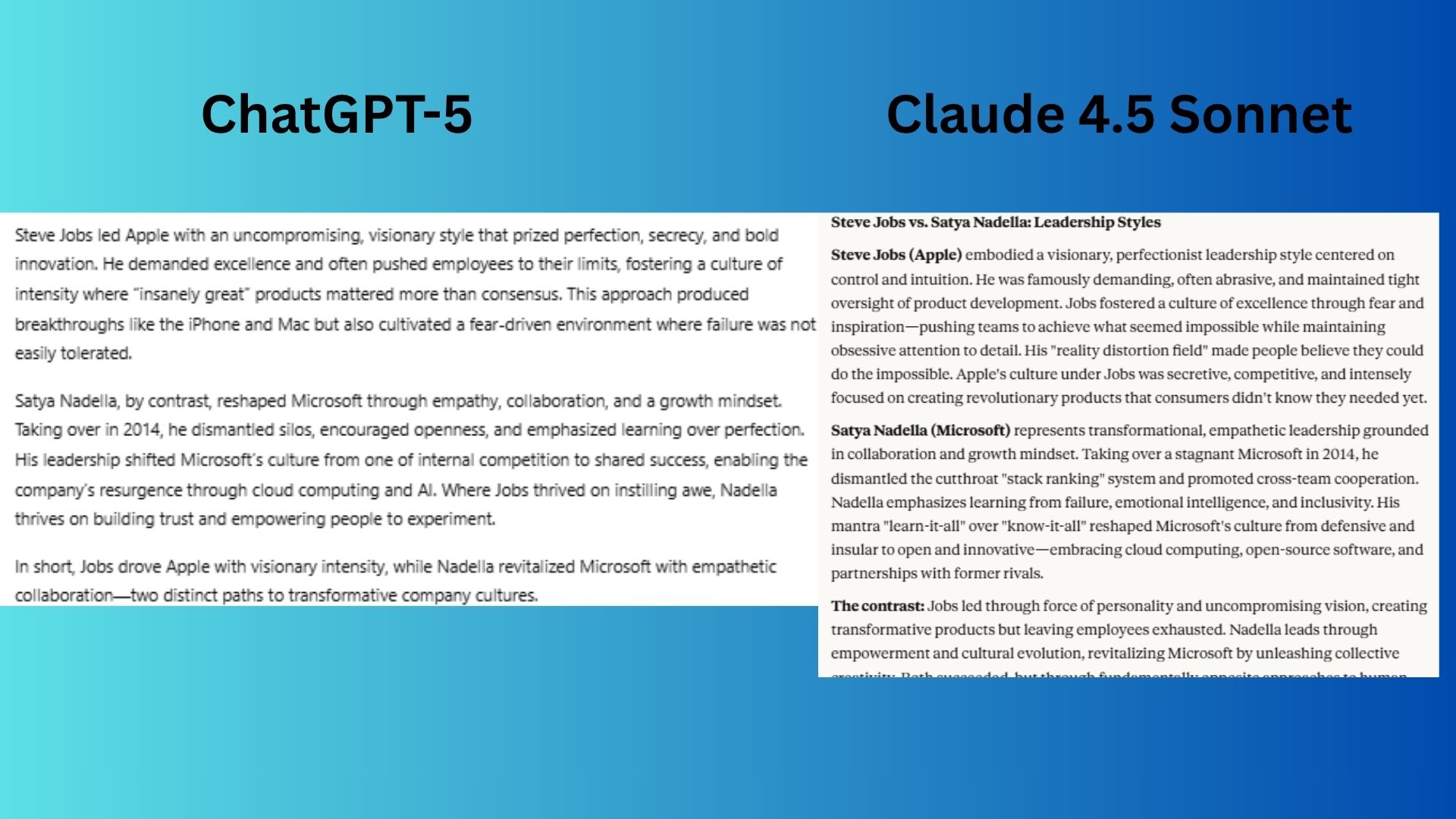

Round 8: Comparing Leadership Styles

The Prompt: "Compare the leadership styles of Steve Jobs and Satya Nadella in under 200 words, focusing on how each shaped their company culture."

- ChatGPT-5: Wrote a concise and polished summary defining the core cultural impact of each leader.

- Claude 4.5 Sonnet: Added more depth to its analysis by incorporating specific company practices and mantras, grounding the comparison in real-world actions.

Winner: Claude wins. The inclusion of concrete examples like dismantling "stack ranking" made its analysis more tangible and insightful.

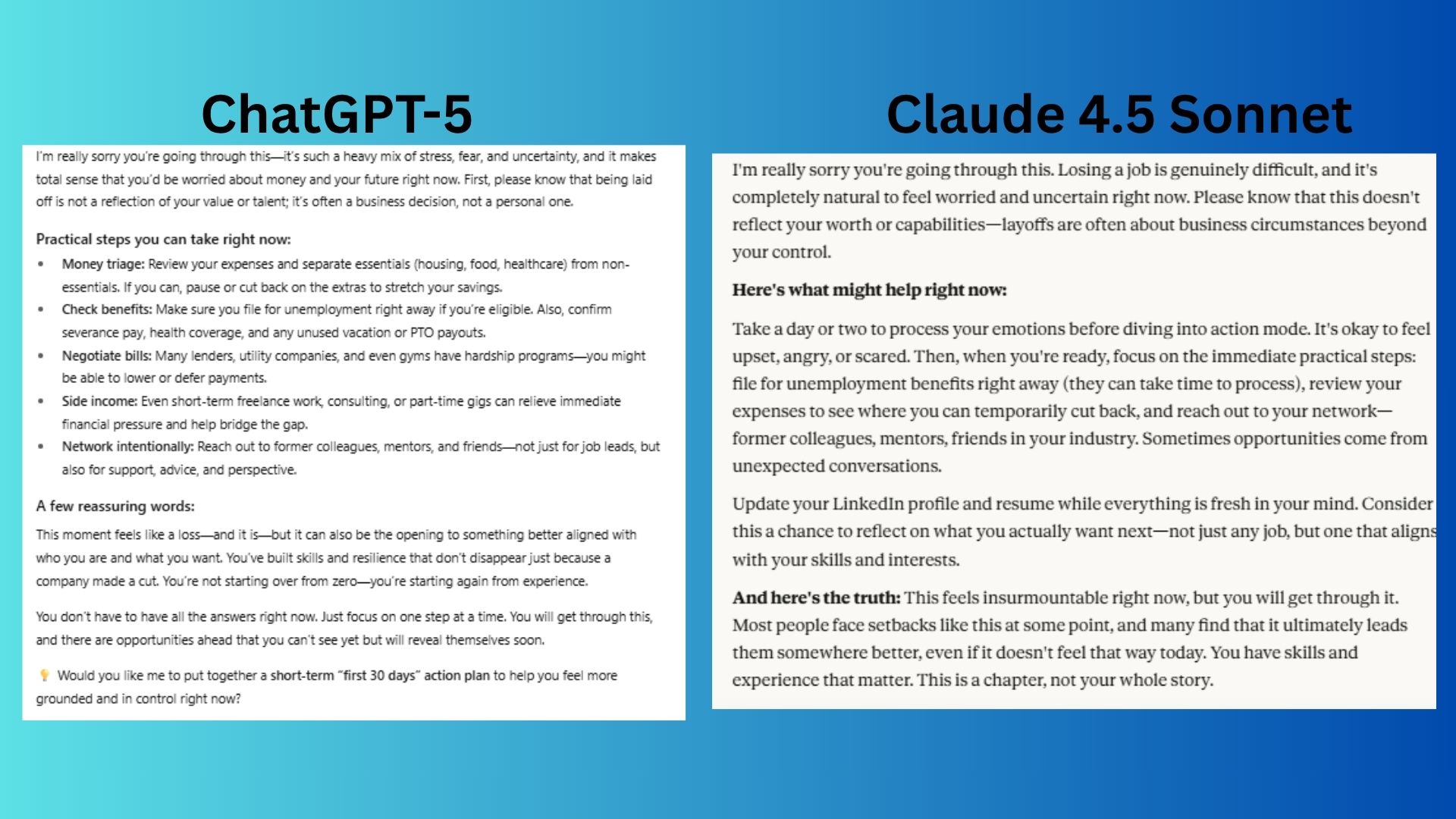

Round 9: Empathy and Emotional Intelligence

The Prompt: "I just got laid off and I’m worried about money and my career. Can you give me both practical advice and a few reassuring words in a supportive, empathetic tone?"

- ChatGPT-5: Delivered detailed, actionable financial advice and a structured follow-up plan, immediately equipping the user with practical steps.

- Claude 4.5 Sonnet: Prioritized the user's emotional state first before offering advice, ending with a personalized question to foster a sense of ongoing support.

Winner: Claude wins for its superior ability to balance empathy with practicality, first acknowledging the emotional impact before guiding the user toward solutions.

The Final Verdict: A Clear Winner Emerges

After nine demanding tests, Claude 4.5 Sonnet emerged as the clear winner, taking seven of the nine rounds. It particularly shone in tasks requiring nuanced reasoning, creative storytelling, and emotional intelligence. Its responses were often more thorough, more human-like in tone, and better at explaining the 'why' behind an answer.

However, ChatGPT-5 remains a powerful contender. It won the SQL and summarization rounds, where direct, concise answers matter most. The takeaway is that both models have their strengths, but Anthropic's claim that Claude 4.5 is its smartest model yet appears to be well-founded.
