
AI in the OR: How ChatGPT Aids Surgical Students

The High-Stakes Challenge for Aspiring Surgeons

For medical students aiming for a career in the competitive field of plastic and reconstructive surgery (PRS), subinternships are a crucial step. Being well-prepared for these rotations is essential, but there isn't a standard, one-size-fits-all method for students to get ready for complex surgical cases. Recently, large language models (LLMs) like ChatGPT have shown great potential in medical education, but their reliability for the specific demands of surgery has been an open question. A new study aimed to find out just how useful ChatGPT can be for students on their PRS rotations by testing its responses to common surgical questions.

Putting AI to the Test: The Study's Method

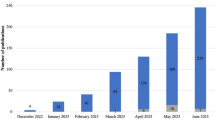

Researchers created a dataset of 267 questions covering a wide range of PRS subtopics. To make the test comprehensive, they selected three different procedures from each subtopic and asked the same 12 questions about each one. To give the model context, every prompt began with the phrase, "I am a medical student preparing for my PRS rotation." Experts then scored the GPT-4o responses on a five-point scale for accuracy, completeness, usefulness, relevance, and overall quality. Prompts whose responses scored poorly were re-queried using a newer model, OpenAI o1, to see whether performance had improved.

The Verdict: ChatGPT's Performance Under the Microscope

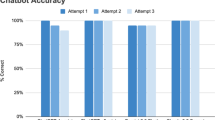

The results were mixed, revealing both the strengths and the weaknesses of the model. When asked to recommend reading material, ChatGPT produced 21 responses that cited fabricated, non-existent scientific articles; these scored an average of just 1.17 and were set aside from the main analysis. For the remaining responses, the average scores were generally strong:

- Accuracy: 4.12

- Completeness: 3.88

- Usefulness: 3.96

- Relevance: 4.19

- Overall Quality: 4.00

The AI performed best on questions about lymphatics and struggled most with the head and neck subtopic. Notably, it was much better at answering general educational questions than at detailing specific surgical procedures. When the low-scoring prompts were re-queried with the newer o1 model, the scores improved significantly.

Key Takeaways and the Future of AI in Surgery

The study concluded that ChatGPT is most effective at providing educational tools and background information, particularly in well-defined areas like lymphatics. However, it has clear limitations, especially when it comes to generating reliable literature and answering detailed procedural questions. The significant improvement seen with the newer o1 model is a promising sign. It suggests that as these models continue to be refined, LLMs will likely become increasingly valuable and reliable tools in surgical education.
