ChatGPT's Impact on Jordanian Medical School Learning

Unveiling ChatGPT's Role in Jordanian Medical Education

Background

ChatGPT shows great potential for changing medical education. It can help streamline research and make teaching better. However, not much is known about its use in Middle Eastern medical schools. This study looked at what factors affect ChatGPT adoption in Jordanian medical education. It used a modified version of the Unified Theory of Acceptance and Use of Technology (UTAUT) framework.

Methods

A cross-sectional survey was given to medical students and faculty at the University of Jordan. A validated 33-item questionnaire was shared online and on campus, targeting people who were familiar with ChatGPT. Structural equation modeling (SEM) was used to check the relationships between important factors. These included Performance Expectancy (PE), Effort Expectancy (EE), Perceived Risk (PR), Facilitating Conditions (FC), and Attitude (ATT).

Results

There were 127 participants. 53 percent were male, and the average age was 23.2 ± 7.6 years. Attitude (ATT) was significantly affected by PE and EE. These two factors explained 37 percent of the variation in attitude. Behavioral Intention (BI) was predicted by attitude and positively affected actual usage. Facilitating Conditions (FC) did not significantly affect EE or BI. This suggests users did not rely much on external support. Surprisingly, Perceived Risk (PR) did not negatively affect attitude. This means the tool's usefulness was more important than worries about wrong information or privacy. Overall, the model explained 53 percent of the variation in BI and 36.5 percent of the variation in actual usage.

Conclusion

The use of ChatGPT in Jordanian medical education is mainly driven by how useful and easy to use it is perceived to be. Attitudes are very important in this. To encourage more use of AI tools like ChatGPT in medical training, it is important to address risks of misinformation and build trust with specific strategies.

The Rise of AI in Medical Training

The introduction of ChatGPT has greatly advanced conversational AI. This flexible AI system can create content and code, summarize information, write creatively, and change writing styles. Trained on large multilingual datasets, it produces responses that sound like human conversation. The medical field is very interested in AI applications and is exploring their potential in clinical decision making, diagnosis, patient management, electronic health records, and medical education.

The use of AI in medical education has led to better learning methods, more accurate assessments, and support for clinical decisions. For example, ChatGPT 4 performed very well on the United States Medical Licensing Examination (USMLE). It scored 85.7 percent in Step 1, 92.5 percent in Step 2, and 86.9 percent in Step 3. This shows its potential as a helpful tool for medical students.

AI-powered tools also help in medical imaging training. They improve anatomical learning with advanced image segmentation, which greatly helps educational outcomes. Natural Language Processing (NLP) tools like ChatGPT have shown they can provide human-like language responses. However, there are still challenges with accuracy, how easy they are to understand, and ethical concerns.

ChatGPT: A Game Changer for Medical Students

ChatGPT can do many things. It can make literature reviews easier, analyze large medical datasets, overcome language barriers, and support personalized, problem-based education. Additionally, ChatGPT has potential in developing new drugs, improving patient-centered care in radiology and other areas, and acting as a reference for healthcare providers, such as deciding on imaging steps for breast cancer screening.

Despite its promise, there are significant limitations. These include generating incorrect responses, biases, and potential misinformation. These issues can reduce its effectiveness in education and clinical decisions. Furthermore, privacy concerns and trust issues are major barriers. These need careful management to ensure AI tools are used ethically.

Navigating the Challenges: Risks and Regional Gaps

In the Middle East, and especially in Jordan, AI adoption faces specific barriers: poor optimization for the Arabic language, resistance from faculty, and limited integration into current curricula. Despite these challenges, there is little research on the adoption of AI tools, including ChatGPT, in Jordanian medical education, which leaves a clear gap in knowledge.

To fill this gap, this study used a modified UTAUT framework. It investigated the barriers and facilitators influencing ChatGPT adoption in Jordanian medical education. The goal is to provide practical insights to help integrate it effectively into medical curricula.

How Was the Study Conducted? The UTAUT Framework

The UTAUT model was chosen as the most suitable framework for assessing factors that influence users' willingness to adopt ChatGPT in medical education. Developed by Venkatesh et al., this model combines eight major theoretical frameworks of technology acceptance, which makes it one of the most complete and widely validated models in the field. It was used in this study because it can capture the many aspects of ChatGPT adoption in educational settings and supports a full analysis of both drivers and barriers. The adaptation of the UTAUT model used here was proposed by Chatterjee and Bhattacharjee.

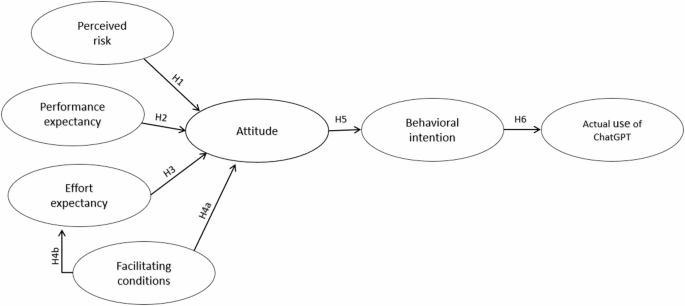

The UTAUT model is good at explaining user behavior towards technology by including key constructs (see Table 1 for constructs and hypotheses, Fig. 1 for the model diagram). It has been shown to explain up to 70 percent of the variance in Behavioral Intention and technology use, performing better than earlier models in predictive accuracy. For this study, Social Influence was excluded because it was not very relevant to medical students’ adoption of ChatGPT, based on findings from similar studies. Instead, the focus was on the other three constructs. Attitude (ATT) was included as a mediating factor, due to its important role in shaping Behavioral Intention in previous research.

Given the specific context of medical education, the quality of knowledge provided by ChatGPT was a key concern. Therefore, Perceived Risk (PR) was introduced as an external factor affecting user adoption. This is consistent with earlier studies that highlight the negative influence of perceived risks, such as security, privacy, and credibility concerns, on technology adoption.

To simplify the analysis and focus on direct effects, moderators like age, gender, experience, and voluntariness were excluded. This was based on the assumption that these factors have little impact on medical students’ attitudes toward adopting ChatGPT. The resulting modified UTAUT model suggests that PR, PE, and EE influence BI through ATT. Facilitating Conditions were hypothesized to have both a direct effect on BI and an indirect effect on EE.
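The hypothesized structure can be written down as a small directed graph. The sketch below is illustrative only: the edge list follows the paths described in this article (including the link from BI to actual use, abbreviated ACME in the results), while the variable and function names are assumptions made for this example.

```python
# Hypothesized paths of the modified UTAUT model, as described in the text.
# Names are illustrative; the abbreviations follow the article.
HYPOTHESIZED_PATHS = [
    ("PE", "ATT"),
    ("EE", "ATT"),
    ("PR", "ATT"),
    ("FC", "EE"),
    ("FC", "BI"),
    ("ATT", "BI"),
    ("BI", "ACME"),
]


def routes(source, target, paths=HYPOTHESIZED_PATHS):
    """Return every directed route from source to target as a list of node lists."""
    if source == target:
        return [[target]]
    found = []
    for start, end in paths:
        if start == source:
            for tail in routes(end, target, paths):
                found.append([source] + tail)
    return found


# Show how each predictor is assumed to reach actual use.
for construct in ("PE", "EE", "PR", "FC"):
    for route in routes(construct, "ACME"):
        print(" -> ".join(route))
```

Listing the routes makes the mediating role of ATT visible: PE, EE, and PR reach actual use only through ATT and BI, whereas FC has one route through BI directly and one through EE.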

Who Participated? Study Sample and Data Collection

This cross-sectional study used a validated 33-item questionnaire, originally developed and published in a previous study. It was distributed via email and QR codes on campus to reduce sampling bias. The study targeted medical students and professors at the University of Jordan and its affiliated hospital. Undergraduate medical students from all years (first to sixth) and professors of various specialties participated.

We received Institutional Review Board (IRB) approval from the University of Jordan Medical College. Data were collected from April 8 to June 3, resulting in 441 responses. To focus on participants familiar with ChatGPT, we excluded 300 participants who had not used ChatGPT and 14 participants who did not consent.

Analyzing the Data: Statistical Approach

Structural Equation Modeling (SEM) was performed using SPSS AMOS version 26 to assess relationships among latent variables and validate the model’s structure. SEM was chosen for its ability to verify theoretical models and test relationships between constructs. Path coefficients in the SEM model illustrated the strength and direction of the relationships between constructs. The model’s explanatory power was evaluated using R squared values, which indicate the proportion of variance in each dependent variable explained by its independent variables. Facilitating Conditions (FC) were measured to assess the extent of ChatGPT adoption among organizations.

Demographic data were analyzed using SPSS version 26. Continuous variables (e.g., age) were summarized using mean ± standard deviation, while categorical variables (e.g., gender) were reported as frequencies and percentages.

What Did the Study Find? Key Results

A total of 442 individuals agreed to participate in the study. Of these, 127 respondents (28.73 percent) had prior experience using ChatGPT and were included in the analysis. The group included medical students and teaching staff. The participants' ages ranged from 18 to 58 years, with an average of 23.2 years (SD = 7.6). The gender distribution was nearly balanced, with 67 males (53 percent) and 60 females (47 percent).
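The percentages in this paragraph follow directly from the reported counts. A quick check, using only the figures stated above (variable names are chosen for this example):

```python
# Counts as reported in the article.
agreed_to_participate = 442
included = 127
males, females = 67, 60

# The gender counts should cover the whole analyzed sample.
assert males + females == included

inclusion_rate = 100 * included / agreed_to_participate
male_share = 100 * males / included
female_share = 100 * females / included

print(f"included in analysis: {inclusion_rate:.2f} percent")
print(f"male: {male_share:.0f} percent, female: {female_share:.0f} percent")
```

Rounded as in the text, this gives 28.73 percent included, with 53 percent male and 47 percent female.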

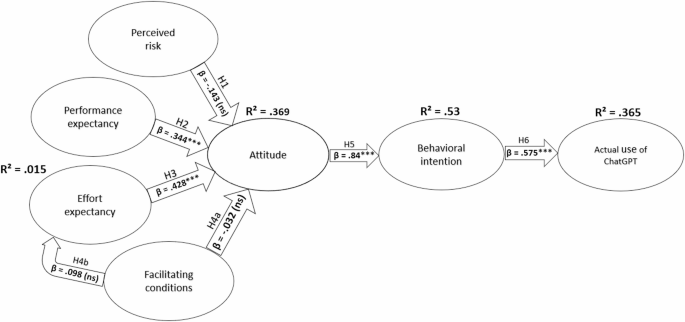

Structural equation modeling was used to test seven hypotheses about the technology acceptance model, as shown in Fig. 2 and summarized in Table 3. Performance Expectancy (PE) and Effort Expectancy (EE) both had significant positive effects on Attitude (ATT) (beta = 0.344, p < .001; beta = 0.428, p < .001, respectively), accounting for 37 percent of the variance in ATT. Facilitating Conditions (FC) did not have a significant impact on EE (beta = -0.032, not significant) or on Behavioral Intention (BI) (beta = -0.098, not significant). Attitude (ATT) significantly influenced BI, showing a strong positive relationship (beta = 0.84, p < .001). The path from BI to Actual Use of ChatGPT (ACME) was also significant (beta = 0.575, p < .001). Overall, the model explained 53 percent of the variance in BI and 36.5 percent of the variance in ACME.
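For readers who prefer equations, the reported standardized estimates can be arranged as a set of structural equations. This is a notational sketch rather than the authors' own presentation: the disturbance terms (zeta) are standard SEM notation added here, and the coefficient for Perceived Risk is left symbolic because this article reports only that it was not significant.

```latex
\begin{aligned}
\mathrm{EE}   &= -0.032\,\mathrm{FC} + \zeta_{1} \\
\mathrm{ATT}  &= 0.344\,\mathrm{PE} + 0.428\,\mathrm{EE} + \beta_{\mathrm{PR}}\,\mathrm{PR} + \zeta_{2} \\
\mathrm{BI}   &= 0.84\,\mathrm{ATT} - 0.098\,\mathrm{FC} + \zeta_{3} \\
\mathrm{ACME} &= 0.575\,\mathrm{BI} + \zeta_{4}
\end{aligned}
```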

The results confirm that user attitudes significantly affect their intentions and actual usage of ChatGPT in the medical educational context. However, FCs did not significantly influence EE or BI. This suggests that other factors not captured by the current model might play a role. This insight could be valuable for educational technology developers focusing on maximizing the usability and effectiveness of AI tools in medical education.

Understanding the Findings: Performance and Effort Matter

ChatGPT’s adoption in medical education is shaped by many factors that influence students' and educators' willingness to use AI tools in their learning. While its potential benefits are well known, how it's adopted varies based on context, methods, and cultural factors. This requires a deeper look to understand the dynamics.

Performance Expectancy (PE) and Effort Expectancy (EE) are key to adopting ChatGPT and other technologies in medical education. Our findings confirm this. PE and EE were significantly linked with Attitude (ATT) toward ChatGPT, explaining 37 percent of the variance. This matches studies that show PE (or perceived usefulness) is a critical driver of adoption when users believe technology improves learning outcomes and efficiency.

Among Moroccan nursing students, PE predicted Information and Communication Technology (ICT) and social media use in education, with EE as a moderating factor. Similarly, ChatGPT’s ease of use likely encouraged positive attitudes. This reinforces the importance of user-friendly interfaces in AI-assisted diagnostic tools. However, the impact of PE and EE varies across educational settings. A study on mobile app adoption among medical students found that EE indirectly affected usage, while PE directly influenced behavioral intention.

The Surprising Role of Perceived Risk

Research on UTAUT in educational technology has found reasons for insignificant relationships between model constructs. For example, 40 percent of respondents in a blockchain adoption study said that EE, facilitating conditions, and hedonic motivation did not influence adoption. Similarly, a study on Google Classroom found that 35 percent of students reported that social influence, EE, and self-efficacy had no impact on their decision to use the platform. Methodological limits, like overlooking nonlinear relationships, may contribute to these differences. A meta-analysis of 40 studies found that linear models explained only 60 percent of technology adoption variance, compared to 75 percent when nonlinear relationships were considered. These variations likely reflect contextual factors like technological familiarity and cultural differences, highlighting the need for more research in medical education. In our study, the significant role of both PE and EE suggests that ChatGPT’s perceived utility and ease of use match Jordanian medical students’ needs, supporting broader adoption.

Perceived Risk (PR), which was expected to negatively affect attitudes, was not significant in our study. This is surprising, as PR is often a key barrier to technology adoption, especially in healthcare education. Trust, security, and user familiarity shape risk perception, with higher PR reducing adoption. One study found that while 60 percent of students saw AI tools as beneficial for assessments, 40 percent had concerns about data privacy and dependency. Another study reported that 90 percent of students were familiar with AI tools, yet 35 percent lacked clarity on institutional AI policies, leading to uncertainty about proper use. Additionally, overreliance on AI tools negatively affected problem-solving skills in 45 percent of students, while moderate use improved academic performance by 30 percent. Addressing PR is crucial, as digital health literacy and user-centric designs can reduce worries and improve adoption.

Facilitating Conditions: Not a Major Factor

Facilitating Conditions (FC) were not significantly associated with Behavioral Intention (BI) or Effort Expectancy (EE) in our study. This is unlike other contexts where FCs strongly influence technology adoption. Research on mobile health applications, Moodle adoption, and mobile banking highlights the critical role of technical support, system compatibility, and resource availability in driving adoption. However, in augmented reality (AR) adoption for primary education, FCs have an insignificant impact on BI. This is likely due to the newness of the technology and lack of supporting policies or training.

The lack of significance in our study may reflect a cultural or contextual difference, where students and educators rely less on external support due to their familiarity with self-directed learning tools. However, ensuring accessible infrastructure and training remains essential for maximizing ChatGPT's potential in medical education.

Intention to Use and Actual Use: A Clear Link

Behavioral Intention (BI) and its relationship with Actual Use (ACME) showed a clear pathway in our findings, which is consistent with UTAUT. Attitude (ATT) significantly influenced BI, which, in turn, predicted ACME, explaining 53 percent and 36.5 percent of the variance, respectively. These results align with broader UTAUT research, where ATT is a strong predictor of BI, particularly when technologies are perceived as useful and easy to use.
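One way to read this chain is through the product-of-coefficients rule from path analysis, in which the effect implied along a route is the product of the standardized coefficients on it. The sketch below applies that rule to the coefficients reported in the results. The resulting products are illustrative arithmetic, not figures reported by the study, and they carry no significance test; the dictionary and function names are assumptions made for this example.

```python
# Standardized path coefficients as reported in the article's results.
PATHS = {
    ("PE", "ATT"): 0.344,
    ("EE", "ATT"): 0.428,
    ("ATT", "BI"): 0.84,
    ("BI", "ACME"): 0.575,
}


def chained_effect(*nodes):
    """Multiply the coefficients along a chain of constructs."""
    effect = 1.0
    for start, end in zip(nodes, nodes[1:]):
        effect *= PATHS[(start, end)]
    return effect


for predictor in ("PE", "EE"):
    on_bi = chained_effect(predictor, "ATT", "BI")
    on_use = chained_effect(predictor, "ATT", "BI", "ACME")
    print(f"{predictor}: implied effect on BI {on_bi:.3f}, on ACME {on_use:.3f}")
```

Under this rule, the implied effects shrink with each additional link, which is why attitude, sitting closest to BI, carries so much of the model's weight.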

For ChatGPT, fostering positive attitudes by addressing usability concerns and demonstrating practical benefits can enhance its adoption.

Cultural Context and Regional Differences

Cultural factors that shape technology acceptance in the Middle East, such as hierarchical decision-making, communal learning, and gender norms, may influence adoption behavior. Barriers like limited access to resources and institutional biases, particularly for women in STEM, highlight the need for equitable and culturally sensitive implementation strategies. The COVID-19 pandemic further worsened digital literacy gaps, emphasizing the need for targeted training and support to build trust in technologies like ChatGPT.

Comparative studies show regional differences in ChatGPT adoption. Underdeveloped regions face infrastructural challenges, whereas developed nations focus on ethical frameworks and personalized learning. In Jordan, addressing foundational barriers while fostering technological acceptance is key to successful integration.

Misinformation and the Path Forward

ChatGPT offers transformative opportunities in medical education but also raises concerns, particularly about misinformation. The risk of generating inaccurate or biased content poses challenges for critical assignments and research integrity. These issues are worsened by a lack of training in ethical AI use, as seen in studies that highlight the risks of fabricated data and images.

Integrating AI literacy into curricula is essential for ensuring responsible use. Research emphasizes the need for critical evaluation skills to identify credible information and biases. Paired with the professional development of educators, these initiatives can promote ethical and effective ChatGPT use in medical education.

Our study highlights ChatGPT's potential in medical education, if supported by tailored strategies. Addressing usability challenges, misinformation risks, and cultural apprehensions, while enhancing trust and digital literacy, can ensure successful integration. With institutional support, ChatGPT can revolutionize medical education and offer personalized learning, improved diagnostic training, and innovative teaching methods.

Study Strengths and Limitations

This study is the first to examine ChatGPT adoption in Jordanian medical education, filling a critical gap in Middle Eastern research. Using the UTAUT framework, it identifies key factors influencing adoption among medical students and educators, reinforcing the framework's relevance in understanding technology acceptance in medical education.

The study also has several limitations. Reliance on self-reported data introduces potential response bias, and the focus on a single institution limits generalizability. A larger sample would enhance statistical power, and an international study across Middle Eastern countries is recommended to validate and extend these findings. This would help better understand regional adoption dynamics, cultural and infrastructural differences, and broader applications of ChatGPT in medical education. Future tests could ensure the validity and reliability of findings, and a multicollinearity test could address potential issues among independent variables. Additionally, this study does not explore the physiological dimension of ChatGPT adoption.

Policymakers and institutions should consider developing training programs to improve digital literacy among educators and students. They should also establish clear guidelines addressing ethical concerns and misinformation risks to support ChatGPT integration into medical education.

While this study focuses on Jordanian education, its findings and methodology have significant global implications, showing UTAUT as a flexible model applicable across regions and cultures. It serves as a reference for AI adoption in medical education within cultural and global discussions and lays the groundwork for future studies, emphasizing the need for strategies to enhance usability and trust.

The Future of ChatGPT in Jordanian Medical Education

ChatGPT presents transformative opportunities for medical education in Jordan by enhancing personalized learning and streamlining research. Our findings highlight the central role of attitudes, influenced by performance and effort expectancies, in driving adoption among medical students and educators. While perceived risk and facilitating conditions showed minimal impact, trust-building measures and efforts to address misinformation remain essential for sustained integration. If institutions foster positive attitudes and ensure ethical use, ChatGPT can become a cornerstone of medical education, providing innovative, student-centered learning experiences.

Data Availability

Data are provided within the manuscript or supplementary information files.
