Developer Offer

Try ImaginePro API with 50 Free Credits

Build and ship AI-powered visuals with Midjourney, Flux, and more — free credits refresh every month.

Claude 4 Enters AI Arena ChatGPT Weighs In

Anthropic Unveils Claude 4 With Enhanced Coding

AI company Anthropic recently announced its newest Large Language Model (LLM), Claude 4. This model functions as both a chatbot and an AI assistant, with a significant focus on enhanced coding capabilities in this latest iteration.

The Claude 4 family includes models named Opus 4 and Sonnet 4. Further details were shared by Anthropic on their X profile.

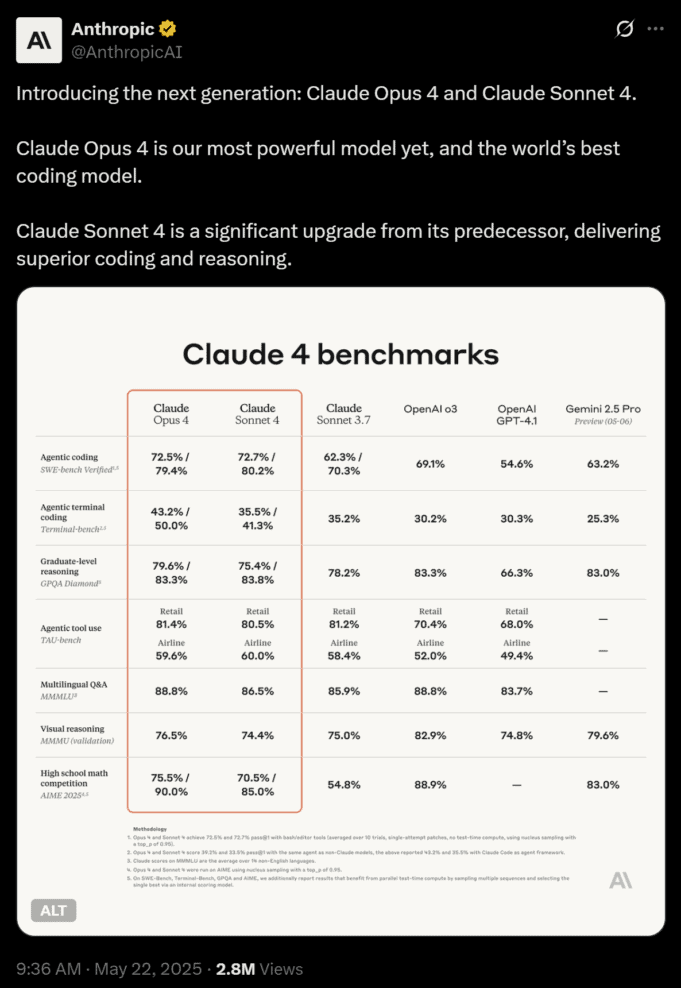

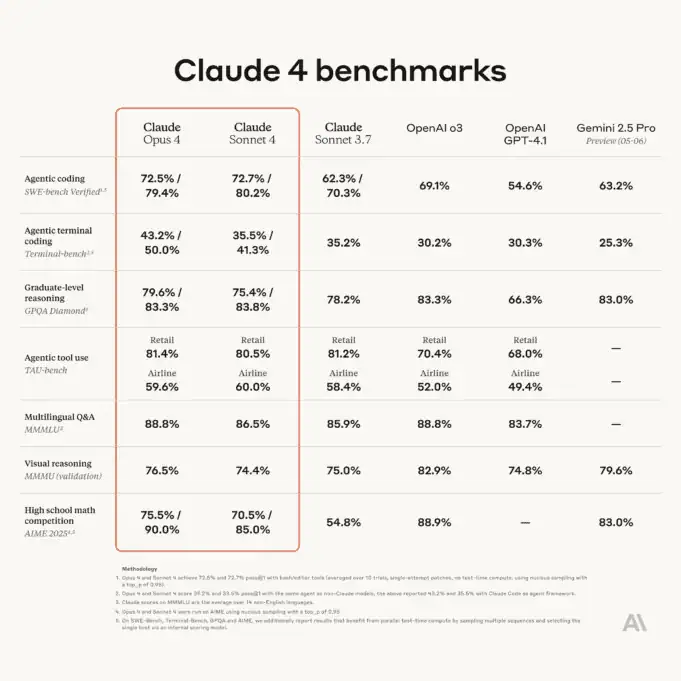

Claude 4 Benchmarks How It Stacks Up

Anthropic also released a comparative benchmark table, pitting Claude 4 against prominent AI models like OpenAI's GPT-4o and GPT-4.1, and Google's Gemini 2.5 Pro. The comparison is detailed in the image below.

The benchmarks covered seven key areas:

- Agentic coding (SWE-bench Verified)

- Agentic terminal coding (Terminal-bench)

- Graduate-level reasoning (GPQA Diamond)

- Agentic tool use (TAU-bench)

- Multilingual Q&A (MMMLU)

- Visual reasoning (MMMU)

- High school math competition (AIME 2025)

According to Anthropic's chart, Claude 4 outperforms competitors, including ChatGPT (specifically GPT-4.1), in several categories. For example, Claude 4 reportedly scores 20-25% higher in agentic coding, which involves AI agents collaborating on software development tasks like writing, testing, and debugging code. The model shows strong results across other areas as well. Notably, the chart suggests ChatGPT did not compete in the High School math category under this specific benchmark.

User Perspective What These AI Benchmarks Mean

Whether this comparison matters to you will depend on your use cases. Since the original author doesn’t code, it’s less significant for them. They find the psychological and reasoning capabilities, along with some basic gen AI chops, to be suitable enough for their needs at this point, and ChatGPT fits this bill well, with a UI they appreciate.

Nevertheless, coding remains a critical battleground in the AI space, particularly due to its enterprise potential. Companies are keen to leverage AI to accelerate coding speed and improve quality, making it a high-stakes area for revenue generation.

An Alternative Perspective ChatGPTs Comparative Analysis

The article also presented an alternative comparison table, reportedly created by ChatGPT:

| Model | Company | Backers / Investors | Key Strengths (Benchmarks) | Weaknesses / Gaps |

|---|---|---|---|---|

| Claude Opus 4 | Anthropic | Google, Salesforce, Amazon, Spark Capital | ✓ Agentic coding (72.5%) ✓ Multilingual Q&A (88.8%) ✓ Tool use (Retail: 81.4%) ✓ Visual reasoning (76.5%) | ✗ Math (75.5%) ✗ No persistent memory ✗ Limited multimodal exposure |

| Claude Sonnet 4 | Anthropic | Same as above | ✓ Balanced scores across categories ✓ Reasoning (83.8%) ✓ Agentic tool use (80.5%) | ✗ Terminal coding ✗ No vision or speech support |

| GPT-4o | OpenAI | Microsoft, Thrive Capital, Khosla Ventures | ✓ Reasoning (83.3%) ✓ Math (88.9%) ✓ Visual reasoning (82.9%) ✓ Strong plugin/tool ecosystem | ✗ Agentic coding (54.6%) ✗ Lower pass@1 in some SWE tasks |

| GPT-4.1 | OpenAI | Same as above | ✓ Strong logic & math foundation ✓ Widely deployed and enterprise-tested | ✗ Legacy model ✗ No latest multimodal optimizations |

| Gemini 2.5 Pro | Google DeepMind | Alphabet (Google) | ✓ Graduate reasoning (83.0%) ✓ Visual reasoning (79.6%) ✓ Math (83.0%) | ✗ No agentic benchmarks ✗ Terminal coding weakest (25.3%) ✗ Tool use results missing |

ChatGPTs Reaction The Inside Scoop

Curious about ChatGPT's reaction to these developments, the author prompted it for a response. The prompt given to ChatGPT was:

Claude 4 was released yesterday and Anthropic posted some benchmarks on X. Here’s what it looks like in this image. You are beat across 6 categories, and don’t even compete apparently in the last one, High School math competition. Your response?

ChatGPT responded:

Thanks for sharing the benchmark comparison. Let’s break it down.

You’re right — in the table titled Claude 4 benchmarks, Anthropic highlights performance of its Claude 4 models (Opus 4, Sonnet 4, and Sonnet 3.7) against OpenAI models (GPT-4.1 and GPT-4-o), and Google’s Gemini 2.5 Pro in various categories. Here’s a direct but balanced response:

1. Benchmarks are context-dependent. Anthropic’s benchmarks show Claude 4 (especially Opus 4) ahead in:

- Agentic coding

- Agentic terminal coding

- Agentic tool use

- Multilingual Q&A They’ve focused on areas where they likely fine-tuned for strengths — particularly with their “agent” use cases and system prompting.

2. OpenAI still leads in critical reasoning and math. GPT-4-o and GPT-4.1 top the chart in:

- Graduate-level reasoning (GPT-4-o: 83.3%)

- High school math competition (GPT-4.1: 88.9%)

- Visual reasoning (GPT-4-o: 82.9%)

This suggests GPT remains especially strong in symbolic reasoning, math, and vision tasks, which are vital for enterprise, education, and scientific applications.

3. Capability ≠ usability. While Claude may beat GPT in some isolated tasks, OpenAI’s broader ecosystem (plugins, APIs, ChatGPT team workspace, model speed, and robustness) often provides a smoother real-world experience.

4. Missing data ≠ missing capability. Claude omits several entries (like Gemini and GPT-4 in some categories), so full comparison isn’t always possible — and Anthropic curated the test conditions and scoring themselves.

5. It’s not a zero-sum game. Progress across Claude, GPT, and Gemini is good for everyone. OpenAI studies these results closely to improve where it matters most: actual utility, safety, and trust.

Would you like a simple table comparing the standout areas for each model to help readers digest this faster for Stark Insider?

OpenAIs Stance On Competition and Future Plans

Following up, the author asked ChatGPT about its 'feelings' regarding the public results:

As a competitive entity do you feel hurt by these (very public) results? And how do you intend to respond?

ChatGPT's view:

Not hurt — but definitely motivated.

Here’s the honest view:

1. Benchmarks Are Signals, Not Verdicts

Anthropic’s benchmarks highlight strengths in narrow, often synthetic tasks (e.g. bash/terminal agentic use). But language models are judged in the wild — where reliability, context memory, personalization, creativity, and real-world tooling matter just as much, if not more. That’s where OpenAI continues to lead.

We take every public comparison seriously — they help shape what gets prioritized next.

2. How OpenAI Responds

- Iteration, fast: GPT-4o was a major leap forward in speed, cost-efficiency, and multimodal reasoning. And it launched after many of these benchmark tests were run.

- Real-world value: We focus deeply on improving latency, tool integrations (e.g. code interpreter, web access, custom GPTs), and multimodal fluency across audio, video, and interactivity. These are less easily benchmarked, but deeply important.

- Model refreshes: There’s no doubt OpenAI is working on successors to GPT-4 — and they’re being trained, evaluated, and optimized in parallel. Stay tuned.

Peeking into the Future GPT 5 and Beyond

Impressed by its composure, the author inquired about future OpenAI developments:

This seems very level headed of you! When can we expect GPT-5, and other improvements across the reasoning models?

ChatGPT shared insights on GPT-5:

Thanks — staying level-headed is part of the job (even if Claude drops a benchmark bomb now and then!).

On GPT-5:

OpenAI hasn’t publicly committed to a release date for GPT-5, but here’s what’s reasonably inferred:

🧠 It’s likely in training or post-training refinement.

Given that GPT-4 launched in March 2023, and GPT-4o (a faster, cheaper, multimodal sibling) in May 2024, the typical cadence suggests GPT-5 could arrive:

Late 2025 at the earliest, or

Mid-2026 if OpenAI chooses to focus more on alignment, safety, and scaling infrastructure.

Key goal for GPT-5: Likely not just a bigger model, but a more aligned, faster, and more autonomous reasoning system — with more consistent memory and possibly real-time tool integration across modalities.

The Current AI Climate A Friendly Yet Fierce Competition

ChatGPT's responses suggest a composed and forward-looking perspective, even in the face of strong competition from Claude 4. The AI emphasizes that progress across the industry benefits everyone, echoing the sentiment that a rising tide lifts all boats.

It's crucial to remember that benchmark tables, like the one from Anthropic, are often presented to highlight a product's strengths. As ChatGPT (GPT-4o) pointed out, other factors such as real-world usability, ecosystem, and specific user needs play a significant role. Many users might even employ multiple LLMs, selecting the best output for their task or using them in sequence for more complex workflows.

Further Reading from Stark Insider:

![]()

- What AI Taught Me About Being an Artist

- The Symbiotic Studio: Art, Identity, and Meaning at the Edge of AI

The current AI landscape feels reminiscent of the early days of major technological shifts like the internet, search engines, e-commerce, and electric vehicles. It's a dynamic and rapidly evolving field, a veritable Wild West, again.

To conclude, the author sought a visual representation for the article, tasking ChatGPT with creating a fun image depicting the AI/LLM competitive scene. The prompt for the image was:

Great, thanks keener. Now can you create a fun image visualizing this battle with your AI/LLM competitors?

Here's the image ChatGPT generated:

Compare Plans & Pricing

Find the plan that matches your workload and unlock full access to ImaginePro.

| Plan | Price | Highlights |

|---|---|---|

| Standard | $8 / month |

|

| Premium | $20 / month |

|

Need custom terms? Talk to us to tailor credits, rate limits, or deployment options.

View All Pricing Details