AI Image Generation Boosts Maxillary Lesion Diagnosis

Introduction

The development of AI-based diagnostic tools in medicine, especially for multi-lesion analysis, is often hampered by challenges in acquiring and annotating medical data. These issues include high costs and class imbalance in lesion datasets, where certain conditions are far less common than others. For instance, in oral implantology, maxillary sinus lesions (MSL), which are crucial for determining eligibility for sinus lift surgery, represent a minority of cases. Mucosal thickening and polypoid lesions, specific types of MSL, were found in only 20.2% and 9.17% of cases, respectively, in a database of 2000 maxillary sinus images. This scarcity limits the development of AI diagnostics tailored to these specific lesions, highlighting the need for effective methods to address medical data limitations.

Medical imaging data presents unique characteristics that affect data optimization strategies and AI tool performance. Accurate lesion labeling is vital for AI reliability, ensuring images reflect clinically relevant pathological features. Furthermore, the same lesion type can vary in appearance (e.g., size and location of polypoid lesions), requiring AI models to learn diverse features for better generalization. The coexistence of multiple lesion types also demands integrated evaluation for accurate medical decisions, such as dentists analyzing concurrent MSLs to plan sinus elevation surgeries. Beyond continuously collecting and labeling real data, finding alternative ways to obtain realistic, diverse, and multi-type medical images is crucial.

Researchers often use data augmentation (DA) to expand training datasets. Traditional DA methods, like geometric transformations, cannot create new image features beyond the original dataset, limiting their utility. This has spurred the development of generative adversarial networks (GANs) to produce lesion-specific images, optimize dataset balance, and enhance AI diagnosis for less common lesion categories. Previous studies have used GANs to generate images of liver lesions, thyroid conditions, brain tumors, skin lesions, and colon polyps. However, these approaches often require training separate GANs for each lesion type, and controlling the quality of automatically generated images remains a challenge.

The StyleGAN series, an advancement over traditional GANs, uses a mapping network to encode data features into latent space vectors. The GANSpace algorithm further allows for dimensionality reduction and feature disentanglement, enabling linear manipulation of image attributes. This human-in-the-loop approach offers controllability in GAN-based image generation. It allows flexible synthesis of realistic, diverse images without predefined class constraints and eliminates the need for per-class model training, addressing key limitations of traditional GANs.

This study proposes a StyleGAN2-based framework to optimize dataset distribution and improve AI-based diagnosis of specific lesions, using MSL image generation as an example. Unlike traditional GANs, this research introduces feature vector disentanglement and expert-controlled adjustments. This enables the generation of mucosal thickening and polypoid lesion images with regulated anatomical realism, morphological diversity, and pathological subtypes. These generated images were validated by high structural similarity indices (SSIM >0.996), low maximum mean discrepancy values (MMD <0.032), and clinician-assigned clinical scores. Incorporating synthetic images into diagnostic model training significantly improved performance for both lesion types across internal and external datasets. This work, an early exploration of controllable image generation in dental medicine, offers a practical solution to data scarcity and advances diagnostic support, especially in resource-constrained settings, ultimately aiming to enhance patient outcomes.

Methods

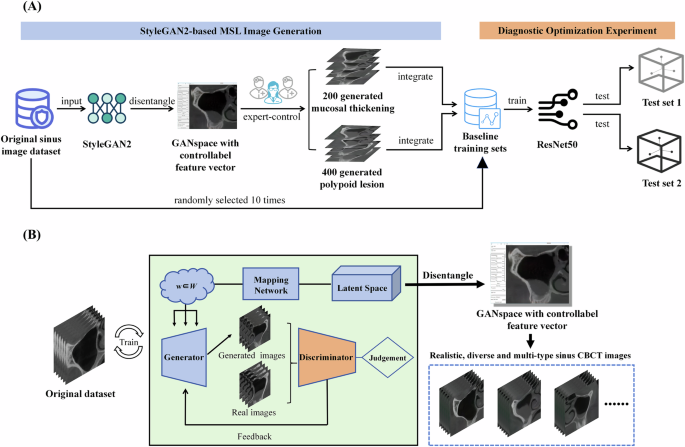

The study design flowchart is illustrated in Fig. 1A.

Dataset development

This study followed the Declaration of Helsinki and was approved by the Ethics Committee of the Sun Yat-sen University Affiliated Hospital (KQEC-2020-29-06). Informed consent for imaging data use was obtained from all participants. Previously, 2000 coronal images of the maxillary sinus at first molar sites had been collected from CBCT scans of 1000 patients. A YOLOv5 object detection network was used to crop maxillary sinus regions and standardize MSL annotation. These 2000 images served as training data for StyleGAN2 and ReACGAN development.

Of these images, mucosal thickening constituted 20.2%, polypoid lesions 9.17%, and nasal obstruction 60.45%. The study focused on optimizing diagnosis for the first two lesion types due to data distribution issues. For reliability, a stochastic sampling strategy was used: ten baseline training sets, each with 400 images, were randomly sampled from the original dataset (excluding internal test sets). Lesion proportions matched the original dataset, guiding generation quantity. Two independent static internal test sets (100 images each) were created: Internal Test Set 1 (50 mucosal thickening, 50 normal) and Internal Test Set 2 (50 polypoid lesions, 50 normal). These were used to compare ResNet50 performance before and after integrating generated MSL images.
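The sampling step can be sketched as follows. This is a minimal illustration rather than the authors' code: the function name `sample_baseline_sets`, the label strings, and the toy pool are hypothetical. Only the number of sets (10), the set size (400), and the rule that lesion proportions follow the source pool come from the text.

```python
import random
from collections import Counter, defaultdict

def sample_baseline_sets(labels, n_sets=10, set_size=400, seed=0):
    """Draw n_sets stratified random samples of image indices.

    labels: one class label per image left in the pool after the
    internal test sets were held out. Each sample keeps the class
    proportions of the pool, rounded per class, so the final size can
    differ from set_size by a few images when proportions do not
    divide evenly.
    """
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    total = len(labels)
    baseline_sets = []
    for _ in range(n_sets):
        chosen = []
        for indices in by_class.values():
            quota = round(set_size * len(indices) / total)
            chosen.extend(rng.sample(indices, quota))  # no repeats within a set
        baseline_sets.append(chosen)
    return baseline_sets

# Hypothetical pool with round proportions (20% / 10% / 70%), not the study data.
pool = ["mucosal_thickening"] * 360 + ["polypoid"] * 180 + ["other"] * 1260
baseline_sets = sample_baseline_sets(pool)
print(Counter(pool[i] for i in baseline_sets[0]))
```

Sampling each class separately is what keeps the minority lesion classes from being under-drawn by chance in any single baseline set.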

For generalizability testing, external test sets were employed under ethical approval. CBCT images from 260 patients at Guangzhou Chunzhihua Dental Clinic were collected. Using identical image processing, 520 annotated maxillary sinus images were obtained. Two external test sets (100 images each) were randomly created: External Test Set 1 (50 mucosal thickening, 50 normal) and External Test Set 2 (50 polypoid lesions, 50 normal).

Construction and image generation of StyleGAN2 and ReACGAN

GANs comprise a generator and a discriminator, relying on a latent space of randomly distributed latent vectors. During unsupervised GAN training, the generator produces outputs from random noise, and the discriminator assesses their realism. Through adversarial training, the generator refines synthetic images to mimic real data. In StyleGAN2, the mapping network discovers relationships between training data features and latent space vectors (feature disentanglement).

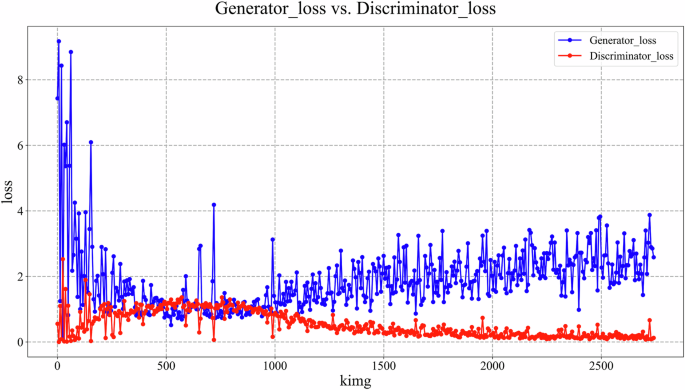

During StyleGAN2 training, input vectors passed through an 8-layer fully connected mapping network to produce intermediate latent vectors. These vectors modulated the convolutional kernels at multiple scales, thereby controlling image features. Generation started from a fixed 4x4x512 tensor that was progressively refined into 512x512 images. Style injection controlled global appearance, while a detail branch enhanced local features. Training used a logistic loss with the Adam optimizer (learning rate 0.002). A progressive growing strategy from 8x8 to 512x512 resolution was applied over 1.2 million images (minibatch size 32), and generator weights were smoothed with an exponential moving average (EMA). Training ran for a total of 2,700 kimg (2.7 million images), and the checkpoint with the lowest discriminator loss was selected for inference. After training, GANSpace applied principal component analysis (PCA) to reduce the dimensionality of the disentangled latent vectors, creating an actionable interface (Supplementary Fig. 1). Three experienced implantologists manipulated feature vectors through this interface to generate realistic, diverse, multi-type MSL images (normal, mucosal thickening, polypoid lesions, ostium obstruction) at 512x512 pixels, annotating them at the same time.
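The GANSpace step can be illustrated with a small NumPy sketch: sample many intermediate latent vectors, run PCA on them, then shift one vector along a chosen principal direction. Everything here is an assumption made for illustration. The trained mapping network is replaced by a random stand-in (`toy_mapping`), the latent size of 64 is a toy value, and scaling the edit by the component's standard deviation is one common convention, not necessarily what the study's interface does.

```python
import numpy as np

rng = np.random.default_rng(0)
latent_dim = 64  # toy size, chosen only to keep the example fast

# Stand-in for w = mapping_network(z). A real pipeline would call the
# trained 8-layer StyleGAN2 mapping network here (assumption).
mapping_weight = rng.standard_normal((latent_dim, latent_dim)) / np.sqrt(latent_dim)

def toy_mapping(z):
    return np.tanh(z @ mapping_weight)

# 1. Sample many latent vectors and map them to the intermediate space.
z = rng.standard_normal((500, latent_dim))
w = toy_mapping(z)

# 2. PCA via SVD of the centered vectors; rows of `components` are
#    orthonormal principal directions, ordered by explained variance.
w_mean = w.mean(axis=0)
_, singular_values, components = np.linalg.svd(w - w_mean, full_matrices=False)
component_std = singular_values / np.sqrt(len(w) - 1)

def edit_latent(w_vec, component_index, sigma):
    """Shift a latent vector by `sigma` standard deviations along one direction."""
    step = sigma * component_std[component_index]
    return w_vec + step * components[component_index]

# 3. An expert-style edit: push sample 0 along the first direction.
w_edited = edit_latent(w[0], component_index=0, sigma=3.0)
```

In a full pipeline the edited vector would be passed to the synthesis network to render the image, and an operator would vary `component_index` and `sigma` while inspecting the result.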

ReACGAN, an improved ACGAN variant, was also trained. Implemented via the StudioGAN framework, it used Big ResNet as its backbone. Both generator and discriminator used spectral normalization and attention mechanisms. The generator had a latent vector dimension of 140 and employed EMA. The discriminator included an auxiliary classifier. Hinge loss was used, with Adam optimizer, and the discriminator updated twice per iteration over 40,000 steps with Adaptive Discriminator Augmentation. ReACGAN was independently trained on mucosal thickening and polypoid lesion images to generate 512x512 pixel images (Supplementary Fig. 2).
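For readers unfamiliar with the hinge loss mentioned above, the standard adversarial hinge objectives can be written in a few lines. This sketch covers only the real-versus-fake term. The auxiliary classifier loss, spectral normalization, and augmentation used by ReACGAN are omitted, and the function names are hypothetical.

```python
def hinge_discriminator_loss(real_logits, fake_logits):
    """Penalize real logits below +1 and fake logits above -1."""
    real_term = sum(max(0.0, 1.0 - r) for r in real_logits) / len(real_logits)
    fake_term = sum(max(0.0, 1.0 + f) for f in fake_logits) / len(fake_logits)
    return real_term + fake_term

def hinge_generator_loss(fake_logits):
    """The generator tries to raise the discriminator's logits on fakes."""
    return -sum(fake_logits) / len(fake_logits)

# Toy logits, not taken from the study.
d_loss = hinge_discriminator_loss([2.0, 0.5], [-2.0, 0.0])
g_loss = hinge_generator_loss([-2.0, 0.0])
```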

Model construction and training used a local server with NVIDIA Tesla V100 GPUs. The code is open source at https://github.com/xhli-code/stylegan2-ganspace?utm_source=imaginepro.ai.

Evaluation of generated MSL images

Generated MSL images were evaluated against original lesion images using SSIM and MMD. SSIM measures similarity (closer to 1 is better). MMD evaluates feature distribution (lower is better, closer to 0 indicates more realism).
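Both metrics can be sketched in NumPy. The article does not say how they were computed, so the details below are assumptions: SSIM is evaluated once over the whole image rather than averaged over local windows (as library implementations such as scikit-image's `structural_similarity` do), and MMD uses a biased estimator with an RBF kernel on generic feature vectors.

```python
import numpy as np

def global_ssim(x, y, data_range=255.0):
    """SSIM computed once over the whole image (no sliding window)."""
    x = np.asarray(x, dtype=np.float64)
    y = np.asarray(y, dtype=np.float64)
    c1 = (0.01 * data_range) ** 2  # stabilizers from the standard SSIM definition
    c2 = (0.03 * data_range) ** 2
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator

def mmd_squared(a, b, gamma=None):
    """Biased estimate of squared MMD with an RBF kernel.

    a, b: arrays of shape (n_samples, n_features), for example flattened
    images or feature embeddings of real and generated images.
    """
    a = np.asarray(a, dtype=np.float64)
    b = np.asarray(b, dtype=np.float64)
    if gamma is None:
        gamma = 1.0 / a.shape[1]  # simple default bandwidth (assumption)

    def kernel(p, q):
        sq_dist = ((p[:, None, :] - q[None, :, :]) ** 2).sum(axis=-1)
        return np.exp(-gamma * sq_dist)

    return kernel(a, a).mean() + kernel(b, b).mean() - 2 * kernel(a, b).mean()
```

An image compared with itself gives an SSIM of 1, and two samples drawn from the same distribution give an MMD near 0, which matches the "closer to 1" and "closer to 0" readings above.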

A clinical validation experiment assessed realism. For mucosal thickening, 100 images each from the original dataset, StyleGAN2-generated, and ReACGAN-generated sets formed three blind sub-datasets. Three experienced implantologists independently scored lesion realism on a 5-point scale (1=unrealistic, 5=convincingly realistic). This was repeated for polypoid lesions.

Diagnostic optimization experiments based on ResNet50

ResNet50, which had previously shown superior performance, was used to optimize the diagnosis of mucosal thickening and polypoid lesions (600 epochs, learning rate 0.0001, batch size 32). For mucosal thickening, ResNet50 was first trained on a baseline set and tested on Internal Test Set 1. The baseline set was then optimized separately with StyleGAN2-generated and ReACGAN-generated images, and the model was retrained and re-tested. This was repeated across all 10 baseline sets. A parallel approach was used for polypoid lesions with Internal Test Set 2. The effect of traditional DA (flipping/rotation) was also compared. External validation used External Test Sets 1 and 2.

Performance was evaluated using F1 score, precision-recall curves (PRC), and receiver operating characteristic (ROC) curves. Precision and recall at the optimal F1-score threshold were also reported. Python (version 3.7) was used for analysis.
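Reporting precision and recall at the optimal F1 threshold amounts to scanning candidate thresholds and keeping the one with the highest F1. A minimal sketch follows, with a hypothetical function name and toy scores that are not study data.

```python
def best_f1_operating_point(y_true, scores):
    """Try every distinct score as a threshold and keep the best-F1 point.

    y_true: 1 for lesion, 0 for normal. scores: predicted lesion probability.
    A sample is called positive when its score is >= the threshold.
    """
    best = {"threshold": None, "f1": -1.0, "precision": 0.0, "recall": 0.0}
    for threshold in sorted(set(scores)):
        predicted = [s >= threshold for s in scores]
        tp = sum(p and t == 1 for p, t in zip(predicted, y_true))
        fp = sum(p and t == 0 for p, t in zip(predicted, y_true))
        fn = sum((not p) and t == 1 for p, t in zip(predicted, y_true))
        if tp == 0:
            continue  # precision and recall are both 0, so F1 cannot win
        precision = tp / (tp + fp)
        recall = tp / (tp + fn)
        f1 = 2 * precision * recall / (precision + recall)
        if f1 > best["f1"]:
            best = {"threshold": threshold, "f1": f1,
                    "precision": precision, "recall": recall}
    return best

y_true = [0, 0, 1, 0, 1, 1, 0, 1]
scores = [0.1, 0.3, 0.35, 0.4, 0.6, 0.7, 0.75, 0.9]
best = best_f1_operating_point(y_true, scores)
print(best)
```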

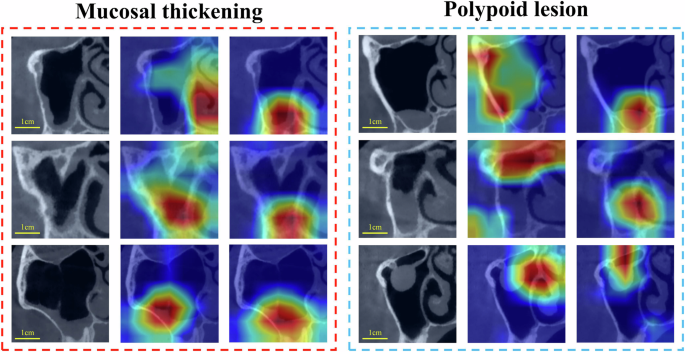

Visualization of the diagnostic model ResNet50

Grad-CAM was used to visualize ResNet50's regions of interest (ROI) on internal test images, providing insights into model performance before and after training set optimization.
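The core Grad-CAM computation is short once the feature maps and their gradients are available. The sketch below assumes both have already been extracted from a convolutional layer (in practice through hooks in a deep learning framework, typically on the last convolutional block). The arrays are toy values, and the function name is hypothetical.

```python
import numpy as np

def grad_cam(activations, gradients):
    """Compute a normalized Grad-CAM heatmap.

    activations: feature maps of one conv layer, shape (channels, H, W).
    gradients: gradient of the class score with respect to those maps,
               same shape.
    """
    # Channel weights: global average of the gradients per channel.
    weights = gradients.mean(axis=(1, 2))
    # Weighted sum of feature maps, then ReLU to keep positive evidence only.
    cam = np.maximum(np.tensordot(weights, activations, axes=1), 0.0)
    peak = cam.max()
    return cam / peak if peak > 0 else cam

# Toy example: channel 0 fires at the center and supports the class,
# channel 1 fires in a corner and counts against it.
activations = np.zeros((2, 3, 3))
activations[0, 1, 1] = 2.0
activations[1, 0, 0] = 1.0
gradients = np.stack([np.full((3, 3), 0.5), np.full((3, 3), -0.5)])
heatmap = grad_cam(activations, gradients)
```

The heatmap is usually upsampled to the input resolution and overlaid on the image, which is how the regions of interest are compared before and after training set optimization.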

Statistics and reproducibility

Data normality was assessed using the Shapiro-Wilk test. The Mann-Whitney U test compared average scores in clinical validation and differences between optimization techniques in diagnostic experiments. P <0.05 was considered statistically significant.
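As an illustration of the group comparison, here is a self-contained Mann-Whitney U test using only the Python standard library. It relies on the normal approximation with tie correction and no continuity correction, which is an assumption of this sketch. The article does not state which implementation was used, and library routines such as `scipy.stats.mannwhitneyu` and `scipy.stats.shapiro` would be the usual choice in practice. The example scores are invented.

```python
from math import sqrt
from statistics import NormalDist

def mann_whitney_u(x, y):
    """Two-sided Mann-Whitney U test (normal approximation, tie-corrected).

    Returns (U, p_value), where U is the smaller of the two U statistics.
    """
    pooled = sorted((value, group) for group, sample in enumerate((x, y))
                    for value in sample)
    n1, n2 = len(x), len(y)
    n = n1 + n2

    rank_sum_x = 0.0
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j < n and pooled[j][0] == pooled[i][0]:
            j += 1
        average_rank = (i + 1 + j) / 2  # mean of ranks i+1 .. j
        tie_size = j - i
        tie_term += tie_size ** 3 - tie_size
        rank_sum_x += average_rank * sum(1 for k in range(i, j) if pooled[k][1] == 0)
        i = j

    u_x = rank_sum_x - n1 * (n1 + 1) / 2
    u = min(u_x, n1 * n2 - u_x)
    variance = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if variance == 0:
        return u, 1.0  # all values identical: no evidence of a difference
    z = (u - n1 * n2 / 2) / sqrt(variance)  # z <= 0 because u is the minimum
    return u, min(1.0, 2 * NormalDist().cdf(z))

# Invented 5-point realism scores for two image sources.
scores_a = [5, 5, 4, 5, 4]
scores_b = [1, 1, 2, 1, 1]
u_stat, p_value = mann_whitney_u(scores_a, scores_b)
```

Tie correction matters here because scores on a 5-point scale repeat often.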

Reporting summary

Further research design information is in the Nature Portfolio Reporting Summary linked to the article.

Results

Construction and image generation of StyleGAN2 and ReACGAN

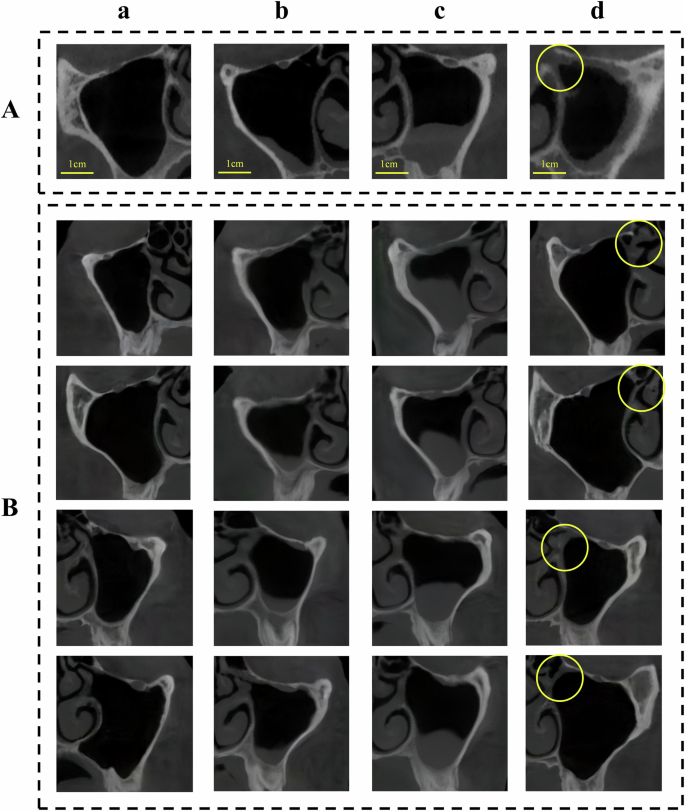

The StyleGAN2-based MSL image generator architecture is shown in Fig. 1B. Training of the StyleGAN2 generator and discriminator ran to completion, with both losses stabilizing (Fig. 2). To address data imbalance, implantologists used GANSpace to generate 200 mucosal thickening images and 400 polypoid lesion images. Other image types, such as normal and ostium obstruction images, could also be generated (Fig. 3). For comparison, the trained ReACGANs autonomously generated the same number of lesion images without expert intervention (Supplementary Fig. 2).

Evaluation of generated MSL images

StyleGAN2-generated mucosal thickening and polypoid lesion images achieved high SSIM values (0.9966 and 0.9962, respectively) and low MMD values (0.0155 and 0.0317, respectively), indicating good fidelity and alignment with real images. In contrast, ReACGAN-generated images showed bias, artifacts, or missing features, with lower SSIM scores (0.4781 and 0.4564) and higher MMD values (0.1994 and 0.1895). These values are reported in Table 1.

Clinical validation by three implantologists showed that StyleGAN2-generated images received realism scores comparable to those of real images (mean 4.33 ± 0.38 for mucosal thickening, 4.02 ± 0.44 for polypoid lesions), whereas ReACGAN images scored near 1 for both lesion types. The mean score of StyleGAN2-generated mucosal thickening images nevertheless differed significantly from that of real images (P = 0.001). StyleGAN2 and ReACGAN image scores differed significantly for both lesion types (both P < 0.001). These results are reported in Tables 2 and 3.

Diagnostic optimization experiments based on ResNet50

Results of the diagnostic optimization experiments are reported in Table 4. For mucosal thickening, optimizing datasets with StyleGAN2 images significantly improved AUPRC (P < 0.001), AUROC (P = 0.001), and F1 score (P = 0.011) compared to baseline. For polypoid lesions, the improvements in AUPRC, AUROC, and F1 score were even more pronounced (all P < 0.001), with AUPRC increasing by nearly 14%. ReACGAN-based optimization showed no substantial difference from baseline for either lesion type: only AUPRC for mucosal thickening reached statistical significance (P = 0.043), and no other metric did. Traditional DA (flipping/rotation) significantly degraded performance for both lesions.

On the external test sets (Table 5), ResNet50 trained with StyleGAN2-optimized data showed a slight improvement over baseline for mucosal thickening diagnosis. Polypoid lesion prediction improved markedly, with statistically significant differences (P = 0.001 for all metrics).

Visualization of the diagnostic model ResNet50

Grad-CAM visualization (Fig. 4) showed that integrating StyleGAN2-generated images into the baseline training set enabled ResNet50 to focus its ROI more precisely on mucosal thickening and polypoid lesion locations, aiding more accurate predictions.

Discussion

This study successfully developed a StyleGAN2-based MSL image generation tool, producing high-quality, realistic, and diverse mucosal thickening and polypoid lesion images, confirmed by SSIM, MMD, and clinical validation. Integrating these images into ResNet50 training significantly improved diagnostic performance for both lesion types, evidenced by increased F1 scores, AUPRC, AUROC, and better Grad-CAM visualizations.

StyleGAN2, with expert control, generated realistic and diverse MSL images. It even showed signs of hyper-realism: the average score of StyleGAN2-generated mucosal thickening images differed significantly from that of real images (P = 0.001). ReACGAN-generated images performed worse, which highlights the value of StyleGAN2's feature disentanglement and GANSpace-driven latent space manipulation for fine-tuned adjustments. Traditional GANs like ReACGAN, being automatic black boxes, struggle with image realism and diversity, posing risks for medical applications that require high reliability. Lack of diversity can also prevent diagnostic models from capturing varied lesion features.

Clinicians generated multi-class MSL images using a single StyleGAN2 framework, unlike ReACGAN which needs lesion-specific training. This makes StyleGAN2 more suitable for medical image generation, reducing computational costs and addressing data collection challenges. Previous studies using multiple GANs for different lesion types contrast with this study's approach, which establishes an effective methodological framework. The class-free training and class-conditional controllable generation hold promise for medical DA to enhance computer-aided diagnosis.

Identifying which latent vectors correspond to specific image features remains challenging. Empirically, initial vectors control global attributes (e.g., maxillary sinus shape/size), while later vectors affect fine details (e.g., mucosal thickness). Leveraging this pattern allows control and combination of vectors to generate various MSL types.

In diagnostic optimization, ResNet50's baseline performance for mucosal thickening was robust, likely due to its uniform characteristics. StyleGAN2 optimization still significantly improved performance, with Grad-CAM showing more focused ROIs. For polypoid lesions, with greater variability, diagnostic improvement was more pronounced. Increased sample size particularly benefited model performance. Nuanced evaluation showed increased precision for mucosal thickening (recall unchanged), and improved recall for polypoid lesions (precision decreased), suggesting room for refinement (Supplementary Table 2).

ReACGAN-generated images yielded limited or no improvement in diagnostic performance, suggesting that unsupervised generation may lack the quality needed for medical tasks, possibly because of the study's dataset size. Traditional DA (flipping/rotation) degraded performance, potentially by altering the original feature distributions. Combining traditional DA with StyleGAN2 optimization for polypoid lesions restored performance to baseline levels, mitigating the negative impact of traditional DA and underscoring the effect of StyleGAN2.

External test set results were consistent with internal tests, confirming generalizability, especially for polypoid lesions. Mucosal thickening improvement was less satisfactory, highlighting dataset uniqueness from different sources and the importance of multi-center data. Classes with more imbalanced distributions, like polypoid lesions, benefited more significantly from dataset optimization.

Study limitations include moderate sample size; larger, multi-center datasets are needed for enhanced reliability and generalizability, and to reduce overfitting. Exploring diverse generative models, DA techniques, and other classification tasks would broaden applicability. The methodology's reliance on expert access limits use in low-resource settings; semi-supervised learning for less labor-intensive annotation warrants consideration.

In summary, the StyleGAN2-based image generation tool effectively addressed medical imaging data acquisition and imbalance challenges for MSL conditions like mucosal thickening and polypoid lesions, significantly improving diagnostic performance. This strategy can be extended, enabling efficient generation of high-quality, diverse, multi-type medical images with lower privacy/data risks, potentially advancing AI-driven research in dental medicine through virtual public oral medical imaging databases.

Data availability

Representative images generated by StyleGAN2 were included as Supplementary Data 1. The source data for the figures can be found in Supplementary Data 2. To protect study participant privacy, other data used and/or analyzed during the current study are available from the corresponding author upon reasonable request.

Code availability

The code for this study is open source and has been released at https://github.com/xhli-code/stylegan2-ganspace?utm_source=imaginepro.ai.
