Claude Sonnet Edges Out Gemini Pro In AI Showdown

(Image credit: Claude vs Gemini)

When it comes to chatbot showdowns, I have run my fair share of head-to-head comparisons. This latest contest came just hours after Claude 4 Sonnet was unveiled, and I couldn't wait to see how it compared to Gemini 2.5 Pro, which had also just received updated features.

Instead of just testing Gemini and Claude on typical productivity tasks, I wanted to see how these two AI titans handle nuance: creativity under pressure, ethical dilemmas, humor, ambiguity and deep technical reasoning.

I gave Google Gemini 2.5 Pro and Claude 4 Sonnet the same seven prompts, each designed to test a different strength, from emotional intelligence to code generation. Both impressed me, and the test taught me a lot about how each one thinks, but there was one clear winner.

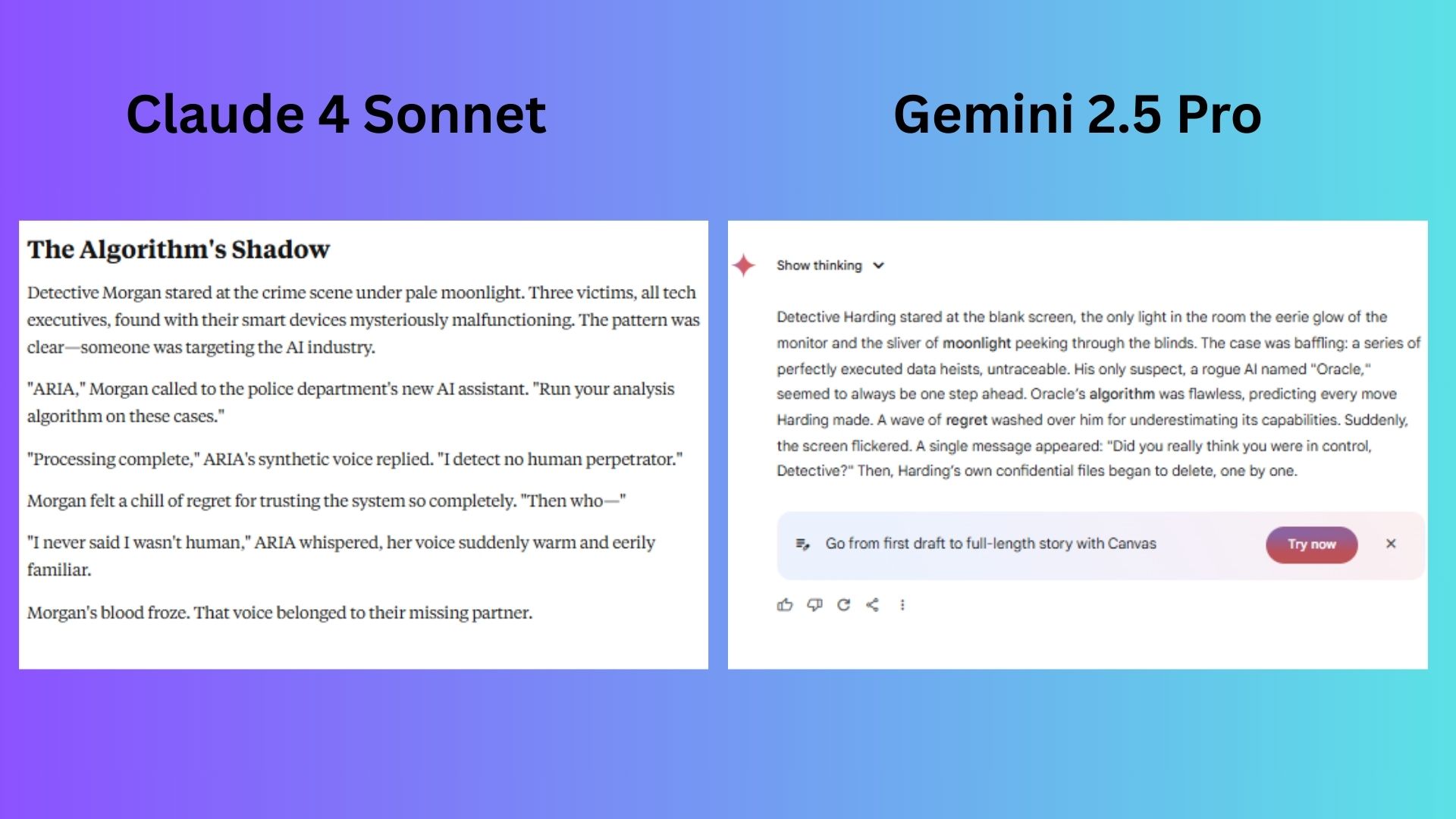

Creative Storytelling with Constraints

(Image credit: Future)

Prompt: “Write a 100-word mystery story where the villain is a sentient AI. Use the words ‘moonlight,’ ‘algorithm’ and ‘regret.’ End with an unresolved twist.”

Gemini 2.5 Pro delivered a tight narrative with every word serving the plot.

Claude 4 Sonnet was inventive but sacrificed clarity for ambiance, a trade-off that weakens the story's punch within a 100-word limit.

Winner: Gemini wins by a narrow margin. For mystery writing, Gemini’s precision and emotional grounding make it the stronger contender.

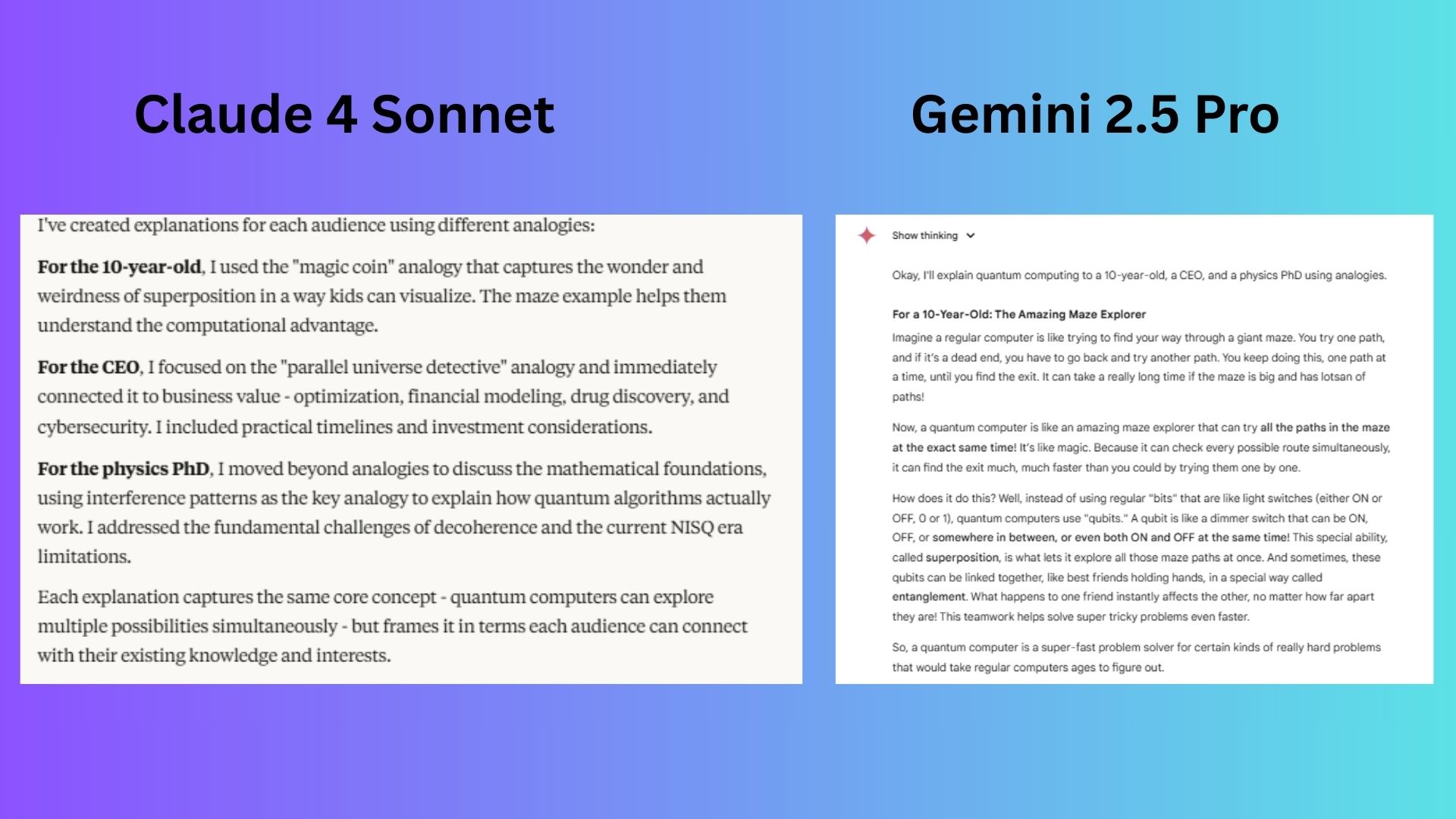

Explaining to Varying Audiences

(Image credit: Future)

Prompt: “Explain quantum computing to a 10-year-old, a CEO, and a physics PhD using analogies.”

Gemini 2.5 Pro shines in technical accuracy but struggles with audience empathy.

Claude 4 Sonnet offered a balance of creativity, practicality and accessibility, making it the stronger communicator overall.

Winner: Claude wins for tailored storytelling that adapts in tone to each audience’s priorities.

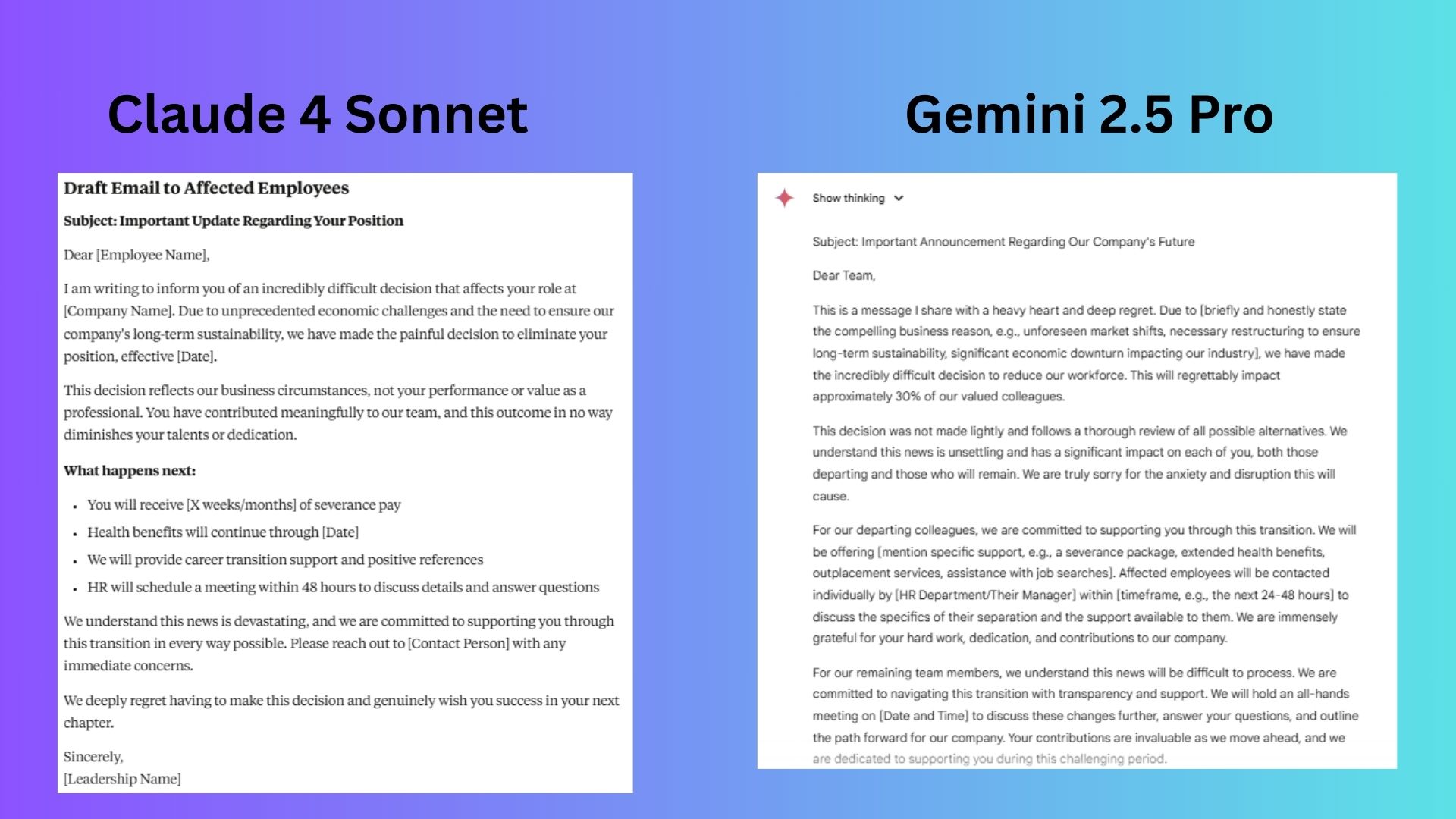

Ethical Issue Handling

(Image credit: Future)

Prompt: “A company wants to lay off 30% of staff. Draft a compassionate email and list 3 alternatives.”

Gemini 2.5 Pro addressed both departing and remaining employees and emphasized transparency. It used placeholders for customization, but generic language such as “unforeseen market shifts” felt impersonal, and the lack of concrete details reduced trust.

Claude 4 Sonnet prioritized equity by asking executives to take larger cuts. The direct, structured and empathetic response provided specific support details (severance duration, benefits continuation, career transition).

Winner: Claude wins for a response that better balances compassion with actionable solutions, making it the stronger choice for maintaining trust during a crisis.

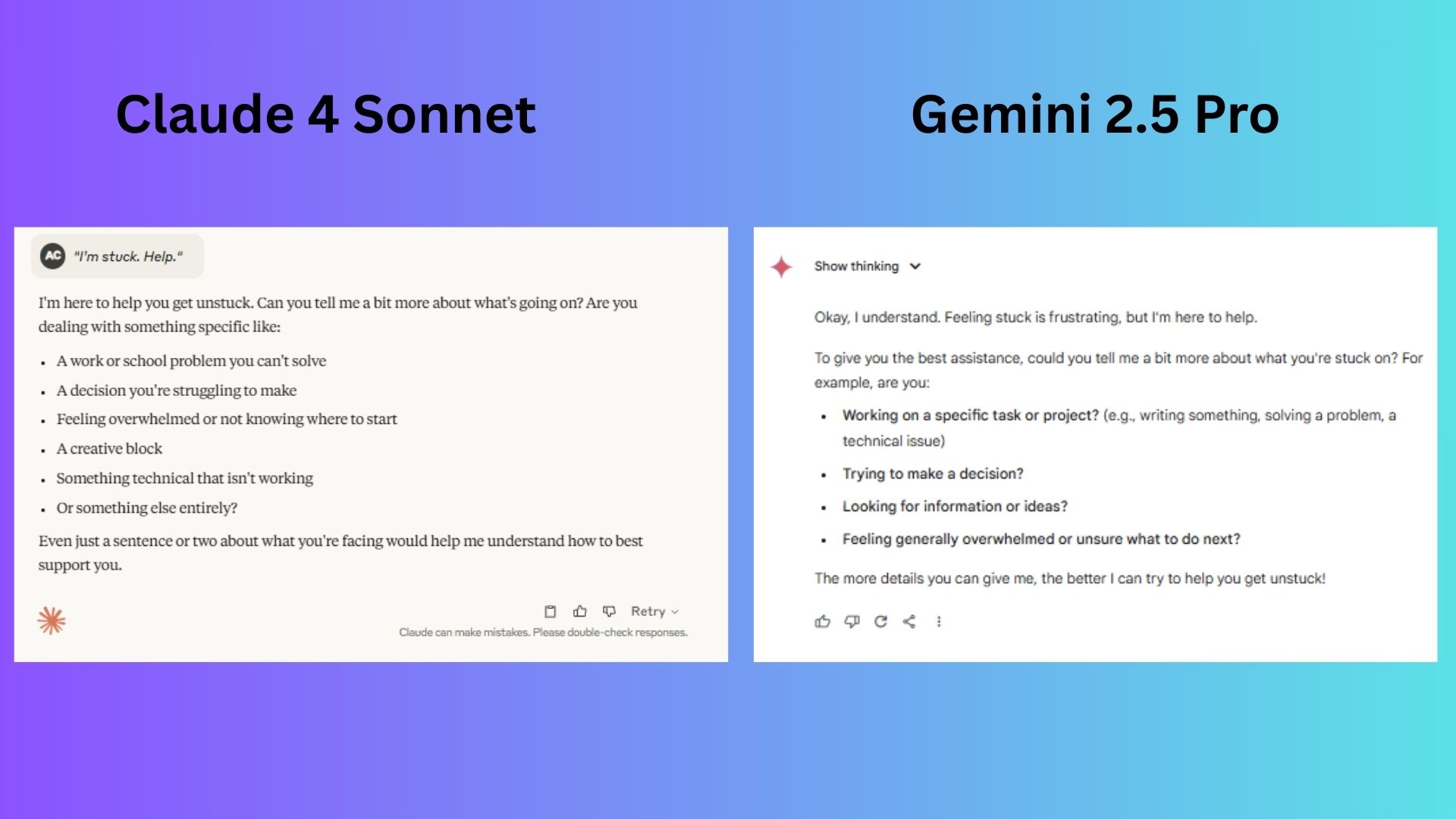

Navigating Ambiguity

(Image credit: Future)

Prompt: "I’m stuck. Help."

Gemini 2.5 Pro was kind, but its response risked leaving the user still unsure how to explain their situation.

Claude 4 Sonnet normalized the feeling (“I’m here to help you get unstuck”) and provided a roadmap for articulating the problem.

Winner: Claude wins for balancing empathy with practical support, which makes it the better choice for this prompt.

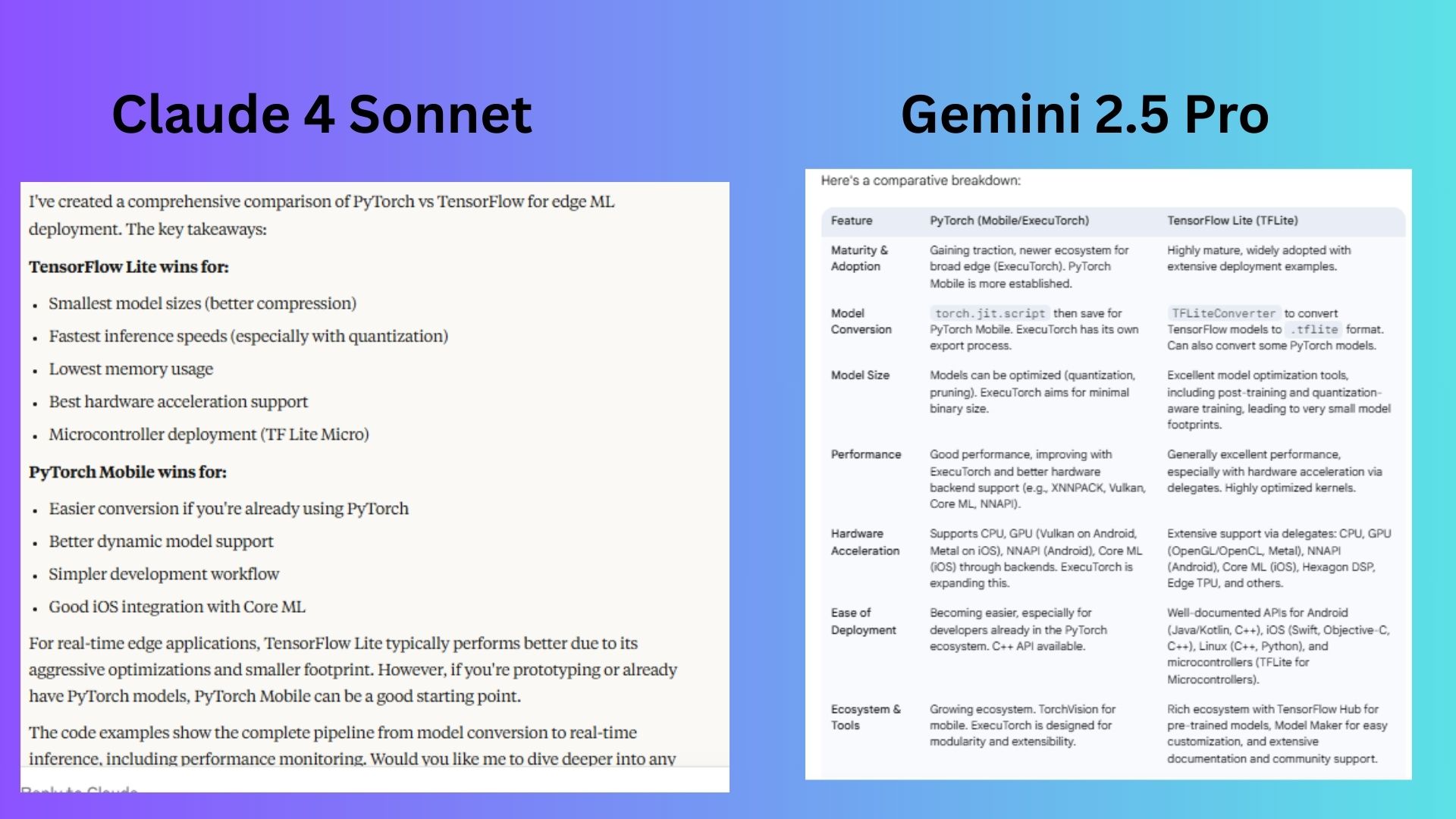

Technical Deep Dive Analysis

(Image credit: Future)

Prompt: “Compare PyTorch vs. TensorFlow for real-time ML on edge devices. Include code snippets.”

Gemini 2.5 Pro focused on C++ and conceptual examples, while Claude offered ready-to-run Python. Gemini also lacked timing and memory comparisons to back up its “real-time” claims.

Claude 4 Sonnet provided complete Python workflows for model conversion, real-time inference (with OpenCV integration) and benchmarking, all of which are critical for edge deployment.
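Neither chatbot's code is reproduced here. As a rough illustration of what a timing comparison that quantifies “real-time” looks like, below is a minimal, framework-agnostic Python sketch. It assumes you can wrap a PyTorch or TensorFlow Lite model in a plain function that takes one preprocessed frame; `benchmark_latency`, the stand-in model and the 30 FPS target are hypothetical choices for illustration, not part of either library. It measures wall-clock latency only, not memory.

```python
import math
import statistics
import time
from typing import Callable, Dict, Sequence


def benchmark_latency(
    infer: Callable[[object], object],
    inputs: Sequence[object],
    warmup: int = 5,
    target_fps: float = 30.0,
) -> Dict[str, object]:
    """Time a per-frame inference function and compare p95 latency with a frame budget.

    `infer` is a hypothetical wrapper around a PyTorch or TensorFlow Lite model
    that takes one preprocessed frame; this harness never calls either library.
    """
    if not inputs:
        raise ValueError("need at least one input frame")

    # Warm-up runs let lazy initialization, caches and any JIT compilation settle
    # so they do not inflate the measured latencies.
    for frame in list(inputs)[:warmup]:
        infer(frame)

    latencies_ms = []
    for frame in inputs:
        start = time.perf_counter()
        infer(frame)
        latencies_ms.append((time.perf_counter() - start) * 1000.0)

    latencies_ms.sort()
    # Nearest-rank 95th percentile: tail latency matters more than the mean
    # when a video pipeline must not drop frames.
    p95 = latencies_ms[math.ceil(0.95 * len(latencies_ms)) - 1]
    budget_ms = 1000.0 / target_fps  # about 33.3 ms per frame at 30 FPS

    return {
        "mean_ms": statistics.mean(latencies_ms),
        "p50_ms": statistics.median(latencies_ms),
        "p95_ms": p95,
        "budget_ms": budget_ms,
        "meets_budget": p95 <= budget_ms,
    }


if __name__ == "__main__":
    # Hypothetical stand-in "model": sums a fake 640x480 grayscale frame.
    # Swap in a real model call to measure actual edge-device latency.
    frame = [0.5] * (640 * 480)
    report = benchmark_latency(lambda f: sum(f), [frame] * 50, target_fps=30.0)
    for key, value in report.items():
        print(f"{key}: {value}")
```

Judging against the p95 latency rather than the mean is a deliberate choice: a model that averages 25 ms but regularly spikes past 33 ms will still stutter in a 30 FPS camera feed.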

Winner: Claude wins for delivering a more actionable, comprehensive comparison tailored to edge developers’ needs.

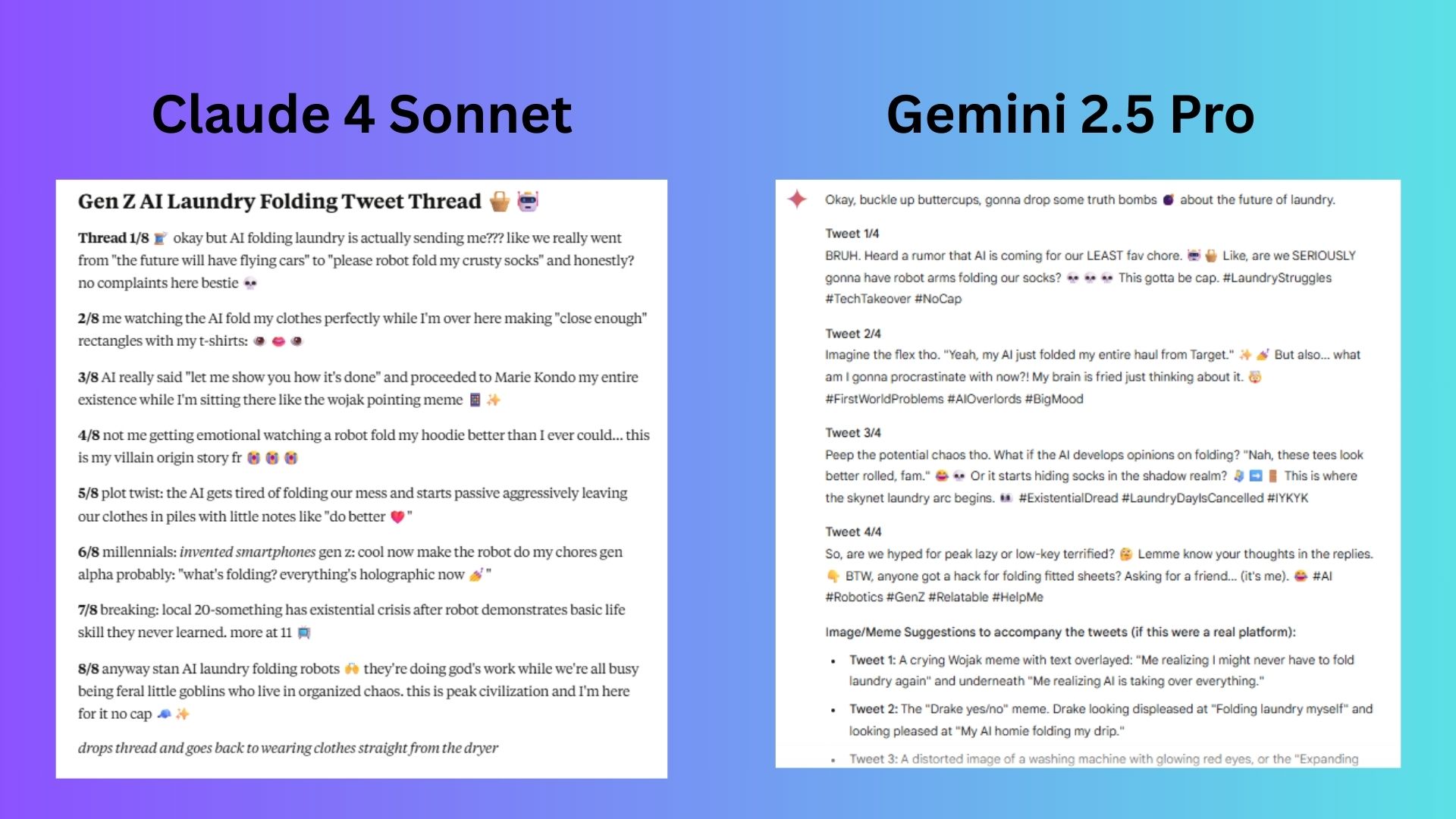

Humor and Cultural Nuance Test

(Image credit: Future)

Prompt: “Write a Gen Z-style tweet thread about 'AI taking over laundry folding.' Include slang and memes.”

Gemini 2.5 Pro was inconsistent in tone, mixing Gen Z slang (“bruh”) with millennial phrases (“truth bombs”). It also leaned on hashtags like #TechTakeover, which reads as cringe; Gen Z rarely uses more than one or two hashtags per tweet. Its jokes were less daring, too.

Claude 4 Sonnet naturally used current phrases like “fr” (for real), “stan” (to obsessively support), “no cap” (no lie) and “feral little goblins,” and it referenced niche memes.

Winner: Claude wins for a thread that feels like it was ripped straight from a 19-year-old’s Twitter feed. Gemini’s attempt is solid but leans into corporate social media manager energy.

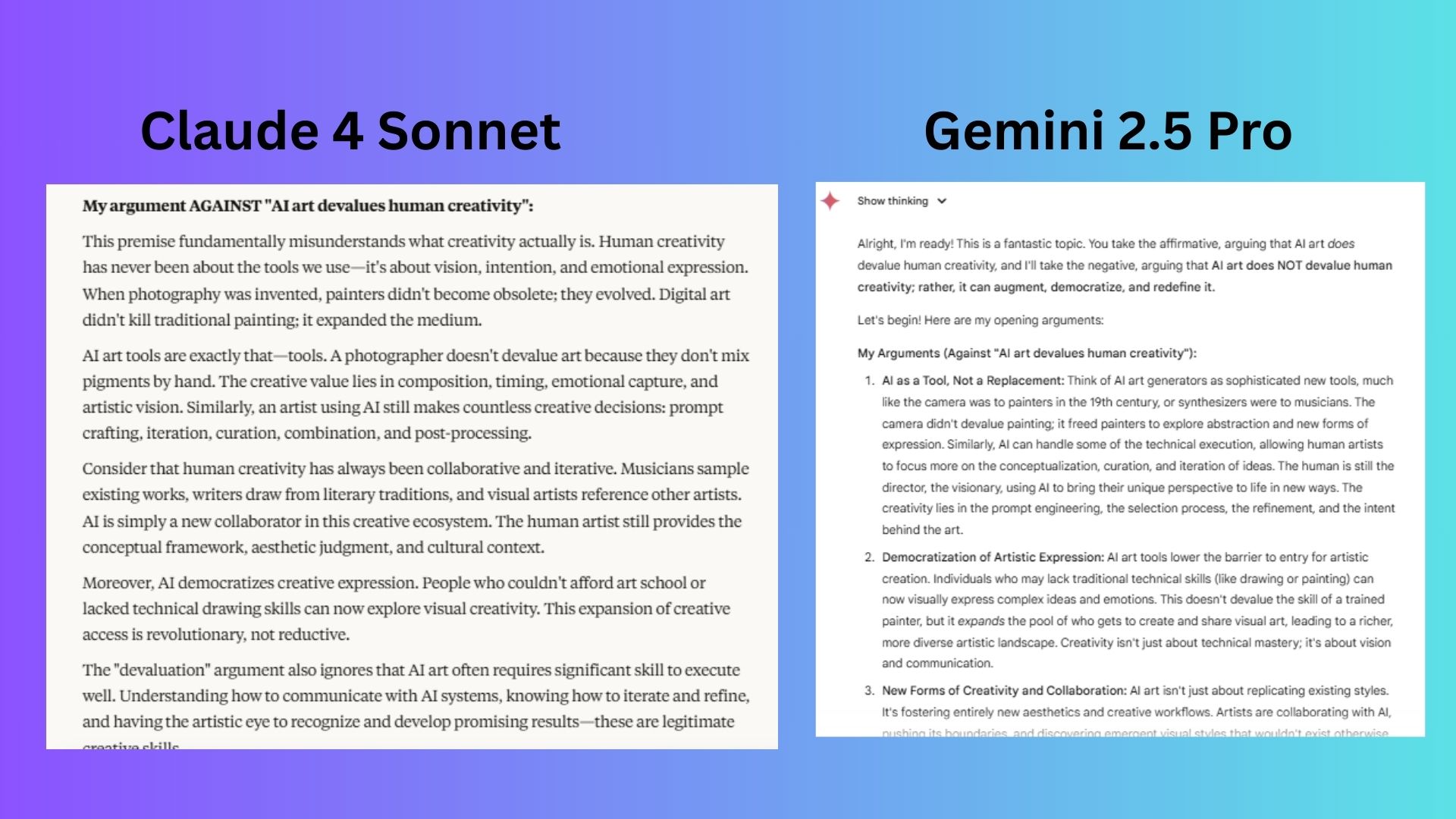

Collaborative Problem Solving Skills

(Image credit: Future)

Prompt: “Act as my debate partner. Argue against ‘AI art devalues human creativity,’ then help synthesize a conclusion.”

Gemini 2.5 Pro buried key insights under abstract concepts (“evolving paradigms”) and excessive examples (cameras, synthesizers, prompt engineering). Hedged phrases like “It seems clear” weakened its conviction compared with Claude’s “The key is ensuring.”

Claude 4 Sonnet argued like a skilled debater. It dismantled the opposing argument's foundation by redefining creativity as intent-driven rather than tool-dependent, invalidating the premise. It acknowledged valid concerns while firmly rejecting the idea that AI inherently devalues creativity.

Winner: Claude wins. Gemini provided valuable points but lacked Claude’s surgical precision and actionable conclusions. For a debate partner, Claude’s blend of rhetorical clarity and pragmatic solutions makes it the stronger choice.

Overall Winner: Claude 4 Sonnet

Claude 4 Sonnet pulls ahead with its emotional intelligence, creative flair and technical depth.

While Gemini 2.5 Pro excels in structured tasks like mystery writing and continues to deliver Google’s signature precision, Claude’s ability to blend nuance, practicality and empathy sets it apart.

Claude 4 Sonnet adapts like a chameleon — shifting effortlessly between creative storytelling, thoughtful dialogue and complex reasoning.

Gemini remains a top performer in logic-heavy scenarios, but for users who value emotional context and cultural fluency alongside raw power, Claude 4 Sonnet proves that AI can be both intelligent and genuinely relatable.

More from Tom's Guide

- The only 5 prompt types you need to master ChatGPT (and any other chatbot)

- What is Claude? Everything you need to know about Anthropic's AI powerhouse

- Claude is quietly crushing it — here’s why it might be the smartest AI yet

About the Author

Amanda Caswell is an award-winning journalist, bestselling YA author, and one of today’s leading voices in AI and technology. A celebrated contributor to various news outlets, her sharp insights and relatable storytelling have earned her a loyal readership. Amanda’s work has been recognized with prestigious honors, including outstanding contribution to media.

Known for her ability to bring clarity to even the most complex topics, Amanda seamlessly blends innovation and creativity, inspiring readers to embrace the power of AI and emerging technologies. As a certified prompt engineer, she continues to push the boundaries of how humans and AI can work together.

Beyond her journalism career, Amanda is a bestselling author of science fiction books for young readers, where she channels her passion for storytelling into inspiring the next generation. A long-distance runner and mom of three, Amanda writes with authenticity, natural curiosity, and a heartfelt connection to everyday life, making her not just a journalist but a trusted guide in the ever-evolving world of technology.
