
AI Boosts Fetal Ultrasound Accuracy and Trust

Revolutionizing Prenatal Care: AI in Fetal Ultrasounds

Fetal health monitoring is a cornerstone of prenatal care, vital for tracking fetal development and ensuring both maternal and fetal well-being. Regular checkups give healthcare professionals (HCPs) crucial insights, allowing early identification and management of potential health issues. Monitoring fetal growth, development, and particularly head structure is essential, because the head houses the developing brain and its development can have long-term health consequences. Routine prenatal screenings are crucial for detecting abnormalities that might indicate congenital anomalies, growth restriction, or other health concerns. These assessments help HCPs check whether fetal growth is on a normal trajectory and intervene early if deviations are found, with interventions ranging from adjusted maternal care to more frequent monitoring. Routine fetal health assessments therefore support strategies that protect both mother and child.

The fetal head, containing the developing brain, is a major indicator of neurological health and overall development. Clinicians examine dimensions such as head circumference and biparietal diameter to assess brain growth and identify abnormalities such as microcephaly, hydrocephalus, or unusual brain development patterns, which are often seen in babies with cerebral palsy. Early detection of these issues allows targeted interventions, such as specialized monitoring or preparing for medical support at birth. Fetal head examination is thus a critical part of prenatal care for predicting and managing conditions that could impair a child’s cognitive and physical development. Traditionally, fetal head measurements are taken from ultrasound images and interpreted manually by radiologists and sonographers. This manual process is time-consuming and subject to inter-operator variability, and its accuracy is further affected by image artifacts, variations in fetal position, and differences in practitioner skill. Consequently, there is an urgent need for artificial intelligence (AI) that can analyze fetal head images reliably and accurately, providing clinicians with consistent results.

The Current Landscape: A Look at Existing Research

In recent years, deep learning has begun to transform medical imaging, offering automated image analysis that could improve diagnostic accuracy and efficiency. Deep learning models have shown promise in segmenting and analyzing ultrasound images for fetal head assessment, identifying key structures and providing precise measurements. Trained on large amounts of labeled data, these models learn to recognize patterns in ultrasound images, enabling automatic segmentation and measurement. Deep learning offers speed and consistency and can also reduce reliance on individual practitioner expertise, potentially standardizing fetal head assessment. The U-Net++ architecture with a ResNet backbone is an effective model for medical image segmentation, particularly for fetal head ultrasound. The dense skip pathways of U-Net++ enrich feature representation and improve accuracy on complex structures like the fetal head, while the ResNet backbone provides the deep feature extraction needed to capture fine details in medical images. In this study, U-Net++ paired with ResNet outperformed SegNet and U-Net with attention blocks on segmentation, accurately capturing fetal head borders even in challenging ultrasound images.

Transparency and interpretability are vital in medical imaging, which makes Explainable AI (XAI) important. For fetal head segmentation, XAI lets clinicians understand and trust a model’s decisions by highlighting the image regions that influenced its predictions. Techniques such as Grad-CAM and Guided Grad-CAM give visual insight into what the model focuses on during segmentation, helping to confirm that it identifies the correct structures. To improve the interpretability of the U-Net++ model, this study integrates XAI to build clinician confidence in automated tools and support more informed decision-making. Despite deep learning's promise for fetal head segmentation, research on reliable, consistent segmentation across diverse ultrasound images remains limited. Model performance can be affected by variations in image quality, fetal position, and noise. Furthermore, clinical adoption requires insight into how segmentation models reach their outputs. This work therefore aims to develop an accurate and interpretable deep learning model for fetal head segmentation in ultrasound images that balances segmentation quality with explainability for clinical decision-making. Specifically, the goal was to design and evaluate a U-Net++ model with a ResNet backbone for high-accuracy segmentation of fetal head ultrasound images, and to integrate XAI techniques that visually explain its predictions for clinical use.

The contributions of this study include:

- Proposing fetal head segmentation using U-Net++ with a ResNet backbone, achieving a higher Intersection over Union (IoU) than models such as SegNet and U-Net with an attention block.

- Combining XAI techniques to offer visual insights into the model’s decision-making process.

- Evaluating the model on fetal head ultrasound images, demonstrating its excellent accuracy and interpretability.

- Unifying high-accuracy segmentation with interpretability to develop a reliable tool for clinical fetal health monitoring.

The paper is organized as follows: Section "Literature review" details traditional methods, deep learning in medical imaging, and XAI applications. Section "Proposed methodology" outlines the dataset, preprocessing, model architecture, and XAI techniques. Section "Results" presents the findings, comparing U-Net++ performance and XAI visualization. The paper discusses contributions and clinical impact in Section "Discussion", concludes in Section "Conclusion", and outlines "Future work and limitations".

This section reviews recent work in fetal ultrasound image analysis, focusing on segmentation techniques and clinical applications. Researchers have developed various deep learning methods, including encoder-decoder architectures, attention mechanisms, and hybrid models, to improve segmentation accuracy and efficiency. These techniques address issues such as poor image quality, boundary ambiguity, and anatomical variability. Prominent works demonstrate superior performance in fetal brain tissue and head segmentation through novel datasets and loss functions. However, implementation is often limited by data scarcity, computational complexity, and limited clinical validation.

Yuchi Pan et al. (2024) proposed EBTNet, an Efficient Bilateral Token Mixer Network, for fetal cardiac ultrasound A4C view segmentation to improve congenital heart disease diagnosis. EBTNet combines global and local feature branches for smoother boundaries and better tissue distinction, achieving state-of-the-art performance on the CAMUS and FHSET datasets with mIoU of 71.78% and 94.07% and Dice coefficients of 81.11% and 96.91%, respectively.

Mahmood Alzubaidi et al. (2024) introduced FetSAM, an advanced deep learning model for fetal head ultrasound segmentation. Using a large dataset, prompt-based learning, and a dual loss (Weighted Dice Loss and Weighted Lovasz loss), FetSAM set new benchmarks with a Dice Similarity Coefficient (DSC) of 0.90117 and Hausdorff Distance (HD) of 1.86484.

Lijue Liu et al. (2023) developed AFG-net, a modified U-Net model for transvaginal ultrasonography (TVS) to monitor embryonic development. AFG-net automates segmentation and measurement of the gestational sac, yolk sac, and embryo regions from poor-quality images, outperforming the traditional U-Net with an IoU of 86.15%, a Dice coefficient of 92.11%, and an absolute measurement error of only 0.66 mm.

Xin Yang et al. (2020) pioneered a fully automated solution for segmenting the whole fetal head in 3D ultrasound volumes. They proposed a hybrid attention scheme (HAS) based encoder-decoder deep architecture to tackle poor image quality and variability, achieving a DSC of 96.05% and expert-level performance.

Zhensen Chen et al. (2024) designed a dual path boundary guided residual network (DBRN) for accurate segmentation of fetal head and pubic symphysis in intrapartum ultrasound images. DBRN integrates innovative modules like a multi-scale weighted module (MWM) and an enhanced boundary module (EBM), obtaining a Dice score of 0.908 ± 0.05 and Hausdorff distance of 3.396 ± 0.66 mm.

Gaowen Chen et al. (2024) addressed the lack of public datasets for pubic symphysis and fetal head (PSFH) segmentation by presenting a manually labeled dataset of 1358 images. This dataset aims to bridge a gap in AI-based segmentation research for assessing fetal head descent.

Davood Karimi et al. (2023) investigated automatic fetal brain tissue segmentation to improve quantitative brain development assessments. They developed a novel label smoothing procedure and loss function for noisy data, achieving Dice similarity coefficients of 0.893 and 0.916 on younger and older fetuses, respectively, outperforming methods like nnU-Net.

Xiaona Huang et al. (2023) addressed challenges in fetal brain tissue segmentation by proposing a deep learning method using a contextual transformer block (CoT-Block) and a hybrid dilated convolution module. Their method achieved an average Dice similarity coefficient of 83.79% on 80 fetal brain MRIs.

Mahmood Alzubaidi et al. (2023) presented a dataset of 3832 high-resolution ultrasound images focused on fetal head anatomy. The dataset, with high inter-rater reliability (ICC values from 0.859 to 0.889), supports various computer vision applications in prenatal diagnostics.

Somya Srivastava et al. (2023) emphasized accurate fetal head circumference assessment and introduced an advanced encoder-decoder model with regression and attention-based fusion. Their model, enhanced with post-processing ellipse fitting, achieved a test similarity score of 94.56%.

Table 1: Summary of literature review.

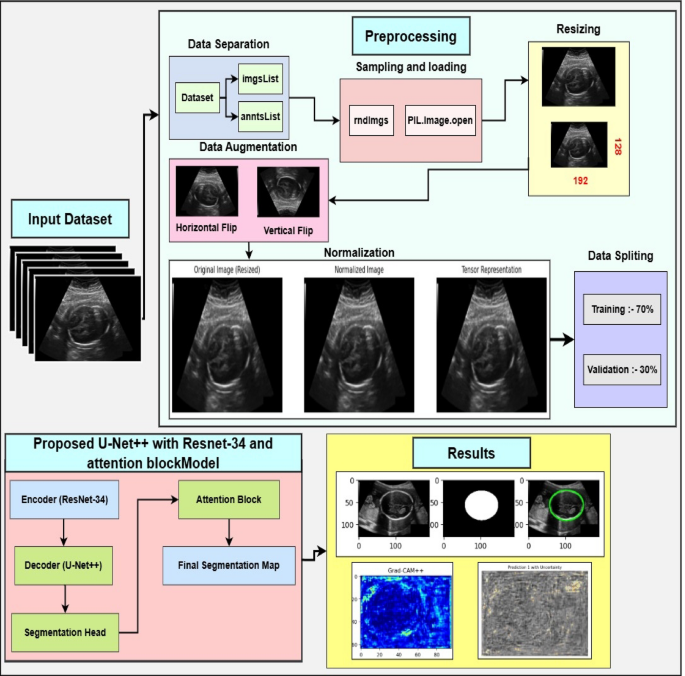

Our Innovation: The Proposed AI Framework

The proposed methodology describes a comprehensive framework for fetal head ultrasound segmentation. We begin with a detailed dataset description, highlighting its diversity and the quality of its annotations, which serve as reliable ground truth. Image preprocessing steps such as normalization, resizing, and noise removal improve image quality and consistency, while data augmentation improves generalizability. U-Net++ with a ResNet backbone is employed for accurate and efficient segmentation. XAI techniques, such as Grad-CAM and uncertainty quantification, provide interpretability and clinical insight. Finally, evaluation metrics ensure a thorough assessment of segmentation accuracy and reliability. The flowchart of the proposed methodology is shown in Figure 1.

Dataset description

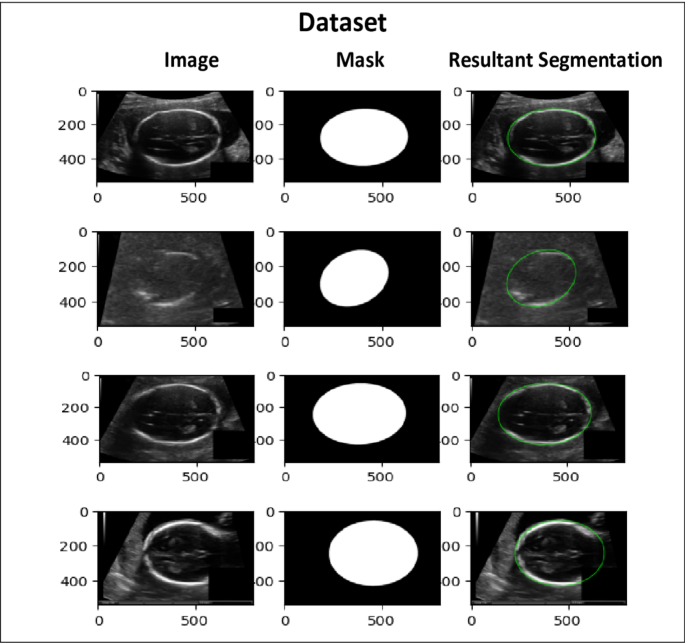

This study uses a publicly available dataset from the Kaggle repository comprising 2335 high-resolution grayscale fetal head ultrasound images designed for segmentation tasks. The dataset covers a diverse range of fetal head orientations, gestational ages, and anatomical complexities, making it a valuable resource for developing and testing segmentation models, as depicted in Figure 2. Each image is accompanied by accurate pixel-level manual annotations of the fetal head boundary, provided by experienced radiologists and sonographers from Radboud University Medical Center. These expert annotations serve as reliable ground truth, so no additional clinical verification was required. The dataset is split into 85% (1985 images) for training and 15% (350 images) for testing, maintaining a balanced representation for robust model generalization. It also includes real-world challenges such as subtle edge differences, variations in image quality, and complex anatomical structures. The proposed U-Net++ architecture with an attention mechanism and a ResNet-34 encoder is designed to handle these complexities. The diversity and quality of this dataset contribute substantially to accurate and clinically relevant segmentation results.
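The source does not say how the 85/15 split was drawn. The following is a minimal sketch, assuming a simple seeded random split over image indices; the function name `split_indices` and the seed value are illustrative, not part of the original pipeline. It reproduces the stated counts of 1985 training and 350 test images.

```python
import random

def split_indices(n_images, test_fraction, seed=0):
    """Shuffle image indices reproducibly and split them into train/test lists."""
    indices = list(range(n_images))
    random.Random(seed).shuffle(indices)      # seeded so the split is repeatable
    n_test = round(n_images * test_fraction)  # 2335 * 0.15 = 350.25 -> 350
    return indices[n_test:], indices[:n_test]

train_idx, test_idx = split_indices(2335, 0.15)
print(len(train_idx), len(test_idx))  # 1985 350
```

If several images come from the same pregnancy, splitting by subject rather than by image would avoid leakage between the training and test sets; whether this applies to this dataset is not stated.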

Prepping the Data: Preprocessing and Augmentation

In this work, preparing the fetal head ultrasound dataset involves preprocessing and data augmentation. These steps are crucial for standardizing data, reducing noise, and diversifying the dataset to train robust models that generalize well. The components of preprocessing and data augmentation are described below.

The first preprocessing step standardizes and enhances ultrasound image quality by reducing image noise, standardizing image dimensions, and normalizing pixel intensities. Pixel intensities are scaled to the fixed range [0, 1] (min-max normalization), which standardizes the input data and prevents extreme pixel values from hindering model convergence. The normalization is defined by Equation (1):

$$I_{\text{normalized}} = \frac{I - I_{\min}}{I_{\max} - I_{\min}}$$ (1)

where I is the original pixel intensity, I_min and I_max are the minimum and maximum pixel values in the image. Normalization ensures efficient model learning without sensitivity to varying intensity ranges.
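A minimal sketch of Equation (1) in plain Python, operating on a 2-D list of pixel intensities. The function name is hypothetical, and the guard for a constant image (where Equation 1 would divide by zero) is an added assumption.

```python
def min_max_normalize(image):
    """Scale a 2-D list of pixel intensities to [0, 1] as in Equation (1)."""
    flat = [p for row in image for p in row]
    i_min, i_max = min(flat), max(flat)
    if i_max == i_min:  # constant image: Equation (1) is undefined, return zeros
        return [[0.0 for _ in row] for row in image]
    scale = i_max - i_min
    return [[(p - i_min) / scale for p in row] for row in image]

normalized = min_max_normalize([[0, 64], [128, 255]])
```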

To ensure consistent input dimensions, all images are resized to a fixed height × width (H × W), which is essential for effective training and inference of deep learning architectures. Resizing is given by Equation (2):

$$I_{\text{resized}}(x', y') = I\left(\frac{x'}{s_x}, \frac{y'}{s_y}\right)$$ (2)

where x′ and y′ are the pixel coordinates in the resized image, s_x = W/W_orig and s_y = H/H_orig are the scaling factors for width and height, respectively, and W_orig and H_orig are the original width and height of the image.
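Equation (2) is usually implemented as an inverse mapping: each output pixel looks up the input at (x′/s_x, y′/s_y). The sketch below uses nearest-neighbour sampling for simplicity; the source does not state the interpolation method, and bilinear interpolation is a common choice for images (with nearest-neighbour kept for masks so that labels stay binary). `resize_nearest` is a hypothetical helper.

```python
def resize_nearest(image, new_h, new_w):
    """Nearest-neighbour resize of a 2-D list indexed image[y][x], per Equation (2)."""
    h_orig, w_orig = len(image), len(image[0])
    s_x, s_y = new_w / w_orig, new_h / h_orig  # scaling factors W/W_orig, H/H_orig
    out = []
    for y_new in range(new_h):
        y_src = min(int(y_new / s_y), h_orig - 1)  # floor of y'/s_y, clamped to bounds
        row = []
        for x_new in range(new_w):
            x_src = min(int(x_new / s_x), w_orig - 1)
            row.append(image[y_src][x_src])
        out.append(row)
    return out

print(resize_nearest([[1, 2], [3, 4]], 4, 4))
# [[1, 1, 2, 2], [1, 1, 2, 2], [3, 3, 4, 4], [3, 3, 4, 4]]
```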

Ultrasound images often suffer from speckle noise, which can degrade segmentation performance. It is reduced using a Gaussian filter, which smooths the image by convolving it with the Gaussian kernel of Equation (3):

$$G(x, y) = \frac{1}{2\pi\sigma^{2}} e^{-\frac{x^{2} + y^{2}}{2\sigma^{2}}}$$ (3)

where x and y are pixel offsets from the kernel center and σ is the standard deviation of the Gaussian, which controls the amount of smoothing. The filtered image I_filtered is obtained by convolving G with the original image I, as in Equation (4):

$$I_{\text{filtered}}(x, y) = \sum_{u=-k}^{k} \sum_{v=-k}^{k} I(x - u, y - v)\, G(u, v)$$ (4)

Here, k is the kernel radius, so the kernel spans (2k + 1) × (2k + 1) pixels. This step reduces noise while preserving important anatomical features.
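A direct, unoptimised sketch of Equations (3) and (4). Two choices here are assumptions not stated in the source: the truncated kernel is renormalised to sum to 1 (so flat regions keep their brightness), and pixels beyond the image border are replaced by the nearest edge pixel. The study's values of k and σ are not given; k = 1 and σ = 1.0 below are placeholders.

```python
import math

def gaussian_kernel(k, sigma):
    """(2k+1) x (2k+1) samples of Equation (3), indexed kernel[u + k][v + k]."""
    kernel = [[math.exp(-(u * u + v * v) / (2 * sigma ** 2)) / (2 * math.pi * sigma ** 2)
               for v in range(-k, k + 1)]
              for u in range(-k, k + 1)]
    total = sum(sum(row) for row in kernel)
    # Renormalise: the truncated kernel does not sum exactly to 1.
    return [[g / total for g in row] for row in kernel]

def gaussian_filter(image, k=1, sigma=1.0):
    """Equation (4) on a 2-D list indexed image[y][x], with edge replication."""
    h, w = len(image), len(image[0])
    G = gaussian_kernel(k, sigma)
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for u in range(-k, k + 1):
                for v in range(-k, k + 1):
                    xs = min(max(x - u, 0), w - 1)  # clamp to the nearest edge pixel
                    ys = min(max(y - v, 0), h - 1)
                    acc += image[ys][xs] * G[u + k][v + k]
            out[y][x] = acc
    return out
```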

Data augmentation increases the effective dataset size and introduces variability that mimics real-world variations in fetal head ultrasound images (orientation, scale, contrast). Random rotations within ±15 degrees simulate different fetal head orientations. The rotation transformation is given by Equation (5): $$\begin{bmatrix} x' \\ y' \end{bmatrix} = \begin{bmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{bmatrix} \begin{bmatrix} x \\ y \end{bmatrix}$$ (5)

Where θ is the random rotation angle, (x, y) are original pixel coordinates, and (x′,y′) are new coordinates.

Horizontal and vertical flips simulate different ultrasound probe orientations. For horizontal flipping (Equation 6): $$x' = W - x$$ (6)

For vertical flipping (Equation 7): $$y' = H - y$$ (7)

where W and H are image width and height.

Random scaling is applied with a factor drawn uniformly from [0.9, 1.1] (Equation 8): $$x' = s_x \cdot x, \quad y' = s_y \cdot y$$ (8)

where s_x and s_y are scaling factors.
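The geometric transforms in Equations (5) to (8) can be sketched on point coordinates as below. This is an illustrative sketch, not the study's code: Equation (5) rotates about the origin, so rotating an image about its centre would first shift coordinates to the centre and back; the flip probability of 0.5 and the use of one shared scale factor for both axes are assumptions, since the source gives only the ranges. All helper names are hypothetical.

```python
import math
import random

def rotate_point(x, y, theta_deg):
    """Equation (5): rotate (x, y) about the origin by theta degrees."""
    t = math.radians(theta_deg)
    return (math.cos(t) * x - math.sin(t) * y,
            math.sin(t) * x + math.cos(t) * y)

def hflip_point(x, y, width):
    """Equation (6); with 0-based pixel indices use width - 1 - x instead."""
    return width - x, y

def vflip_point(x, y, height):
    """Equation (7); with 0-based pixel indices use height - 1 - y instead."""
    return x, height - y

def scale_point(x, y, s_x, s_y):
    """Equation (8): scale each axis independently."""
    return s_x * x, s_y * y

def sample_geometric_params(rng):
    """Draw one augmentation setting using the ranges stated in the text."""
    return {
        "theta": rng.uniform(-15.0, 15.0),  # rotation angle in degrees
        "hflip": rng.random() < 0.5,        # flip probability 0.5: assumption
        "vflip": rng.random() < 0.5,
        "scale": rng.uniform(0.9, 1.1),     # shared by both axes: assumption
    }

params = sample_geometric_params(random.Random(42))
```

Whatever parameters are drawn must be applied identically to an image and its segmentation mask, otherwise the ground truth no longer matches the image.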

Elastic deformation introduces realistic ultrasound imaging artifacts by displacing each pixel with a random vector field smoothed by a Gaussian kernel (Equation 9): $$I_{\text{deformed}}(x, y) = I(x + \Delta x, y + \Delta y)$$ (9)

where Δx and Δy are displacement fields.
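Equation (9) can be sketched in the spirit of the classic elastic-distortion augmentation: draw uniform random displacements, smooth them with a Gaussian, scale them by a strength factor, and resample. The details here are hypothetical: the `strength` and `sigma` parameters, the uniform(-1, 1) noise, and the nearest-neighbour lookup are assumptions, the last chosen so the same function can be applied to binary masks without creating new label values. The study's parameter values are not reported.

```python
import math
import random

def gaussian_blur(field, sigma, radius):
    """Separable Gaussian blur of a 2-D list; border values are replicated."""
    weights = [math.exp(-(t * t) / (2 * sigma ** 2)) for t in range(-radius, radius + 1)]
    total = sum(weights)
    weights = [wt / total for wt in weights]
    h, w = len(field), len(field[0])
    # Blur along x, then along y.
    rows = [[sum(weights[t + radius] * field[y][min(max(x + t, 0), w - 1)]
                 for t in range(-radius, radius + 1))
             for x in range(w)] for y in range(h)]
    return [[sum(weights[t + radius] * rows[min(max(y + t, 0), h - 1)][x]
                 for t in range(-radius, radius + 1))
             for x in range(w)] for y in range(h)]

def elastic_deform(image, strength, sigma, rng):
    """Equation (9): I_deformed(x, y) = I(x + dx, y + dy) with smooth random dx, dy."""
    h, w = len(image), len(image[0])
    radius = max(1, int(3 * sigma))
    dx = gaussian_blur([[rng.uniform(-1, 1) for _ in range(w)] for _ in range(h)], sigma, radius)
    dy = gaussian_blur([[rng.uniform(-1, 1) for _ in range(w)] for _ in range(h)], sigma, radius)
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            # Nearest-neighbour lookup at the displaced position, clamped to the image.
            xs = min(max(round(x + strength * dx[y][x]), 0), w - 1)
            ys = min(max(round(y + strength * dy[y][x]), 0), h - 1)
            row.append(image[ys][xs])
        out.append(row)
    return out
```

Because the random draws depend only on the image size, calling `elastic_deform` on an image and on its mask with two `random.Random` objects created from the same seed produces the same displacement field, keeping the pair aligned.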

Random contrast adjustments simulate variations in ultrasound image acquisition (Equation 10): $$I_{\text{adjusted}} = \alpha I + \beta$$ (10)

Where α is a random contrast scaling factor and β is a brightness adjustment factor.
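Equation (10) as a small sketch on images already normalised to [0, 1] by Equation (1). Clipping the result back to [0, 1] and the sampling ranges for α and β are assumptions for illustration; the source does not report them. Only the image is adjusted; the segmentation mask is left unchanged.

```python
import random

def adjust_contrast(image, alpha, beta):
    """Equation (10): I_adjusted = alpha * I + beta, clipped to [0, 1]."""
    return [[min(max(alpha * p + beta, 0.0), 1.0) for p in row] for row in image]

def random_contrast(image, rng, alpha_range=(0.8, 1.2), beta_range=(-0.1, 0.1)):
    """Draw alpha and beta uniformly; the default ranges are illustrative only."""
    alpha = rng.uniform(*alpha_range)
    beta = rng.uniform(*beta_range)
    return adjust_contrast(image, alpha, beta)
```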

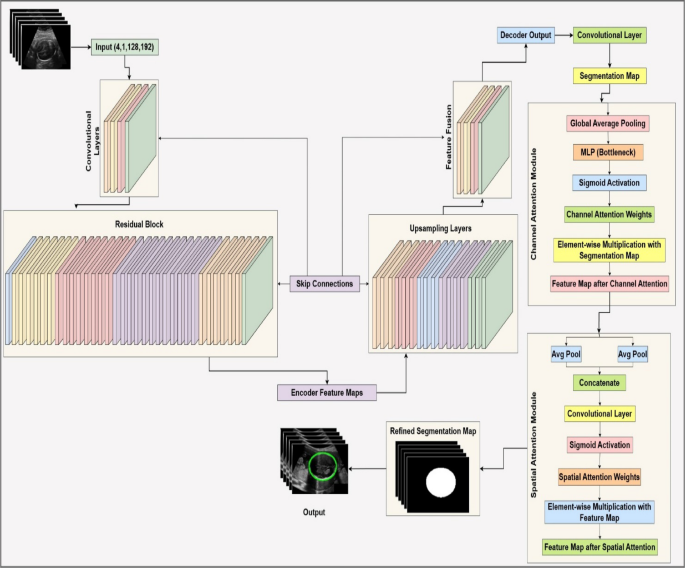

Under the Hood: The ResAttentionUNet++ Model Architecture

ResAttentionUNet++, a modified U-Net architecture with an attention block, is proposed for fetal head ultrasound segmentation. It combines deep feature extraction, feature fusion, and attention mechanisms to improve segmentation. The model consists of an input layer, an encoder (ResNet-34), a decoder (U-Net++), a segmentation head, and an attention block. U-Net++ is chosen for its nested, dense skip connections, which improve feature fusion and spatial information capture compared with the vanilla U-Net. The encoder uses ResNet-34, a proven backbone whose residual learning keeps gradients flowing, enabling high-level semantic feature extraction without vanishing gradients. This choice is supported by a comparison with SegNet and U-Net with ResNet-34, which shows the benefits of ResAttentionUNet++ on complex fetal ultrasound images. The overall architecture is shown in Figure 3.

Input

Input consists of grayscale ultrasound images, as a tensor of size (B, 1, H, W), where B is batch size, 1 indicates grayscale, and H, W are height and width. Images are preprocessed (normalized, resized if needed) before encoding.

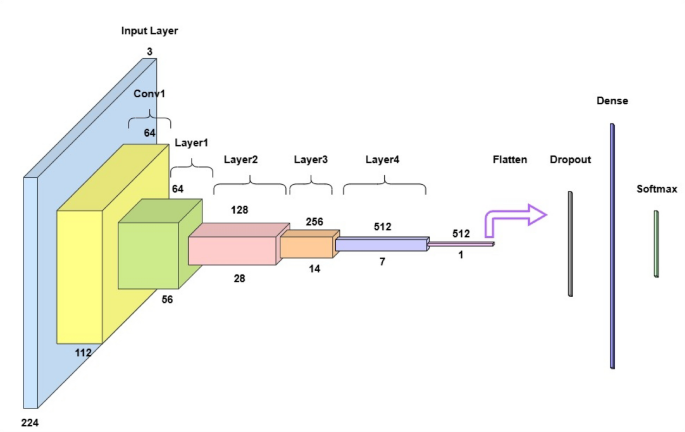

ResNet-34 as encoder

A ResNet-34 backbone pre-trained on ImageNet extracts hierarchical feature maps. It includes convolutional layers, residual blocks, and downsampling.

- Convolutional layers: Applied to learn feature representations. Each operation is (Equation 11): $$F_{i+1} = \sigma(W_i * F_i + b_i)$$ (11) Here, F_i is the input feature map, W_i and b_i are the weights and biases, * is convolution, and σ is the activation function (typically ReLU).

- Residual blocks: Enable easier gradient flow during backpropagation. An operation within a block is (Equation 12): $$F_{\text{out}} = \sigma(F_{\text{in}} + R(F_{\text{in}}))$$ (12) where F_in is the input, R(·) is the residual transformation (convolution + batch normalization + ReLU), and F_out is the output.
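To make Equations (11) and (12) concrete, the toy sketch below works on a 1-D signal with one small odd-length kernel. It is a simplification, not ResNet-34 itself: real ResNet-34 blocks use two 3×3 2-D convolutions with batch normalisation, which are omitted here, and R(·) is reduced to a single convolution followed by ReLU. What it illustrates is the identity path: when R contributes nothing, the block still passes its input through.

```python
def relu(values):
    return [max(0.0, v) for v in values]

def conv1d_same(signal, kernel, bias=0.0):
    """1-D cross-correlation (what DL libraries call convolution), zero-padded to keep length."""
    k = len(kernel) // 2
    n = len(signal)
    return [sum(kernel[j] * signal[i + j - k] for j in range(len(kernel)) if 0 <= i + j - k < n)
            + bias for i in range(n)]

def conv_layer(f_in, kernel, bias=0.0):
    """Equation (11): F_{i+1} = ReLU(W_i * F_i + b_i)."""
    return relu(conv1d_same(f_in, kernel, bias))

def residual_block(f_in, kernel, bias=0.0):
    """Equation (12): F_out = ReLU(F_in + R(F_in)), with R = one conv layer here."""
    r = conv_layer(f_in, kernel, bias)
    return relu([a + b for a, b in zip(f_in, r)])

# With an all-zero kernel, R(F_in) = 0 and the block reduces to ReLU(F_in).
print(residual_block([1.0, -2.0, 3.0], [0.0, 0.0, 0.0]))  # [1.0, 0.0, 3.0]
```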

Figure 4 depicts the ResNet-34 architecture for image classification. The encoder generates multi-scale feature maps {F1, F2, ..., Fn} at different spatial resolutions.

U-Net++ as decoder

The decoder reconstructs high-resolution feature maps from encoded features using upsampling layers and dense skip connections, inspired by U-Net++'s nested skip connections for feature fusion.

- Upsampling layers: Transposed convolutions increase the spatial resolution of the feature maps (Equation 13): $$F_{\text{upsampled}} = F_{\text{input}} \otimes K_{\text{transpose}}$$ (13) where F_input is the input feature map, K_transpose is the transposed convolution kernel, and ⊗ denotes transposed convolution.

- Skip connections: Combine encoder and decoder features at corresponding resolutions. The combined feature map is (Equation 14): $$F_{\text{combined}} = \operatorname{concat}(F_{\text{encoder}}, F_{\text{decoder}})$$ (14) where concat(·) is channel-wise concatenation.

The decoder thus generates high-resolution feature maps, and a segmentation head, typically a convolutional layer with an activation function, transforms its output into an initial segmentation map (Equation 15): $$S_{\text{initial}} = \sigma(W_{\text{seg}} * F_{\text{decoder}} + b_{\text{seg}})$$ (15) where W_seg and b_seg are weights and biases and σ is the activation function (e.g., sigmoid).
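The three decoder operations can be sketched on single-channel 2-D lists as follows. This is illustrative only: the kernel values, the stride of 2, and the 1×1 segmentation-head kernel are assumptions (the source does not give layer hyperparameters), and the nested U-Net++ node structure is not modelled. With a 2×2 kernel and stride 2, the transposed convolution exactly doubles the spatial size, a common choice for upsampling.

```python
import math

def transposed_conv2d(x, kernel, stride=2):
    """Equation (13) for one channel, no padding: each input pixel scatters a
    scaled copy of the kernel, giving ((H-1)*stride + kh) x ((W-1)*stride + kw)."""
    h, w = len(x), len(x[0])
    kh, kw = len(kernel), len(kernel[0])
    out = [[0.0] * ((w - 1) * stride + kw) for _ in range((h - 1) * stride + kh)]
    for i in range(h):
        for j in range(w):
            for a in range(kh):
                for b in range(kw):
                    out[i * stride + a][j * stride + b] += x[i][j] * kernel[a][b]
    return out

def concat_channels(encoder_feats, decoder_feats):
    """Equation (14): channel-wise concatenation of two lists of 2-D maps."""
    enc, dec = encoder_feats[0], decoder_feats[0]
    if (len(enc), len(enc[0])) != (len(dec), len(dec[0])):
        raise ValueError("encoder and decoder maps must have the same spatial size")
    return encoder_feats + decoder_feats

def segmentation_head(feats, weights, bias):
    """Equation (15) with a 1x1 kernel: per-pixel weighted sum over channels, then sigmoid."""
    h, w = len(feats[0]), len(feats[0][0])
    return [[1.0 / (1.0 + math.exp(-(sum(wc * f[y][x] for wc, f in zip(weights, feats)) + bias)))
             for x in range(w)] for y in range(h)]
```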

Attention block

The attention block refines the initial segmentation map by emphasizing informative features and suppressing less relevant ones. It includes channel and spatial attention modules.

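- Channel attention module: Identifies 'what' is important by weighting channel-wise features (Equation 16): $$C_{\text{attention}} = \sigma(\operatorname{MLP}(\operatorname{GAP}(F_{\text{input}})))$$ (16) where GAP(·) is global average pooling, MLP is a small multilayer perceptron, and σ is the sigmoid activation.

- Spatial attention module: Focuses on 'where' important information lies by weighting spatial features (Equation 17): $$S_{\text{attention}} = \sigma(M_{\text{conv}}([\operatorname{AvgPool}(F_{\text{channel}}), \operatorname{MaxPool}(F_{\text{channel}})]))$$ (17) where F_channel is the feature map after channel attention, AvgPool and MaxPool are taken across channels, [·, ·] denotes concatenation, M_conv is a convolution, and σ is the sigmoid activation.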
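A minimal sketch of Equations (16) and (17) on a list of 2-D channel maps, applied in the channel-then-spatial order implied by F_channel. Two simplifications are assumptions: M_conv is reduced to a 1×1 "convolution" (a weighted sum of the average- and max-pooled maps at each pixel, where modules of this kind typically use a larger kernel), and the MLP weights are passed in directly rather than learned. Function and parameter names are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def channel_attention(feats, w1, w2):
    """Equation (16): one weight per channel, sigma(MLP(GAP(F))).

    feats: list of C 2-D maps. w1 (hidden x C) and w2 (C x hidden) are the
    weights of a two-layer MLP with a ReLU in between (biases omitted).
    """
    gap = [sum(map(sum, f)) / (len(f) * len(f[0])) for f in feats]
    hidden = [max(0.0, sum(wt * g for wt, g in zip(row, gap))) for row in w1]
    return [sigmoid(sum(wt * hv for wt, hv in zip(row, hidden))) for row in w2]

def spatial_attention(feats, w_avg, w_max, bias=0.0):
    """Equation (17) with a 1x1 'convolution' over [AvgPool, MaxPool] across channels."""
    h, w = len(feats[0]), len(feats[0][0])
    att = []
    for y in range(h):
        row = []
        for x in range(w):
            vals = [f[y][x] for f in feats]
            row.append(sigmoid(w_avg * sum(vals) / len(vals) + w_max * max(vals) + bias))
        att.append(row)
    return att

def apply_attention(feats, w1, w2, w_avg, w_max):
    """Reweight channels (giving F_channel), then reweight pixels with the spatial map."""
    c_att = channel_attention(feats, w1, w2)
    f_channel = [[[a * v for v in row] for row in f] for a, f in zip(c_att, feats)]
    s_att = spatial_attention(f_channel, w_avg, w_max)
    return [[[s_att[y][x] * f[y][x] for x in range(len(f[0]))] for y in range(len(f))]
            for f in f_channel]
```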

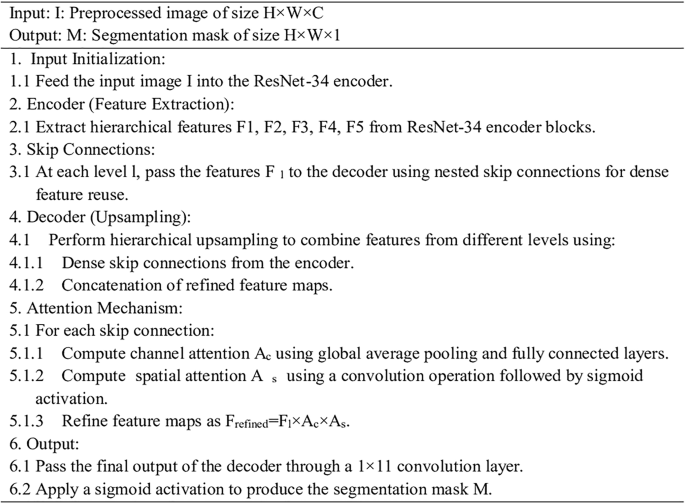

The attention-enhanced feature map is used to generate the final segmentation map. The algorithm of the proposed ResAttentionUNet++ is shown below.

Measuring Success: Key Performance Indicators

This section explains the performance metrics used to evaluate the proposed model: accuracy, training/validation loss curves, IoU, specificity, precision, recall, F1-score, ROC curves with AUC, and Hausdorff distance.

Accuracy

Measures the proportion of correctly classified instances (Equation 18): $$\text{Accuracy} = \frac{\text{True Positives} + \text{True Negatives}}{\text{Total number of instances}}$$ (18)

Loss curves

Loss curves plot the model's loss function value over training epochs. For classification, categorical cross-entropy is a common loss (Equation 19): $$\text{Loss} = -\frac{1}{N} \sum_{i=1}^{N} \sum_{c=1}^{C} y_{ic} \log(\hat{y}_{ic})$$ (19) where N is the total number of instances, C is the number of classes, y_ic is a binary indicator that equals 1 if instance i belongs to class c, and ŷ_ic is the predicted probability that instance i belongs to class c.
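Equation (19) in plain Python. For segmentation, each pixel can be treated as one instance with C = 2 classes (fetal head and background); the source does not state which loss was used to train the models, so this is illustrative. The small `eps` clamp is an added assumption to avoid log(0).

```python
import math

def categorical_cross_entropy(y_true, y_pred, eps=1e-12):
    """Equation (19): mean over N instances of -sum_c y_ic * log(y_hat_ic).

    y_true: one-hot rows; y_pred: predicted probability rows (each summing to 1).
    """
    total = 0.0
    for t_row, p_row in zip(y_true, y_pred):
        total -= sum(t * math.log(max(p, eps)) for t, p in zip(t_row, p_row))
    return total / len(y_true)

# Two pixels, both predicted 50/50: the loss is log(2).
loss = categorical_cross_entropy([[1, 0], [0, 1]], [[0.5, 0.5], [0.5, 0.5]])
```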

Precision

Measures the proportion of true positive predictions among all positive predictions (Equation 20): $$\text{Precision} = \frac{\text{True Positives}}{\text{True Positives} + \text{False Positives}}$$ (20)

Recall

Also known as sensitivity or true positive rate, recall quantifies the proportion of actual positives that are correctly detected (Equation 21): $$\text{Recall} = \frac{\text{True Positives}}{\text{True Positives} + \text{False Negatives}}$$ (21)

F1-score

The harmonic mean of precision and recall (Equation 22): $$\text{F1-score} = 2 \times \frac{\text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$$ (22)
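The pixel-wise confusion counts behind Equations (18) to (22), together with the IoU and specificity reported in the results, can be computed from a predicted and a ground-truth binary mask as sketched below. For binary masks, the pixel-wise F1-score equals the Dice coefficient. Returning 0.0 when a denominator is zero is an assumption, and the source does not say whether metrics were averaged per image or pooled over the test set.

```python
def confusion_counts(pred, truth):
    """Pixel-wise TP, FP, TN, FN for two binary masks given as 2-D lists of 0/1."""
    tp = fp = tn = fn = 0
    for p_row, t_row in zip(pred, truth):
        for p, t in zip(p_row, t_row):
            if p and t:
                tp += 1
            elif p:
                fp += 1
            elif t:
                fn += 1
            else:
                tn += 1
    return tp, fp, tn, fn

def safe_div(num, den):
    return num / den if den else 0.0

def segmentation_metrics(pred, truth):
    tp, fp, tn, fn = confusion_counts(pred, truth)
    precision = safe_div(tp, tp + fp)                               # Eq. (20)
    recall = safe_div(tp, tp + fn)                                  # Eq. (21)
    return {
        "accuracy": safe_div(tp + tn, tp + fp + tn + fn),           # Eq. (18)
        "precision": precision,
        "recall": recall,
        "f1": safe_div(2 * precision * recall, precision + recall),  # Eq. (22)
        "specificity": safe_div(tn, tn + fp),
        "iou": safe_div(tp, tp + fp + fn),
    }

metrics = segmentation_metrics([[1, 1, 0], [0, 1, 0]], [[1, 0, 0], [0, 1, 1]])
```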

ROC curve

The ROC curve plots the true positive rate (recall) against the false positive rate (FPR) at different classification thresholds. FPR is defined as (Equation 23): $$\text{False Positive Rate (FPR)} = \frac{\text{False Positives}}{\text{False Positives} + \text{True Negatives}}$$ (23)

Area under the ROC curve (AUC)

The AUC is the probability that the model scores a randomly selected positive instance higher than a randomly selected negative one. A higher AUC indicates better discrimination between classes.
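ROC points and AUC can be computed from per-pixel probabilities and binary labels as sketched below, assuming both classes are present. Sweeping the threshold over every distinct score gives the ROC curve, the trapezoidal area under it gives the AUC, and the pairwise ranking form computes the same quantity directly from the probability definition above (ties count one half). Function names are illustrative; a production pipeline would sort once rather than rescan the scores for every threshold.

```python
def roc_points(scores, labels):
    """(FPR, TPR) pairs, sweeping the threshold down through every distinct score."""
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    points = [(0.0, 0.0)]
    for thr in sorted(set(scores), reverse=True):
        tp = sum(1 for s, lab in zip(scores, labels) if s >= thr and lab == 1)
        fp = sum(1 for s, lab in zip(scores, labels) if s >= thr and lab == 0)
        points.append((fp / n_neg, tp / n_pos))  # (FPR from Eq. 23, recall)
    return points

def auc_trapezoid(points):
    """Area under the ROC curve by the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2 for (x0, y0), (x1, y1) in zip(points, points[1:]))

def auc_rank(scores, labels):
    """P(score of a random positive > score of a random negative); ties count 1/2."""
    pos = [s for s, lab in zip(scores, labels) if lab == 1]
    neg = [s for s, lab in zip(scores, labels) if lab == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))
```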

Hausdorff distance (HD)

Measures the maximum distance between two sets of points, assessing the accuracy of segmentation boundaries. For point sets A and B with distance d (Equation 24): $$H(A, B) = \max\left\{ \sup_{a \in A} \inf_{b \in B} d(a, b),\ \sup_{b \in B} \inf_{a \in A} d(a, b) \right\}$$ (24) A lower HD indicates better boundary alignment, which is especially important for the complex regions found in fetal ultrasound.
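For finite point sets, such as boundary pixels extracted from the predicted and ground-truth masks, the supremum and infimum in Equation (24) become a maximum and a minimum. The brute-force sketch below is O(|A|·|B|) and uses Euclidean distance via `math.dist` (Python 3.8+). Results are in pixel units; reporting HD in millimetres would require multiplying by the pixel spacing, which this sketch does not model.

```python
import math

def directed_hausdorff(a, b):
    """Max over points in A of the distance to the nearest point in B."""
    return max(min(math.dist(p, q) for q in b) for p in a)

def hausdorff_distance(a, b):
    """Equation (24) for finite point sets: the larger of the two directed distances."""
    return max(directed_hausdorff(a, b), directed_hausdorff(b, a))

pred_boundary = [(0, 0), (1, 0)]
true_boundary = [(0, 0), (4, 0)]
print(hausdorff_distance(pred_boundary, true_boundary))  # 3.0
```

The example shows why both directions matter: every predicted point lies within 1 pixel of the ground truth, but the ground-truth point (4, 0) is 3 pixels from the nearest prediction.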

Unpacking the Results: How Our Model Performed

This section compares the segmentation performance of SegNet, UNet with ResNet-34, and UNet++ with ResNet-34 and an attention block on fetal head ultrasound images. Quantitative results on key metrics (IoU, precision, recall) highlight each model's strengths and weaknesses, and qualitative comparisons (segmentation maps, visual examples) show how the models handle the complexities of ultrasound imagery. The proposed UNet++ with ResNet and an attention block is more robust than the other tested networks in delineating complex boundaries and resolving ambiguities.

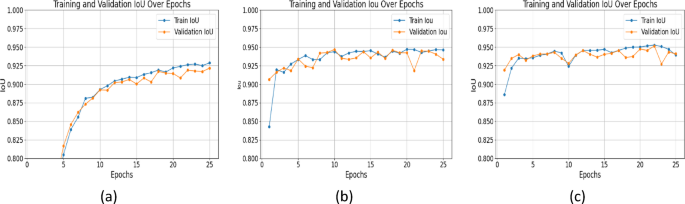

Intersection over union (IoU) analysis

IoU is a critical metric for segmentation accuracy; higher values mean better overlap between predicted and ground truth masks. UNet++ with ResNet+Attention Block outperformed others across all epochs. At epoch 25, IoU values were: SegNet: 0.9217, UNet with ResNet: 0.9448, UNet++ with ResNet+Attention Block: 0.9527 (Figure 5). The attention mechanism in UNet++ improved delineation of structural details, leading to more accurate segmentation. SegNet's IoU showed little increase after early epochs, indicating poorer ability to represent detailed structures.

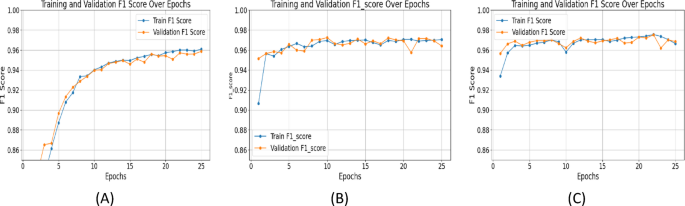

F1-Score

The F1-score balances precision and recall. UNet++ with ResNet+Attention Block achieved an F1-score of 0.9756 at epoch 25, outperforming SegNet (0.9587) and UNet with ResNet (0.9715) (Figure 6). Consistently high F1-scores for UNet++ indicate accurate and reliable segmentations with few false positives and false negatives, which is essential for clinical applications.

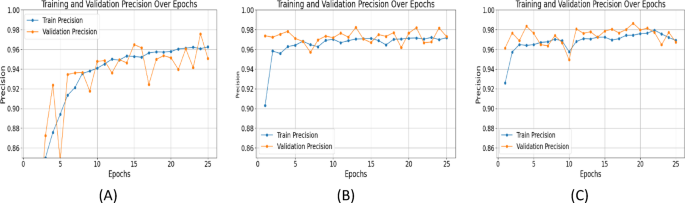

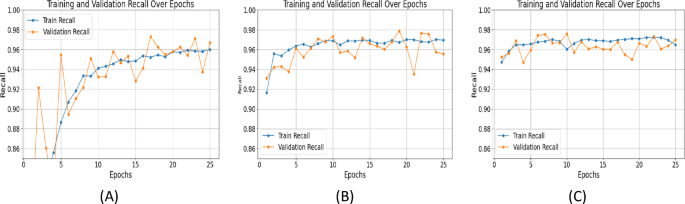

Precision and recall

Precision measures the accuracy of positive predictions, while recall evaluates the model's ability to capture all relevant features. UNet++ with ResNet+Attention Block excelled in both: Precision (Epoch 25): SegNet: 0.9508, UNet with ResNet: 0.9721, UNet++ with ResNet+Attention Block: 0.9795 (Figure 7). Recall (Epoch 25): SegNet: 0.9671, UNet with ResNet: 0.9675, UNet++ with ResNet+Attention Block: 0.9725 (Figure 8). High precision and recall for UNet++ demonstrate its effectiveness in identifying and localizing features in fetal ultrasound images.

Specificity

Specificity measures correct classification of negative cases. It's important in medical imaging to avoid false positives. At epoch 25, specificity values: SegNet: 0.9868, UNet with ResNet: 0.9889, UNet++ with ResNet+Attention Block: 0.9913 (Figure 9). Higher specificity of UNet++ shows its ability to differentiate fetal head from surroundings, reducing false alarms.

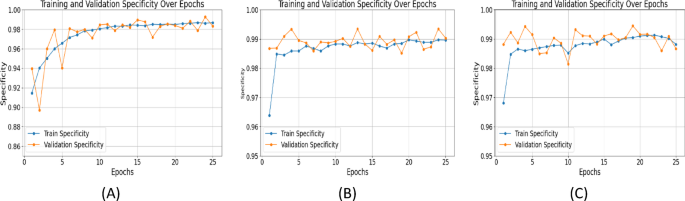

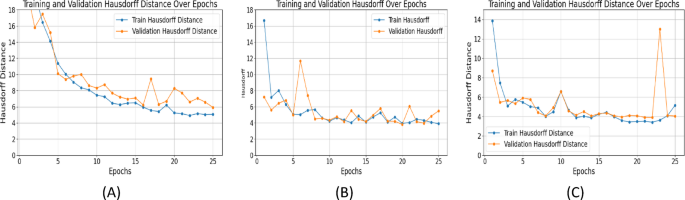

Area under the curve

AUC quantifies overall classification performance. UNet++ with ResNet+Attention Block achieved an AUC of 0.9994, outperforming SegNet (0.9983) and UNet with ResNet (0.9990) (Figure 10), highlighting the attention mechanism's contribution to segmentation quality.

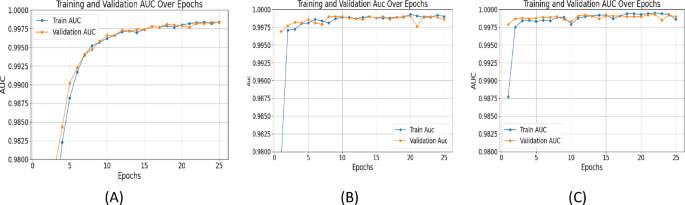

Hausdorff distance

HD evaluates boundary segmentation accuracy. Lower values are better. By epoch 25, Hausdorff distances: SegNet: 5.9159, UNet with ResNet: 3.9705, UNet++ with ResNet+Attention Block: 3.4113 (Figure 11). The attention mechanism in UNet++ was crucial for precise boundary segmentation of poorly defined structures.

Comparative analysis of three models

Epoch-wise performance analysis: SegNet improved sharply at first and then plateaued. UNet with ResNet improved consistently during training, but its validation metrics fluctuated, indicating overfitting. UNet++ with ResNet+Attention Block delivered stable, continuous improvement with minimal fluctuation in validation metrics, indicating robust generalization. A summary of the results is given in Table 2.

Table 2: Summary of all the results.

Challenging scenarios

Models were evaluated on low contrast images, irregular shapes, and noisy data. IoU values are in Table 3:

Table 3: Challenging scenarios.

UNet++ with ResNet+attention block performed best and remained robust under these challenging conditions, with the attention mechanism helping it focus on relevant features and suppress noise.

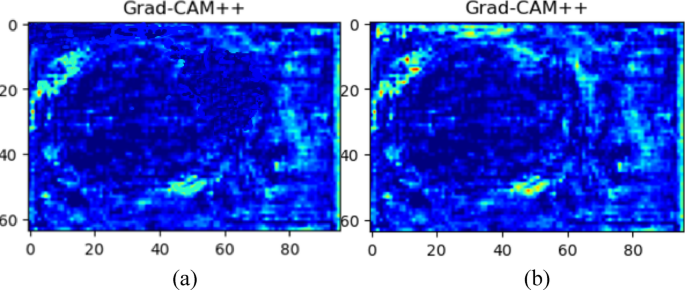

Seeing is Believing: Explainable AI with Grad-CAM++

Implementing Gradient-weighted Class Activation Mapping++ (Grad-CAM++) improved transparency and clinical interpretability. Grad-CAM++ provides visual feedback on which areas of an ultrasound image drive the model's predictions, and its visualizations (Figure 12) let physicians verify where the model focuses and build trust in it. Figure 12a shows U-Net with ResNet, and Figure 12b shows the proposed U-Net++ model with ResNet and the attention module. The activation of U-Net with ResNet spreads well beyond the fetal head boundary, whereas the proposed model's map is more precise, with activation concentrated on the true head contour. We attribute this improvement to the deeper U-Net++ architecture and the feature refinement provided by the attention mechanism. Integrating Grad-CAM++ makes the model's reasoning visible, which helps verify segmentation accuracy and increases clinical utility, supporting its use as a trustworthy system for fetal ultrasound examination.
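The core Grad-CAM++ computation, given one layer's activation maps A^k and the gradients g of a target score with respect to them, can be sketched as below. Obtaining those gradients requires a deep-learning framework and is outside this sketch; for segmentation, one assumed choice of target score is the sum of the predicted fetal-head logits. Following the usual formulation, higher-order derivatives are approximated by powers of the first-order gradient, giving per-pixel coefficients α = g² / (2g² + ΣA · g³). Upsampling the map to the input resolution is omitted, and the function name is hypothetical.

```python
def grad_cam_plus_plus(activations, gradients):
    """Grad-CAM++ map from K activation maps A^k and their gradients (2-D lists each)."""
    h, w = len(activations[0]), len(activations[0][0])
    cam = [[0.0] * w for _ in range(h)]
    for a_map, g_map in zip(activations, gradients):
        a_sum = sum(map(sum, a_map))  # sum over all pixels of A^k
        weight = 0.0
        for y in range(h):
            for x in range(w):
                g = g_map[y][x]
                denom = 2 * g ** 2 + a_sum * g ** 3
                alpha = g ** 2 / denom if denom != 0 else 0.0
                weight += alpha * max(g, 0.0)  # only positive gradients contribute
        for y in range(h):
            for x in range(w):
                cam[y][x] += weight * a_map[y][x]
    cam = [[max(v, 0.0) for v in row] for row in cam]  # ReLU on the weighted sum
    peak = max(max(row) for row in cam)
    return [[v / peak for v in row] for row in cam] if peak > 0 else cam
```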

Benchmarking Our AI: Comparison with State-of-the-Art

Advances in fetal ultrasound segmentation aim to improve accuracy and efficiency. Recent studies on fetal head (FH) segmentation include Z. Zhou et al.'s (2025) AoP-SAM for FH and pubic symphysis (PS) segmentation, A. Chougule's HRNet-based approach (Dice 96%, IoU 93%), and Zhensen Chen's DBRN (Dice 90.8%, HD 3.396 mm). Our proposed ResAttentionUNet++ (Dice 97.52%, IoU 95.15%, HD 3.91 mm), which combines a ResNet backbone with attention mechanisms, achieves superior performance on most metrics. These comparisons are indicative only, because the studies used different datasets and conditions; standardized evaluation is left to future work.
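Dice and IoU are linked: for a single prediction and ground truth, Dice = 2·IoU / (1 + IoU), because |A| + |B| = |A ∪ B| + |A ∩ B|. As a quick consistency check of the figures above, converting the reported IoU of 95.15% gives a Dice of about 97.5%, in line with the reported 97.52%. The identity is exact only per image; metrics averaged over a test set need not satisfy it exactly.

```python
def dice_from_iou(iou):
    """Convert IoU to Dice for one mask pair: Dice = 2 * IoU / (1 + IoU)."""
    return 2 * iou / (1 + iou)

print(f"{dice_from_iou(0.9515):.4f}")
```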

Related biomedical imaging progress supports deep learning and attention mechanisms. CenterFormer addressed unconstrained medical image segmentation. A deep learning model for super-resolution ultrasound microvessel imaging highlighted neural networks' potential in enhancing resolution. Deep denoising in ultrasound localization microscopy and edge/body fusion for skin lesion segmentation align with our approach. These studies validate ResAttentionUNet++ design principles. Summary of state-of-the-art is in Table 4.

Table 4: Summary of state-of-the-art comparison.

Discussion: Impact and Insights

The proposed model advances fetal head segmentation over existing methods, improving key metric values. To combine interpretability with high accuracy, it uses Grad-CAM++ for explainability, producing clear visualizations of the regions the model focuses on. The model achieved a Dice Similarity Coefficient of 97.52%, an IoU of 95.15%, and a Hausdorff Distance of 3.91 mm, outperforming comparable approaches such as AFG-net and ECAU-Net in segmentation precision. Compared with many other methods, its combination of dynamic weighting and a hybrid architecture improves spatial feature extraction and boundary detection, especially on low-quality ultrasound images. The results also remain robust across challenging conditions such as low contrast, irregular shapes, and noise. In addition, the Grad-CAM++ heatmaps give clinicians a more intuitive understanding of the model’s predictions, supporting trust in its real-world use. Overall, the proposed method can help advance automated, accurate, and interpretable fetal head segmentation for clinical use.

Conclusion: Advancing Fetal Health with AI

This study provides a robust framework for fetal ultrasound segmentation using an enhanced U-Net++ with a ResNet backbone. The added attention block considerably improves feature extraction and segmentation precision, helping the model cope with poor-quality ultrasound images and complex fetal anatomy. Experimental results show a Dice Similarity Coefficient of 97.52%, an IoU of 95.15%, and a Hausdorff distance of 3.91 mm, outperforming state-of-the-art models such as AFG-net, ECAU-Net, and ResU-Net-c. The ResNet backbone improved the capture of fine structural details, while the attention block limited the propagation of irrelevant features by differentiating relevant regions and suppressing noise. The model clearly outperformed AFG-net (Dice: 92.11%, IoU: 86.15%) and ECAU-Net (Dice: 91.35%, IoU: 86.01%), and the low Hausdorff Distance of 3.91 mm confirms its ability to identify boundaries accurately. Additionally, Grad-CAM++ lets clinicians see which image regions most influenced each prediction, encouraging trust and clinical adoption. By providing accurate segmentation and explainable predictions, the proposed model addresses a critical need for automated, reliable tools in prenatal care and underscores deep learning's potential to transform fetal health monitoring. Its accuracy and its ability to process noisy, complex images set it apart from other methods.
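The three metrics quoted above can be reproduced from binary masks. The sketch below is a minimal NumPy/SciPy illustration, not the authors' evaluation code. It assumes 2-D binary masks, measures the Hausdorff distance between mask boundaries (one common convention; others use all foreground pixels or a 95th-percentile variant), and takes the pixel spacing in millimetres as an input, because the conversion to mm depends on the scanner and is not given here. The function names are hypothetical.

```python
import numpy as np
from scipy.ndimage import binary_erosion
from scipy.spatial.distance import directed_hausdorff


def dice_coefficient(pred: np.ndarray, target: np.ndarray, eps: float = 1e-8) -> float:
    """Dice = 2|A ∩ B| / (|A| + |B|) for binary masks A (pred) and B (target)."""
    a, b = pred.astype(bool), target.astype(bool)
    inter = np.logical_and(a, b).sum()
    return float((2.0 * inter + eps) / (a.sum() + b.sum() + eps))


def iou_score(pred: np.ndarray, target: np.ndarray, eps: float = 1e-8) -> float:
    """IoU = |A ∩ B| / |A ∪ B| for binary masks."""
    a, b = pred.astype(bool), target.astype(bool)
    inter = np.logical_and(a, b).sum()
    union = np.logical_or(a, b).sum()
    return float((inter + eps) / (union + eps))


def _boundary(mask: np.ndarray) -> np.ndarray:
    """Foreground pixels with a background 4-neighbour or touching the image edge."""
    m = mask.astype(bool)
    return m & ~binary_erosion(m)


def hausdorff_mm(pred: np.ndarray, target: np.ndarray,
                 spacing_mm=(1.0, 1.0)) -> float:
    """Symmetric Hausdorff distance between mask boundaries, in millimetres.

    spacing_mm is the assumed (row, column) pixel size; it is scanner-dependent.
    """
    scale = np.asarray(spacing_mm, dtype=float)
    p = np.argwhere(_boundary(pred)) * scale
    t = np.argwhere(_boundary(target)) * scale
    if len(p) == 0 or len(t) == 0:
        return float("inf")  # undefined when either mask is empty
    # The symmetric distance is the larger of the two directed distances.
    return float(max(directed_hausdorff(p, t)[0], directed_hausdorff(t, p)[0]))
```

For example, two 4 × 4 squares offset diagonally by one pixel give a Dice of 0.5625 and an IoU of 9/23, and with 1 mm pixels their boundary Hausdorff distance is √2 ≈ 1.41 mm.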

The Road Ahead: Future Work and Limitations

Several aspects of the ResAttentionUNet++ architecture need improvement. Although it was thoroughly evaluated on high-quality, radiologist-labeled data, its adaptability to varied clinical settings needs further investigation. Future work includes verifying performance on diverse external databases with different image acquisition conditions and equipment, and incorporating structured feedback from medical professionals to assess user experience and deployment feasibility. The model's computational demands (a ResNet-34 backbone plus attention blocks) may make it unsuitable for resource-limited edge computing, so future research will develop lighter architectures. The current investigation focuses on ultrasound; extending the framework to multiple imaging modalities (e.g., combining ultrasound with fetal MRI) could improve diagnostic productivity. Future work should also establish methods for measuring the effectiveness of Grad-CAM++ explanations and correlate AI explainability with clinician trust and diagnostic agreement, to improve clinical accessibility.
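A natural first step toward measuring explanation quality is computing the Grad-CAM++ heatmap itself from one layer's activations and the gradients of a target score with respect to them. The NumPy sketch below follows the closed-form Grad-CAM++ weighting commonly used in open-source implementations; extracting the activations and gradients from the trained network is assumed to be handled by the deep learning framework. The `heatmap_mass_inside` score (the fraction of heatmap mass that falls inside the ground-truth head mask) is a hypothetical example of a quantitative explainability check, not a method from this study.

```python
import numpy as np


def grad_cam_plus_plus(activations: np.ndarray, gradients: np.ndarray,
                       eps: float = 1e-7) -> np.ndarray:
    """Grad-CAM++ heatmap for one image.

    activations, gradients: arrays of shape (K, H, W) with the K feature maps of
    the chosen layer and d(score)/d(activation). Returns an (H, W) map in [0, 1].
    """
    grads2 = gradients ** 2
    grads3 = grads2 * gradients
    # Sum of each feature map over all spatial positions, shape (K, 1, 1).
    sum_act = activations.sum(axis=(1, 2), keepdims=True)
    # Per-pixel weighting coefficients alpha (closed-form Grad-CAM++ approximation).
    alpha = grads2 / (2.0 * grads2 + sum_act * grads3 + eps)
    alpha = np.where(gradients != 0, alpha, 0.0)
    # Channel weights: alpha-weighted sum of the positive gradients, shape (K,).
    weights = (alpha * np.maximum(gradients, 0.0)).sum(axis=(1, 2))
    # Weighted combination of feature maps, keeping only positive evidence.
    cam = np.maximum(np.tensordot(weights, activations, axes=1), 0.0)
    peak = cam.max()
    return cam / peak if peak > 0 else cam


def heatmap_mass_inside(cam: np.ndarray, mask: np.ndarray) -> float:
    """Hypothetical check: share of total heatmap mass inside a binary mask."""
    total = cam.sum()
    if total <= 0:
        return 0.0
    return float(cam[mask.astype(bool)].sum() / total)
```

In practice the heatmap has the spatial size of the chosen layer and must be resized to the image before it is compared with a mask; the sketch assumes the two already match.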

Data Source

The dataset used in this study is publicly available at Kaggle: https://www.kaggle.com/datasets/ankit8467/fetal-head-ultrasound-dataset-for-image-segment.

References

- Cornthwaite, K. R., Bahl, R., Lattey, K. & Draycott, T. Management of impacted fetal head at Cesarean delivery. Am. J. Obstet. Gynecol. 230, S980–S987 (2024).

- Ghi, T. & Dall’Asta, A. Sonographic evaluation of the fetal head position and attitude during labor. Am. J. Obstet. Gynecol. 230, S890–S900 (2024).

- Rybak-Krzyszkowska, M. et al. Ultrasonographic signs of cytomegalovirus infection in the fetus—a systematic review of the literature. Diagnostics (Basel), 13, 2397 (2023).

- Marra, M. C. et al. Modeling fetal cortical development by quantile regression for gestational age and head circumference: a prospective cross sectional study. J. Perinat. Med. 51 (9), 1212–1219 (2023).

- Ramirez Zegarra, R. & Ghi, T. Use of artificial intelligence and deep learning in fetal ultrasound imaging. Ultrasound Obstet. Gynecol. 62 (2), 185–194 (2023).

- Iyyanar, G., Gunasekaran, K. & George, M. Hybrid approach for effective segmentation and classification of glaucoma disease using UNet++ and CapsNet. Rev. Intell. Artif. 38 (2), 613–621 (2024).

- Weichert, J. & Scharf, J. L. Advancements in artificial intelligence for fetal neurosonography: a comprehensive review. J. Clin. Med. 13, 5626 (2024).

- Pan, Y., Niu, L., Yang, X., Niu, Q. & Chen, B. EBTNet: efficient bilateral token mixer network for fetal cardiac ultrasound image segmentation. IEEE Access 12, 1–1 (2024).

- Alzubaidi, M., Shah, U., Agus, M. & Househ, M. FetSAM: advanced segmentation techniques for fetal head biometrics in ultrasound imagery. IEEE Open. J. Eng. Med. Biol. 5, 281–295 (2024).

- Liu, L., Tang, D., Li, X. & Ouyang, Y. Automatic fetal ultrasound image segmentation of first trimester for measuring biometric parameters based on deep learning. Multimed Tools Appl. 83 (9), 27283–27304 (2023).

- Chao-Ran, S. S., Da, C. & Kang, Y. Automatic measurement method for fetal head circumference based on convolution neural network. J. Northeast. Univ. (Natural Science) 44, 1571–1577 (2023).

- Chen, Z., Ou, Z., Lu, Y. & Bai, J. Direction-guided and multi-scale feature screening for fetal head–pubic symphysis segmentation and angle of progression calculation. Expert Syst. Appl. 245, 123096 (2024).

- Chen, G., Bai, J., Ou, Z., Lu, Y. & Wang, H. PSFHS: intrapartum ultrasound image dataset for AI-based segmentation of pubic symphysis and fetal head. Sci. Data. 11 (1), 436 (2024).

- Davood, C. K., Rollins, C., Velasco-Annis, A. & Ouaalam, A. Learning to segment fetal brain tissue from noisy annotations. Med. Image Anal. 85, 102731 (2023).

- Huang, X. et al. Deep learning-based multiclass brain tissue segmentation in fetal MRIs. Sens. (Basel) 23 (2), 655 (2023).

- Alzubaidi, M. et al. Large-scale annotation dataset for fetal head biometry in ultrasound images. Data Brief 51, 109708 (2023).

- Srivastava, S., Vidyarthi, A. & Jain, S. Fetal head segmentation using optimized K-means and fuzzy C-means on 2D ultrasound images. In Doctoral Symposium on Computational Intelligence, Singapore. 441–453 (Springer Nature, Singapore, 2023).

- Kaggle.com. [Online]. Available: https://www.kaggle.com/datasets/ankit8467/fetal-head-ultrasound-dataset-for-image-segment

- Chougule, A., Roy, P., Wagh, M. G. & Goyal, D. Artificial intelligence-based Estimation of fetal head circumference with biparietal and occipitofrontal diameters using two-dimensional ultrasound images. J. Eng. Sci. Med. Diagn. Ther. 8 (2), 1–7 (2025).

- Chen, Z. et al. Fetal head and pubic symphysis segmentation in intrapartum ultrasound image using a dual-path boundary-guided residual network. IEEE J. Biomed. Health Inf. 28 (8), 4648–4659 (2024).

- Belciug, S. & Iliescu, D. G. Deep learning and Gaussian mixture modelling clustering mix. A new approach for fetal morphology view plane differentiation. J. Biomed. Inf. 143, 104402 (2023).

- Wang, F., Silvestre, G. & Curran, K. M. Segmenting fetal head with efficient Fine-tuning strategies in low-resource settings: an empirical study with U-Net. arXiv [eess.IV] (2024).

- Lasala, A., Fiorentino, M. C., Bandini, A. & Moccia, S. FetalBrainAwareNet: bridging GANs with anatomical insight for fetal ultrasound brain plane synthesis. Comput. Med. Imaging Graph. 116, 102405 (2024).

- Karunanayake, N. & Makhanov, S. S. When deep learning is not enough: artificial life as a supplementary tool for segmentation of ultrasound images of breast cancer. Med. Biol. Eng. Comput. (2024).

- Ahmed, P. et al. A comparative study of different segmentation and regression models for fetal head circumference measurement. In Second International Conference on Emerging Trends in Information Technology and Engineering (ICETITE) 1–6 (IEEE, 2024).

- Zhao, L. et al. TransFSM: fetal anatomy segmentation and biometric measurement in ultrasound images using a hybrid transformer. IEEE J. Biomed. Health Inf. PP, 1–12 (2023).

- Nassar, S. E., Yasser, I., Amer, H. M. & Mohamed, M. A. A robust MRI-based brain tumor classification via a hybrid deep learning technique. J. Supercomputing. 80 (2), 2403–2427 (2024).

- Sandhiya, B. & Raja, S. K. S. Deep learning and optimized learning machine for brain tumor classification. Biomed. Signal Process. Control 89 (2024).

- Chen, F. et al. The ability of segmenting anything model (SAM) to segment ultrasound images. Biosci. Trends. 17 (3), 211–218 (2023).

- Cabezas, M., Diez, Y., Martinez-Diago, C. & Maroto, A. A benchmark for 2D foetal brain ultrasound analysis. Sci. Data. 11 (1), 923 (2024).

- Zhou, Z. et al. Segment anything model for fetal head-pubic symphysis segmentation in intrapartum ultrasound image analysis. Expert Syst. Appl. 263, 125699 (2025).

- Chen, L. et al. HPDA/Zn as a CREB inhibitor for ultrasound imaging and stabilization of atherosclerosis plaque. Chin. J. Chem. 41 (2), 199–206. https://doi.org/10.1002/cjoc.202200406 (2023).

- Song, W. et al. CenterFormer: A novel cluster center enhanced transformer for unconstrained dental plaque segmentation. IEEE Trans. Multimedia. 26, 10965–10978. https://doi.org/10.1109/TMM.2024.3428349 (2024).

- Luan, S. et al. Deep learning for fast super-resolution ultrasound microvessel imaging. Phys. Med. Biol. 68 (24), 245023. https://doi.org/10.1088/1361-6560/ad0a5a (2023).

- Yu, X. et al. Deep learning for fast denoising filtering in ultrasound localization microscopy. Phys. Med. Biol. 68 (20), 205002. https://doi.org/10.1088/1361-6560/acf98f (2023).

- Wang, G. et al. A skin lesion segmentation network with edge and body fusion. Appl. Soft Comput. 170, 112683. https://doi.org/10.1016/j.asoc.2024.112683 (2025).
