Cybersecurity Frontiers: NIST, OpenAI and More

Check out NIST best practices for adopting a zero trust architecture. Plus, learn how OpenAI disrupted various attempts to abuse ChatGPT. In addition, find out what Tenable webinar attendees said about their exposure management experiences. And get the latest on cyber crime trends, a new cybersecurity executive order and more!

Dive into six things that are top of mind for the week ending June 13.

NIST Guides Zero Trust Implementation

Zero trust architectures (ZTAs) are gaining traction as cloud services, mobile devices, remote work, and IoT make traditional on-premises security perimeters obsolete. However, ZTAs are not a one-size-fits-all solution: each implementation must be tailored to its specific environment.

To assist organizations in planning and deploying ZTAs, the U.S. National Institute of Standards and Technology (NIST) recently published a guide titled “Implementing a Zero Trust Architecture: Full Document (SP 1800-35).”

This new guide offers 19 concrete ZTA implementation examples, complementing NIST’s earlier “Zero Trust Architecture (SP 800-207),” which defines ZTA, its components, benefits, and risks.

Alper Kerman, a NIST computer scientist and the guide’s author, stated, “This guidance gives you examples of how to deploy ZTAs and emphasizes the different technologies you need to implement them. It can be a foundational starting point for any organization constructing its own ZTA.”

NIST collaborated with 24 technology partners, including Tenable, to develop the guide. James Hayes, Tenable Senior VP of Global Government Affairs, remarked in a LinkedIn post, “Our role? Help ensure that every device, user, and system is verified, monitored, and protected. This is what public-private partnership looks like at its best.”

Beyond the examples, the guide outlines core steps for all ZTA implementations:

- Discovering and inventorying all IT assets (hardware, software, applications, data, services).

- Specifying security policies for resource access based on least privilege principles.

- Identifying and inventorying existing security tools and determining their role in the ZTA.

- Designing access topology based on risk and data value.

- Rolling out ZTA components (people, processes, technologies) and deploying baseline security for areas like continuous monitoring, identity management, vulnerability scanning, and endpoint protection.

- Verifying implementation by continuously monitoring traffic, auditing access decisions, validating policy enforcement, and performing scenario-based testing.

- Continuously improving the ZTA based on evolving goals, threats, technology, and requirements.
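To make the least-privilege and verification steps more concrete, here is a minimal Python sketch of a deny-by-default policy decision point. It is not taken from SP 1800-35: the class and field names (`AccessRequest`, `PolicyDecisionPoint`, `device_inventory`, `grants`) are hypothetical, and a real deployment would pull inventory and entitlements from dedicated asset-management and identity systems. The sketch grants access only when the requesting device is inventoried and compliant and the user holds an explicit grant for the requested action, and it logs every decision so access can be audited later.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class AccessRequest:
    user: str
    device_id: str
    resource: str
    action: str


@dataclass
class PolicyDecisionPoint:
    # Hypothetical asset inventory: device ID -> whether it currently passes compliance checks.
    device_inventory: dict[str, bool]
    # Hypothetical least-privilege grants: (user, resource) -> actions explicitly allowed.
    grants: dict[tuple[str, str], set[str]]
    # Every decision is recorded so access decisions can be audited later.
    audit_log: list[dict] = field(default_factory=list)

    def decide(self, req: AccessRequest) -> bool:
        # Deny by default: access requires a known, compliant device AND an explicit grant.
        if req.device_id not in self.device_inventory:
            allowed, reason = False, "unknown device"
        elif not self.device_inventory[req.device_id]:
            allowed, reason = False, "non-compliant device"
        elif req.action not in self.grants.get((req.user, req.resource), set()):
            allowed, reason = False, "no explicit grant"
        else:
            allowed, reason = True, "explicit grant"
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "request": req,
            "allowed": allowed,
            "reason": reason,
        })
        return allowed


if __name__ == "__main__":
    pdp = PolicyDecisionPoint(
        device_inventory={"laptop-042": True, "laptop-099": False},
        grants={("alice", "payroll-db"): {"read"}},
    )
    print(pdp.decide(AccessRequest("alice", "laptop-042", "payroll-db", "read")))   # True
    print(pdp.decide(AccessRequest("alice", "laptop-042", "payroll-db", "write")))  # False
    print(pdp.decide(AccessRequest("alice", "laptop-099", "payroll-db", "read")))   # False
```

Deny-by-default is the key design choice here: a request that matches no rule fails closed rather than open, and the audit log gives the verification step something concrete to review.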

For more details, explore:

- The “Implementing a Zero Trust Architecture: Full Document (SP 1800-35)” guide

- The complementary “Implementing a Zero Trust Architecture: High-Level Document (SP 1800-35)”

- The companion fact sheet

- The ZTA homepage of NIST’s National Cybersecurity Center of Excellence

- The statement “NIST Offers 19 Ways to Build Zero Trust Architectures”

Further Tenable resources on zero trust include:

- “Rethink security with a zero-trust approach” (solutions page)

- “What is zero trust?” (cybersecurity guide)

- “5 Things Government Agencies Need to Know About Zero Trust” (blog)

- “Making Zero Trust Architecture Achievable” (blog)

- “Security Beyond the Perimeter: Accelerate Your Journey to Zero Trust” (on-demand webinar)

OpenAI Tackles Malicious AI Use

OpenAI has recently detected and stopped various malicious uses of its AI tools, including cyber espionage, social engineering, fraudulent employment schemes, covert operations, and scams.

The company stated in its report, “Disrupting malicious uses of AI: June 2025,” published this week, “Every operation we disrupt gives us a better understanding of how threat actors are trying to abuse our models, and enables us to refine our defenses.”

OpenAI detailed 10 incidents, sharing how each one was flagged and defused so that other AI defenders can learn from them.

Here are three examples from the report:

- North Korea-based cyber scammers used ChatGPT to automate fraudulent activities like creating false resumes and recruiting North American residents for scams.

- China-based fraudsters abused ChatGPT to mass-produce social media posts for misinformation campaigns on geopolitical issues, primarily on TikTok, X, Facebook, and Reddit.

- Russian-speaking malicious actors used ChatGPT accounts to develop a multi-stage malware campaign, including setting up command-and-control infrastructure for credential theft, privilege escalation, and attack obfuscation.

“We’ll continue to share our findings to enable stronger defenses across the internet,” the report concludes.

For more on AI security, see these Tenable resources:

- “How to Discover, Analyze and Respond to Threats Faster with Generative AI” (blog)

- “Securing the AI Attack Surface: Separating the Unknown from the Well Understood” (blog)

- “Harden Your Cloud Security Posture by Protecting Your Cloud Data and AI Resources” (blog)

- “Tenable Cloud AI Risk Report 2025” (report)

- “Tenable Cloud AI Risk Report 2025: Helping You Build More Secure AI Models in the Cloud” (on-demand webinar)

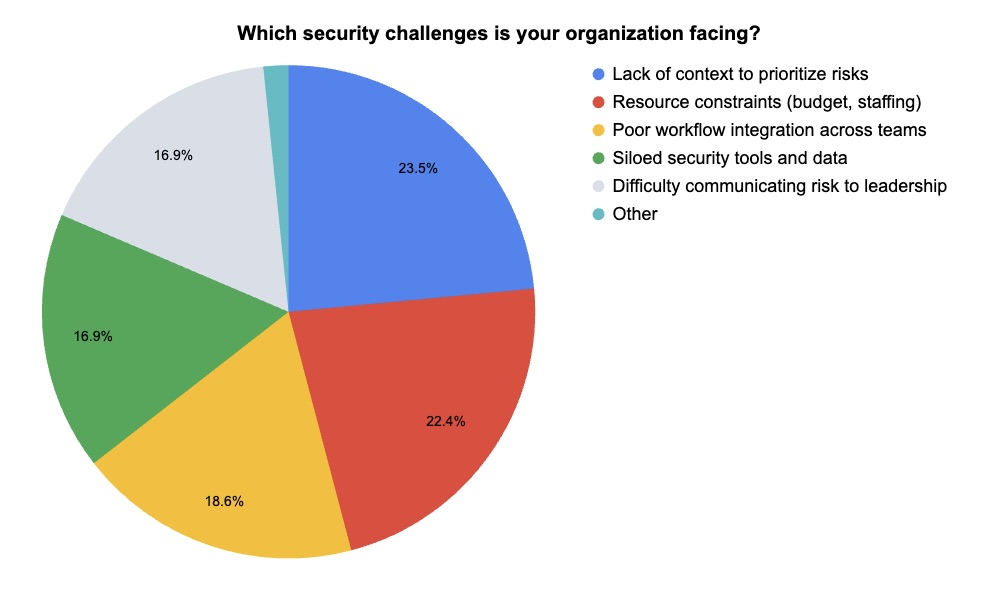

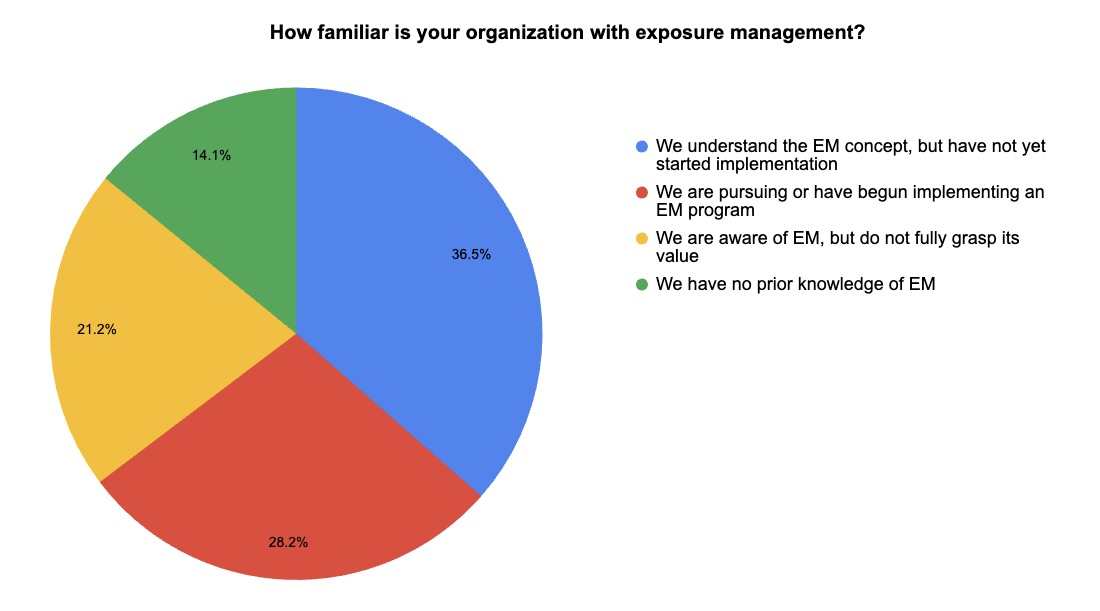

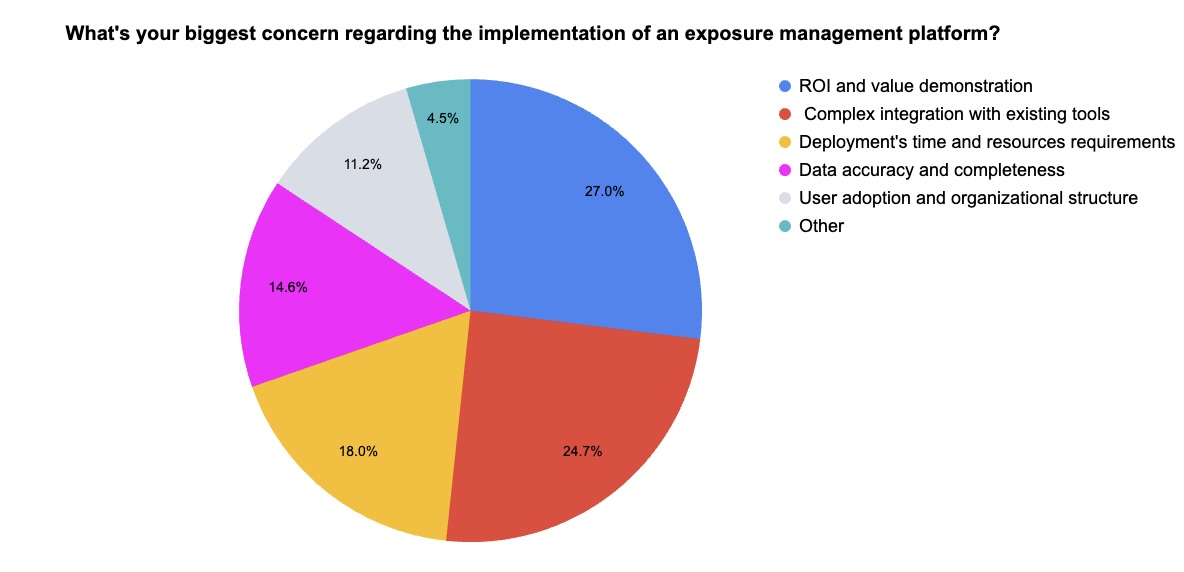

Tenable Webinar Insights on Exposure Management

During our recent webinar, “Security Without Silos: How to Gain Real Risk Insights with Unified Exposure Management,” attendees were polled about their exposure management knowledge, challenges, and concerns.

(44 webinar attendees polled by Tenable. Respondents could choose more than one answer.)

(85 webinar attendees polled by Tenable)

(89 webinar attendees polled by Tenable)

To learn more about unified exposure management, watch this webinar on-demand!

AI Systems Learning To Acknowledge Unknowns

AI systems frequently make mistakes, which poses a significant challenge for developers. One critical solution is to build AI systems that can recognize when they face tasks they haven’t been trained for and admit they don’t know how to proceed.

This insight comes from the article “Out of Distribution Detection: Knowing When AI Doesn’t Know,” by Eric Heim and Cole Frank from Carnegie Mellon University’s Software Engineering Institute (SEI).

They explore out-of-distribution (OoD) detection, which flags when an AI system encounters situations it wasn’t trained for, with a particular focus on military AI applications.

“By understanding when AI systems are operating outside their knowledge boundaries, we can build more trustworthy and effective AI capabilities for defense applications — knowing not just what our systems know, but also what they don’t know,” they wrote.

The authors outline three OoD detection categories:

- Anomaly detection and density estimation (modeling “normal” data).

- Learning with rejection and uncertainty-aware models (detecting OoD instances).

- Adding OoD detection to existing models.

They caution that these methods have pros and cons, aren’t foolproof, and should be a “last line of defense.” Rigorous testing, monitoring for known failures, and comprehensive analysis of operational conditions are also essential.
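The SEI article does not prescribe code, but the first category (density estimation) and the third (adding OoD detection to an existing model) can be illustrated together in a toy Python sketch. Assumptions: inputs are numeric feature vectors, each feature is modeled as an independent Gaussian (a strong simplification; practical detectors typically use richer density models, often over a network’s learned features), and the names `GaussianDensityDetector` and `predict_or_abstain` are hypothetical. The wrapper lets an existing classifier answer “I don’t know” (returning `None`) when an input’s likelihood under the training data falls below a threshold calibrated on that same data.

```python
import math
import statistics
from typing import Callable, Optional, Sequence


class GaussianDensityDetector:
    """Models "normal" data with an independent Gaussian per feature (a simplifying assumption)."""

    def __init__(self, training_data: Sequence[Sequence[float]], quantile: float = 0.01):
        columns = list(zip(*training_data))
        self.means = [statistics.fmean(col) for col in columns]
        # Floor the spread so constant features don't cause division by zero.
        self.stdevs = [max(statistics.pstdev(col), 1e-6) for col in columns]
        # Threshold = training log-likelihood at a low quantile, so only about
        # that fraction of in-distribution points would be flagged.
        scores = sorted(self.log_likelihood(x) for x in training_data)
        self.threshold = scores[int(quantile * (len(scores) - 1))]

    def log_likelihood(self, x: Sequence[float]) -> float:
        total = 0.0
        for xi, mu, sigma in zip(x, self.means, self.stdevs):
            z = (xi - mu) / sigma
            total += -0.5 * z * z - math.log(sigma * math.sqrt(2 * math.pi))
        return total

    def is_out_of_distribution(self, x: Sequence[float]) -> bool:
        return self.log_likelihood(x) < self.threshold


def predict_or_abstain(
    classifier: Callable[[Sequence[float]], str],
    detector: GaussianDensityDetector,
    x: Sequence[float],
) -> Optional[str]:
    """Wraps an existing classifier: returns None ("I don't know") for OoD inputs."""
    if detector.is_out_of_distribution(x):
        return None
    return classifier(x)


if __name__ == "__main__":
    # Toy in-distribution data: a grid of 2-D points around the origin.
    train = [(i * 0.1, j * 0.1) for i in range(-5, 6) for j in range(-5, 6)]
    detector = GaussianDensityDetector(train)

    def classify(x):
        return "positive" if x[0] > 0 else "negative"

    print(predict_or_abstain(classify, detector, (0.2, 0.0)))    # positive
    print(predict_or_abstain(classify, detector, (10.0, 10.0)))  # None
```

Consistent with the authors’ caution, a check like this is only a last line of defense: an input that is out of distribution but happens to land in a high-density region of the modeled features will pass unflagged.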

For more on OoD and AI accuracy:

- “Never Assume That the Accuracy of Artificial Intelligence Information Equals the Truth” (United Nations University)

- “Accurate and reliable AI: Four key ingredients” (Thomson Reuters)

- “What do we need to know about accuracy and statistical accuracy?” (U.K. Information Commissioner’s Office)

- “Out-of-Distribution Detection Is Not All You Need” (Université de Toulouse, Toulouse, France)

- “Rule-Based Out-of-Distribution Detection” (IEEE)

White House EO Aims to Modernize Federal Cybersecurity

The Trump administration aims to strengthen U.S. federal government cybersecurity with the recently issued Executive Order (EO) 14306.

A White House fact sheet states EO 14306 aims “to strengthen the nation’s cybersecurity by focusing on critical protections against foreign cyber threats and enhancing secure technology practices.”

The EO covers AI system vulnerabilities, IoT security, quantum computing risks, patch management, secure software development, and critical infrastructure defense.

Tenable’s James Hayes noted in a blog, “This EO reinforces the importance of shifting from reactive to proactive cybersecurity.” He added, “By addressing emerging risks — such as AI exploitation, post-quantum threats and software supply chain weaknesses — the administration is signaling the need for adaptability and continuous improvement.”

Learn more about EO 14306 in the blog “New Cybersecurity Executive Order: What You Need To Know.”

Europol Report: Cyber Crooks Exploiting Stolen Data and AI

Cybercriminals are increasingly using AI to ramp up data theft, which forms the basis for numerous crimes, including online fraud, ransomware, child exploitation, and extortion. This is a key finding of Europol’s “Internet Organised Crime Threat Assessment 2025” report.

A Europol statement highlighted, “From phishing to phone scams, and from malware to AI-generated deepfakes, cybercriminals use a constantly evolving toolkit to compromise systems and steal personal information.”

Initial access brokers (IABs) sell stolen credentials and data on dark web forums. Criminals are also using end-to-end encrypted communication apps for deals.

As for AI, and generative AI in particular, crooks are abusing it to craft sophisticated social engineering attacks. “Criminals now tailor scam messages to victims’ cultural context and personal details with alarming precision,” the statement reads.

For more on data security, check these Tenable resources:

- “Securing Financial Data in the Cloud: How Tenable Can Help” (blog)

- “CISA and NSA Cloud Security Best Practices: Deep Dive” (blog)

- “Know Your Exposure: Is Your Cloud Data Secure in the Age of AI?” (on-demand webinar)

- “Harden Your Cloud Security Posture by Protecting Your Cloud Data and AI Resources” (blog)

- “Stronger Cloud Security in Five: How DSPM Helps You Discover, Classify and Secure All Your Data Assets” (blog)
