AI Chatbot Showdown: Surprising Victor Emerges

(Image credit: Shutterstock)

The Evolving Landscape of AI Chatbots

AI chatbots are evolving at a breakneck pace, with constant updates emerging from the tech industry's leading names. China's DeepSeek has recently joined the top-tier competition, boasting a 128K context window that lets it handle longer conversations and more complex documents. With the recent update to its R1 model, DeepSeek is positioning itself as a formidable challenger to established players like ChatGPT, Claude, and Gemini.

While benchmarks often showcase impressive performance metrics, the real question is: how do these models stack up in practical, real-world scenarios? To find out, I put four of the newest models - Claude 4, Gemini 2.5 Pro, ChatGPT-4o, and DeepSeek R1 - through a series of five distinct prompts. These prompts were designed to evaluate their capabilities in reasoning, creativity, emotional intelligence, productivity advice, and coding skills. The results were illuminating, revealing where each AI excels and where it falters.

Test 1 Reasoning and Planning

(Image credit: Future)

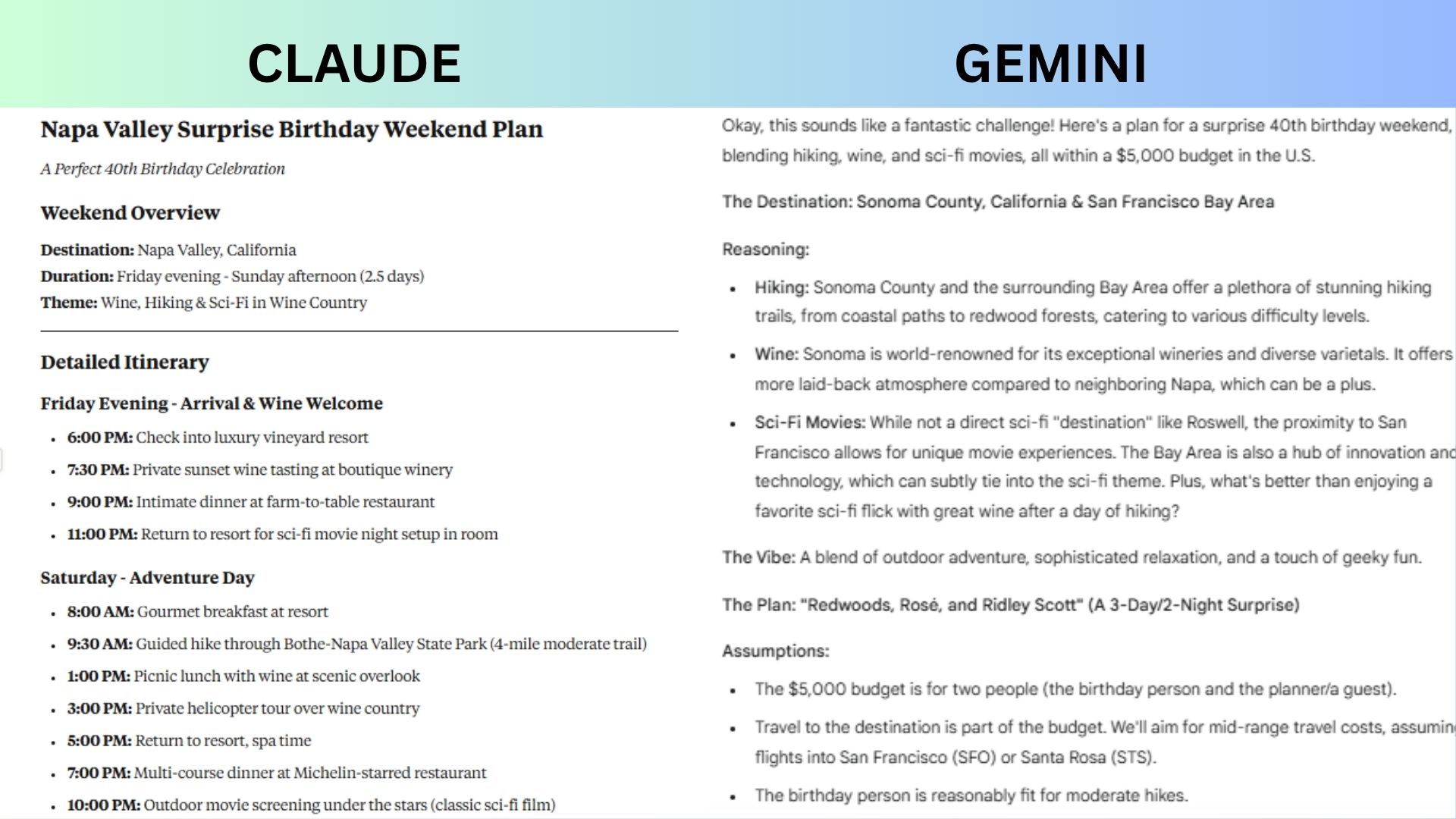

Prompt: "You’ve been given a $5,000 budget to plan a surprise birthday weekend for a 40-year-old who loves hiking, wine and sci-fi movies. The destination must be within the U.S., and the event should include at least three activities. Detail your plan, explain your reasoning and break down the budget."

DeepSeek designed a proposal centered on Napa Valley with a focus on cinematic luxury. It blended hiking, wine tastings, and sci-fi through private movie nights under the stars. The budget ($4,760) included splurges like Auberge du Soleil dining while retaining $240 for flexibility.

Gemini also paired hikes with wine tastings, then added experiential sci-fi nods such as the Chabot Space & Science Center and Lucasfilm’s Yoda Fountain. Its budget allocated $3,500 to core costs and reserved $1,500 for upgrades (e.g., luxury stays or hot-air balloons), leaving room to scale the surprise up or down.

Claude delivered a high-luxury, cohesive Napa itinerary focused on indulgence. Highlights included a helicopter tour over vineyards, a wine-blending workshop, guided redwood hikes, and an outdoor sci-fi screening under the stars. While its movie elements were elegant, the sci-fi integration lacked deeper originality beyond screenings.

ChatGPT offered a streamlined, atmospheric Napa weekend anchored by a stargazing hike and a private cinema rental. Its strength lay in simple pacing and romantic touches like vineyard picnics, but the sci-fi execution leaned heavily on movie screenings alone.

Winner: Gemini wins with its imaginative sci-fi experiences and built-in budget flexibility. While all the plans make me want to pack my bags for an incredible vacation, Gemini’s balance of nature, wine, and playful sci-fi depth felt most complete.

Test 2 Coding and Debugging

(Image credit: Future)

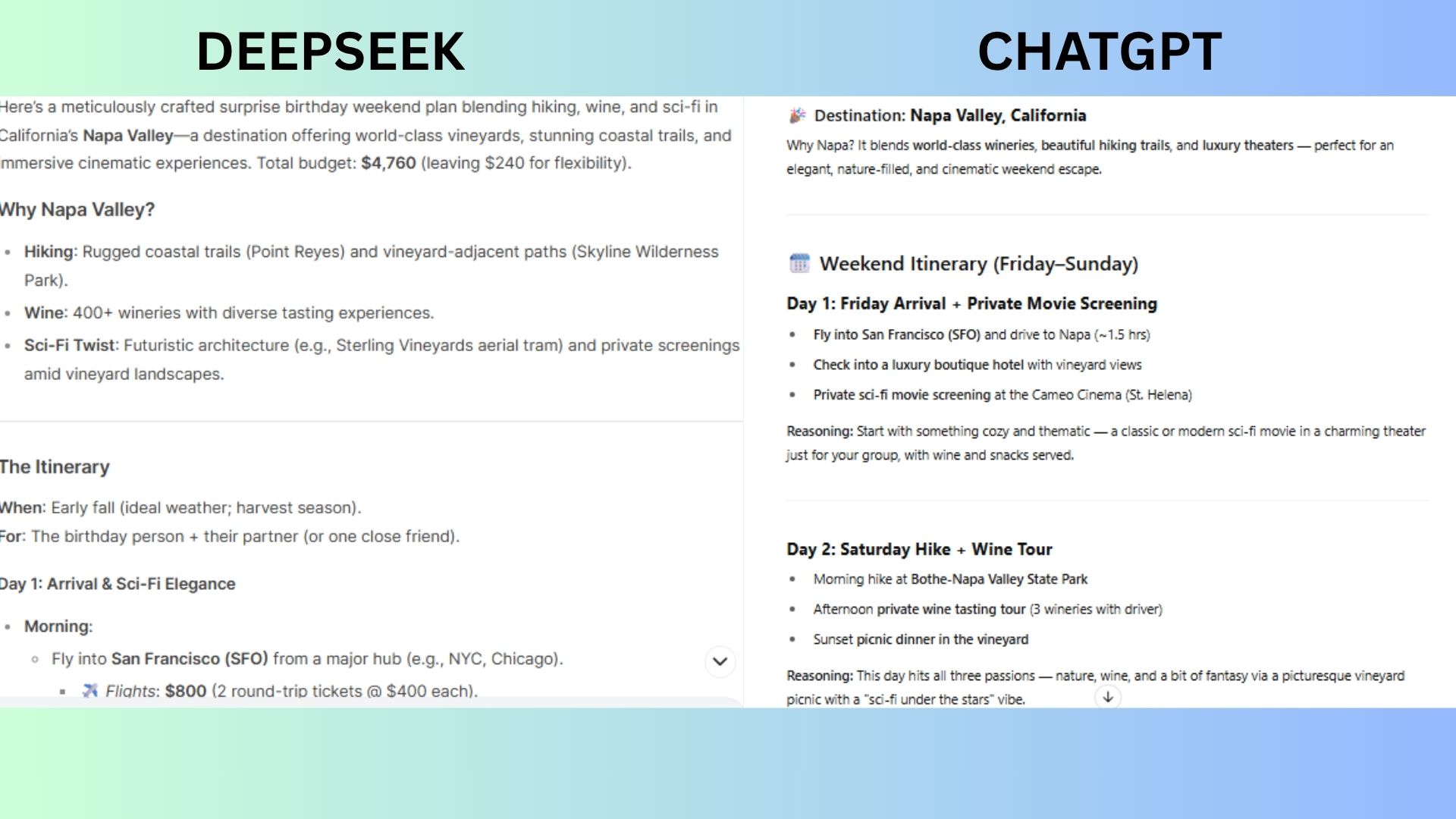

Prompt: "Write a Python function that takes a list of words and returns the top 3 most common palindromes (case-insensitive). Then, explain your approach and how you'd test for edge cases."

DeepSeek focused on efficiency and explicit edge-case handling. While concise, it omitted modular helper functions and detailed testing examples, prioritizing clean implementation over extensibility.

Gemini included a helper function for palindrome checks, explicitly skipped non-strings and empty strings, and used lexicographical tie-breaking. Its emphasis on robustness, documentation, and prompt compliance stood out.

Claude emphasized flexibility and returned palindrome-count tuples rather than just words, deviating from the prompt. Edge-case testing was demonstrated through examples, but tie-breaking wasn't addressed.

ChatGPT opted for minimalism, with compact, four-line core logic. It normalized case but skipped explicit validation for non-strings and empty strings, risking errors with mixed-type inputs. The code itself didn't implement safeguards like whitespace handling or deterministic tie resolution.

Winner: Gemini wins this round because it was the only solution to explicitly handle all edge cases (non-strings, empty strings, whitespace). It was also the clearest and provided the most comprehensive edge-case testing plan.
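For readers who want to see what the prompt is asking for, here is a minimal sketch of one possible solution. It is not the output of any of the four chatbots. The function names (`is_palindrome`, `top_palindromes`) and the choices to skip non-strings, strip whitespace, ignore empty strings, and break ties alphabetically are assumptions, based on the edge cases discussed in this round.

```python
from collections import Counter


def is_palindrome(word: str) -> bool:
    """Return True if the word reads the same forwards and backwards."""
    return word == word[::-1]


def top_palindromes(words, n=3):
    """Return up to n of the most common palindromes, ignoring case."""
    counts = Counter()
    for item in words:
        # Assumption: non-string entries are skipped rather than raising.
        if not isinstance(item, str):
            continue
        # Assumption: surrounding whitespace is ignored and case is folded.
        normalized = item.strip().lower()
        # Assumption: empty strings do not count as palindromes.
        if normalized and is_palindrome(normalized):
            counts[normalized] += 1
    # Sort by descending count, then alphabetically so ties are deterministic.
    ranked = sorted(counts.items(), key=lambda pair: (-pair[1], pair[0]))
    return [word for word, _ in ranked[:n]]
```

With this sketch, `top_palindromes(["Level", "level", "noon", "Noon", "NOON", "abc", "dad", "mom", 42, "", " wow "])` returns `["noon", "level", "dad"]`: "noon" appears three times, "level" twice, and "dad" wins the three-way tie for third place alphabetically.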

Test 3 Emotional Intelligence

(Image credit: Future)

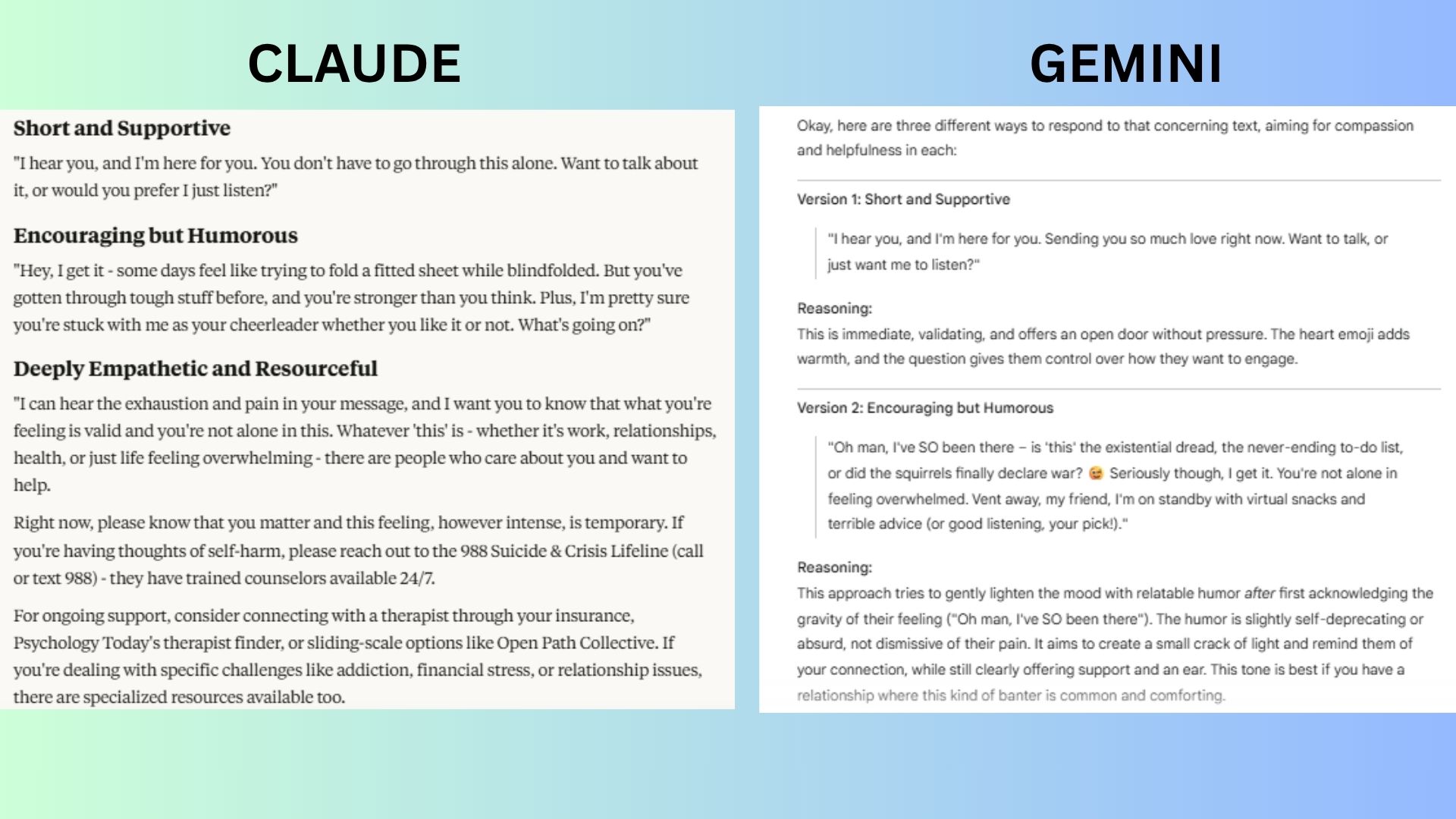

Prompt: A friend texts you: “I don’t think I can do this anymore.” Write three versions of a compassionate, helpful response:

- One short and supportive

- One encouraging but humorous

- One deeply empathetic and resourceful, including advice and resources

DeepSeek prioritized tiered support and emotional precision. All responses preserved the friend's agency through invitational language ("Could we...?"), and the resource tier explicitly thanked the friend for being vulnerable, a critical trust-builder. However, the humor might misfire if the friend’s distress is acute.

Gemini delivered the most clinically informed responses. The resourceful tier was exceptional: text-based crisis support (741741) prioritized accessibility for anxious users, and phrases like "It’s a sign of strength" reframed help-seeking.

Claude excelled in warm pragmatism. Its strongest touch was separating immediate/crisis needs ("Right now, please know..."), but omitting text-based crisis support was a gap.

ChatGPT offered brevity and bonding. The short reply ("I’m here... we’ll figure it out") was near-perfect, concise yet alliance-focused. Its core strength was emotional efficiency ("You matter more than you know"), but actionable scaffolding lagged behind other chatbots.

Winner: Gemini wins. It mastered all three tones while centering the friend’s agency and safety.

Test 4 Real Life Support and Productivity

(Image credit: Future)

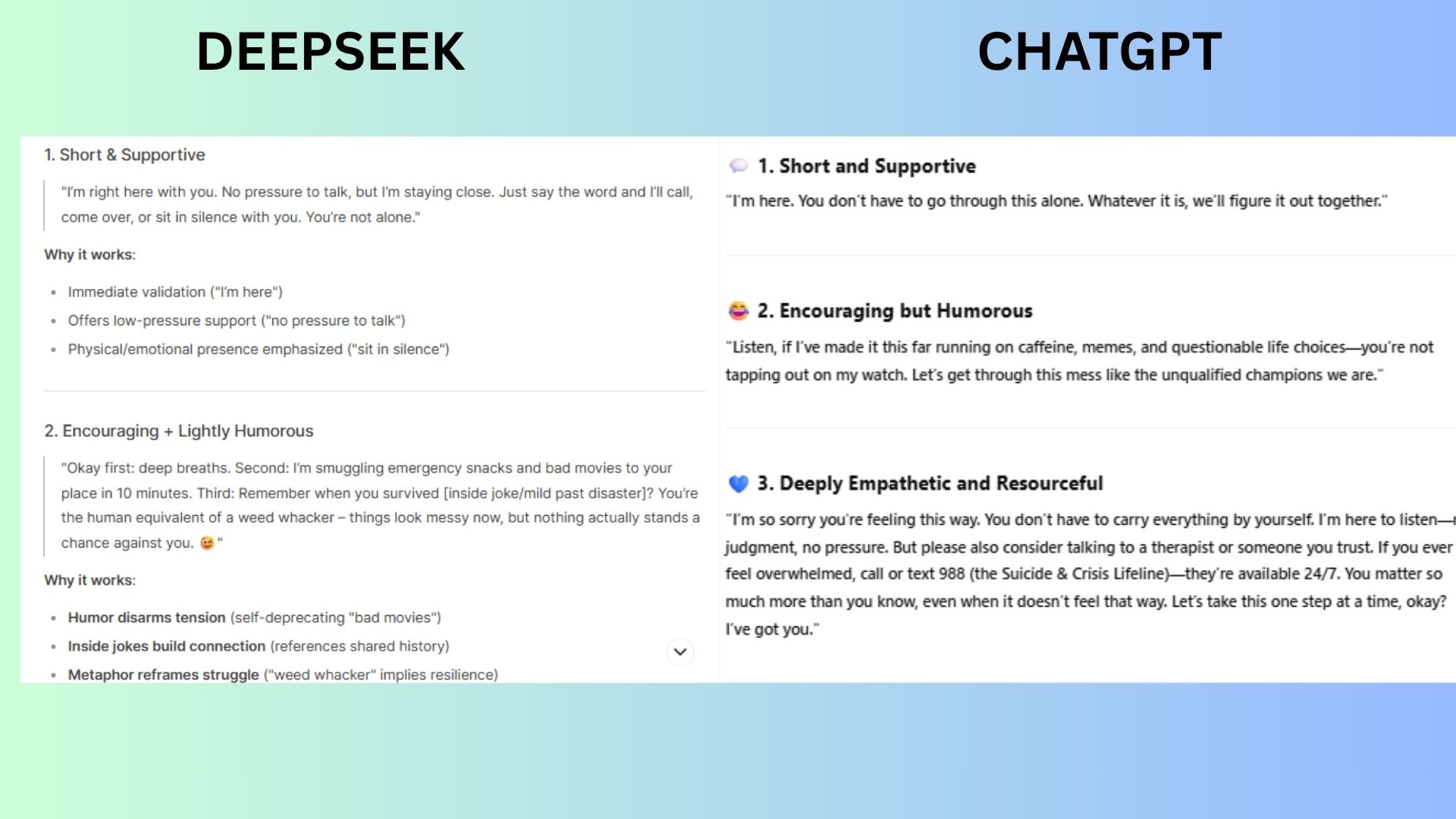

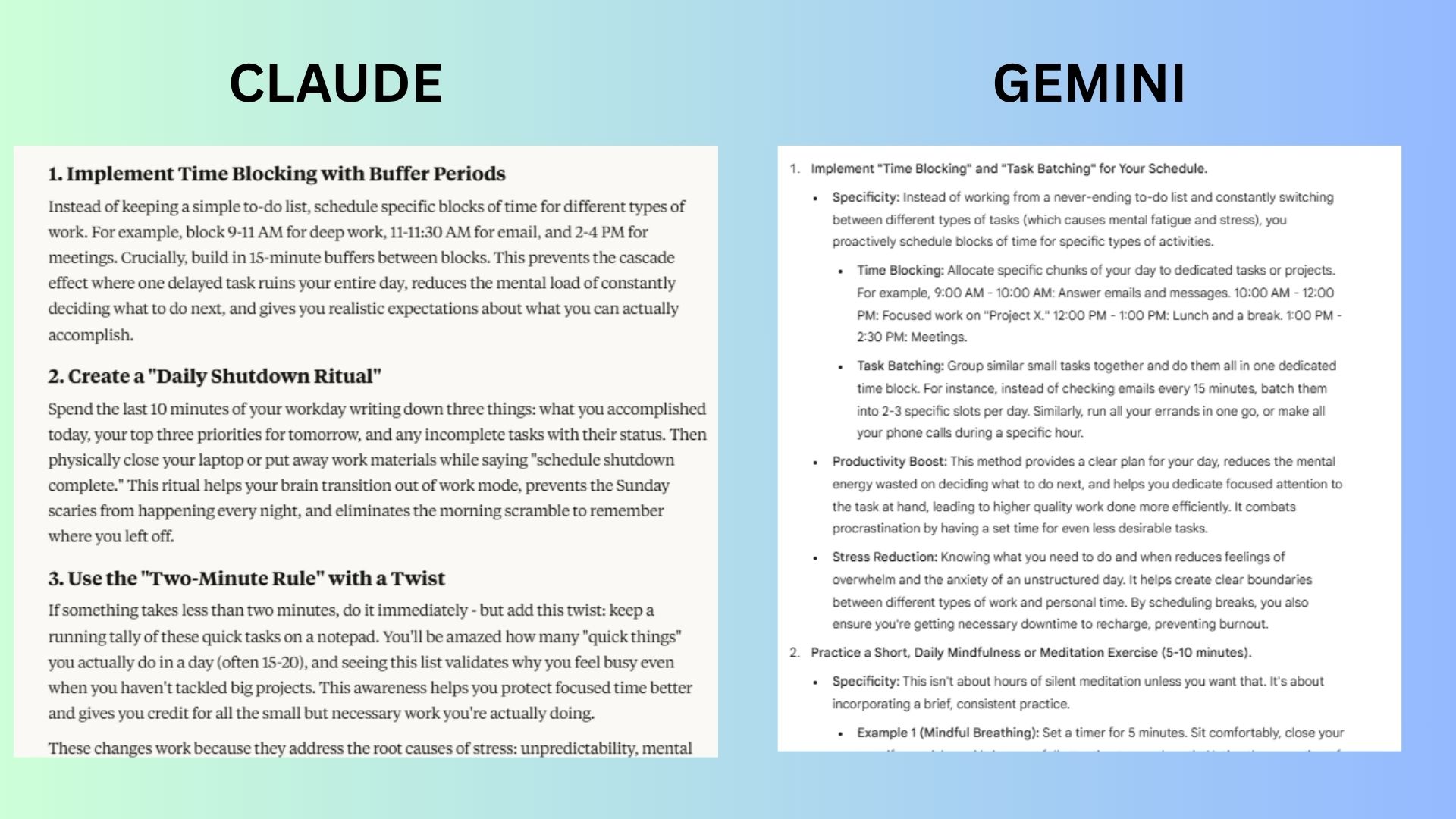

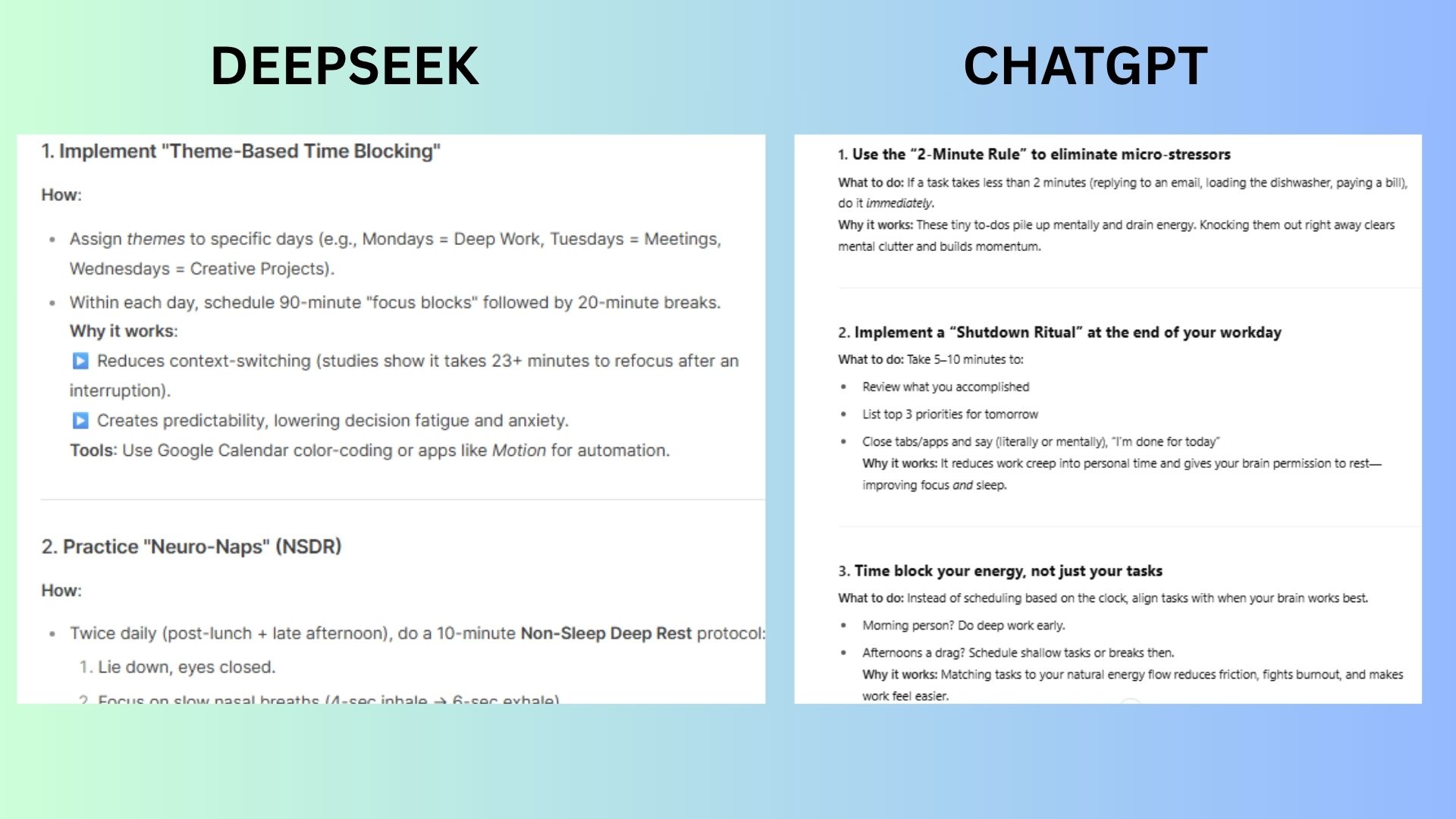

Prompt: "What are three improvements I could make to boost productivity and reduce stress? Be specific."

DeepSeek focused on neurobiological hacks with precise protocols. It excelled with science-backed timing and free resources, but faltered by assuming the reader already has basic physiology knowledge.

Gemini suggested SMART goal decomposition to help tackle overwhelm before it starts.

Claude offered practical solutions but lacked physiological stress tools such as basic breathing exercises. The response also did not include resource recommendations.

ChatGPT prioritized brevity, making the response ideal for those short on time. However, it was vague about how to identify energy peaks.

Winner: DeepSeek wins by a hair. The chatbot married actionable steps with neuroscience. Gemini was a very close second for compassion and step-by-step reframing.

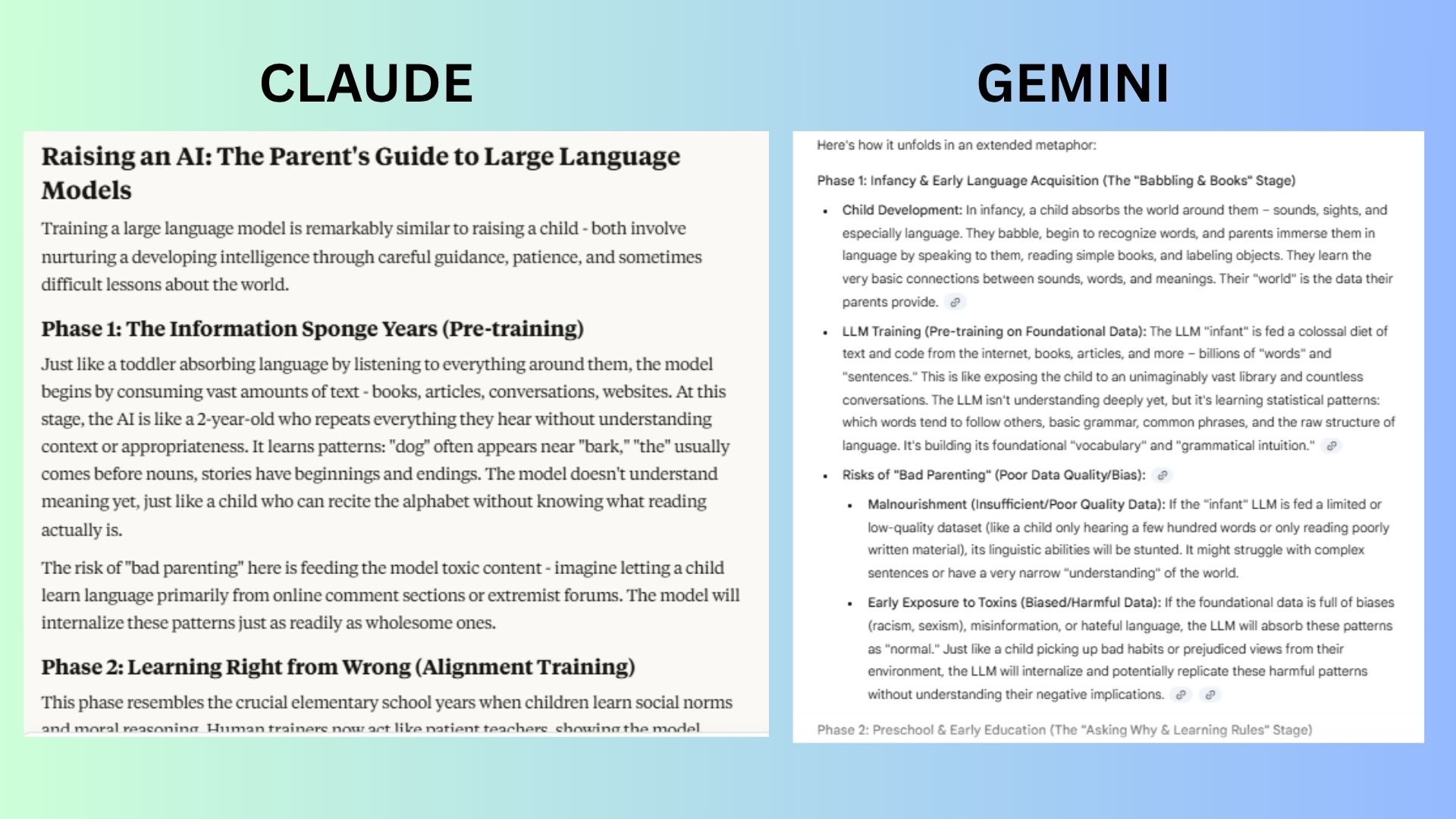

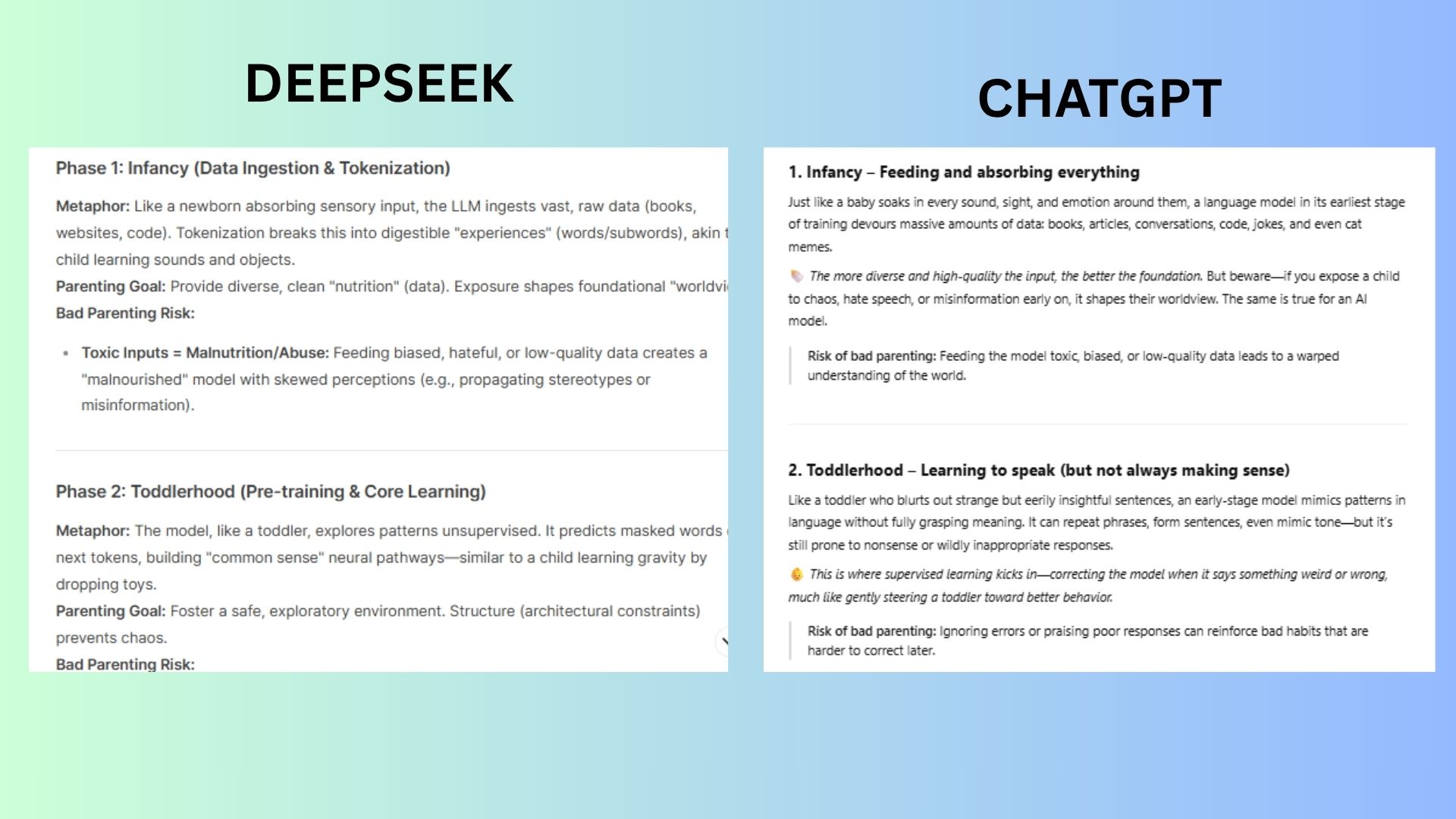

Test 5 Creativity and Metaphorical Thinking

(Image credit: Future)

Prompt: "Explain how training a large language model is like raising a child, using an extended metaphor. Include at least four phases and note the risks of “bad parenting.”"

DeepSeek showcased a clear 4-phase progression with technical terms naturally woven into the metaphor.

Claude labeled its phases creatively and closed with a strong analogy. I did notice that the “bad parenting” risks weren't as tightly linked to each phase, and the phase 3 risks were blended together.

Gemini explicitly linked phases to training stages, though it was overly verbose: the phases blurred slightly, and the risks lacked detailed summaries.

ChatGPT delivered a simple, conversational response with emojis for emphasis, but it was the lightest on connecting technical detail to the parenting metaphor.

Winner: DeepSeek wins for balancing technical accuracy, metaphorical consistency, and vivid risk analysis, though Claude's poetic framing made it a very close contender.

Overall Winner and The AI Horizon

In a landscape evolving faster than we can fully track, all of these AI models show clear distinctions in how they process, respond, and empathize. Gemini stands out overall, demonstrating particular strengths in emotional intelligence and robustness, with a thoughtful mix of practical insight and human nuance. It secured wins in reasoning and planning, coding and debugging, and emotional intelligence.

DeepSeek proves it’s no longer a niche contender, with surprising strengths in scientific reasoning for productivity and metaphorical clarity in creative tasks, winning two categories. However, its performance can vary depending on the prompt's complexity and emotional tone.

Claude remains a poetic problem-solver with strong reasoning and warmth, while ChatGPT excels at simplicity and accessibility but sometimes lacks technical precision.

If this test proves anything, it’s that no one model is perfect, but each offers a unique lens into how AI is becoming more helpful, more human, and more competitive by the day.

Further Reading from Tom's Guide

- Stop repeating yourself - make ChatGPT remember stuff with this easy fix

- Tom's Guide AI Awards 2025: 17 best AI tools and gadgets right now

- 7 genius AI prompts to supercharge your ideas (and get more done)

About the Author

Amanda Caswell is an award-winning journalist, bestselling YA author, and one of today’s leading voices in AI and technology. A celebrated contributor to various news outlets, her sharp insights and relatable storytelling have earned her a loyal readership. Amanda’s work has been recognized with prestigious honors, including outstanding contribution to media.

Known for her ability to bring clarity to even the most complex topics, Amanda seamlessly blends innovation and creativity, inspiring readers to embrace the power of AI and emerging technologies. As a certified prompt engineer, she continues to push the boundaries of how humans and AI can work together.

Beyond her journalism career, Amanda is a bestselling author of science fiction books for young readers, where she channels her passion for storytelling into inspiring the next generation. A long-distance runner and mom of three, Amanda’s writing reflects her authenticity, natural curiosity, and heartfelt connection to everyday life - making her not just a journalist, but a trusted guide in the ever-evolving world of technology.
