Apple Visual Intelligence: A Surprising iPhone AI Contender

Apple Intelligence has faced a challenging introduction, as many are aware. Not only was its AI toolset delayed until after the iPhone 16 line's September launch, but many of the initial Apple Intelligence features on the best iPhones have been perceived more as novelties than significant advancements.

TechRadar’s Senior AI Writer John-Anthony Disotto even wrote, "I believed in Apple Intelligence, but Apple let me down," summarizing the brand's AI struggles. As a long-time iPhone user, I've also felt the disappointment of seeing Apple lag behind Google and Samsung in the AI race.

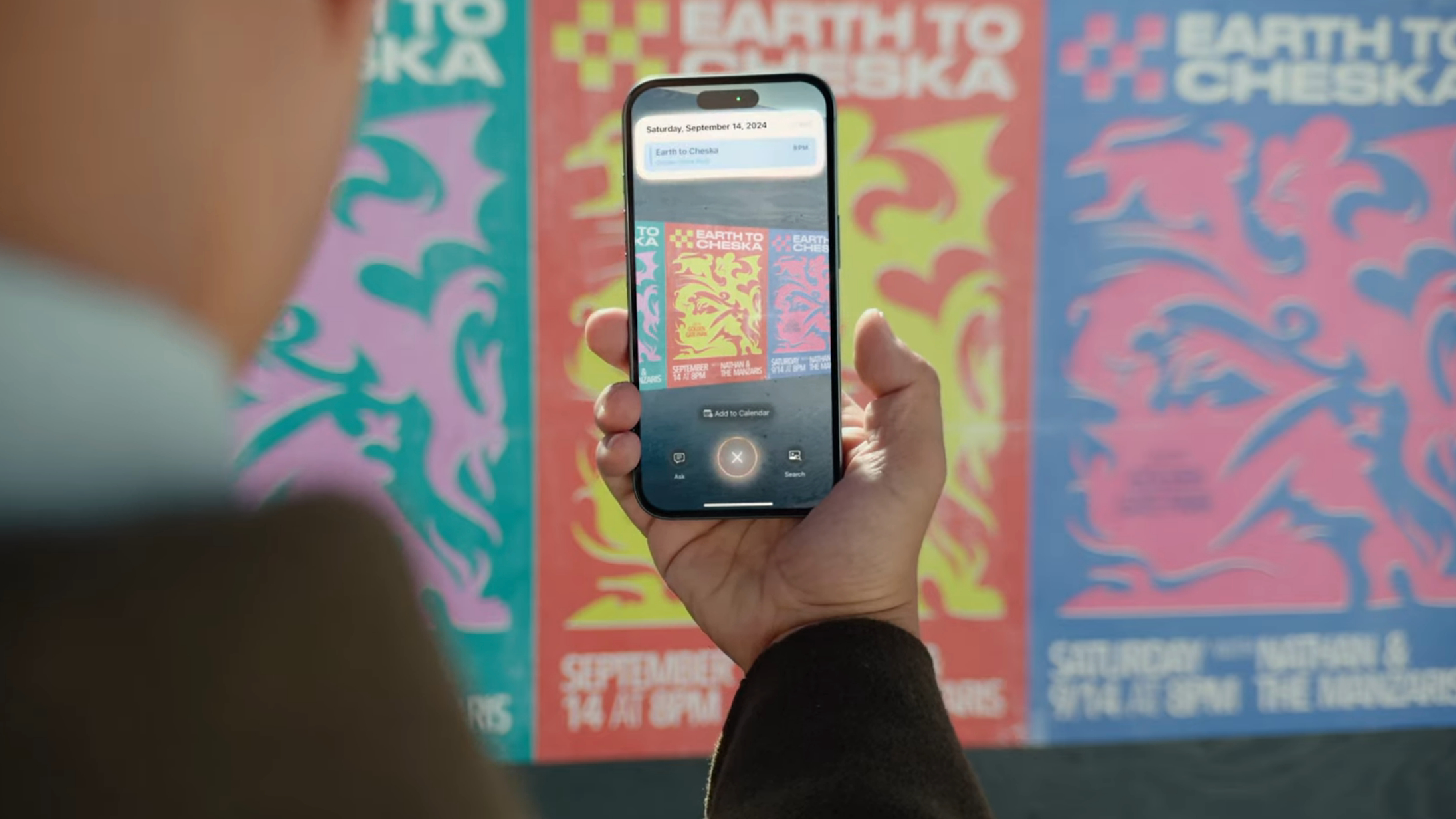

However, not all is lost. Despite its shortcomings, Apple Intelligence shows promise, particularly in how its AI tools are presented and accessed. They may not be the most powerful, but they integrate seamlessly. After a week of using Apple Intelligence daily during a vacation in Madeira, Portugal, I've found that Visual Intelligence, specifically, is a genuinely useful addition to the best iPhones.

(Image credit: Apple)

Out in the Field with Visual Intelligence

Apple’s version of Google Lens, Visual Intelligence, launched with iOS 18.2 in December—three months after the iPhone 16 release. While I experimented with it briefly then, I didn't find consistent use for it in London.

In Madeira, however, I intentionally tested Visual Intelligence. The island's unique natural beauty, exotic animals, and distinct customs provided an ideal testing ground.

Visual Intelligence is activated by a long press of the Camera Control button on the iPhone 16, iPhone 16 Plus, iPhone 16 Pro, and iPhone 16 Pro Max, or via the Action Button, Lock Screen, or Control Center on the iPhone 16e, iPhone 15 Pro, and iPhone 15 Pro Max. It uses the iPhone’s camera to identify, interpret, and act on visual information and is integrated with both ChatGPT and Google Search.

As an iPhone 16 Pro user, I consistently use Camera Control to summon Visual Intelligence, which has given the physical button new purpose for me.

I rarely use Camera Control for photos, but Apple’s physical-meets-haptic addition to the iPhone 16 series is a perfect trigger for Visual Intelligence. Similar features on Samsung and Pixel phones are activated by side and power buttons, respectively, but I appreciate that my iPhone 16 Pro now has what feels like a dedicated Visual Intelligence toggle.

So, how did Visual Intelligence perform with my visual queries in Madeira? It provided useful and accurate information every time. While it often relied on ChatGPT, I don't see this as an issue as long as ChatGPT remains free and seamlessly integrated. It might not be solely an Apple tool, but it's certainly an effective iPhone tool.

As shown in TechRadar's TikTok video (@techradar), Visual Intelligence identified my location from a fairly nondescript valley and an obscure botanical garden. It correctly translated Portuguese and Latin text and identified a lizard I encountered as a common wall lizard (Podarcis muralis). It also recognized a traditional Portuguese spice crusher and even suggested where to buy some delicious Madeira wine.

Even when Visual Intelligence couldn't pinpoint my exact location or the origin of my grilled fish, it still provided useful context. For example: “This is a stunning waterfall cascading down a lush, green cliff, surrounded by dense vegetation. The area appears to be a popular spot for visitors, with people enjoying the natural scenery, taking photos, and relaxing near the water’s edge.” This was all correct, even if it couldn't identify the waterfall as Madeira’s 25 Fontes.

The key takeaway is that Visual Intelligence works and shouldn't be dismissed as a gimmick by those who have only tried Apple Intelligence's more superficial features.

The Bigger, Better Competition

Gemini Live on the Samsung Galaxy S25 Edge (Image credit: Google)

Of course, the challenge for Apple is that many of the best Android phones offer comparable or superior versions of the same tool. Gemini Live is essentially Visual Intelligence on steroids. When Google’s AI assistant helps you cook a meal or discusses local music, it genuinely feels like interacting with the future.

Ironically, Gemini Live is now available for free on iOS as well as Android. The harsh reality for Apple is that, currently, there’s little reason for iPhone owners to use Visual Intelligence over Gemini Live. AI is evolving so rapidly that Visual Intelligence already feels somewhat outdated; Apple Intelligence isn't the most intelligent software on the iPhone.

However, I am convinced that Apple has a functional AI platform to build upon at the upcoming WWDC 2025 summit. Yes, Apple Intelligence is significantly behind the competition, but features like Visual Intelligence demonstrate genuine utility in Apple's current offerings. Apple remains a leader in UI and design, and I hope we’ll soon see an Apple Intelligence that is less reliant on third-party platforms to be truly useful.
