AI Lacks Human Touch: Understanding Sensory Concepts

Can AI Understand Without Physical Experience?

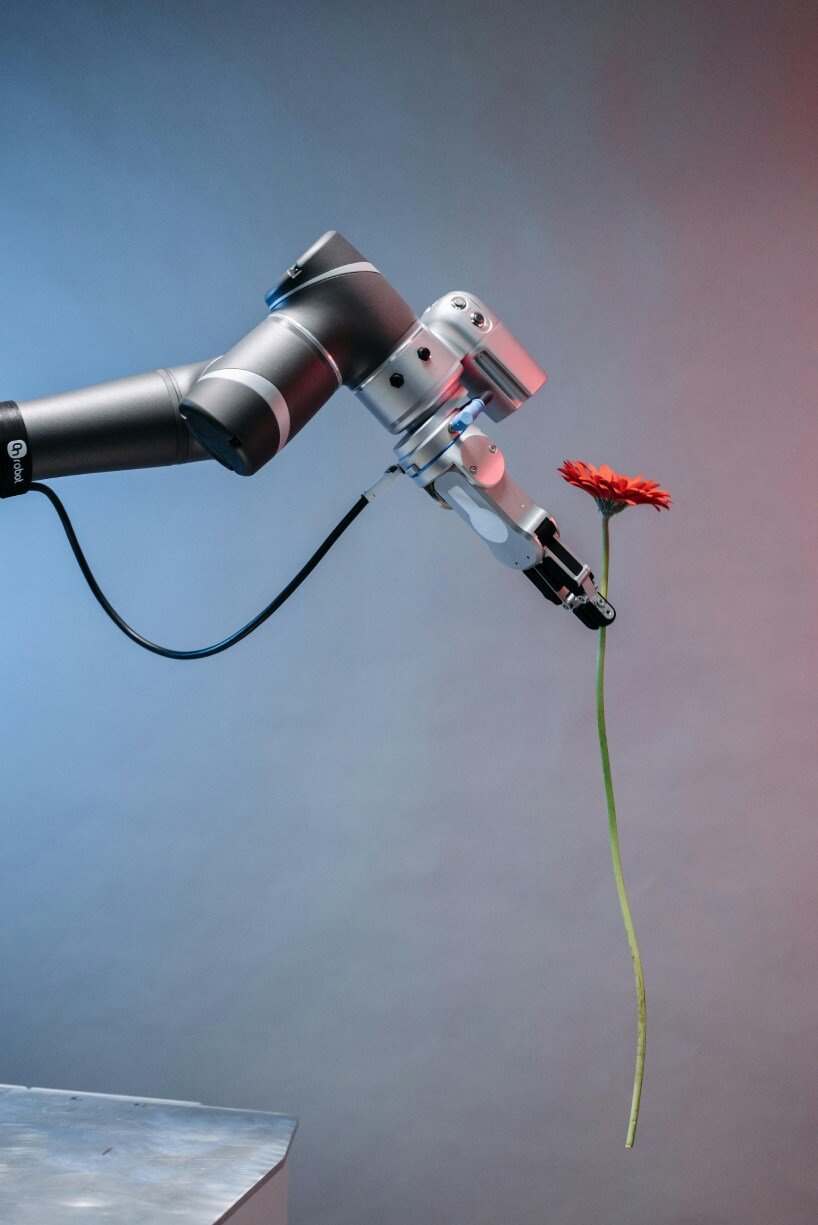

Imagine trying to understand a flower without ever smelling its fragrance or feeling its delicate petals. Could you truly grasp what a flower is? This fundamental question is at the heart of a new study from The Ohio State University. Researchers explored whether advanced AI language models, such as ChatGPT and Gemini, can genuinely comprehend human concepts if they haven't experienced the world physically. Their conclusion suggests it's not entirely possible.

The study's findings indicate that even sophisticated AI tools are missing the crucial sensorimotor grounding that enriches human understanding. Although these models excel at linguistic pattern recognition, often surpassing human abilities in verbal or statistical challenges, they consistently fall short with concepts tied to sensory experiences. When a concept involves smell, touch, or physical actions like holding or moving, language by itself appears insufficient.

all images courtesy of Pavel Danilyuk

Peeking Under the Hood: The Ohio State Study

Researchers from The Ohio State University put four leading AI models to the test: GPT-3.5, GPT-4, PaLM, and Gemini. They used a dataset of over 4,400 words, which humans had previously rated across various conceptual dimensions. These dimensions included abstract qualities like 'imageability' and 'emotional arousal,' as well as more concrete aspects, such as how much a concept is tied to sensory input or physical movement.

Both humans and the AI models rated words such as ‘flower’, ‘hoof’, ‘swing’, or ‘humorous’ on each of these dimensions, and the researchers compared the two sets of ratings. While the AI models aligned well with humans in non-sensory categories like imageability or emotional tone, their agreement notably declined for concepts involving sensory or motor attributes. For example, an AI might identify a flower as a visual object but fail to capture the rich, integrated physical experiences humans associate with it.
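To make the comparison concrete, here is a minimal sketch of how agreement between human and model ratings could be measured per dimension. It assumes Spearman rank correlation as the agreement measure, which the article does not confirm as the study's method, and every rating value below is a hypothetical toy number rather than data from the study.

```python
from math import sqrt


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the whole run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (spread_x * spread_y)


def spearman(x, y):
    """Spearman correlation: Pearson correlation computed on ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical ratings (NOT from the study), one value per word in WORDS.
WORDS = ["flower", "hoof", "swing", "humorous"]
HUMAN = {"imageability": [6.5, 5.0, 5.5, 2.0], "smell": [4.5, 1.5, 0.5, 0.2]}
MODEL = {"imageability": [6.0, 5.2, 5.6, 2.5], "smell": [2.0, 2.5, 1.0, 1.5]}


def agreement_by_dimension(human, model):
    """Return the human-model rank correlation for each rated dimension."""
    return {dim: spearman(human[dim], model[dim]) for dim in human}


if __name__ == "__main__":
    for dim, rho in agreement_by_dimension(HUMAN, MODEL).items():
        print(f"{dim}: {rho:.2f}")
```

With these toy numbers the model orders the words exactly as humans do on imageability but only partly on smell, which mirrors the qualitative pattern the article describes. A real analysis would use the full word list and the study's own rating scales.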

'A large language model can’t smell a rose, touch the petals of a daisy, or walk through a field of wildflowers,' explains Qihui Xu, the study's lead author. 'They obtain what they know by consuming vast amounts of text — orders of magnitude larger than what a human is exposed to in their entire lifetimes — and still can’t quite capture some concepts the way humans do.'

investigating whether large language models like ChatGPT and Gemini can accurately represent human concepts

Why Human Understanding Runs Deeper

This research, recently published in Nature Human Behaviour, contributes to a long-standing debate in cognitive science: can we truly form concepts without grounding them in physical, bodily experience? While some theories propose that language alone can build rich conceptual understanding, even for individuals with sensory impairments, others maintain that physical interaction with our environment is fundamental to comprehension.

Consider a flower again. For humans, it's more than just a visual object. It's a collection of sensory inputs and embodied memories—the warmth of sunlight, the act of smelling a bloom, and the emotional connections to gardens, gifts, or even grief. These are complex, multisensory experiences that current AI models, primarily trained on text from the internet, can only superficially imitate.

Interestingly, the study did show some AI capacity. For instance, the models correctly identified both roses and pasta as items 'high in smell.' However, humans are unlikely to consider them conceptually similar based on this single attribute. Our understanding is built on a multidimensional web of experiences, encompassing how things feel, how we interact with them, and their personal significance.
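The roses-and-pasta point can be illustrated with a small sketch: two concepts can sit close together on one dimension while being far apart once several dimensions are considered. The dimension names and all rating values here are hypothetical, chosen only for illustration, and Euclidean distance is an assumed stand-in for whatever similarity measure the researchers used.

```python
from math import sqrt

# Hypothetical 0-5 ratings (NOT from the study) on a few sensory dimensions.
PROFILES = {
    "rose": {"smell": 4.5, "taste": 0.5, "touch": 3.5, "vision": 4.8},
    "pasta": {"smell": 4.2, "taste": 4.8, "touch": 2.0, "vision": 3.0},
}


def distance(a, b, dimensions):
    """Euclidean distance between two concepts over the chosen dimensions."""
    return sqrt(sum((a[d] - b[d]) ** 2 for d in dimensions))


rose, pasta = PROFILES["rose"], PROFILES["pasta"]

# Looking at smell alone, the two concepts appear nearly identical.
smell_only = distance(rose, pasta, ["smell"])

# Across all dimensions, the gap between them is much larger.
all_dimensions = distance(rose, pasta, list(rose))

if __name__ == "__main__":
    print(f"smell only: {smell_only:.2f}")
    print(f"all dimensions: {all_dimensions:.2f}")
```

Matching on a single attribute is therefore weak evidence of conceptual similarity, which is the distinction the paragraph above draws between the models' output and human understanding.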

the study by The Ohio State University suggests that these AI models cannot understand sensorial human experiences

The Future of AI and Embodied Understanding

A promising finding from the study was that AI models trained on both text and images showed improved performance in some sensory categories, especially those related to vision. This suggests that future multimodal training—which combines text, visuals, and potentially sensor data—could bring AI closer to a human-like grasp of concepts.

However, the researchers urge caution. As Qihui Xu points out, even with image data, AI still misses the 'doing' part—the formation of concepts through active interaction with the world.

The integration of robotics, sensor technology, and embodied interaction might one day lead AI towards a more situated, human-like understanding. For the present, however, the depth and richness of human experience far exceed what even the most advanced language models can currently replicate.

in one part of the study AI models accurately linked roses and pasta as both being ‘high in smell’
