New AI Dataset Advances Retinal Vessel Analysis

The Eyes: A Window to Systemic Health

Did you know that the blood vessels in your retina can reveal secrets about your overall health? Experienced ophthalmologists can read subtle changes in these arteries and veins to screen for serious conditions such as cardiovascular disease, diabetes, and hypertension, as well as eye diseases like glaucoma. For example, research has shown that the width of retinal vessels is linked to cardiovascular risk factors, while changes in vessel shape can indicate diabetic retinopathy. Accurate analysis of these vessels is a critical first step in early diagnosis and intervention.

However, manually segmenting and analyzing these tiny vessels is a time-consuming and labor-intensive task. This is where artificial intelligence (AI) comes in, with methods that can automate the process and deliver excellent performance. But there's a catch: AI models are data-driven, meaning they need large amounts of high-quality, annotated data to learn effectively.

The Challenge: A Gap in AI Training Data

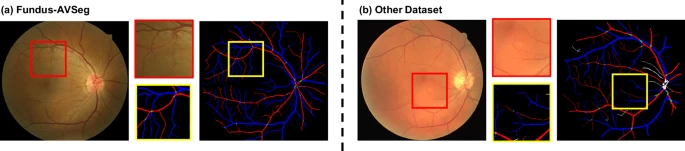

While several public datasets for retinal artery-vein segmentation exist, they have significant drawbacks that hinder the development of truly robust AI tools:

- Stale Data: Most existing datasets are simply re-annotations of older public data, offering no new information for training more resilient AI models.

- Low Quality and Size: Limited sample sizes and low-resolution images (often under 1000 pixels) create a high risk of model overfitting, where the AI performs well on the training data but fails in real-world scenarios.

- Lack of Disease Diversity: The majority of datasets contain images only from healthy individuals, failing to prepare AI models for the complexities introduced by eye diseases.

- No Quality Metrics: Image quality is a huge factor in performance, but most datasets don't include quality assessments, making it difficult for researchers to optimize models for real-world clinical images, which are not always perfect.

Introducing Fundus-AVSeg: A New High-Quality Dataset

To address these challenges, researchers have developed Fundus-AVSeg, a new, publicly available fundus image dataset created specifically for AI-based artery-vein segmentation. This dataset consists of 100 high-resolution fundus images, each with pixel-wise manual annotations from professional ophthalmologists.

Fundus-AVSeg stands out for three key reasons:

- Larger Scale: With 100 annotated images, it is one of the largest datasets of its kind.

- Rich Disease Diversity: It includes images from patients with diabetic retinopathy (DR), age-related macular degeneration (AMD), and glaucoma, as well as normal fundus images.

- Novel Data Sources: All images are from new patient cohorts and imaging devices, not recycled from old datasets.

Building a Better Dataset: The Fundus-AVSeg Method

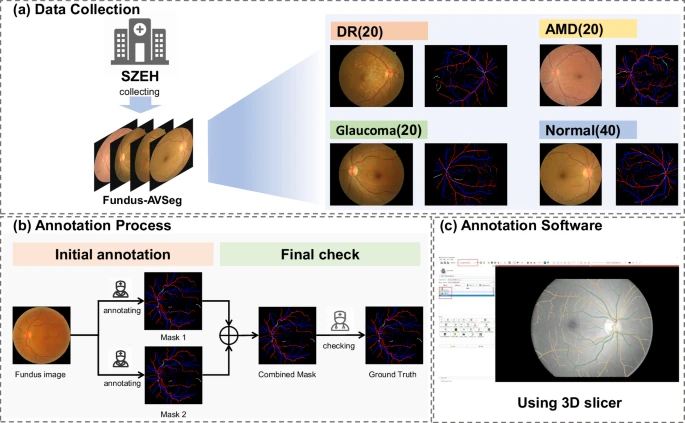

Data Collection

The data was collected ethically at the Shenzhen Eye Hospital from 100 different patients. The high-resolution images (up to 2656 × 1992 pixels) were captured using mainstream fundus cameras during real clinical diagnostic processes. The selection process, guided by experienced ophthalmologists, intentionally included both high-quality and low-quality images to mirror real-world conditions.

Annotation and Quality Control

Creating a high-quality dataset depends on the quality of its annotations. The project utilized a rigorous workflow involving a team of six junior ophthalmologists and one senior specialist.

The process was meticulous:

- Each image was randomly assigned to two junior ophthalmologists for initial annotation. They used specialized software to mark pixels as arteries (red), veins (blue), artery-vein crossings (green), or uncertain (white).

- The two annotations were then merged. Any areas of disagreement were marked as uncertain.

- A senior ophthalmologist then reviewed these uncertain regions to make the final determination, ensuring a high degree of accuracy and consistency.

Each image also received an image quality label (high or low) based on criteria such as the clarity of vessel structures and pathological features. This allows researchers to train and test their models on a variety of image qualities.
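
The merge rule in the workflow above (keep a pixel's label where both junior annotators agree, mark it uncertain where they differ) can be sketched in a few lines. This is only an illustration under stated assumptions: the integer label codes and the `merge_annotations` helper are hypothetical, not the team's actual tooling, and plain Python lists stand in for real image arrays.

```python
# Hypothetical label codes; the dataset's actual encoding may differ.
BACKGROUND, ARTERY, VEIN, CROSSING, UNCERTAIN = 0, 1, 2, 3, 4


def merge_annotations(mask_a, mask_b):
    """Merge two label masks pixel by pixel; disagreements become UNCERTAIN."""
    same_shape = len(mask_a) == len(mask_b) and all(
        len(row_a) == len(row_b) for row_a, row_b in zip(mask_a, mask_b)
    )
    if not same_shape:
        raise ValueError("masks must have the same shape")
    return [
        [a if a == b else UNCERTAIN for a, b in zip(row_a, row_b)]
        for row_a, row_b in zip(mask_a, mask_b)
    ]


# Two toy 2x3 annotations of the same image.
first = [[BACKGROUND, ARTERY, ARTERY],
         [VEIN, VEIN, BACKGROUND]]
second = [[BACKGROUND, ARTERY, VEIN],
          [VEIN, CROSSING, BACKGROUND]]

merged = merge_annotations(first, second)
print(merged)  # [[0, 1, 4], [2, 4, 0]]
```

Only the pixels marked `UNCERTAIN` after this step would need the senior reviewer's attention, which is what keeps the review workload manageable.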

Putting the Dataset to the Test

To prove its value, the team conducted several validation assessments.

Annotation Consistency

Consistency checks among the annotators yielded strong results. The Mean Intersection over Union (MIoU), a metric that measures how much two segmentations overlap (1.0 means identical), was 0.9759 for the same annotator at different times and 0.9523 between different junior annotators, demonstrating high reliability.
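
For readers unfamiliar with the metric, here is a minimal sketch of one common way to compute a mean IoU between two label masks: the per-class intersection over union, averaged across classes. Which classes the authors averaged over, and whether they averaged per image or over the whole dataset, is not stated here, so the `mean_iou` helper and the label codes below are assumptions for illustration only.

```python
def mean_iou(mask_a, mask_b, classes):
    """Average per-class intersection-over-union between two label masks."""
    ious = []
    for c in classes:
        intersection = union = 0
        for row_a, row_b in zip(mask_a, mask_b):
            for a, b in zip(row_a, row_b):
                in_a, in_b = a == c, b == c
                intersection += in_a and in_b
                union += in_a or in_b
        if union:  # skip classes that appear in neither mask
            ious.append(intersection / union)
    return sum(ious) / len(ious) if ious else float("nan")


# Hypothetical codes: 0 = background, 1 = artery, 2 = vein, 3 = crossing.
annotator_1 = [[0, 1, 1],
               [2, 2, 0]]
annotator_2 = [[0, 1, 2],
               [2, 3, 0]]

score = mean_iou(annotator_1, annotator_2, classes=[1, 2, 3])
print(round(score, 4))  # 0.2778
```

In this toy case the arteries overlap on 1 of 2 pixels (0.5), the veins on 1 of 3 (0.333), and the crossings not at all (0), giving a mean of about 0.278. Scores above 0.95, as reported for the dataset, indicate that the annotators disagreed on only a small fraction of vessel pixels.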

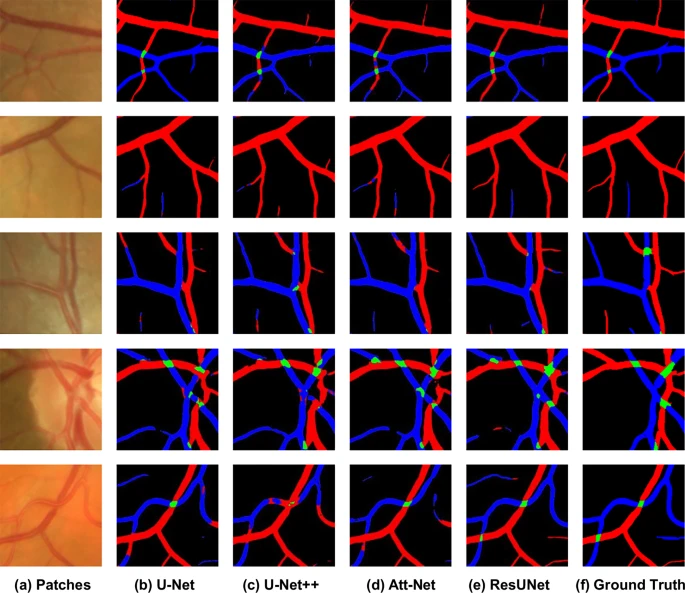

AI Model Benchmarking

Four standard AI segmentation models (U-Net, U-Net++, Att-UNet, and ResUNet) were trained and tested on the Fundus-AVSeg dataset, establishing a baseline for future research. While the models performed well in identifying arteries and veins, they struggled with the much smaller and more complex artery-vein crossings. This highlights a key challenge for the research community to tackle, and Fundus-AVSeg offers a suitable testbed for it.

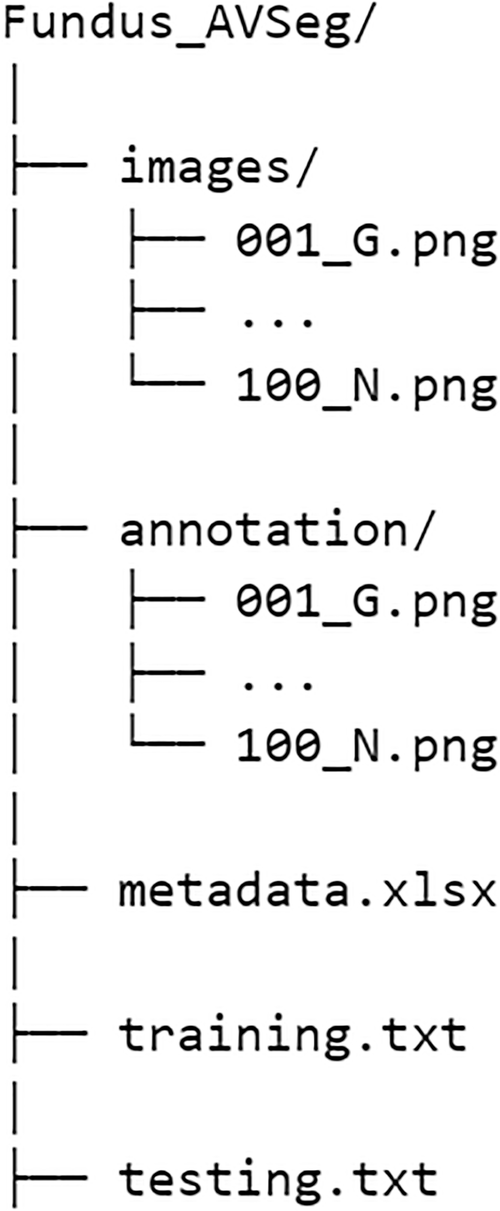

How to Access the Fundus-AVSeg Dataset

The complete dataset is publicly available on Figshare. It includes the original images, the corresponding annotation masks, metadata (disease type, image quality), and recommended training/testing splits for reproducibility.
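
Before training, color-coded annotation masks usually need to be converted into integer class maps. The sketch below assumes the released masks keep the color scheme from the annotation step (red arteries, blue veins, green crossings, white uncertain) as pure RGB values on a black background. That is an assumption rather than a documented file format, so check the dataset's own description on Figshare before relying on it. `COLOR_TO_CLASS` and `decode_mask` are hypothetical names.

```python
# Assumed pure RGB values for the colors named in the annotation step.
COLOR_TO_CLASS = {
    (0, 0, 0): 0,        # background (assumed black)
    (255, 0, 0): 1,      # artery (red)
    (0, 0, 255): 2,      # vein (blue)
    (0, 255, 0): 3,      # artery-vein crossing (green)
    (255, 255, 255): 4,  # uncertain (white)
}


def decode_mask(rgb_rows):
    """Map each (R, G, B) pixel to a class index; fail loudly on unknown colors."""
    decoded = []
    for row in rgb_rows:
        decoded_row = []
        for pixel in row:
            key = tuple(pixel)
            if key not in COLOR_TO_CLASS:
                raise ValueError(f"unexpected mask color: {key}")
            decoded_row.append(COLOR_TO_CLASS[key])
        decoded.append(decoded_row)
    return decoded


# A toy 2x2 mask: artery, vein / crossing, background.
toy_mask = [[(255, 0, 0), (0, 0, 255)],
            [(0, 255, 0), (0, 0, 0)]]
print(decode_mask(toy_mask))  # [[1, 2], [3, 0]]
```

Raising an error on unknown colors, rather than silently mapping them to background, makes it obvious when the real files use a different palette or contain anti-aliased edges.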

Future Directions and Current Limitations

The creators acknowledge that the dataset has some limitations. It currently does not include certain retinal vascular diseases, and its size is still relatively small due to the high cost of detailed annotation. Furthermore, all patients are of Asian descent, which may impact model generalizability across different populations.

Despite these points, Fundus-AVSeg is a significant contribution to the field. The team plans to address these limitations in future updates by expanding disease coverage and collecting more data from diverse sources, paving the way for more powerful and equitable AI tools in healthcare.
