AI Health Advice: ChatGPT vs. Gemini Medical Study Results

The Rise of AI as a Health Advisor

Peripheral artery disease (PAD) is a common but serious circulatory problem where narrowed arteries reduce blood flow to your limbs. As a prevalent form of atherosclerosis, it significantly increases the risk of heart attack and stroke. With more people turning to the internet and artificial intelligence for medical information, it's crucial to know if the answers we get are accurate and understandable. A recent study aimed to find out just that by comparing two of the most powerful AI models: OpenAI's ChatGPT and Google's Gemini.

Pitting AI Giants Against Each Other: The Study

Researchers conducted a head-to-head comparison to evaluate the quality of AI-generated health content on PAD. They took 19 frequently asked questions from the Cleveland Clinic's trusted patient guide and posed them to both ChatGPT 4.0 and Gemini 1.0. To see if the AI's persona made a difference, they used three different prompting styles: a neutral prompt, a prompt asking the AI to respond as if speaking to a patient, and another asking it to respond as if to a physician.

The responses were then rigorously analyzed for accuracy, completeness, readability, and length.
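The design described above amounts to a small grid: 19 questions, three prompting styles, and two models. A minimal sketch of that grid follows. The prompt wordings and question placeholders are hypothetical, since the study's exact text is not reproduced here, and `build_trials` is an illustrative helper rather than anything the researchers published.

```python
from itertools import product

MODELS = ["ChatGPT 4.0", "Gemini 1.0"]

# Hypothetical prompt wordings; the study's exact phrasing is not given here.
PROMPT_STYLES = {
    "neutral": "{question}",
    "patient": "Answer as if speaking to a patient: {question}",
    "physician": "Answer as if speaking to a physician: {question}",
}


def build_trials(questions):
    """Return one trial per (model, prompting style, question) combination."""
    return [
        {
            "model": model,
            "style": style,
            "prompt": PROMPT_STYLES[style].format(question=question),
        }
        for model, style, question in product(MODELS, PROMPT_STYLES, questions)
    ]


# Placeholder questions standing in for the 19 FAQs from the patient guide.
questions = [f"PAD question {i}" for i in range(1, 20)]
trials = build_trials(questions)
print(len(trials))  # 2 models x 3 styles x 19 questions = 114
```

Under this layout each model answers 57 prompts, so every response can be scored against the same question under the same prompting style.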

The Verdict: Accuracy and Completeness

When it came to providing correct information, ChatGPT had a clear edge. The study found that ChatGPT provided responses that were 70% correct and 30% partially correct. Crucially, it gave zero incorrect answers.

Gemini's performance was also strong but fell behind, with 52% correct, 45% partially correct, and 3% incorrect responses. The difference in accuracy was statistically significant, suggesting ChatGPT was the more reliable source in this test.

Readability: A Major Hurdle for Patients

While accuracy is vital, information is useless if the patient can't understand it. National health guidelines recommend that patient education materials be written at a 6th- to 8th-grade reading level.

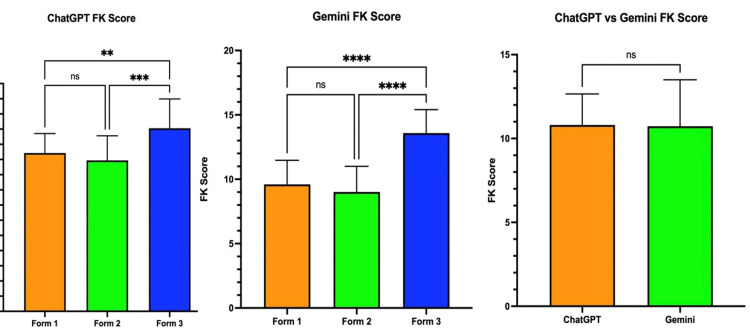

Unfortunately, both AI models missed this mark by a wide margin. The study's analysis showed that both ChatGPT and Gemini produced content at an average Flesch-Kincaid (FK) grade level of around 10.8, close to an 11th-grade reading level. This advanced reading level could be a significant barrier for many patients seeking to understand their condition.
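For readers who want to check a piece of text against that target, the standard Flesch-Kincaid grade formula is 0.39 × (words per sentence) + 11.8 × (syllables per word) − 15.59. The sketch below applies it in Python. The syllable counter is a rough vowel-group heuristic chosen for illustration, not the tool the researchers used, so its scores will differ somewhat from dedicated readability software.

```python
import re


def count_syllables(word: str) -> int:
    """Estimate syllables by counting vowel groups (a rough heuristic)."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    # Discount a likely silent trailing 'e' (e.g. "disease"), but not "-le"/"-ee".
    if word.endswith("e") and not word.endswith(("le", "ee")) and count > 1:
        count -= 1
    return max(count, 1)


def flesch_kincaid_grade(text: str) -> float:
    """Return the Flesch-Kincaid grade level for a block of English text."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    return 0.39 * len(words) / len(sentences) + 11.8 * syllables / len(words) - 15.59


simple = "The cat sat on the mat."
dense = "Peripheral artery disease significantly increases cardiovascular risk."
print(round(flesch_kincaid_grade(simple), 2))
print(round(flesch_kincaid_grade(dense), 2))
```

Short sentences built from one-syllable words score very low, while the second sentence, packed with long clinical terms, scores far above the 6th- to 8th-grade target. That gap shows why medical vocabulary alone can push a response out of range.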

Verbosity vs. Brevity: Word Count Analysis

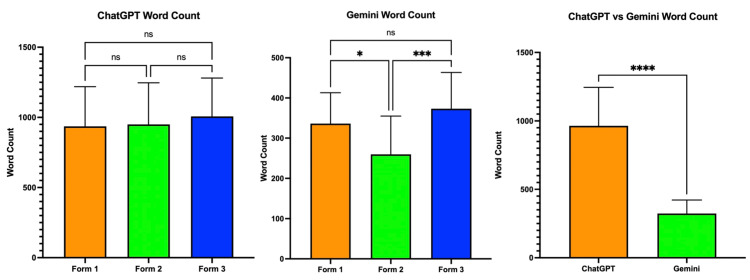

The study also found a significant difference in the length of the answers. ChatGPT's responses were consistently and significantly longer than Gemini's. While more detail can sometimes be helpful, it can also contribute to a higher reading level and overwhelm a user looking for a quick, straightforward answer.

Key Takeaways for Patients and Providers

So, can you trust AI for health advice? This study suggests the answer is a qualified "yes." Both ChatGPT and Gemini can serve as useful supplementary tools for patient education, with ChatGPT currently holding an edge in accuracy.

However, they are not a substitute for professional medical advice. The complex language they use is a major issue that developers need to address. The researchers conclude that for now, AI is best used as a starting point for discussions with a healthcare provider. As AI continues to evolve, further research and refinement will be essential to ensure it is a safe, accessible, and effective resource for everyone.
