
Study Tests ChatGPT On Smoking And Oral Health Advice

Artificial intelligence is quickly changing how people find health information. Instead of sifting through search engine results, many people are turning to conversational AI like ChatGPT for direct, human-like answers. This is especially true for health topics, including the serious effects of smoking on oral health, which range from gum disease and tooth loss to oral cancer.

Given this trend, a new study published in BMC Oral Health investigated a critical question: How good is ChatGPT at answering the public's questions about smoking's impact on dental health? Researchers put the AI to the test to see if its advice is useful, reliable, and easy to act on.

The Rise of AI in Healthcare

For years, platforms like Google and Wikipedia have been the go-to sources for health information. However, using them effectively requires users to navigate multiple sources and judge their credibility, which can be overwhelming. AI chatbots like ChatGPT offer a streamlined alternative by synthesizing information into a single, conversational response.

Since its launch, ChatGPT has been widely used for health inquiries. But this convenience raises important concerns about the accuracy and reliability of its answers. While studies have explored ChatGPT's performance in fields like dermatology, oncology, and general dentistry, its effectiveness in the specific area of smoking-related oral health had not been evaluated until now.

Smoking is a major risk factor for numerous oral health issues, including periodontal disease, tooth loss, and oral cancer. While patients often ask about aesthetic concerns, understanding the severe health risks is vital. This study aimed to evaluate ChatGPT's responses on this topic, focusing on their quality, usefulness, reliability, and readability.

Putting ChatGPT to the Test

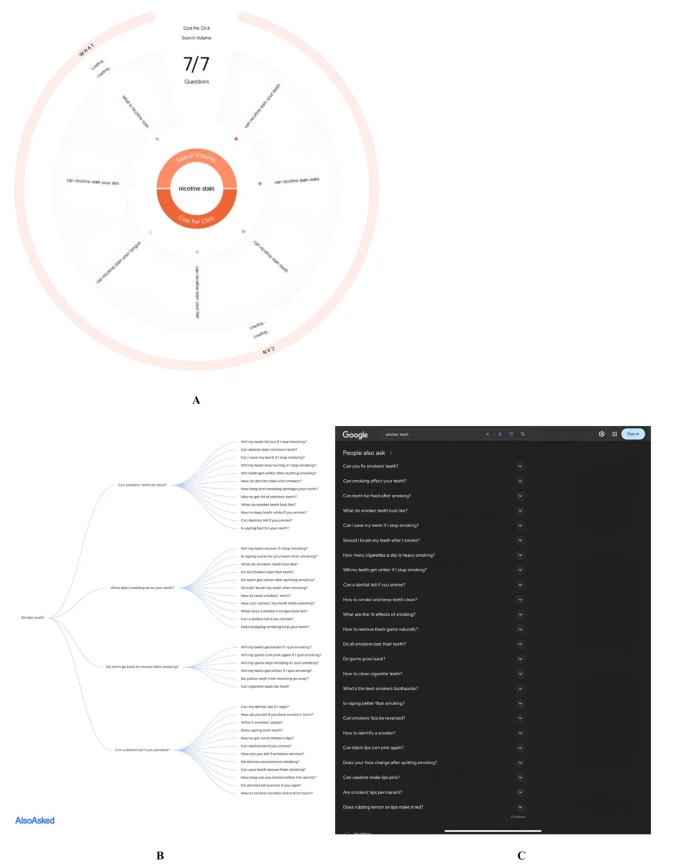

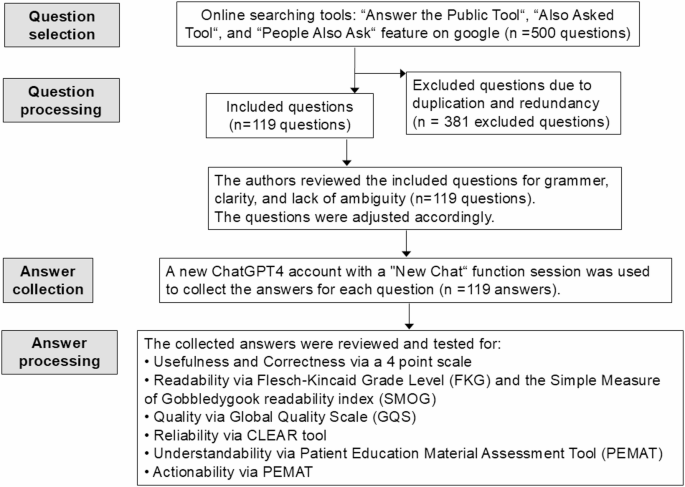

To evaluate ChatGPT 3.5, researchers first gathered 119 common questions about smoking and dental health using online tools like "Answer the Public" and Google’s "People Also Ask" feature. These questions were sorted into five categories:

- Periodontal (gum) conditions

- Teeth and general health

- Oral hygiene and breath

- Oral soft tissues (lips, palate)

- Oral surgery

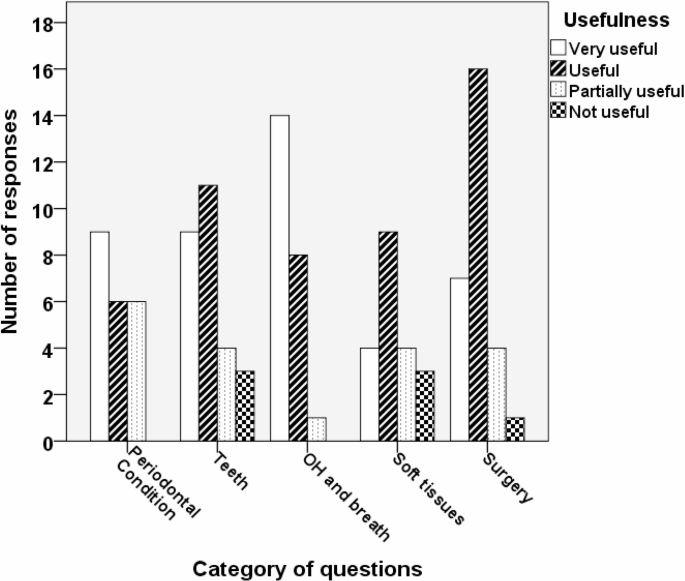

Each question was entered into a fresh ChatGPT session to avoid bias from previous conversations. The AI’s answers were then rigorously evaluated by researchers using a set of validated tools:

- Usefulness: A 4-point scale from "Not useful" to "Very useful."

- Readability: The Flesch-Kincaid (FKG) and SMOG indices, which measure the educational level needed to understand the text.

- Quality: The Global Quality Scale (GQS), a 5-point scale from "Poor" to "Excellent."

- Reliability: The CLEAR tool, which assesses content for completeness, accuracy, and evidence.

- Understandability & Actionability: The Patient Education Materials Assessment Tool (PEMAT), which checks if the information is easy to grasp and provides clear, actionable steps.
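The two readability indices listed above are plain formulas over word, sentence, and syllable counts: Flesch-Kincaid Grade Level = 0.39 × (words / sentences) + 11.8 × (syllables / words) − 15.59, and SMOG = 1.0430 × √(polysyllables × 30 / sentences) + 3.1291, where a polysyllable is a word of three or more syllables. The Python sketch below implements those published formulas. The syllable counter is a rough vowel-group heuristic chosen here for illustration (an assumption, not what the study's software used), so its scores will differ somewhat from dedicated readability tools, and the function names are hypothetical.

```python
import math
import re


def count_syllables(word: str) -> int:
    """Approximate syllables by counting vowel groups (heuristic, not a dictionary lookup)."""
    w = re.sub(r"[^a-z]", "", word.lower())
    if not w:
        return 0
    groups = len(re.findall(r"[aeiouy]+", w))
    # Treat a trailing 'e' as silent ("bone"), but keep it in "-le" endings ("simple").
    if w.endswith("e") and not w.endswith("le") and groups > 1:
        groups -= 1
    return max(groups, 1)


def _sentences(text: str) -> list[str]:
    # Naive split on terminal punctuation; abbreviations such as "e.g." will over-split.
    return [s for s in re.split(r"[.!?]+", text) if s.strip()]


def _words(text: str) -> list[str]:
    return re.findall(r"[A-Za-z']+", text)


def flesch_kincaid_grade(text: str) -> float:
    """Flesch-Kincaid Grade Level: 0.39*(words/sentences) + 11.8*(syllables/words) - 15.59."""
    sentences, words = _sentences(text), _words(text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    return 0.39 * len(words) / len(sentences) + 11.8 * syllables / len(words) - 15.59


def smog_grade(text: str) -> float:
    """SMOG grade: 1.0430*sqrt(polysyllables * 30/sentences) + 3.1291."""
    sentences = _sentences(text)
    if not sentences:
        raise ValueError("text must contain at least one sentence")
    polysyllables = sum(1 for w in _words(text) if count_syllables(w) >= 3)
    return 1.0430 * math.sqrt(polysyllables * 30 / len(sentences)) + 3.1291


simple = "The cat sat. The dog ran."
technical = "Periodontal inflammation accelerates alveolar bone destruction."
print(round(flesch_kincaid_grade(simple), 2), round(smog_grade(simple), 2))
print(round(flesch_kincaid_grade(technical), 2), round(smog_grade(technical), 2))
```

The jargon-heavy sentence scores far higher on both indices than the plain one, because longer sentences and more polysyllabic words both push the grade level up. That is the same mechanism that makes technical answers, such as those on oral surgery, harder to read.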

The Verdict: How Did ChatGPT Perform?

The study revealed a mixed but generally positive performance. For the most part, ChatGPT provided valuable information, but it struggled in key areas, particularly with providing advice that is both easy to read and practical for users.

Here’s a breakdown of the key findings:

-

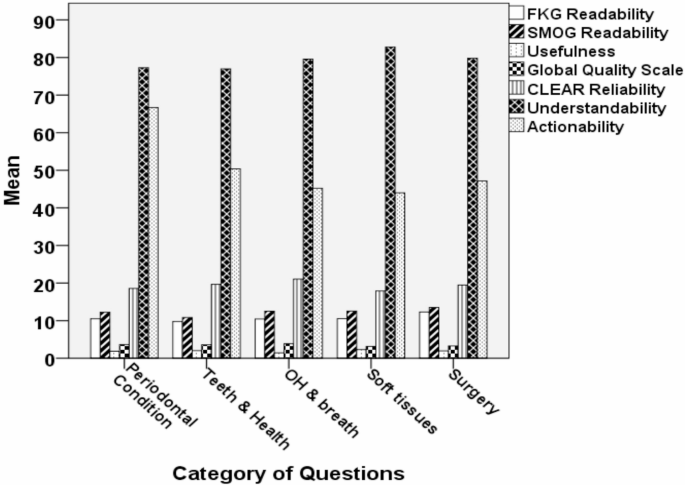

Usefulness: The majority of responses were rated as either "very useful" (36.1%) or "useful" (42.0%). Questions about oral hygiene and breath received the highest usefulness scores.

-

Readability: This was a significant weakness. Over a third of the responses (35.3%) were considered "very difficult to read," requiring a university-level education to understand. Answers related to oral surgery were the most challenging.

-

Quality and Reliability: The overall quality of the information was moderate to good. Similarly, reliability was rated highly, with most responses (62.2%) categorized as "very good content" by the CLEAR tool.

-

Understandability vs. Actionability: While the content was generally easy to understand (90% of responses scored acceptably), it fell short on being actionable. Only 23.5% of the answers provided clear, practical steps that a user could easily follow. Responses about periodontal conditions were the most actionable.
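Assuming each reported percentage is taken over all 119 questions (the article does not state the denominators explicitly), the shares above translate into approximate response counts:

$$
\begin{aligned}
\text{very useful} &: 0.361 \times 119 \approx 43 \\
\text{useful} &: 0.420 \times 119 \approx 50 \\
\text{very difficult to read} &: 0.353 \times 119 \approx 42 \\
\text{very good content (CLEAR)} &: 0.622 \times 119 \approx 74 \\
\text{clearly actionable} &: 0.235 \times 119 \approx 28
\end{aligned}
$$

Taken together, roughly 93 of the 119 answers (about 78%) were rated useful or very useful, while only about 28 offered clearly actionable steps.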

What This Means for Patients and Professionals

The study suggests that while ChatGPT can be a good starting point for general knowledge, it has clear limitations. The AI performed well on broad questions but struggled to provide the detailed, nuanced information needed for more specialized topics, such as the effects of smoking on oral soft tissues or post-surgery care.

One of the biggest takeaways is the gap between understandability and actionability. A person might understand that smoking is bad for their gums, but ChatGPT often fails to provide concrete, easy-to-follow steps to improve their oral hygiene. This lack of actionable guidance limits its effectiveness as a tool for promoting real behavioral change.
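PEMAT turns each dimension into a percentage: every applicable item is rated Agree (1 point) or Disagree (0 points), items marked not applicable are dropped, and the score is points earned divided by applicable items, times 100. The minimal Python sketch below shows how the same answer can pass on understandability yet fail on actionability. The function names and ratings are hypothetical, and the 70% cut-off for "acceptable" is a convention used in many PEMAT studies; the article does not say which threshold this study applied.

```python
from typing import Optional, Sequence


def pemat_score(ratings: Sequence[Optional[bool]]) -> float:
    """PEMAT-style percentage: Agree = 1, Disagree = 0, N/A (None) items dropped."""
    applicable = [r for r in ratings if r is not None]
    if not applicable:
        raise ValueError("at least one item must be applicable")
    return 100.0 * sum(applicable) / len(applicable)


def share_meeting_threshold(scores: Sequence[float], threshold: float = 70.0) -> float:
    """Percentage of responses whose score reaches the cut-off (70% is an assumed convention)."""
    if not scores:
        raise ValueError("no scores given")
    return 100.0 * sum(s >= threshold for s in scores) / len(scores)


# Hypothetical item ratings for a single chatbot answer.
understandability = [True, True, True, True, False, None]
actionability = [True, False, False, None]
print(pemat_score(understandability))            # 80.0, meets a 70% cut-off
print(round(pemat_score(actionability), 1))      # 33.3, falls short
```

An answer that explains the risk clearly but never tells the reader what to do next earns most understandability points and few actionability points, which is the pattern the study reports at scale.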

Furthermore, the poor readability of many responses is a major barrier. Health information should be accessible to everyone, regardless of their educational background. The complex language used in many of ChatGPT's answers, especially on technical topics like surgery, makes the information less useful for the average person.

Final Thoughts: A Useful Tool with Caveats

This research suggests that ChatGPT can be a valuable tool for general education on the effects of smoking on oral health. It delivers information that is largely reliable, understandable, and of moderate to good quality. However, it is not a substitute for professional dental or medical advice.

The key challenges for ChatGPT are improving the readability of its responses and providing more actionable, personalized guidance. While it can supplement health education, users should be aware of its limitations and always consult a qualified dental professional for diagnosis, treatment, and personalized advice. Future advancements that integrate visuals, audio, or interactive elements could further enhance its role in public health communication.
