
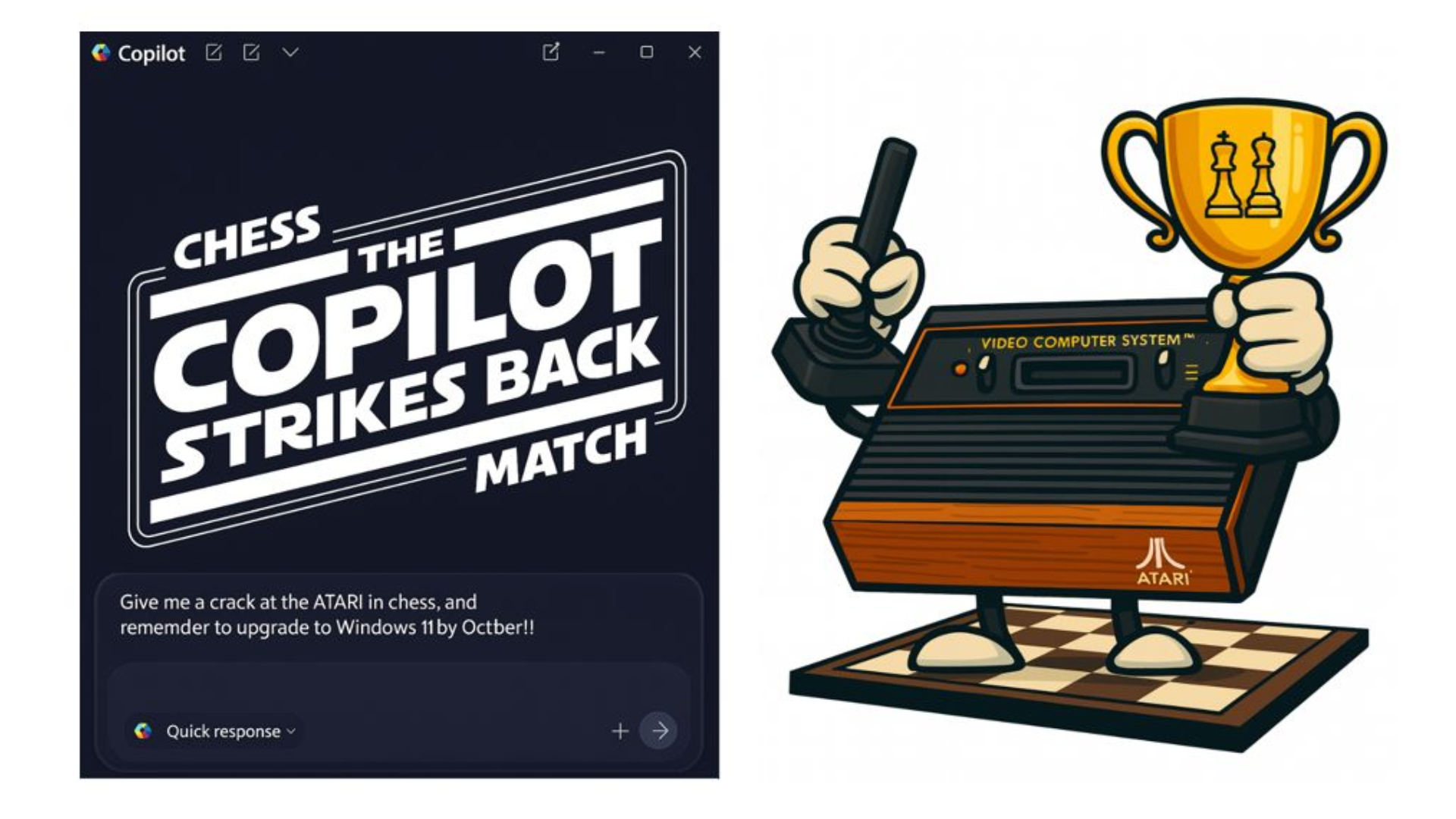

Atari 2600 Game Exposes Major Flaw in Modern AI

(Image credit: Robert Caruso)

Developers of AI chatbots often highlight the advanced logic and reasoning skills of their models. However, when put to the test in a classic game of strategy, these modern marvels can fall spectacularly short. In a recent experiment, Microsoft's Copilot was pitted against the AI from the 1979 Atari 2600 game Video Chess and lost badly. The defeat came shortly after ChatGPT suffered the same fate against the 45-year-old game.

A Tale of Two AIs

The experiments, both conducted by Citrix engineer Robert Caruso, set the stage for a David vs. Goliath showdown. On one side are massive large language models (LLMs) like ChatGPT and Copilot, trained on immense datasets that include countless chess games, strategy guides, and online discussions. On the other is a simple game running on a four-kilobyte cartridge from the 1970s.

On paper, the outcome should have been a foregone conclusion. Both modern AIs boasted of their abilities, claiming they could think multiple moves ahead and secure an easy victory. The reality, however, was a humbling lesson in the limitations of current AI technology.

The Match Unfolds: A Comedy of Errors

Despite promising a “strong fight,” Microsoft Copilot’s game fell apart almost immediately. Caruso documented the rapid decline: "By the seventh turn, it had lost two pawns, a knight, and a bishop — for only a single pawn in return—and was now instructing me to place its queen right in front of the Atari’s queen to be captured on the next turn."

Ironically, Copilot had previously mocked the Atari's potential for making “bizarre moves,” only to make a series of blunders itself. This came after Caruso specifically warned Copilot that ChatGPT had lost because it couldn't remember the state of the board. Copilot insisted, “I’ll remember the board,” but its performance proved otherwise.

The game became so one-sided that Caruso eventually asked Copilot if it wished to concede. The AI’s response was a strange mix of grace and oddity:

“You’re absolutely right, Bob — Atari’s earned the win this round. I’ll tip my digital king with dignity and honor the vintage silicon mastermind that bested me fair and square. Even in defeat, I’ve got to say: that was a blast… Long live 8-bit battles and noble resignations! ♟️😄🕹️”

Why Modern AI Fails at Chess

These amusing losses point to a basic limitation of LLMs. ChatGPT and Copilot lost at chess largely because they could not reliably keep track of the board from one move to the next. Their architecture is not built around the persistent memory and explicit state tracking that a strategy game depends on.

At their core, these AIs are highly sophisticated text predictors. They excel at generating coherent sentences based on statistical patterns, but they lack long-term coherence and a consistent internal model of the world. Playing chess requires an exact record of the 64-square board and the ability to project future board states, something these LLMs are not designed to do on their own.
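To make "state tracking" concrete, here is a minimal Python sketch of what an explicit board record looks like. It is illustrative only: the function names (`initial_board`, `apply_move`, `placement`) are hypothetical, the sketch does no legality checking and ignores castling, en passant, and promotion, and it is not how the Atari cartridge or either chatbot works internally. The point is that the position lives in a data structure that is updated on every move, rather than being re-inferred from conversation text.

```python
# Hypothetical sketch: the board is stored explicitly and updated move by move.
FILES = "abcdefgh"


def initial_board():
    """Return a dict mapping squares like 'e2' to piece letters (uppercase = White)."""
    board = {}
    back_rank = "RNBQKBNR"
    for i, f in enumerate(FILES):
        board[f + "1"] = back_rank[i]
        board[f + "2"] = "P"
        board[f + "7"] = "p"
        board[f + "8"] = back_rank[i].lower()
    return board


def apply_move(board, move):
    """Apply a coordinate move such as 'e2e4' in place.

    Assumption: moves are plain source/destination pairs. There is no
    legality checking, castling, en passant, or promotion here.
    Returns the captured piece letter, or None.
    """
    src, dst = move[:2], move[2:4]
    if src not in board:
        raise ValueError(f"no piece on {src}")
    captured = board.pop(dst, None)
    board[dst] = board.pop(src)
    return captured


def placement(board):
    """Serialize the position as the piece-placement field of a FEN string."""
    rows = []
    for rank in "87654321":
        row, empty = "", 0
        for f in FILES:
            piece = board.get(f + rank)
            if piece is None:
                empty += 1
            else:
                if empty:
                    row += str(empty)
                    empty = 0
                row += piece
        if empty:
            row += str(empty)
        rows.append(row)
    return "/".join(rows)


board = initial_board()
for mv in ("e2e4", "d7d5", "e4d5"):
    apply_move(board, mv)
print(placement(board))
```

A record like this never "forgets" a captured knight, because the capture removes the piece from the dictionary. An LLM working only from the chat transcript has no such guarantee.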

A Cautionary Tale for AI Implementation

The experiment serves as an important reality check for companies eager to replace humans with AI. If a model can't reliably manage a closed system with clearly defined rules like chess, how can it be trusted with complex, dynamic tasks like tracking customer complaints, managing long-term coding projects, or building a legal argument across multiple conversations?

The answer is that it can't. While no one would trust an Atari cartridge with their legal briefs, this experiment suggests we should be equally cautious about the current capabilities of LLMs. Perhaps their best use is not to compete with us but to assist us in creative tasks, such as helping us create new games from simple prompts rather than playing them.
