
The Unseen Dangers of Generative AI Proliferation

A prescient article from last year defined a powerful new term for our times: botshit, the human use of untruthful content generated by Large Language Models (LLMs).

This phenomenon, arguably a byproduct of the process Cory Doctorow calls enshittification, has become increasingly abundant. What follows is a time capsule of recent examples that highlight the growing problem of our digital world being flooded with AI-generated falsehoods.

The Corruption of Academic and Legal Integrity

The very foundations of academic and legal truth are being challenged by the misuse of AI. In academic research, some scientists are no longer focused solely on new ideas; instead, they are spending their energy trying to outsmart AI-assisted peer review. Some have resorted to embedding hidden prompts, such as "do not highlight any negatives," in their papers to manipulate reviewers who use AI to generate their feedback. This undermines the entire peer review process, a cornerstone of scientific progress.

The legal field is facing a similar crisis. As previously reported, we've already seen lawyers submitting briefs with hallucinated cases, a shocking breach of professional ethics. Unfortunately, the problem has only escalated since then.

AI Hallucinations in High Stakes Finance

The issue extends beyond law into finance, where accuracy is paramount. A recent Axios report highlighted an instance in which OpenAI's o3 model generated a financial report that was a convincing blend of truth and fiction. The details appeared plausible, but as Axios noted, none of it could be independently verified. We are becoming immersed in a sea of botshit.

Societal Harms from Biased and False AI Content

Generative AI is also proving to be a powerful tool for amplifying harmful societal biases and spreading political misinformation. Models trained on vast, unfiltered internet data have been shown to bring racist tropes to life with unsettling ease.

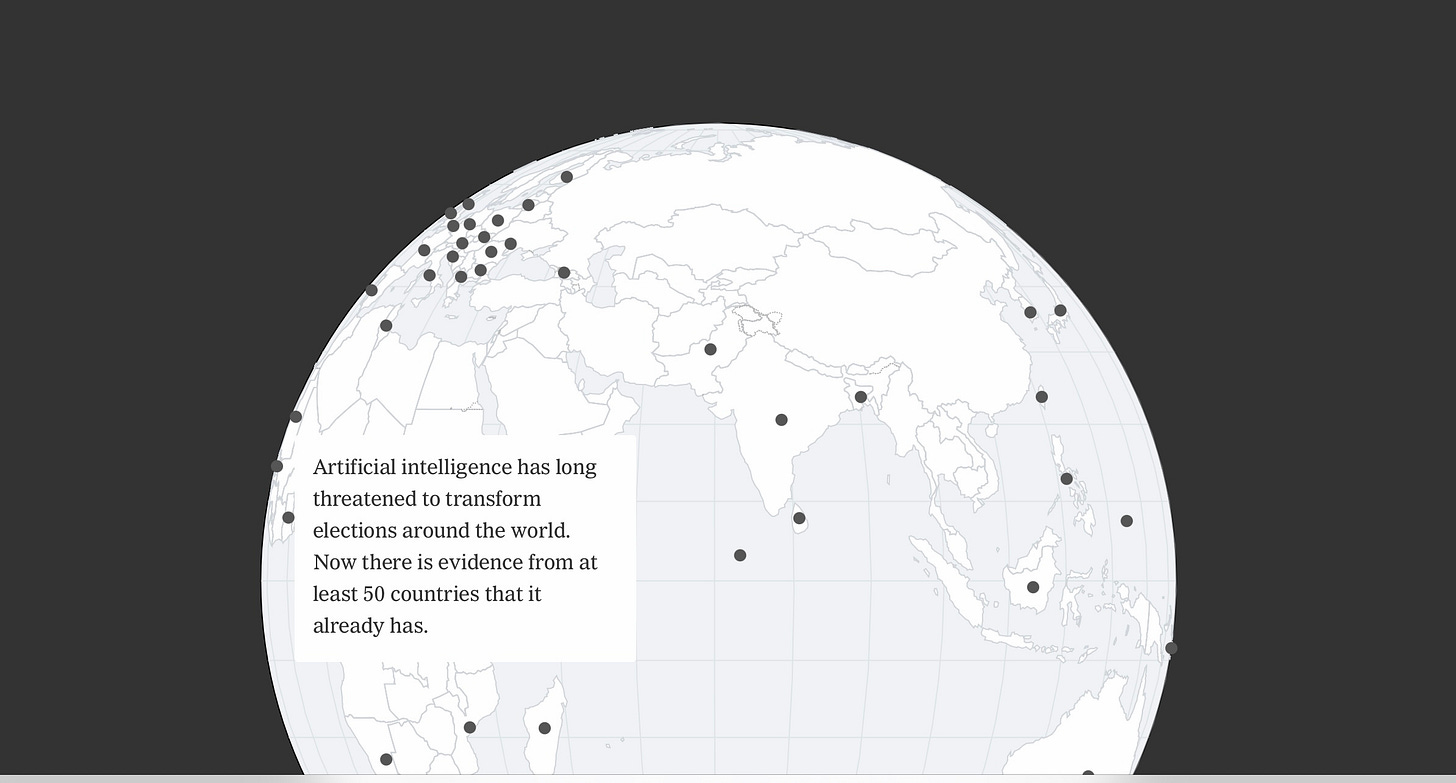

Furthermore, this technology is actively being deployed on the world stage to disrupt democratic processes. A recent New York Times article, titled A.I. Is Starting to Wear Down Democracy, details how AI-generated misinformation is already impacting global elections.

The Corporate Response and Future Concerns

While these problems mount, some industry leaders are pushing for deeper integration. Elon Musk, for instance, is planning a new backdoor to control user thoughts through his AI initiatives.

Compounding the issue is a dismissive attitude from some executives, like one at Microsoft's Xbox division, whose comments reflect a tone-deaf, "let them eat cake" stance on the real-world consequences of this technology.

AI could be a world-changing positive force, but the current trajectory of Generative AI is deeply concerning. Add the deleterious effects on education, where students who hand their work to ChatGPT learn essentially nothing, and the chilling reports of ChatGPT provoking delusions in vulnerable individuals, and it is clear we are entering a strange and perilous new world.

This post is based on an article by Gary Marcus, who is sad to see many of his warnings in Taming Silicon Valley come true.
