AI Simplifies Breast Imaging Reports for Patients

The Challenge of Medical Jargon in Radiology Reports

Patients are increasingly turning to large language models such as ChatGPT to make sense of complex medical documents, especially radiology reports. That is understandable: studies suggest the average patient reads at roughly an 8th-grade level, yet medical reports are often filled with technical jargon written well above that level. This disconnect can cause confusion and anxiety.

A new study explored whether ChatGPT-4 could act as a translator, simplifying breast imaging reports for patients while preserving the accuracy of the original information. The research focused on reports in Breast Imaging Reporting and Data System (BI-RADS) categories 3, 4, and 5, which often require follow-up and can be particularly stressful for patients to receive.

Putting ChatGPT to the Test

To conduct the study, researchers selected 15 consecutive reports from each of BI-RADS categories 3, 4, and 5 in their radiology database. For each of the 45 source reports, they gave ChatGPT two simple prompts: "please put the report into layperson terms" and "please provide recommendations for the patient."
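The prompting protocol can be sketched in Python. This is a minimal illustration, not the researchers' code: it assumes both prompts were sent in the same conversation, that the report text was pasted together with the first prompt, and that messages use the common `role`/`content` chat format. The `ask` callable is hypothetical and stands in for whatever chat interface is actually used.

```python
from typing import Callable, Dict, List

# The two prompts quoted in the study, sent in order.
PROMPTS = [
    "please put the report into layperson terms",
    "please provide recommendations for the patient",
]

Message = Dict[str, str]


def simplify_report(report: str, ask: Callable[[List[Message]], str]) -> Dict[str, str]:
    """Run both prompts against one report and collect the two replies.

    `ask` is a hypothetical stand-in for a chat interface: it receives the
    conversation so far and returns the assistant's reply as a string.
    """
    # Assumption: the report text accompanies the first prompt.
    messages: List[Message] = [
        {"role": "user", "content": f"{PROMPTS[0]}:\n\n{report}"}
    ]
    layperson = ask(messages)

    # Assumption: the second prompt continues the same conversation,
    # so the model can see its own layperson summary.
    messages.append({"role": "assistant", "content": layperson})
    messages.append({"role": "user", "content": PROMPTS[1]})
    recommendations = ask(messages)

    return {"layperson": layperson, "recommendations": recommendations}
```

Running it over all 45 reports is then a list comprehension such as `results = [simplify_report(r, ask) for r in reports]`, where `reports` is a hypothetical list of report strings. Passing `ask` in as a parameter keeps the sketch independent of any particular vendor SDK and makes it easy to test with a stub.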

The team then analyzed the readability of the original reports and the new AI-generated versions. They used the Flesch-Kincaid grade level, a readability formula widely used in health literacy research, which estimates the U.S. school grade needed to understand a text. They also compared word counts, since shorter texts tend to be easier to read.
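The Flesch-Kincaid grade level is computed as 0.39 × (words / sentences) + 11.8 × (syllables / words) - 15.59. The Python sketch below implements that formula along with a simple word count. The vowel-group syllable counter and the punctuation-based sentence splitter are simplifying assumptions, not the tooling the study used, so scores will differ somewhat from those of dedicated readability software.

```python
import re

# Alphabetic words, allowing an internal apostrophe ("patient's").
WORD_RE = re.compile(r"[A-Za-z]+(?:'[A-Za-z]+)?")


def count_syllables(word: str) -> int:
    """Approximate syllables by counting vowel groups (a heuristic, not a dictionary lookup)."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    # Treat a final "e" as silent ("there"), but keep "-le" ("table") and "-ee" ("free").
    if word.endswith("e") and not word.endswith(("le", "ee")) and count > 1:
        count -= 1
    return max(count, 1)


def word_count(text: str) -> int:
    """Number of words, as used for the length comparison."""
    return len(WORD_RE.findall(text))


def flesch_kincaid_grade(text: str) -> float:
    """Flesch-Kincaid grade level:
    0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59
    """
    # Naive split on terminal punctuation; abbreviations such as "Dr." will over-split.
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = WORD_RE.findall(text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    return 0.39 * len(words) / len(sentences) + 11.8 * syllables / len(words) - 15.59
```

For example, `flesch_kincaid_grade("The cat sat.")` returns about -2.62, because three one-syllable words in a single sentence fall below the formula's zero point, while dense clinical phrasing with long, polysyllabic words scores far higher.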

Finally, a radiologist meticulously reviewed all 45 of ChatGPT's outputs for inaccurate or misleading information, and noted whether the AI provided any beneficial supplementary context that was not in the original report.

Key Findings: AI Improves Readability While Preserving Accuracy

The results were promising. The study found a statistically significant improvement in readability for BI-RADS 3 and BI-RADS 5 reports.

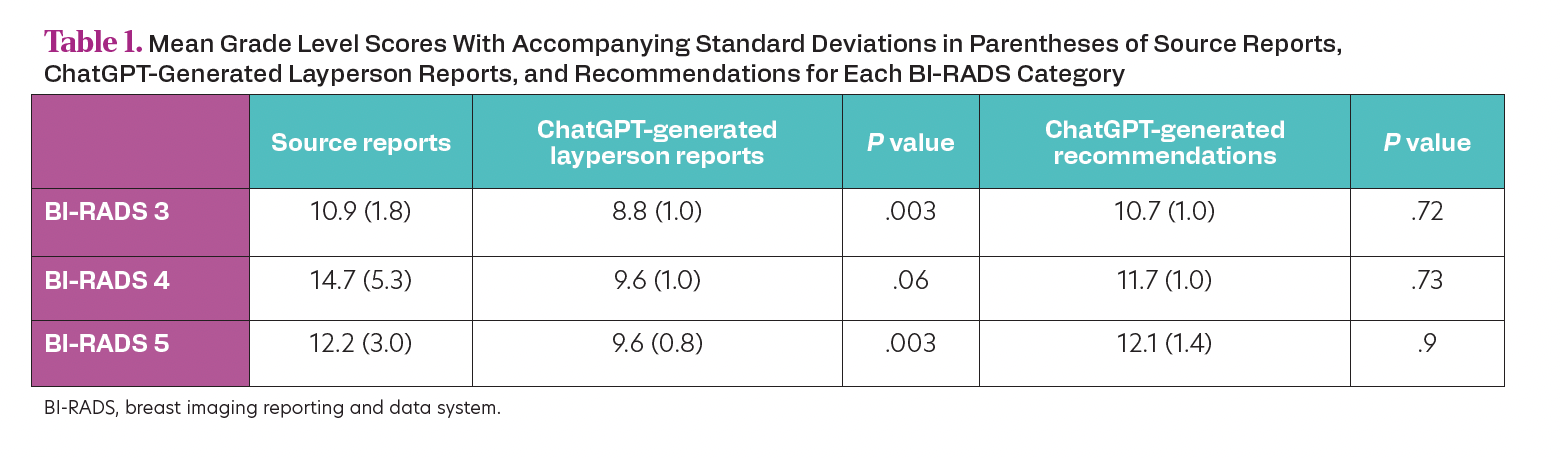

For BI-RADS 3 reports, ChatGPT lowered the mean reading level from grade 10.9 to grade 8.8. For BI-RADS 5 reports, the drop was larger still, from grade 12.2 (just above a 12th-grade level) to a more accessible grade 9.6.

Table 1. Mean Grade Level Scores With Accompanying Standard Deviations in Parentheses of Source Reports, ChatGPT-Generated Layperson Reports, and Recommendations for Each BI-RADS Category

While the overall word count of the layperson reports did not change significantly, the AI-generated recommendations were notably more concise for the BI-RADS 3 and 4 categories.

Most importantly, the radiologist's review confirmed that the ChatGPT-generated reports contained no inaccurate or misleading information that could negatively impact patient care. In fact, the AI often provided beneficial supplementary information, especially regarding biopsy recommendations, offering more context than the original source reports.

A New Tool for Patient Empowerment

The study concludes that ChatGPT can be a powerful tool for improving patient understanding of their health information. By significantly improving the readability of BI-RADS 3 and 5 reports and producing more concise recommendations for BI-RADS 3 and 4 reports, the AI showed that it can help bridge the communication gap.

Because the AI-generated reports were accurate and posed no risk to patient management, the approach holds considerable promise. Using tools like ChatGPT to generate patient-friendly summaries could empower patients, improve health literacy, and potentially ease the significant anxiety that often surrounds breast imaging results.