Intel Releases Free AI Tool to Judge Game Graphics

(Image credit: Intel)

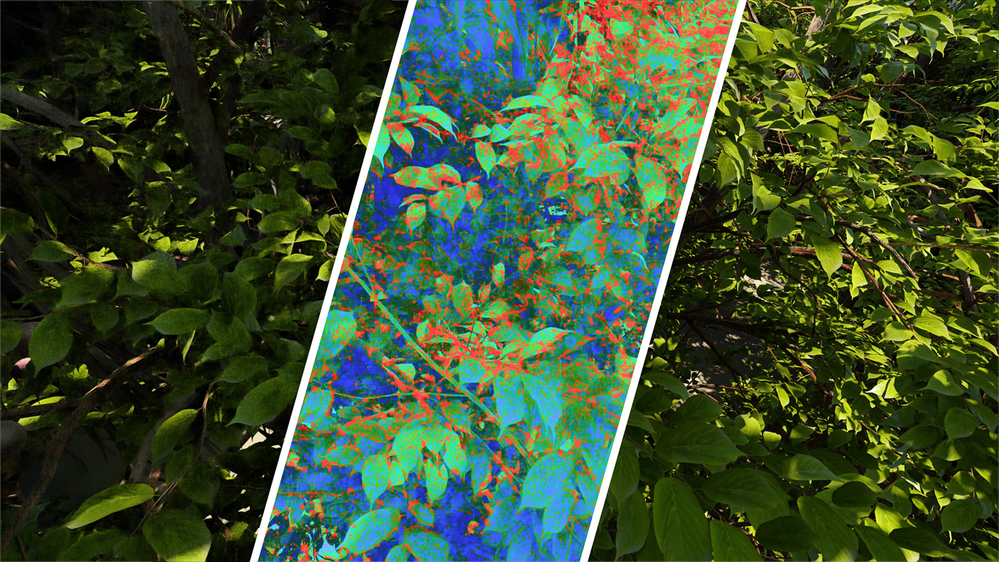

Intel wants to make the evaluation of image quality in modern video games more objective. The company has released a new AI-powered tool, the Computer Graphics Video Quality Metric (CGVQM), which is now available on GitHub as a PyTorch application.

In the current gaming landscape, a frame is rarely rendered natively without some form of enhancement. Upscalers (DLSS, FSR, XeSS) and frame generation are now commonplace. While they boost performance, they can also introduce visual artifacts such as ghosting, flickering, aliasing, and disocclusion errors. These issues are usually discussed subjectively, and assigning them a concrete, objective score has long been a significant challenge.

The Problem with Old Metrics

For years, metrics like the peak signal-to-noise ratio (PSNR) have been used to quantify video image quality. However, these tools have limitations and are often misused. PSNR, for instance, is a pixel-wise error measure that is mainly used to evaluate the quality loss from lossy video compression.

This makes it less suitable for real-time graphics, where compression artifacts aren't the main concern. PSNR alone simply can't account for the wide range of unique visual issues that arise from modern rendering techniques.
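To make that limitation concrete, here is a minimal sketch of PSNR in plain Python, using the standard definition 10 * log10(MAX^2 / MSE). The tiny 4x4 frames and the two distortions are made-up illustrations, not data from Intel's work.

```python
import math

def psnr(reference, distorted, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized frames.

    Frames are nested lists of pixel intensities (rows of values).
    """
    ref = [p for row in reference for p in row]
    dis = [p for row in distorted for p in row]
    if len(ref) != len(dis):
        raise ValueError("frames must have the same number of pixels")
    mse = sum((a - b) ** 2 for a, b in zip(ref, dis)) / len(ref)
    if mse == 0:
        return math.inf  # identical frames
    return 10.0 * math.log10(max_value ** 2 / mse)

# Hypothetical flat gray 4x4 reference frame.
reference = [[128] * 4 for _ in range(4)]

# Distortion A: every pixel is off by 2 (a faint, uniform brightness shift).
uniform_shift = [[130] * 4 for _ in range(4)]

# Distortion B: a single pixel is off by 8 (one isolated speck).
single_speck = [row[:] for row in reference]
single_speck[0][0] = 136

psnr_shift = psnr(reference, uniform_shift)
psnr_speck = psnr(reference, single_speck)
print(f"uniform shift: {psnr_shift:.2f} dB")
print(f"single speck:  {psnr_speck:.2f} dB")
```

Both distortions have the same mean squared error, so they receive the same PSNR (about 42.1 dB) even though they look nothing alike. PSNR also scores each frame in isolation, so it has no notion of artifacts that only show up over time, such as flicker or ghosting.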

Intel's Twofold Solution: A New Dataset and AI Model

To build a better tool, researchers at Intel adopted a two-part approach detailed in their research paper, "CGVQM+D: Computer Graphics Video Quality Metric and Dataset."

First, they created a new video dataset called the Computer Graphics Video Quality Dataset (CGVQD). This dataset is specifically designed to include a wide variety of visual degradations caused by modern techniques, including path tracing, neural denoising, neural supersampling (like FSR, XeSS, and DLSS), Gaussian splatting, frame interpolation, and variable-rate shading.

Second, the researchers used this dataset to train their new AI model, the Computer Graphics Video Quality Metric (CGVQM). The goal was to create a scalable tool that could produce a reliable image quality rating that accounts for this broad spectrum of potential distortions.

Training an AI to See Like a Human

To ensure their AI's ratings aligned with human perception, the researchers first established a ground truth. They presented the CGVQD dataset to a group of human observers, who rated the severity of the distortions in each video on a scale from "imperceptible" to "very annoying."
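As a rough illustration of how such ratings can become a numeric ground truth, the sketch below averages each clip's labels into a mean opinion score. Only the two endpoint labels come from the article. The three intermediate labels, the 5-to-1 numbering, the clip names, and the ratings are assumptions for illustration, borrowed from the conventional five-level impairment scale, and the researchers' actual procedure may differ.

```python
from statistics import mean

# Assumed mapping: only "imperceptible" and "very annoying" are named in the
# article; the middle labels and the numeric values are illustrative.
IMPAIRMENT_SCALE = {
    "imperceptible": 5,
    "perceptible but not annoying": 4,
    "slightly annoying": 3,
    "annoying": 2,
    "very annoying": 1,
}

def mean_opinion_score(labels):
    """Average the numeric values of one clip's ratings."""
    return mean(IMPAIRMENT_SCALE[label] for label in labels)

# Hypothetical ratings from a handful of observers for two clips.
ratings = {
    "clip_a": ["imperceptible", "imperceptible", "perceptible but not annoying"],
    "clip_b": ["annoying", "very annoying", "slightly annoying", "annoying"],
}

scores = {clip: mean_opinion_score(labels) for clip, labels in ratings.items()}
for clip, score in scores.items():
    print(f"{clip}: {score:.2f}")
```

A model trained against scores like these is being asked to predict what a typical viewer would report, rather than to measure raw pixel error.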

With this human-verified baseline, they trained a neural network to identify these same distortions. They chose a 3D convolutional neural network (CNN) architecture based on a residual neural network (ResNet), specifically a 3D-ResNet-18 model. The 3D design matters: such a network analyzes not only spatial information (the 2D pixel grid) but also temporal information, which lets it pick up artifacts that only appear over time, across multiple frames.
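The difference between 2D and 3D filtering can be shown with a toy example. The sketch below implements a plain 3D convolution (cross-correlation, as in deep learning frameworks) over a time, height, width volume and applies a hand-written temporal-difference kernel. This is not CGVQM's code: the real model learns many kernels inside a 3D-ResNet-18, and the clips and kernel here are assumptions chosen to make the effect visible.

```python
def conv3d_valid(video, kernel):
    """Cross-correlate a (T, H, W) video with a (kt, kh, kw) kernel, no padding."""
    T, H, W = len(video), len(video[0]), len(video[0][0])
    kt, kh, kw = len(kernel), len(kernel[0]), len(kernel[0][0])
    out = []
    for t in range(T - kt + 1):
        plane = []
        for y in range(H - kh + 1):
            row = []
            for x in range(W - kw + 1):
                acc = 0.0
                for dt in range(kt):
                    for dy in range(kh):
                        for dx in range(kw):
                            acc += video[t + dt][y + dy][x + dx] * kernel[dt][dy][dx]
                row.append(acc)
            plane.append(row)
        out.append(plane)
    return out

def flat_frame(value, size=2):
    """A size x size frame filled with one intensity."""
    return [[value] * size for _ in range(size)]

def energy(response):
    """Sum of absolute filter responses over the whole output volume."""
    return sum(abs(v) for plane in response for row in plane for v in row)

# Two hypothetical 4-frame clips: one static, one whose brightness flickers.
static_clip = [flat_frame(0.5) for _ in range(4)]
flicker_clip = [flat_frame(0.5 if t % 2 == 0 else 0.7) for t in range(4)]

# Hand-written kernel of shape (2, 1, 1): next frame minus current frame.
temporal_kernel = [[[-1.0]], [[1.0]]]

static_energy = energy(conv3d_valid(static_clip, temporal_kernel))
flicker_energy = energy(conv3d_valid(flicker_clip, temporal_kernel))
print(static_energy, flicker_energy)
```

Every frame in both clips is perfectly uniform, so a filter that looks at one frame at a time has nothing to detect. The kernel that spans two frames responds with zero on the static clip and strongly on the flickering one, which is the kind of temporal signal a 3D network has access to.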

How Does It Perform?

The paper claims that the CGVQM model outperforms nearly every other similar image quality tool when tested on their custom dataset. The more intensive CGVQM-5 model's performance was second only to the human baseline scores. This indicates a high degree of accuracy in identifying and rating visual artifacts.
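The article does not say how agreement with human ratings was scored. One common approach for quality metrics, shown here purely as an assumption, is rank correlation: a metric does well if it orders the clips the same way human viewers do. The sketch below computes Spearman's rank correlation in plain Python, and all scores in it are invented.

```python
def average_ranks(values):
    """1-based ranks, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # average rank of the tied run
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5

def spearman(xs, ys):
    """Spearman correlation: Pearson correlation of the ranks."""
    return pearson(average_ranks(xs), average_ranks(ys))

# Hypothetical mean human scores for five clips, plus two made-up metrics.
human_scores = [4.7, 2.0, 3.5, 1.2, 4.1]
metric_a = [0.91, 0.40, 0.62, 0.15, 0.80]  # orders clips exactly like humans
metric_b = [0.50, 0.45, 0.90, 0.60, 0.30]  # orders clips differently

rho_a = spearman(human_scores, metric_a)
rho_b = spearman(human_scores, metric_b)
print(f"metric_a: {rho_a:.2f}")
print(f"metric_b: {rho_b:.2f}")
```

Here metric_a reaches a correlation of 1.0 because it ranks the clips identically to the human scores, while metric_b lands at -0.3. Comparing metrics on a score like this is one way a claim such as "second only to the human baseline" could be quantified.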

Crucially, the researchers also demonstrated that the model can generalize, successfully identifying distortions in videos that were not part of its original training set. While it didn't top the charts on every external dataset, it remained highly competitive, which suggests it has potential as a broadly applicable tool.

What's Next for CGVQM?

The research paper outlines several potential paths for improvement. One suggestion is to explore a transformer neural network architecture, which could further enhance performance, though it would require significantly more computational power. Another idea is to incorporate additional data, like optical flow vectors, to refine the quality evaluation.

Even in its current form, the CGVQM model represents an exciting step forward in the objective evaluation of real-time graphics, offering developers, reviewers, and enthusiasts a powerful new tool for analyzing image quality.
