Can ChatGPT Assess Medical Trial Bias Like Humans?

The Critical Challenge of Assessing Medical Research

Evidence-based medicine relies on systematic reviews (SRs) and health technology assessments (HTAs) to inform critical healthcare decisions. A cornerstone of this process is assessing the "risk of bias" (RoB) in clinical studies like randomized controlled trials (RCTs). This step is vital because flawed study methods can lead to exaggerated treatment effects and, ultimately, poor patient outcomes. However, conducting RoB assessments is a major bottleneck. It is a resource-intensive and error-prone task that requires reviewers to have a deep understanding of trial design, reporting standards, and specific assessment tools like RoB1 and RoB2. Even highly skilled human reviewers often disagree, which can compromise the integrity of the final evidence synthesis.

Could Artificial Intelligence Offer a Solution?

To address these challenges, researchers have explored automating parts of the review process with machine learning (ML) and artificial intelligence (AI). Tools like RobotReviewer have shown promise in assisting with RoB assessments, demonstrating potential gains in accuracy and efficiency. However, most existing tools are built on models trained on relatively small, specialized datasets.

Enter ChatGPT, a powerful large language model (LLM) trained on an internet-scale corpus of text. Unlike specialized tools, ChatGPT is a general-purpose model developed to understand and generate human-like language. Its ability to perform complex tasks, such as passing medical licensing exams, has sparked interest in its potential application for specialized scientific work. While its broad training could allow it to generalize knowledge effectively, it also comes with risks, such as the potential to "hallucinate" and generate confident but incorrect information.

A Pilot Study to Test ChatGPT's Mettle

To explore this potential, a new study protocol outlines a plan to formally evaluate ChatGPT's ability to assess RoB in medical trials. This research, conducted as part of horizon scanning at the Norwegian Institute of Public Health, aims to provide the first evidence on the level of agreement between RoB assessments made by human experts and those generated by an LLM.

The primary goal is to measure the interrater agreement between human consensus-based RoB assessments and ChatGPT's assessments. The study will also assess ChatGPT's own consistency (intrarater agreement) by having two different people use it to assess the same trials.

Study Design and Methodology

The researchers will follow a rigorous, prespecified plan:

-

Trial Selection: The study will randomly select 100 two-arm, parallel RCTs from recent Cochrane systematic reviews. To ensure a focused comparison, it will exclude trials conducted under emergency conditions (like COVID-19), public health interventions, and those with more than two arms.

-

Prompt Engineering: The first 25 trials will be used for "prompt engineering." This involves carefully crafting and testing instructions to find the most effective way to ask ChatGPT to perform the RoB assessment based on a trial's methods text.

-

Data Collection: For the remaining 75 trials, researchers will provide ChatGPT with the methods section copied from each trial's report. They will then ask it to provide an overall RoB judgment, categorized as "low," "unclear," or "high."

-

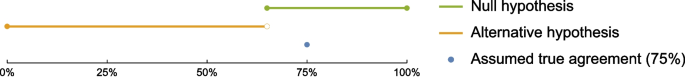

Statistical Analysis: The core of the study is to compare ChatGPT's assessments with the original human consensus assessments from the Cochrane reviews. The level of agreement will be calculated using Cohen’s κ, a statistical measure that accounts for agreement occurring by chance. The sample size was chosen to provide sufficient statistical power for this comparison.
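To make the selection and data-collection steps concrete, here is a minimal Python sketch of how the 100 sampled trials might be divided into a 25-trial prompt-engineering set and a 75-trial evaluation set, and how a free-text reply could be mapped to one of the three categories. The function names, the seed value, and the prompt wording are all illustrative assumptions; the protocol as summarized here does not specify them.

```python
import random

ALLOWED_JUDGMENTS = {"low", "unclear", "high"}


def split_trials(trial_ids, n_total=100, n_prompt_dev=25, seed=2023):
    """Randomly draw n_total trials and reserve the first n_prompt_dev
    of them for prompt engineering; the rest form the evaluation set.

    The seed is an arbitrary illustrative value, not one from the study.
    """
    trial_ids = list(trial_ids)
    if len(trial_ids) < n_total:
        raise ValueError("not enough eligible trials to sample from")
    rng = random.Random(seed)  # fixed seed so the draw is reproducible
    sample = rng.sample(trial_ids, n_total)
    return sample[:n_prompt_dev], sample[n_prompt_dev:]


def build_prompt(methods_text):
    """Hypothetical prompt wording; the real prompt is what the
    prompt-engineering phase is meant to discover."""
    return (
        "Assess the overall risk of bias of the trial described below. "
        "Answer with exactly one word: low, unclear, or high.\n\n"
        f"Methods:\n{methods_text}"
    )


def parse_judgment(reply):
    """Normalize a model reply to one of the three RoB categories."""
    label = reply.strip().lower().rstrip(".")
    if label not in ALLOWED_JUDGMENTS:
        raise ValueError(f"unexpected judgment: {reply!r}")
    return label


# Usage with placeholder trial identifiers (not real trials).
prompt_dev, evaluation = split_trials(range(500))
print(len(prompt_dev), len(evaluation))
```

Keeping the two sets disjoint matters: prompts tuned on the first 25 trials are then scored only on trials they were never adjusted against.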

Several secondary and subgroup analyses are also planned, such as comparing performance on trials that used different RoB tools (RoB1 vs. RoB2) and trials published before and after the implementation of CONSORT reporting standards.
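Both the primary comparison and the subgroup comparisons come down to computing Cohen's κ over paired labels: κ = (p_o − p_e) / (1 − p_e), where p_o is the observed proportion of agreement and p_e is the agreement expected by chance from each rater's label frequencies. The sketch below is a plain, unweighted implementation with made-up example labels; whether the study uses an unweighted or weighted variant is not stated here, so treat that choice, and the helper names, as assumptions.

```python
from collections import Counter, defaultdict


def cohens_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa for two equal-length label sequences."""
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("need two non-empty sequences of equal length")
    n = len(rater_a)
    # Observed agreement: share of items where both raters match.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of the raters' marginal frequencies,
    # summed over categories.
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    expected = sum(count_a[c] * count_b[c] for c in count_a) / (n * n)
    if expected == 1.0:
        return float("nan")  # kappa is undefined when chance agreement is 1
    return (observed - expected) / (1 - expected)


def kappa_by_group(records):
    """Compute kappa separately per subgroup.

    records: iterable of (group, human_label, model_label) tuples,
    e.g. group = "RoB1" or "RoB2".
    """
    grouped = defaultdict(lambda: ([], []))
    for group, human, model in records:
        grouped[group][0].append(human)
        grouped[group][1].append(model)
    return {g: cohens_kappa(h, m) for g, (h, m) in grouped.items()}


# Made-up labels for illustration only; not study data.
human = ["low", "low", "high", "unclear", "high", "low"]
model = ["low", "unclear", "high", "unclear", "low", "low"]
print(round(cohens_kappa(human, model), 3))  # 0.478
```

In this toy example the raters agree on 4 of 6 trials (p_o ≈ 0.667), but chance alone would produce p_e ≈ 0.361, so κ is noticeably lower than the raw agreement rate.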

Anticipated Impact and Future Directions

This study represents an important early step in understanding how general-purpose LLMs can be applied to specialized scientific tasks. If ChatGPT demonstrates a reasonable level of agreement with human reviewers, it could pave the way for profound changes in how evidence syntheses are conducted. The automation or semi-automation of RoB assessment could dramatically reduce the time and cost required to produce systematic reviews, allowing crucial evidence to reach decision-makers faster. The data collected will also serve as a foundation for future research, including developing more sophisticated prompts or fine-tuning models specifically for this task.

Acknowledged Risks and Ethical Considerations

The authors are clear about the study's limitations and the broader ethical implications. The results may not generalize to other types of trials, LLMs, or non-medical interventions. Furthermore, they caution against the premature replacement of human experts. Incorrectly outsourcing such a critical function to an AI could have severe negative health and economic consequences, particularly in under-resourced settings.

There are also significant ethical concerns about relying on commercial AI systems developed in foreign jurisdictions. The potential for these systems to be influenced by corporate interests or attacked by malicious actors represents a new threat to scientific integrity. As the researchers note, careful evaluation of the benefits, risks, and costs is essential to ensure scientific accountability and maintain the resilience of our health systems.
