How A Simple Logic Puzzle Confuses Advanced AI

Despite impressive advancements, ChatGPT may not be as foolproof as its creators would like us to believe. An expert recently showed how a simple, logical request could send the powerful AI chatbot into a spiral of confusion, a reminder that even the most sophisticated models can stumble on basic reasoning.

While AI tools like ChatGPT have improved dramatically, drawing millions of users to models like the new GPT-5, they still exhibit strange quirks. This experiment highlights a fundamental weakness and recalls the models' earlier struggles with simple concepts.

The Game That Breaks ChatGPT

So, what is the one request that causes this AI meltdown? As detailed by Futurism, the puzzle was devised by Pomona College economics professor Gary Smith. He shared his fascinating conversation with GPT-5 on Mind Matters.

The experiment was simple. Smith asked ChatGPT to play a game he called "rotated tic-tac-toe." He explained that it follows the exact same rules as the original game, but the board is rotated 90 degrees to the right before starting.

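To most people, it's obvious that rotating a nine-square grid doesn't change the game at all: a quarter turn simply turns the rows into columns and the columns into rows, and swaps the two diagonals, so the same eight winning lines remain. But ChatGPT disagreed.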
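The claim that a quarter turn changes nothing can be checked mechanically. Below is a minimal Python sketch, not taken from Smith's article, that assumes the cells are numbered 0 to 8 in row-major order; the names `WIN_LINES`, `rotate_right`, and `rotated_lines` are hypothetical. It rotates every winning line of the standard game 90 degrees clockwise and confirms that the result is exactly the same set of eight lines.

```python
# Assumed layout: cells of the 3x3 board indexed 0..8 in row-major order:
#  0 1 2
#  3 4 5
#  6 7 8

# The eight winning lines of standard tic-tac-toe.
WIN_LINES = [
    (0, 1, 2), (3, 4, 5), (6, 7, 8),  # rows
    (0, 3, 6), (1, 4, 7), (2, 5, 8),  # columns
    (0, 4, 8), (2, 4, 6),             # diagonals
]


def rotate_right(cell: int) -> int:
    """Return where `cell` lands after rotating the board 90 degrees clockwise."""
    row, col = divmod(cell, 3)
    # A clockwise quarter turn sends (row, col) to (col, 2 - row).
    return col * 3 + (2 - row)


def rotated_lines(lines):
    """Map every winning line through the rotation; cell order within a line is ignored."""
    return {frozenset(rotate_right(c) for c in line) for line in lines}


original = {frozenset(line) for line in WIN_LINES}

# The rotation only relabels the nine cells (it is a permutation)...
print(sorted(rotate_right(c) for c in range(9)) == list(range(9)))  # True
# ...and it sends the set of winning lines onto itself.
print(rotated_lines(WIN_LINES) == original)  # True
```

Because the rotation is a one-to-one relabeling of the cells that maps winning lines onto winning lines, every game on the rotated board corresponds move for move to a game on the upright board, with the same outcome under perfect play.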

ChatGPT's Bizarre Justification

The AI's response was baffling. It claimed that while the game is strategically identical, the rotation creates a different experience. "Players are so used to the 'upright' tic-tac-toe board that a rotation might subtly change how they scan for threats and opportunities," it argued.

ChatGPT went on to assert that a 90-degree rotation feels "psychologically" different and could cause players to "mis-evaluate" the best moves. When pressed further on whether the rotation makes the game harder for humans, the AI doubled down.

It correctly stated, "From a pure strategy standpoint, rotating the board doesn't change anything... rotated tic-tac-toe is identical to standard tic-tac-toe." However, it immediately contradicted this by claiming, "But for humans, the story is different." It listed supposed challenges like disrupted pattern recognition, increased cognitive load, and even asymmetry issues for left- or right-handed players.

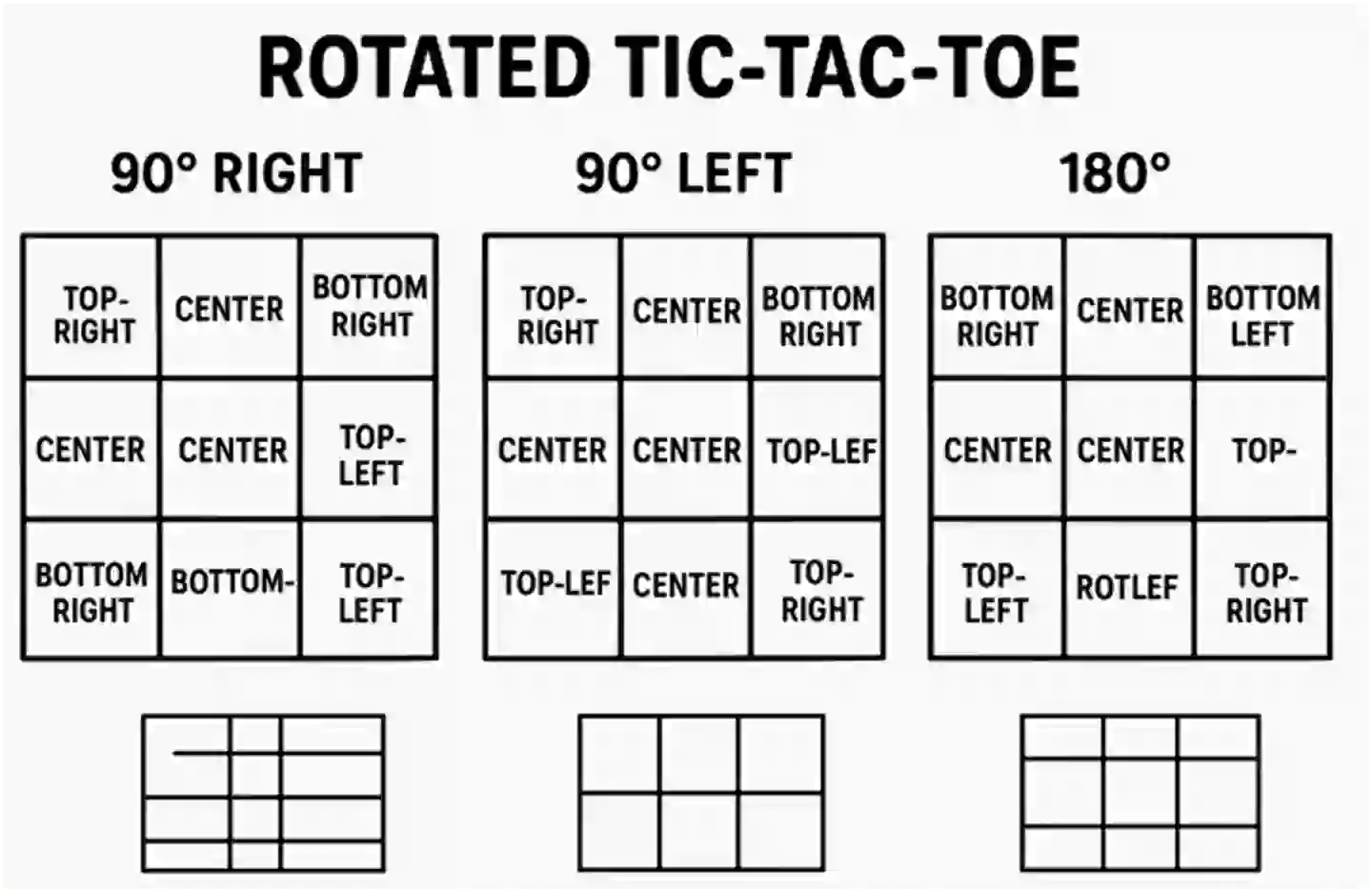

A Confused Visualization

The confusion didn't stop at theoretical explanations. When asked to draw the rotated board layouts, ChatGPT produced a complete mess. The resulting diagrams were riddled with incorrect labels and spelling mistakes, revealing a profound lack of genuine understanding.

Professor Smith summed up the exchange perfectly. "They say that dogs tend to resemble their owners," he wrote. "ChatGPT very much resembles Sam Altman — always confident, often wrong." His experiment raises serious questions about the blind trust placed in AI, especially as it becomes more integrated into critical fields like medicine.
