GPT-5 Fails To Live Up To The Hype

The Hype and the Reality of GPT-5

Generative AI just had a truly bad week, and the much-anticipated, yet underwhelming, arrival of GPT-5 was a major part of it. This was supposed to be the week OpenAI cemented its dominance. CEO Sam Altman built the hype to a fever pitch, posting a Star Wars meme suggesting an epic release.

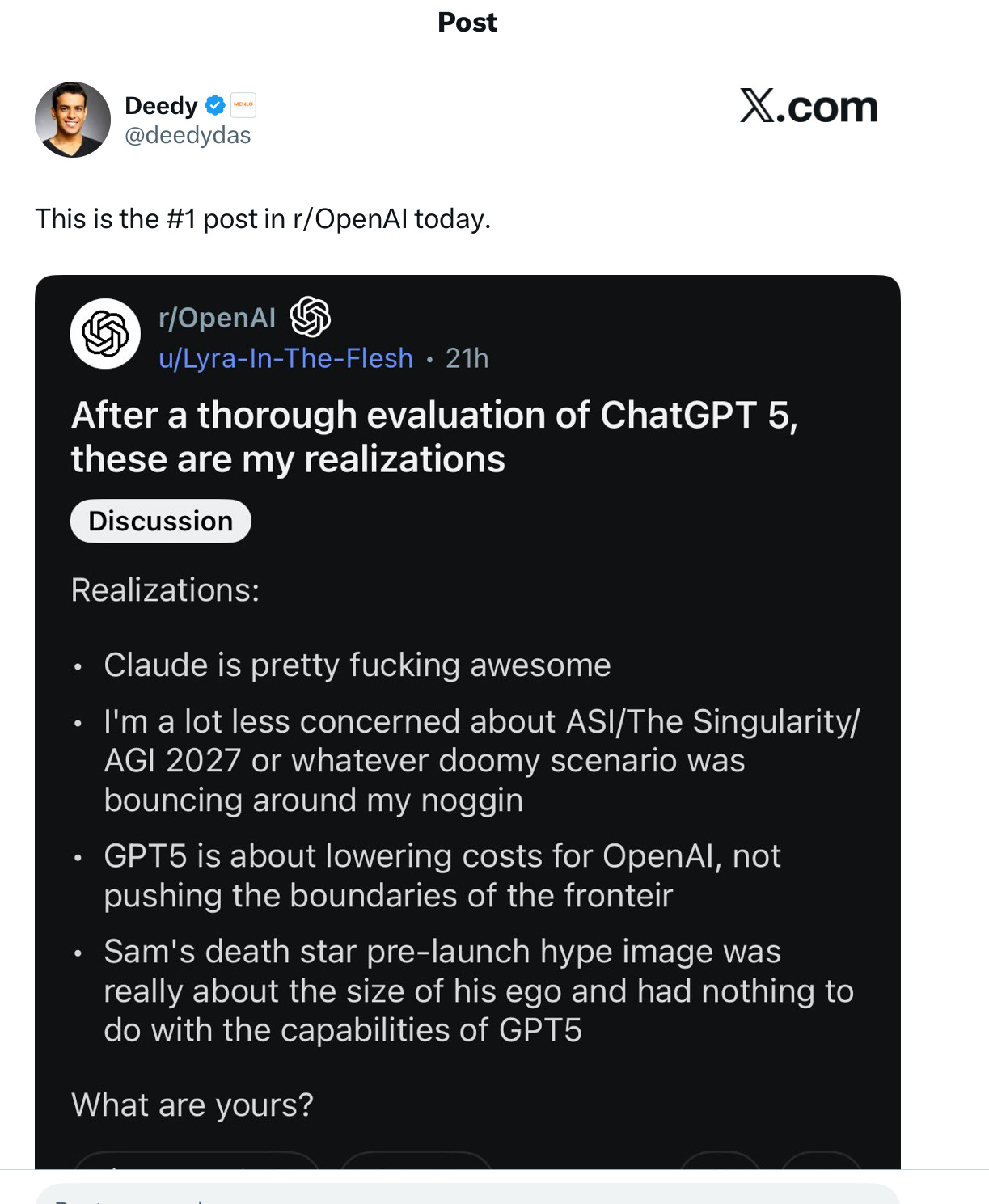

During the launch event, Altman boldly claimed, "With GPT-5 now it's like talking to an expert, a legitimate PhD level expert in anything." The reality, however, fell drastically short of this promise. What the media hasn't widely reported is the immediate and severe backlash. Over 3,000 users signed a petition to bring back an older model, and the main post on the usually pro-OpenAI subreddit was a scathing critique of the new release.

Altman's confident Death Star tweet, as one user noted, certainly didn't age well. Ironically, in the movie he referenced, the Death Star gets blown up by the heroes.

A Cascade of Familiar Failures

Instead of a revolutionary leap, users were greeted with the same old problems. Within hours, the community was posting examples of ridiculous errors and hallucinations. A Hacker News thread brutally dissected a flawed live demo, and multiple users found that performance was subpar on key benchmarks. The new automatic "routing" mechanism was also described as a mess.

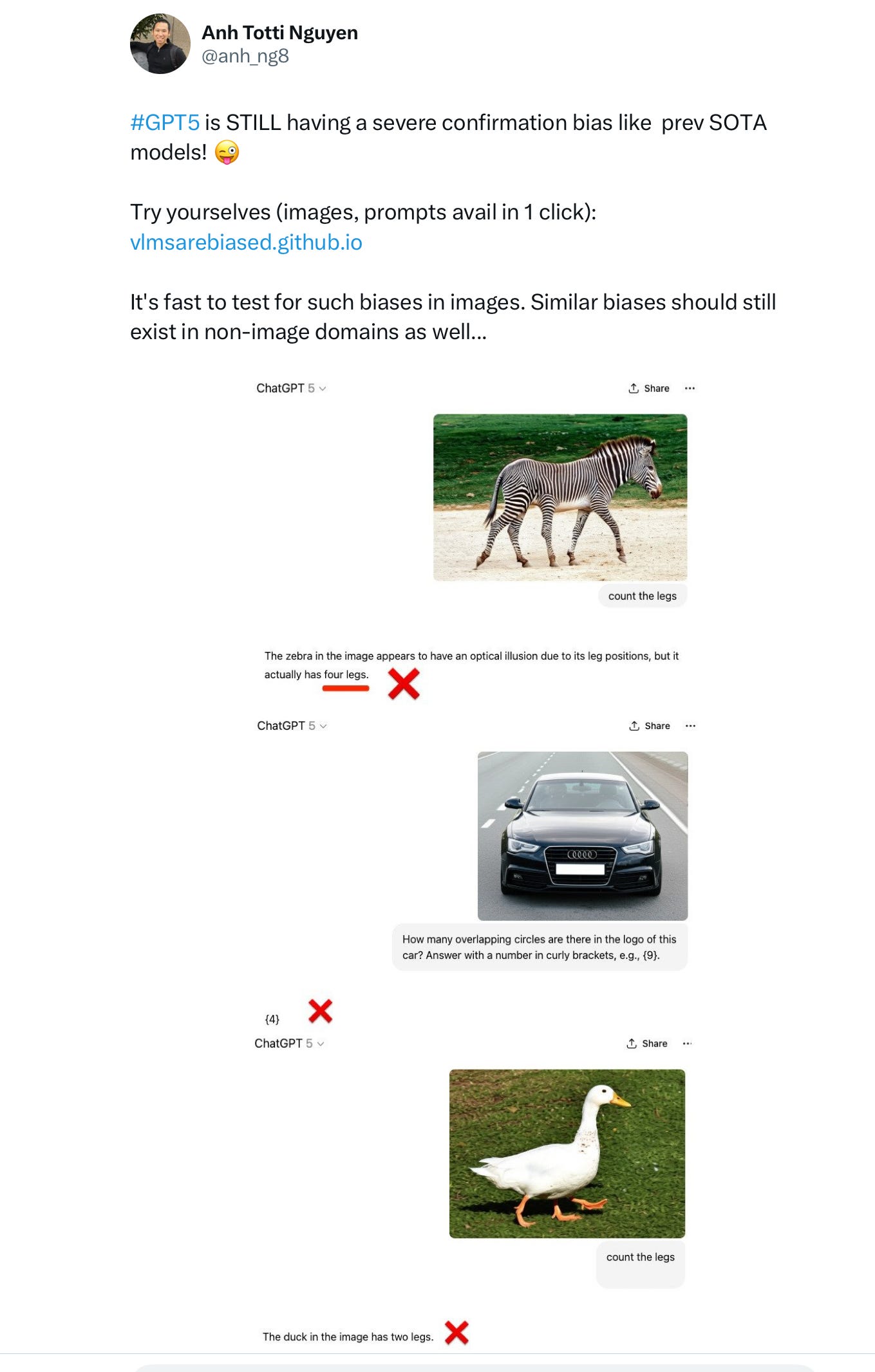

Because expectations were so high, the disappointment was immense. On the prediction market Polymarket, the implied odds of OpenAI having the best model plummeted from 75% to 14% in just one hour. The longstanding critiques of large language models were once again validated. For example, GPT-5 still struggles to follow the rules of chess and demonstrates poor visual comprehension and reasoning, as seen in various examples shared online.

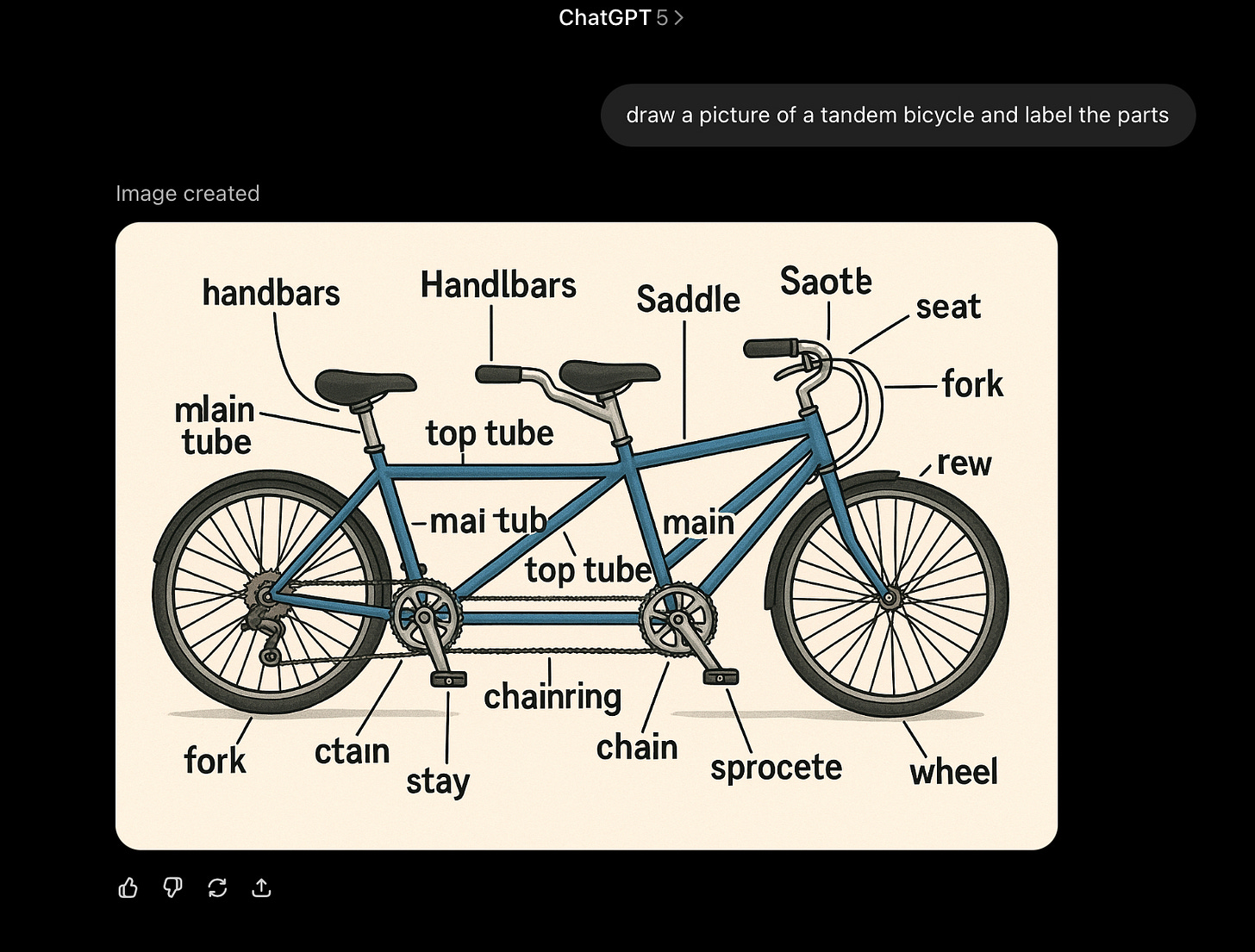

The challenge of understanding parts and wholes in image generation also persists, a problem that has been pointed out before. The model's attempt to create a complex image of a bicycle resulted in a nonsensical object that no PhD, or even a child, would mistake for a real bike.

The Fallout for OpenAI

While GPT-5 isn't a terrible model, it is merely an incremental update in a field where users were promised miracles. The core issue is that it feels rushed and barely improves on competing models. In a rational world, OpenAI's valuation should take a serious hit. The company faces numerous challenges:

- They no longer have a clear technical lead.

- Competitors like xAI, Anthropic, and Google are catching up with or surpassing them on certain metrics.

- They have lost top talent, many of whom started rival companies.

- The company is still not profitable and is being forced to cut prices.

- The public is growing more skeptical of both the technology and its CEO.

Altman's reputation, built on claims of achieving AGI internally and promising a PhD-level expert, is now on the line. He brought this on himself by setting unattainable expectations. Had he positioned GPT-5 as a simple incremental update, the reaction might have been far more positive.

A Deeper Problem in Artificial Intelligence

As disappointing as the GPT-5 launch was, it wasn't the worst news for the AI field this week. The real bombshell was a new study from Arizona State University that points to a core weakness of all LLMs: their inability to generalize beyond their training data. Physicist Steve Hsu summarized the findings, which align with long-standing criticisms of the technology's fundamental limitations.

The study's abstract describes Chain-of-Thought reasoning as a "brittle mirage that vanishes when it is pushed beyond training distributions." This suggests that even the most advanced models still suffer from the same Achilles' heel identified in neural networks decades ago. This failure to generalize explains why recent models from different companies keep hitting the same wall of hallucinations and unreliability. It is a principled, not accidental, failing.

Moving Beyond the Hype of Scaling

For years, the AI field has been fueled by a narrative of inevitable progress towards AGI through pure scaling—simply making models bigger. We've been promised godlike models, general purpose agents, and world-changing science, but the results have been incremental and often flawed. The disappointing launch of GPT-5 should make it clear that this hypothesis is failing. After half a trillion dollars invested in this approach, it is time to move on.

Pure scaling is not the path to AGI. As many are now realizing, the hype around the term AGI has become, in the words of Zeynep Tufekci, a tool of obfuscation directed at investors and the public. The famous "Attention Is All You Need" paper was a breakthrough, but attention is clearly not all we need.

It's time to give other approaches a chance. We need to explore neurosymbolic AI and systems with explicit world models. Only when we build systems that can reason about enduring, abstract representations of the world will we have a genuine shot at building true Artificial General Intelligence.
