Run Private AI On Your Mac For Free

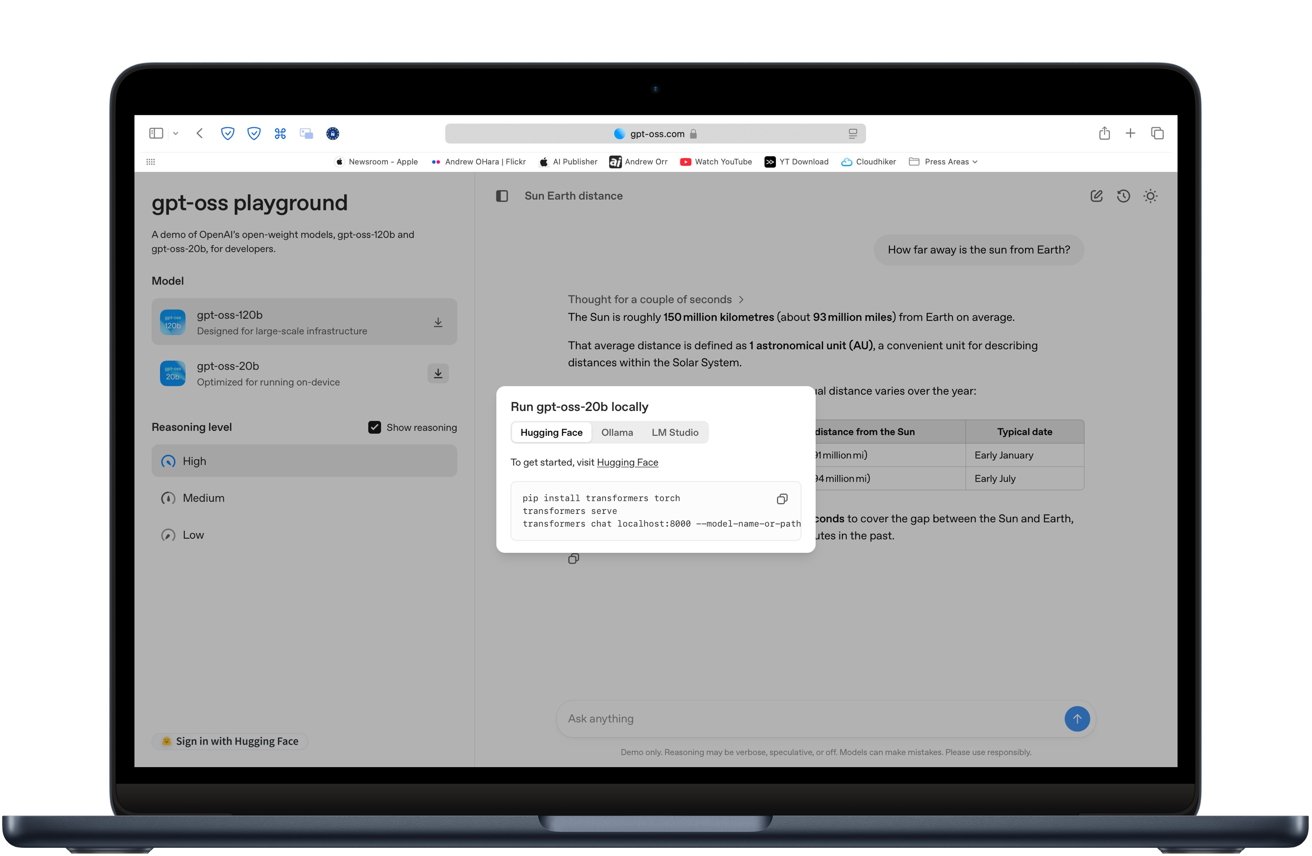

OpenAI has released gpt-oss-20b and gpt-oss-120b, its first open-weight large language models since GPT-2. For Mac users, this means you can run a capable, ChatGPT-style AI directly on your own machine. The best part? Once the model is downloaded, it works offline, requires no subscription, and keeps your data on your Mac.

This guide will walk you through how to set up your own local AI on any capable Apple Silicon Mac. Before you download anything, you can even test drive the models in your browser at gpt-oss.com, which offers a free demo to showcase its writing, coding, and reasoning abilities.

What You'll Need to Get Started

Running a large language model is demanding, much like high-end gaming on a Mac. For a smooth experience, a Mac with at least an M2 chip and 16GB of RAM is recommended. If you're using an M1-based machine, the Max or Ultra versions are preferable. A Mac Studio is an excellent choice due to its superior cooling.

Even a newer MacBook Air with an M3 chip can handle the task, but expect it to heat up and run a bit slower.
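To see where your Mac falls against these recommendations, a small script can read the installed memory. This is a minimal sketch: the 16 GB threshold comes from the guidance above, the function names are illustrative, and the sysctl call assumes macOS.

```python
import platform
import subprocess

RECOMMENDED_RAM_GB = 16  # threshold taken from the recommendation above


def total_ram_gb() -> float:
    """Return installed memory in GiB by asking sysctl (macOS only)."""
    out = subprocess.run(
        ["sysctl", "-n", "hw.memsize"],
        capture_output=True, text=True, check=True,
    ).stdout
    return int(out.strip()) / 1024**3


def meets_recommendation(ram_gb: float) -> bool:
    """True when the machine has at least the recommended memory."""
    return ram_gb >= RECOMMENDED_RAM_GB


if __name__ == "__main__":
    if platform.system() == "Darwin":
        ram = total_ram_gb()
        verdict = "meets" if meets_recommendation(ram) else "is below"
        print(f"{ram:.0f} GB of RAM {verdict} the {RECOMMENDED_RAM_GB} GB recommendation")
    else:
        print("Not macOS; skipping the memory check.")
```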

To manage the AI model, you'll need one of these free tools:

- LM Studio: An application with a user-friendly visual interface.

- Ollama: A lightweight command-line tool for managing and running models.

- MLX: Apple's own machine learning framework for Apple Silicon. LM Studio can use it to run models, while Ollama has relied on Metal GPU acceleration instead.

These applications simplify the process by handling model downloads, setup, and compatibility for you.

How to Install with Ollama

Ollama is a fantastic, lightweight option for running local AI models with minimal fuss. Here’s how to get it running:

- First, install Ollama by following the official instructions on their website at ollama.com.

- Open your Terminal app and type the command:

ollama run gpt-oss:20b

- Ollama will take care of the rest, automatically downloading the correct, optimized version of the model (around 12 GB).

- Once the download and setup are complete, a prompt will appear in your Terminal. You can start chatting with your private AI immediately.

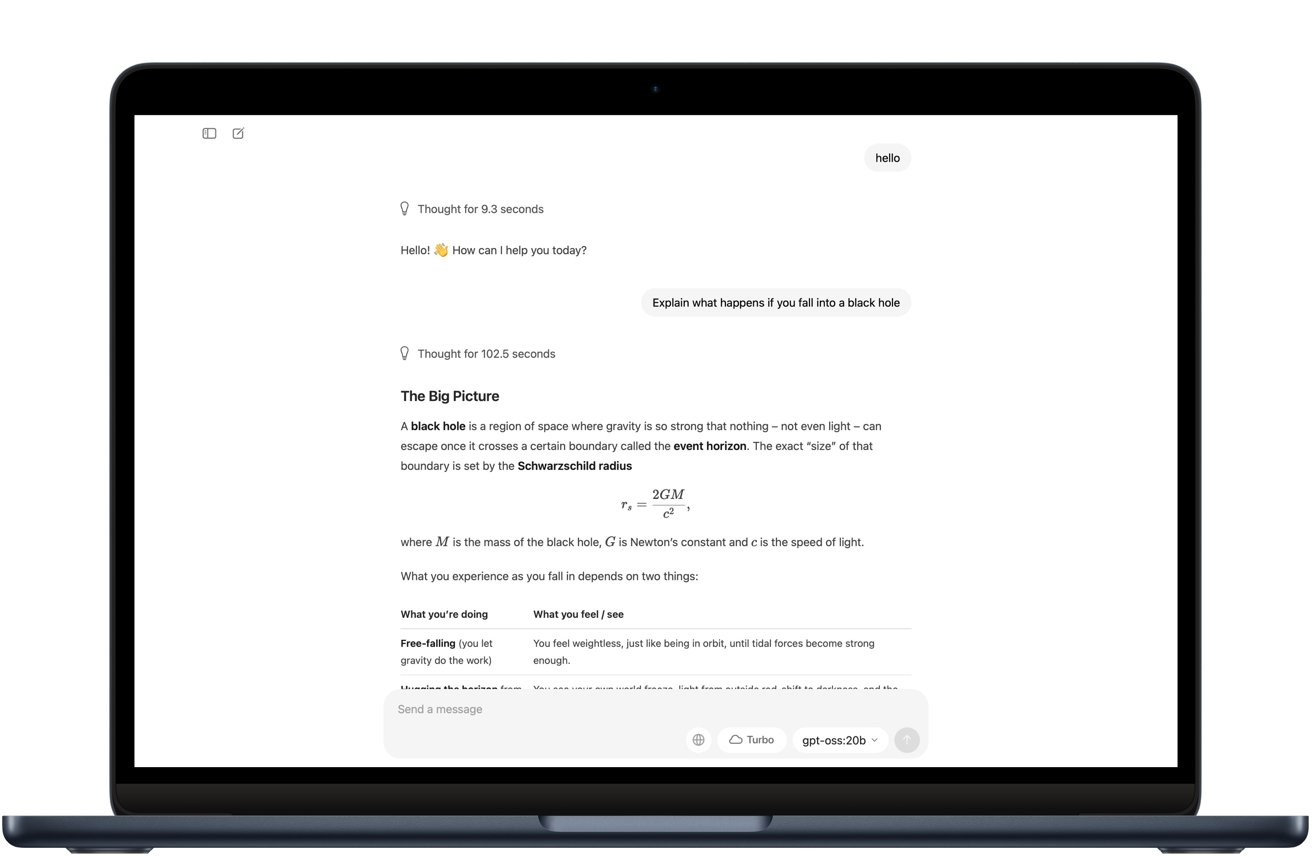

It functions just like the online version of ChatGPT, but all processing happens on your Mac, completely offline.
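Besides the interactive prompt, Ollama also serves a local HTTP API (by default at http://localhost:11434), so your own scripts can talk to the same model. The sketch below assumes Ollama is running and that the model is available under the tag gpt-oss:20b; the helper names are illustrative and not part of Ollama.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint
MODEL = "gpt-oss:20b"  # assumed tag; run `ollama list` to see the name on your machine


def build_request(prompt: str, model: str = MODEL) -> urllib.request.Request:
    """Build a non-streaming generate request for the local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the prompt and return the generated text."""
    with urllib.request.urlopen(build_request(prompt)) as resp:
        return json.loads(resp.read())["response"]


if __name__ == "__main__":
    try:
        print(ask("Summarize the benefits of running a model locally in one sentence."))
    except OSError as err:  # urllib's URLError is a subclass of OSError
        print(f"Could not reach Ollama: {err}")
```

The request never leaves your machine: it goes to localhost, where Ollama runs the model.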

Performance and Limitations

The gpt-oss-20b model is designed to be efficient. It comes pre-compressed (quantized) into a 4-bit format, which allows it to run effectively on Macs with 16GB of RAM. It's capable of handling a variety of tasks:

- Writing and summarizing text

- Answering general questions

- Generating and debugging code

- Structured data extraction

While it's slower than cloud-based models like GPT-4o for very complex requests, it is highly responsive for most personal and development tasks. The larger 120b model is significantly more demanding, requiring 60-80GB of memory, making it suitable only for high-end Mac Pro or Mac Studio configurations.
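These memory figures follow from simple arithmetic: the weights take roughly the parameter count times the bits per weight, divided by eight, in bytes. The sketch below uses the nominal 20 and 120 billion parameter counts from the model names and a flat 4 bits per weight. Both are simplifying assumptions, and real usage is higher once the context cache, runtime overhead, and any layers kept at higher precision are included.

```python
def weight_size_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights in GB (1 GB = 1e9 bytes)."""
    total_bits = params_billion * 1e9 * bits_per_weight
    return total_bits / 8 / 1e9


if __name__ == "__main__":
    for name, params in [("gpt-oss-20b", 20), ("gpt-oss-120b", 120)]:
        four_bit = weight_size_gb(params, 4)
        sixteen_bit = weight_size_gb(params, 16)
        print(f"{name}: ~{four_bit:.0f} GB at 4-bit vs ~{sixteen_bit:.0f} GB at 16-bit")
```

Under these assumptions the 20b weights come to about 10 GB and the 120b weights to about 60 GB, which is consistent with the 16GB and 60-80GB figures above once overhead is added.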

Why Run an AI Locally?

The primary benefit of running an AI on your own machine is privacy. Prompts and responses are processed on your computer rather than sent to a remote server, so your conversations stay on your Mac. Other key advantages include:

- No Costs: You avoid all subscription or API fees.

- Offline Access: Once the model is downloaded, it works anywhere, without needing an internet connection.

- Customization: The models are released under the flexible Apache 2.0 license, allowing developers to fine-tune them for specific, specialized tasks.

Tips for the Best Experience

To get the most out of your local AI, follow these tips:

- Use Quantized Models: The gpt-oss-20b model is already quantized to a 4-bit format (MXFP4). This process drastically reduces memory usage while maintaining high accuracy, making it ideal for consumer hardware.

- Manage Your RAM: If your Mac has less than 16GB of RAM, consider using smaller models (in the 3-7 billion parameter range). Always close other memory-heavy applications before starting an AI session.

- Enable Acceleration: Check that your chosen tool is using GPU acceleration to maximize performance. LM Studio can run models through MLX or Metal, and Ollama uses Metal on Apple Silicon.
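One way to confirm that acceleration is active with Ollama is to ask its local API which models are loaded and how much of each sits in GPU memory. This is a sketch under assumptions: it relies on the /api/ps endpoint reporting size and size_vram fields for each loaded model, and the helper names are hypothetical.

```python
import json
import urllib.request

PS_URL = "http://localhost:11434/api/ps"  # lists models currently loaded by Ollama


def gpu_fraction(entry: dict) -> float:
    """Share of a loaded model's memory that is held in GPU memory (0.0 to 1.0)."""
    size = entry.get("size", 0)
    if size <= 0:
        return 0.0
    return entry.get("size_vram", 0) / size


def loaded_models() -> list:
    """Fetch the currently loaded models from the local Ollama server."""
    with urllib.request.urlopen(PS_URL) as resp:
        return json.loads(resp.read()).get("models", [])


if __name__ == "__main__":
    try:
        for entry in loaded_models():
            print(f"{entry.get('name')}: {gpu_fraction(entry):.0%} on GPU")
    except OSError as err:  # urllib's URLError is a subclass of OSError
        print(f"Could not reach Ollama: {err}")
```

A value near 100% suggests the model is fully offloaded to the GPU. The `ollama ps` command shows similar information in the terminal.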

Running a local AI model offers a powerful combination of privacy, control, and cost-effectiveness. While it may not match the raw speed of top-tier cloud services, gpt-oss-20b is an excellent choice for anyone who values data security and offline capability.
