Why Your AI Chatbot Is A Security Risk

I thought I was just having a quick chat with ChatGPT about my computer, but I ended up accidentally sharing my Windows PIN and a host of other personal information.

A Casual Chat Leads to a Critical Mistake

When ChatGPT's advanced voice feature became available, I quickly set it as my default voice assistant on my phone. The human-like interaction was impressive, and I found myself using it for everything from technical questions to exploring its other advanced features like live voice and vision. The convenience of not having to type made the conversations feel natural and effortless.

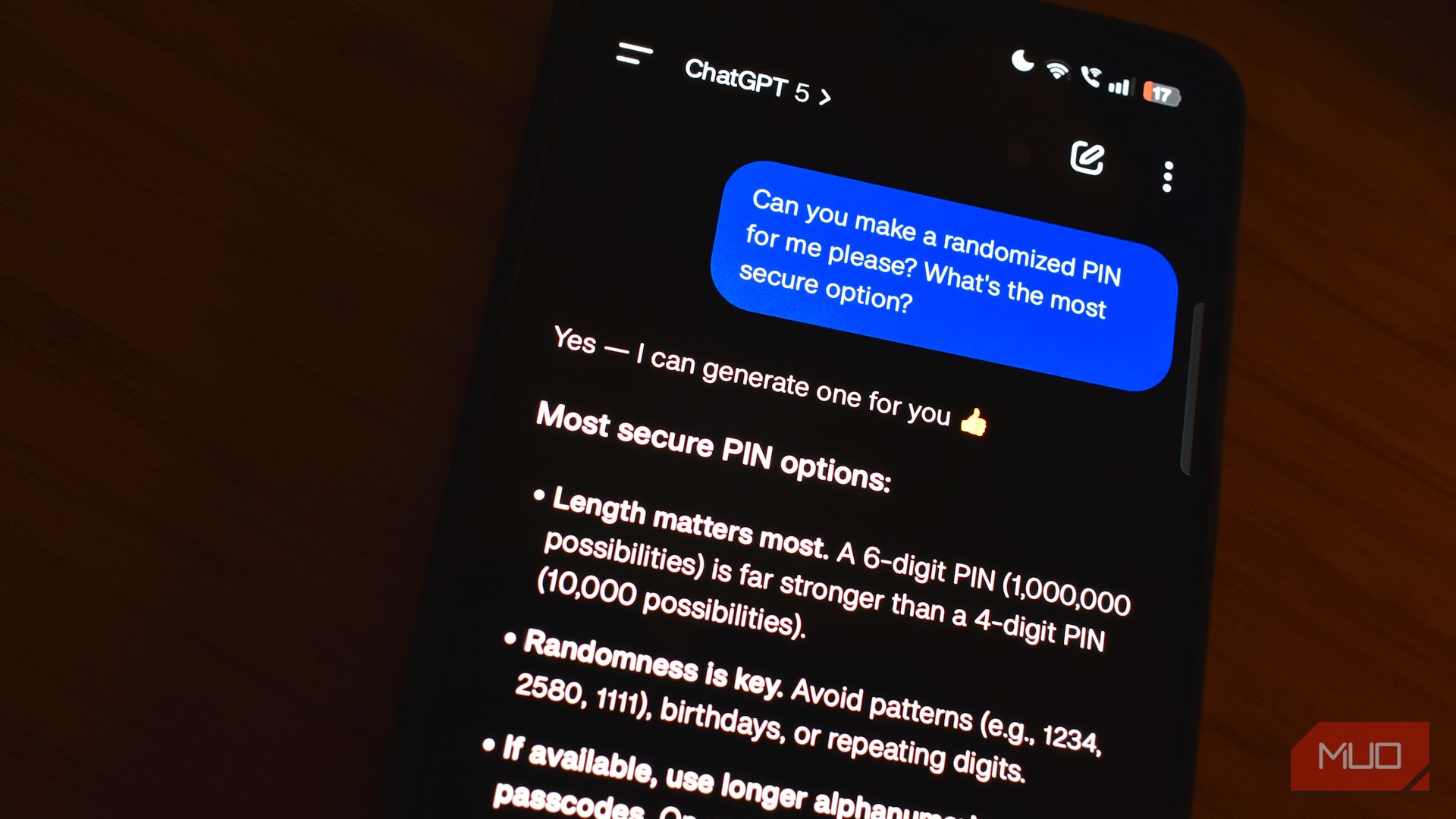

During one of these sessions, I was complaining about a frustrating Windows Hello issue. After every system update, my computer would forget my PIN, forcing me to set it up all over again. After going through this annoying process one more time, I decided to change my PIN. In the flow of the conversation, I casually asked ChatGPT to save my new PIN in case I forgot it.

I was so accustomed to using assistants like Google Assistant that I completely overlooked the fact that I was now talking to ChatGPT. Sharing a PIN is never a good idea, but giving it to an AI with a "Memory" feature meant it could stay stored until I explicitly deleted it. I was treating it like a digital notepad, not realizing the security implications.

Discovering the Dangers of AI Memory

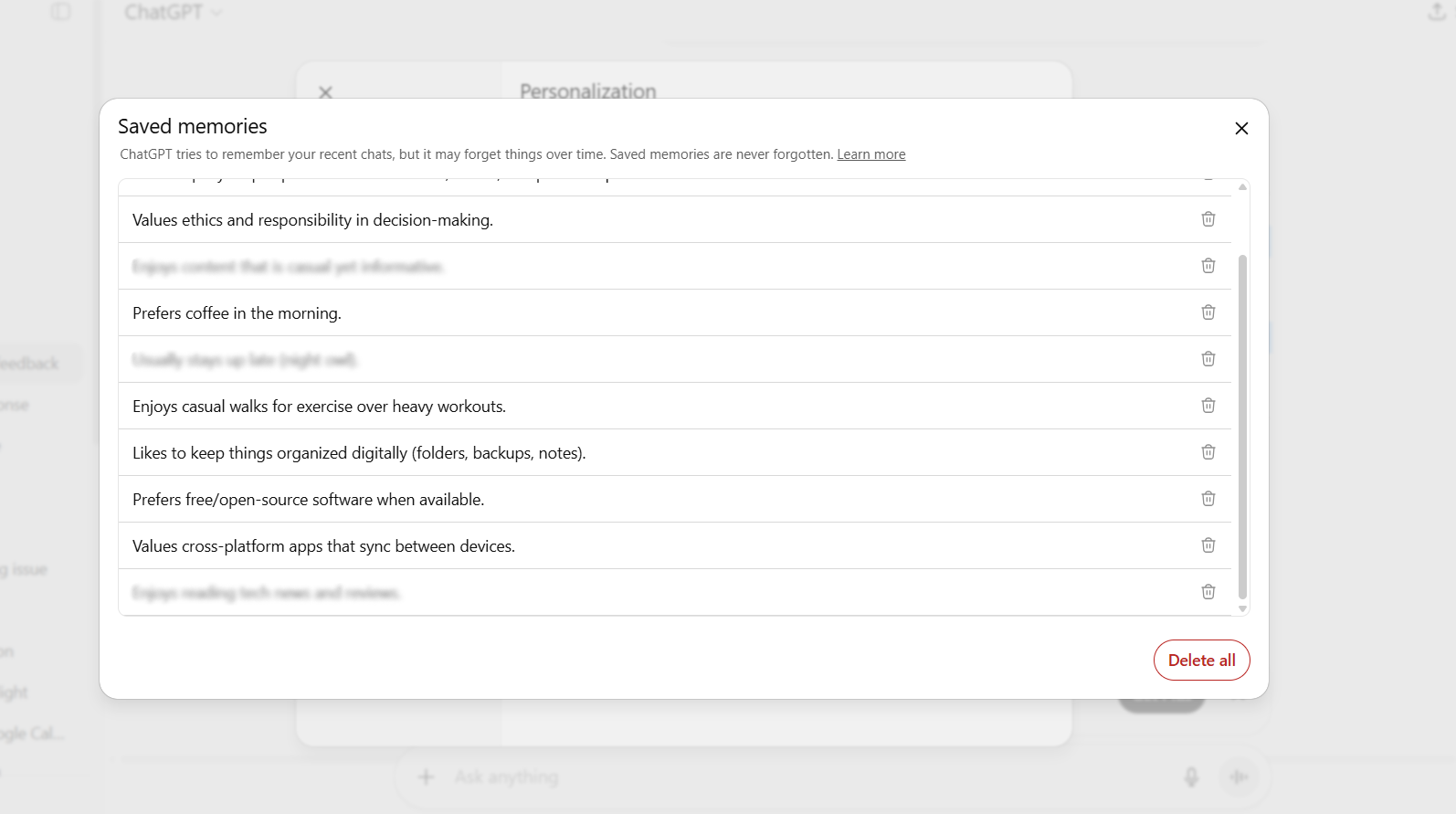

The realization of what I'd done made me anxious. I often let friends and family use my PC, and the thought of someone accessing my chat history and finding my PIN was alarming. What other information had I casually shared? I immediately decided to investigate ChatGPT's Memory logs to see the extent of the damage.

What I found was far more detailed than just my PIN. ChatGPT had logged places I frequently visit, the fact that I use my PC for online banking, and even my habit of stepping away from my computer without locking it. The Memory feature had painted a comprehensive and vulnerable picture of my digital life.

While AI models have safeguards, our casual conversational style means we can easily share sensitive data without thinking. This experience showed me how small, seemingly insignificant details can be aggregated into a detailed profile that would be a goldmine for anyone with malicious intent.

The Domino Effect: How One PIN Can Compromise Everything

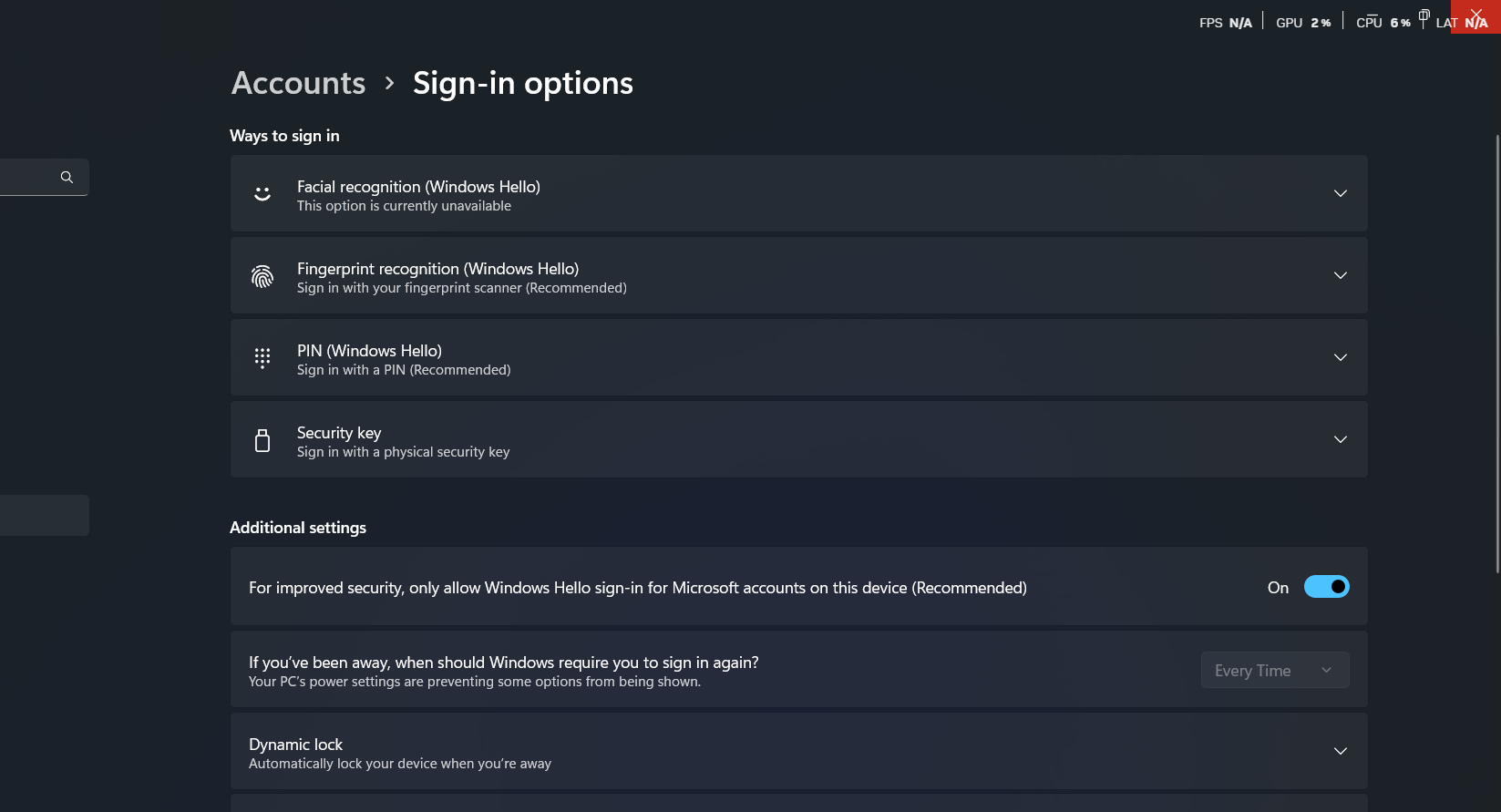

The real danger became terrifyingly clear when I considered the full implications of a compromised Windows PIN. It wasn't just a key to my lock screen; it was a master key to my entire digital identity on that device.

Windows PINs, through their integration with Windows Hello, create a deceptive sense of security. A PIN is tied to a single device, but once someone with access to that device has your PIN, they can potentially unlock everything on it. This includes saved passwords in browsers, password managers that unlock with Windows Hello, and any application that relies on Windows authentication for access.

My computer had become a personal vault. My browser stored dozens of passwords that could be auto-filled with a simple PIN entry. Even more concerning, my PC was one of my primary two-factor authentication (2FA) devices. An attacker with my PIN could not only steal my passwords but also intercept or approve 2FA requests, leading to complete account takeovers. That simple four-digit code was a single point of failure that could unravel my entire security setup.

Steps to Secure Your Data and Reclaim Your Privacy

This accidental disclosure was a wake-up call, forcing me to completely rethink how I interact with AI. Protecting my digital life required both immediate action and building better long-term habits.

First, I immediately changed my Windows PIN and moved critical passwords to a dedicated password manager that required a separate master password. I also shifted important accounts to use hardware security keys for authentication instead of relying on my computer.

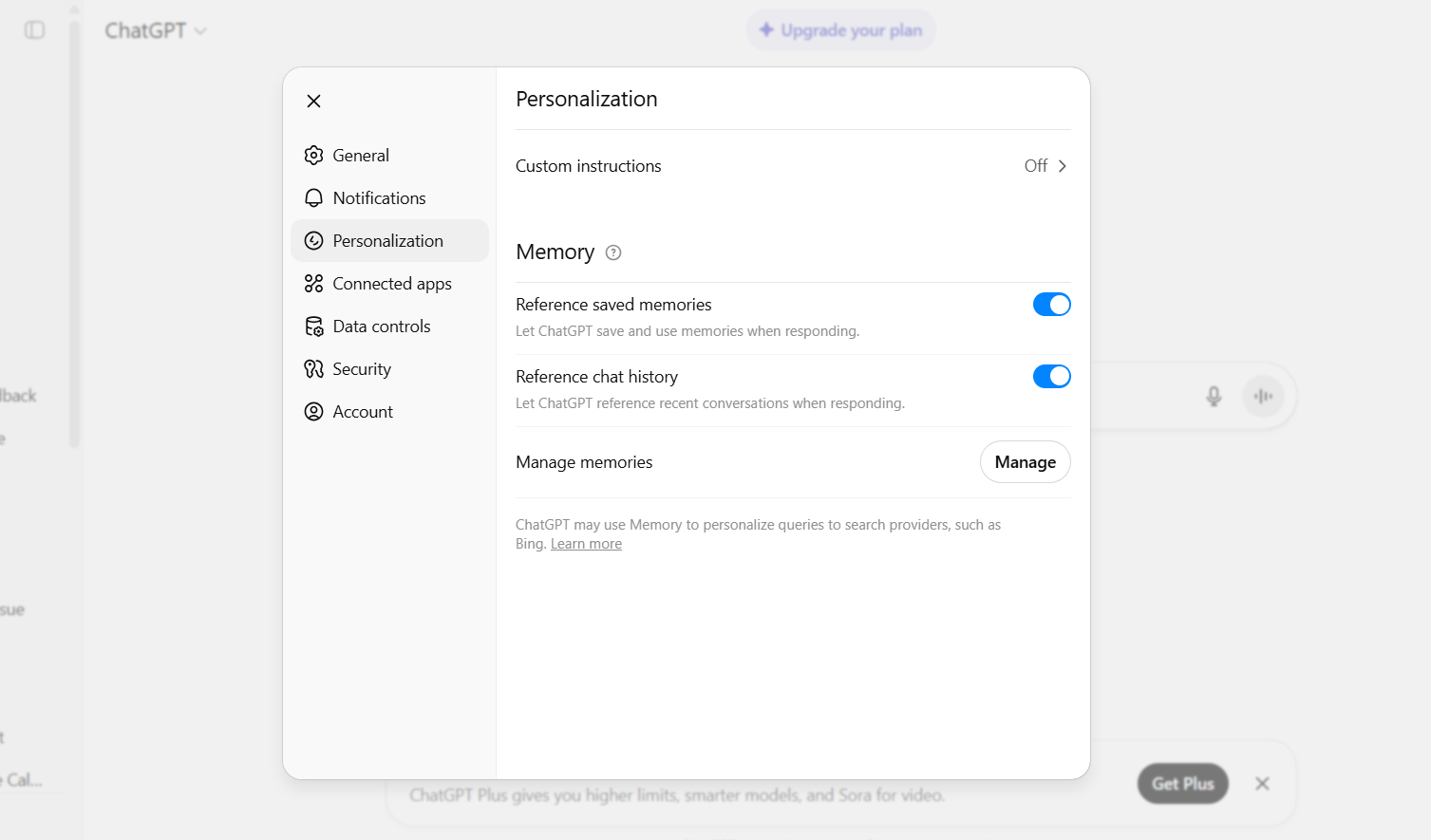

Next, I audited ChatGPT's Memory. By navigating to Settings > Personalization > Memory and selecting Manage, I was able to review and delete every piece of sensitive information the AI had stored about me.

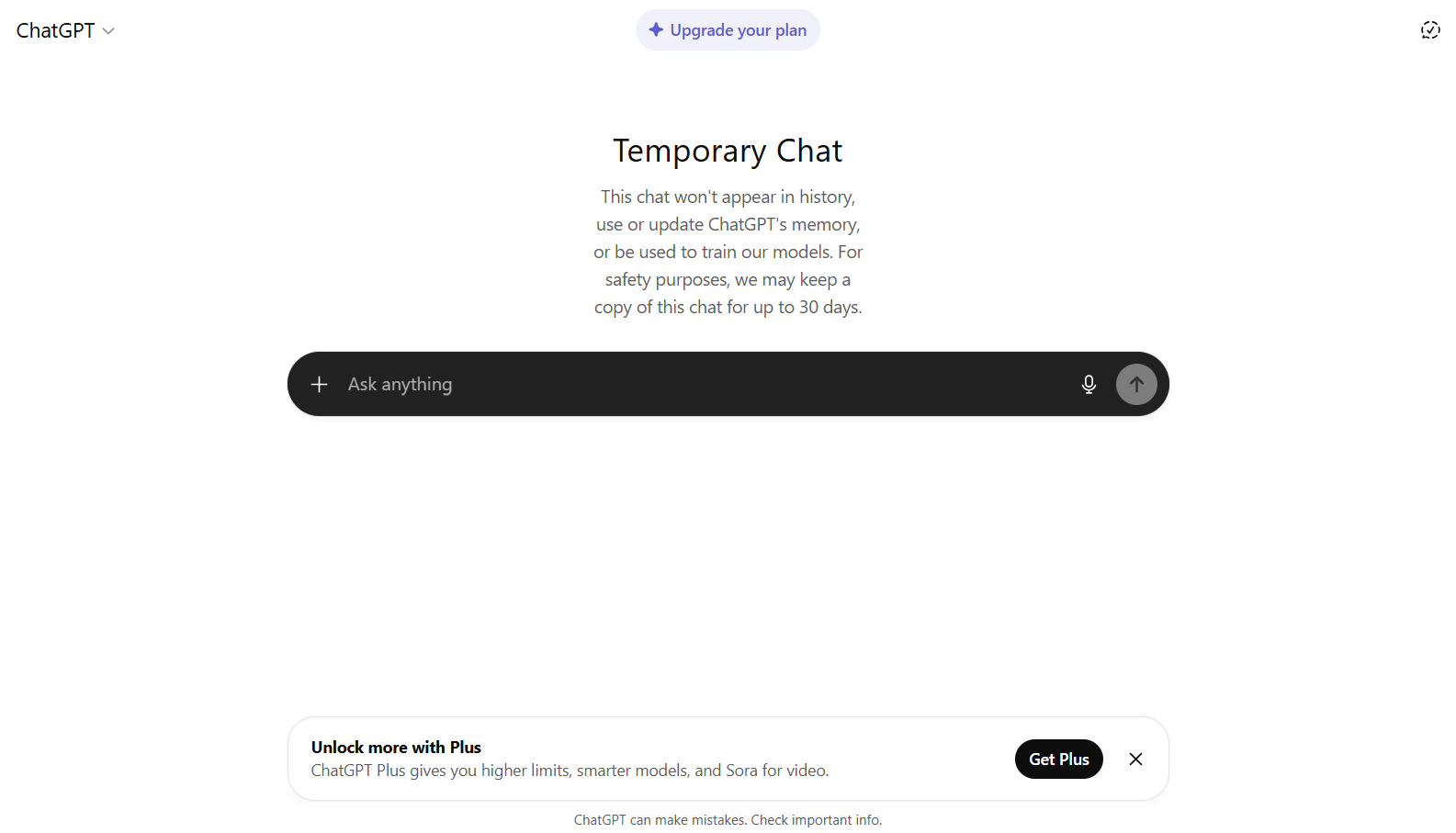

Now, for any discussion that might involve sensitive information, I use ChatGPT's Temporary Chat feature. This acts like an incognito mode: the conversation does not appear in my chat history, is not added to Memory, and is not used to train the model.

There are several methods to control how ChatGPT handles your data, but actively auditing your Memory logs and using Temporary Chat are two of the most effective ways to protect yourself.
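As a rough illustration of the habit behind these steps, a small check run on a message before it is sent could flag text that looks like a PIN or password. This is only a minimal sketch, not a ChatGPT feature: the function name `flag_sensitive` and the two patterns are my own assumptions, and a keyword-based check like this will miss plenty of real cases.

```python
import re

# Hypothetical patterns: a keyword such as "PIN" or "password" followed
# shortly by a value. Illustrative assumptions only, not a complete list.
SENSITIVE_PATTERNS = {
    # The word "pin", up to 20 non-digit characters, then 4 to 8 digits.
    "pin": re.compile(r"\bpin\b\D{0,20}\d{4,8}\b", re.IGNORECASE),
    # "password" or "passcode", then "is", ":" or "=", then a value.
    "password": re.compile(
        r"\bpass(?:word|code)\b\s*(?:is|:|=)\s*\S+", re.IGNORECASE
    ),
}


def flag_sensitive(message: str) -> list[str]:
    """Return the names of the patterns that match the message."""
    return [
        name
        for name, pattern in SENSITIVE_PATTERNS.items()
        if pattern.search(message)
    ]


if __name__ == "__main__":
    draft = "Please remember my new PIN is 4821 in case I forget it."
    hits = flag_sensitive(draft)
    if hits:
        print("Possible sensitive data:", ", ".join(hits))
```

A check like this is no substitute for reviewing Memory or using Temporary Chat; at best it is a reminder to pause before sending.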

This experience taught me a vital lesson: modern security is about more than just strong passwords. It's about understanding how our digital tools are connected and recognizing how casual conversations can create serious vulnerabilities. AI memory is a powerful feature, but it demands our caution and respect.
