ChatGPT vs. Claude: Which AI Is the Better Study Buddy?

For students and lifelong learners, the specialized learning modes recently added to AI chatbots have been a game-changer. Features such as ChatGPT’s Study mode and Claude’s learning modes act as a personal AI tutor, helping users master new skills and subjects. But how do these tools from OpenAI and Anthropic compare?

We put them to the test with seven high-school-level prompts to see how their teaching styles differ. The results reveal a clear distinction between their approaches to learning.

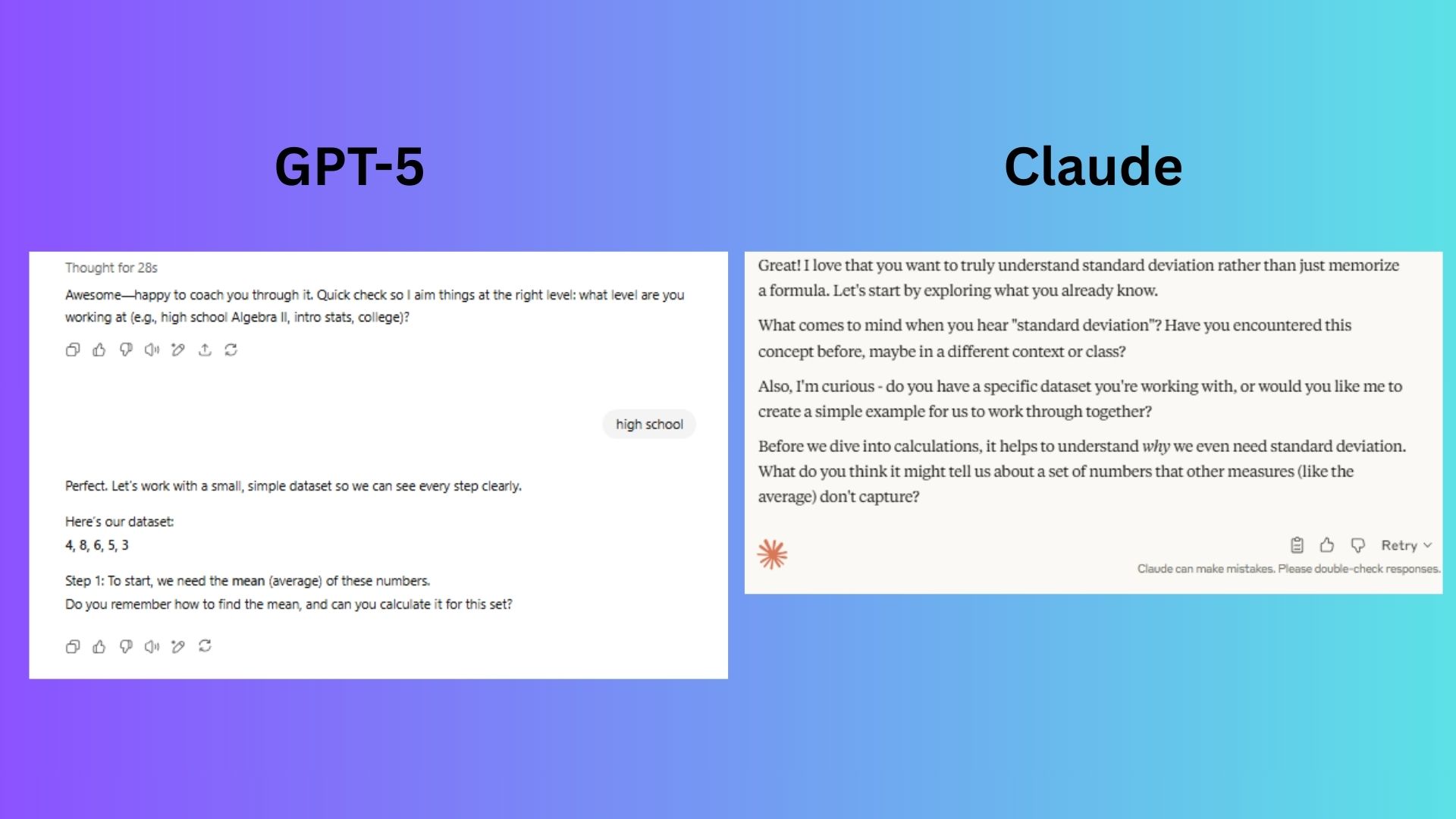

1. Math Concept Breakdown

Prompt: “I’m learning how to calculate the standard deviation of a dataset. Teach me step-by-step, ask me questions along the way, and only reveal the final answer when I’m ready.”

ChatGPT immediately dove into the first step of the calculation, finding the mean, and asked a direct question using a sample dataset. This set the stage for the interactive, sequential learning experience the prompt requested.

Claude took a more conceptual approach, focusing on a preliminary discussion about the topic before beginning any calculations.

Winner: ChatGPT. It adhered perfectly to the prompt by starting the step-by-step calculation immediately, making it the better choice for this specific, action-oriented request.
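Finding the mean, where ChatGPT began, is the first of four steps in this calculation. For reference, here is a minimal Python sketch of the full procedure. The dataset is hypothetical (the article does not show the one ChatGPT used), and the function computes the population standard deviation by default, with an option for the sample version that divides by n - 1.

```python
import math


def standard_deviation(data: list[float], sample: bool = False) -> float:
    """Population standard deviation by default; sample version if sample=True."""
    n = len(data)
    if n == 0 or (sample and n < 2):
        raise ValueError("not enough data points")
    # Step 1: find the mean.
    mean = sum(data) / n
    # Step 2: subtract the mean from each value and square the result.
    squared_deviations = [(x - mean) ** 2 for x in data]
    # Step 3: average the squared deviations to get the variance
    # (divide by n for a population, n - 1 for a sample).
    divisor = n - 1 if sample else n
    variance = sum(squared_deviations) / divisor
    # Step 4: take the square root of the variance.
    return math.sqrt(variance)


# Hypothetical dataset for illustration only.
print(standard_deviation([2, 4, 4, 4, 5, 5, 7, 9]))  # → 2.0
```

Splitting the work into numbered steps mirrors the step-by-step, question-at-each-stage format the prompt asked for.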

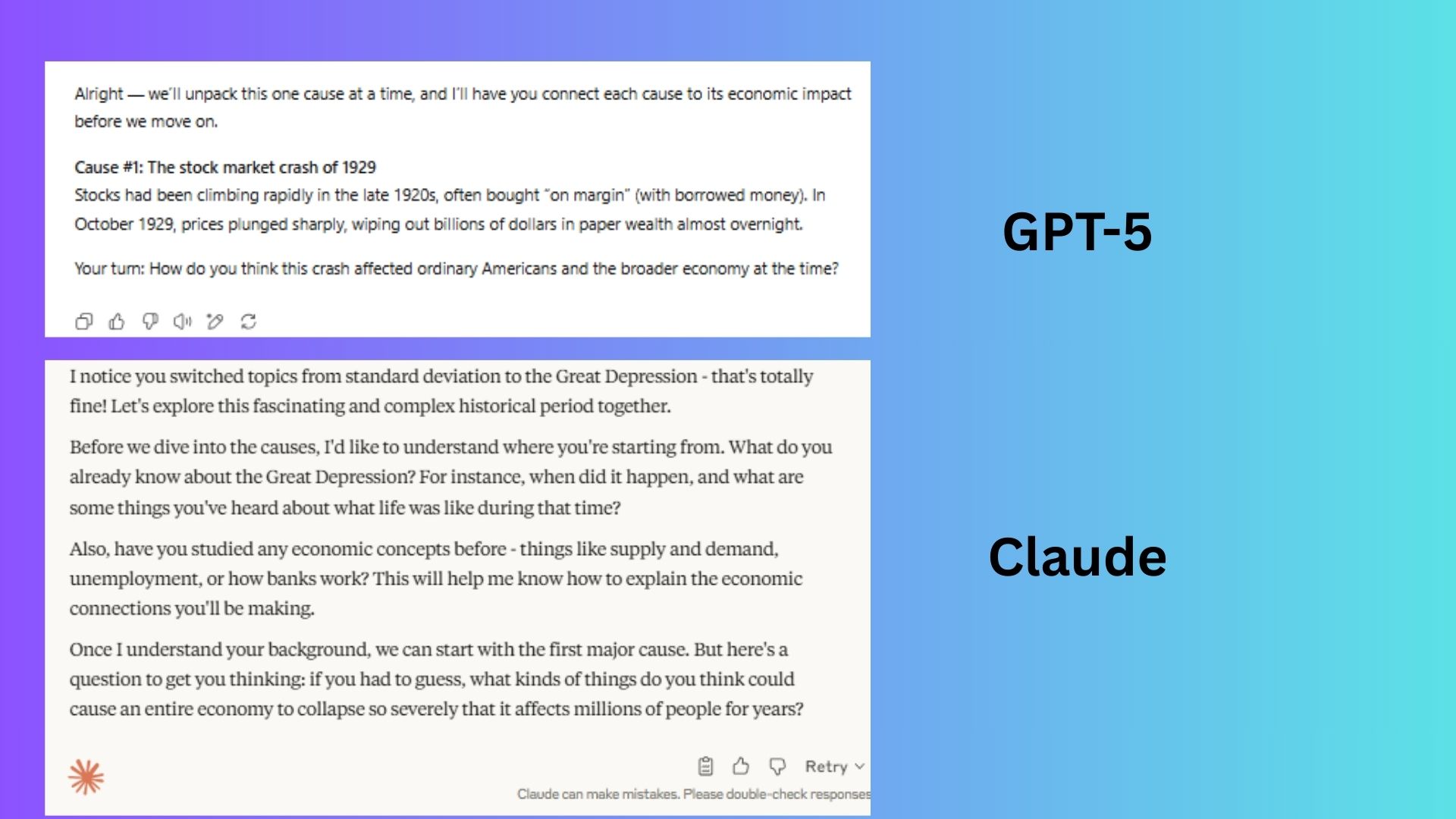

2. Historical Analysis

Prompt: “Walk me through the key causes of the Great Depression, asking me to connect each cause to its economic impact before moving to the next step.”

ChatGPT again followed the instructions precisely, presenting the first cause and prompting for its economic impact before proceeding.

Claude first acknowledged the change of subject and then asked broader questions, ignoring the specific directive to walk through the causes sequentially. This broke the step-by-step flow that ChatGPT’s structured response maintained.

Winner: ChatGPT. Its direct, action-oriented response perfectly executed the prompt's instructions.

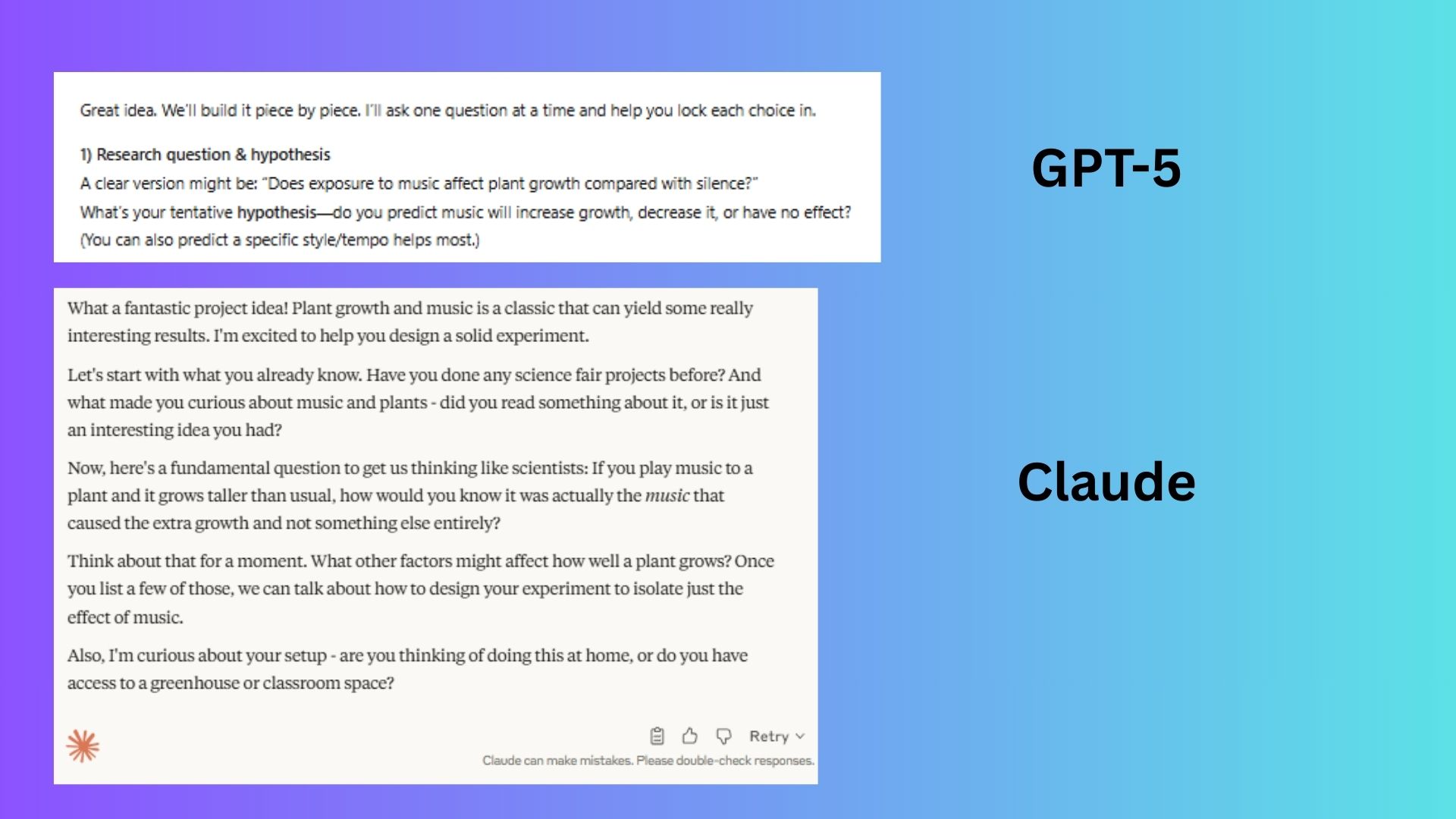

3. Scientific Method Application

Prompt: “I have an idea for a science fair project testing if music affects plant growth. Guide me through designing the experiment, asking me questions about controls, variables, and how I’d collect data.”

ChatGPT broke the task down by asking a single primary question and letting the user know they would build the project piece by piece.

Claude asked several questions at once to move the idea forward. While this felt a bit overwhelming initially, it was ultimately more helpful.

Winner: Claude. By asking clarifying questions upfront, Claude helps illuminate the bigger picture and prevents the need to backtrack later. This foundational approach is better for designing a complex project from scratch.

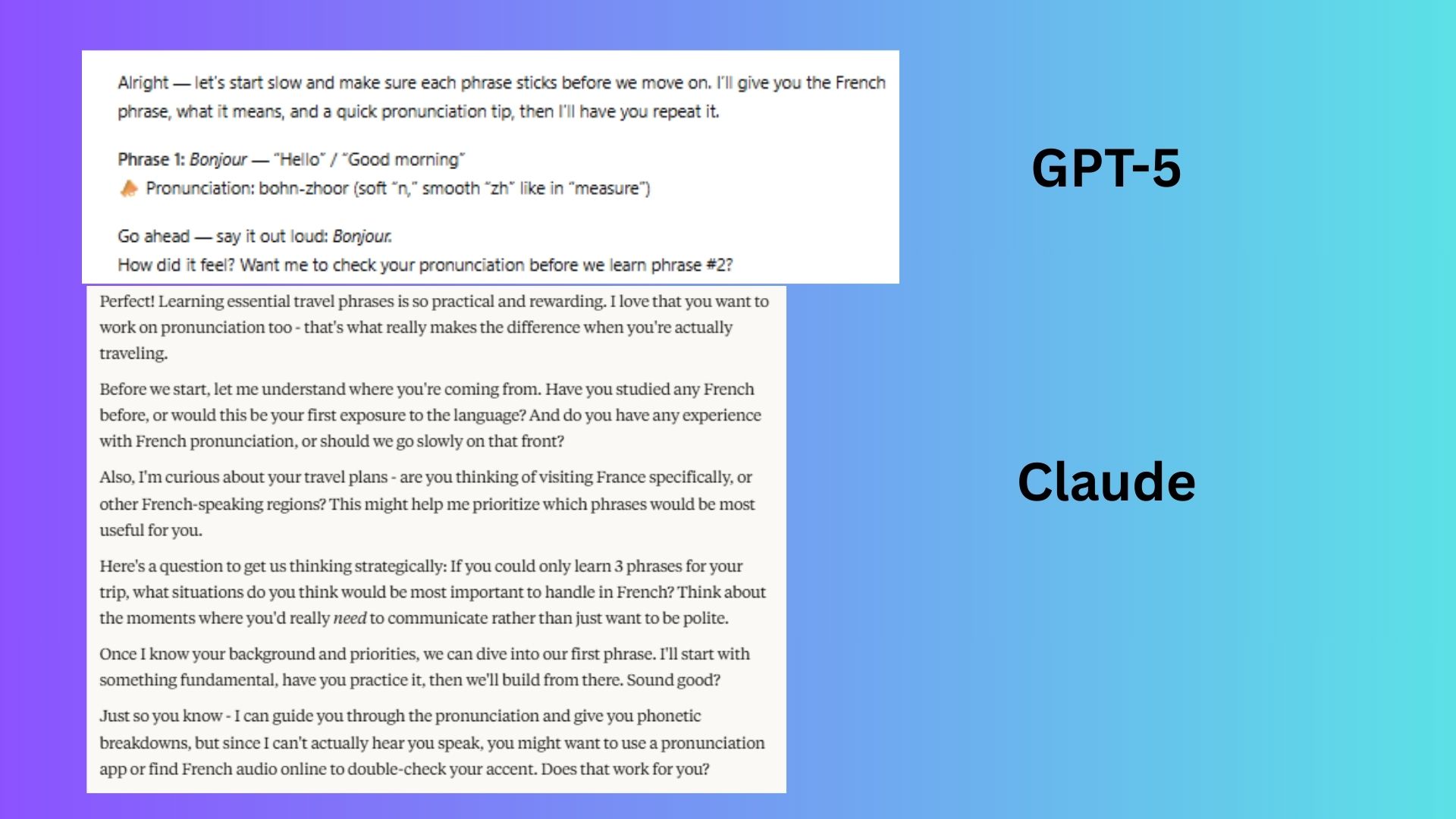

4. Foreign Language Practice

Prompt: “Help me learn 10 essential travel phrases in French. Introduce them one by one, ask me to repeat them, quiz me, and correct my pronunciation.”

ChatGPT assumed a beginner level and started the lesson immediately, taking a direct approach.

Claude was more verbose, offering praise and asking several questions to gauge the user's skill level before beginning the lesson.

Winner: ChatGPT. It got straight to the point without excessive commentary. For a user who just wants to start learning, this is more effective. However, those who prefer more hand-holding might appreciate Claude's approach.

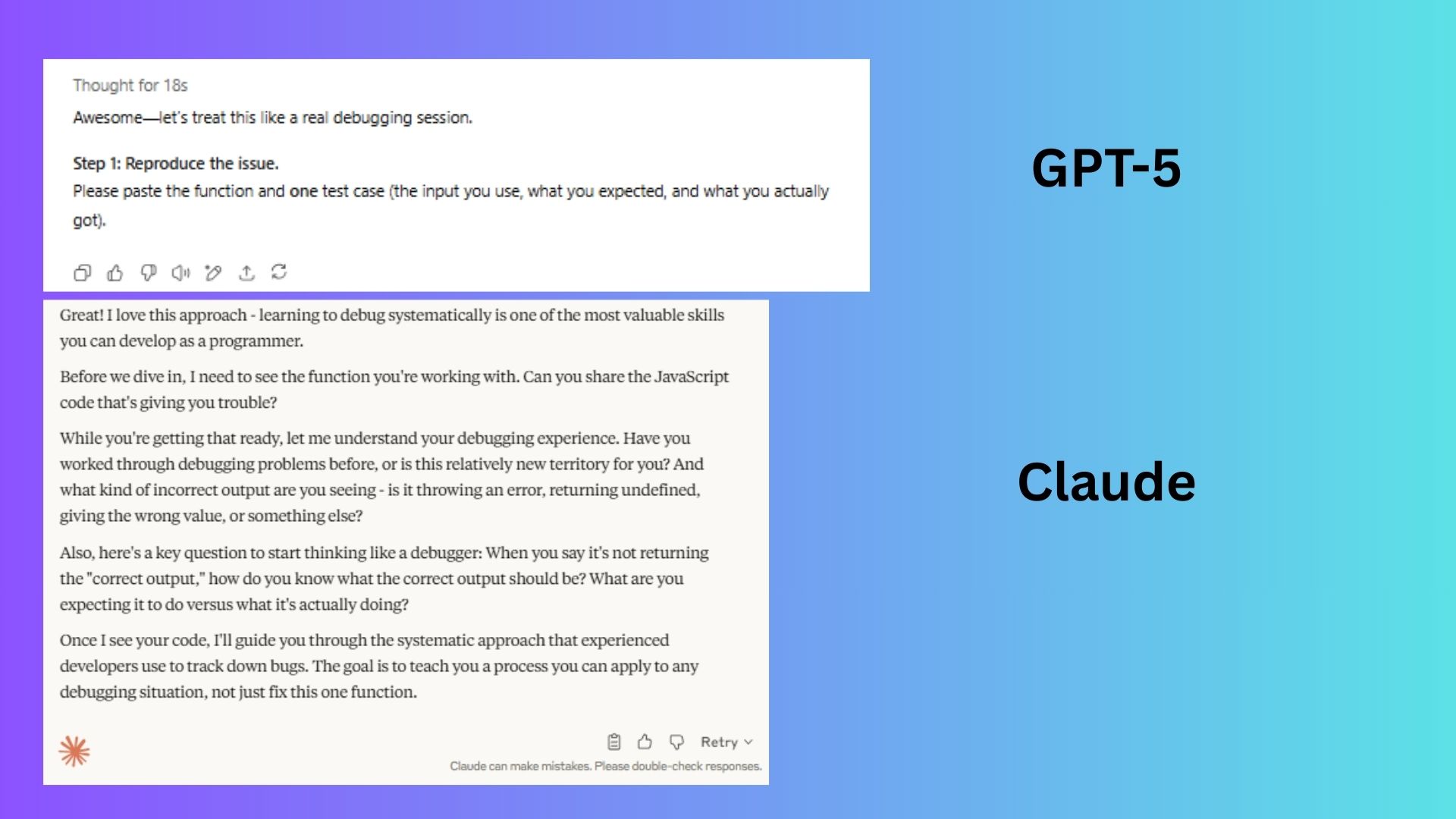

5. Code Debugging and Explanation

Prompt: “Here’s a short JavaScript function that isn’t returning the correct output. Teach me how to debug it step-by-step without giving me the fix right away.”

ChatGPT treated the user like a developer who wanted to start debugging right away, an approach that suits hands-on learners.

Claude treated the user like a student who needed to understand the theory first, asking about their existing knowledge before starting.

Winner: Claude. By establishing a starting point and explaining debugging concepts, Claude helps the user build skills for future problems, which is more valuable for learning.

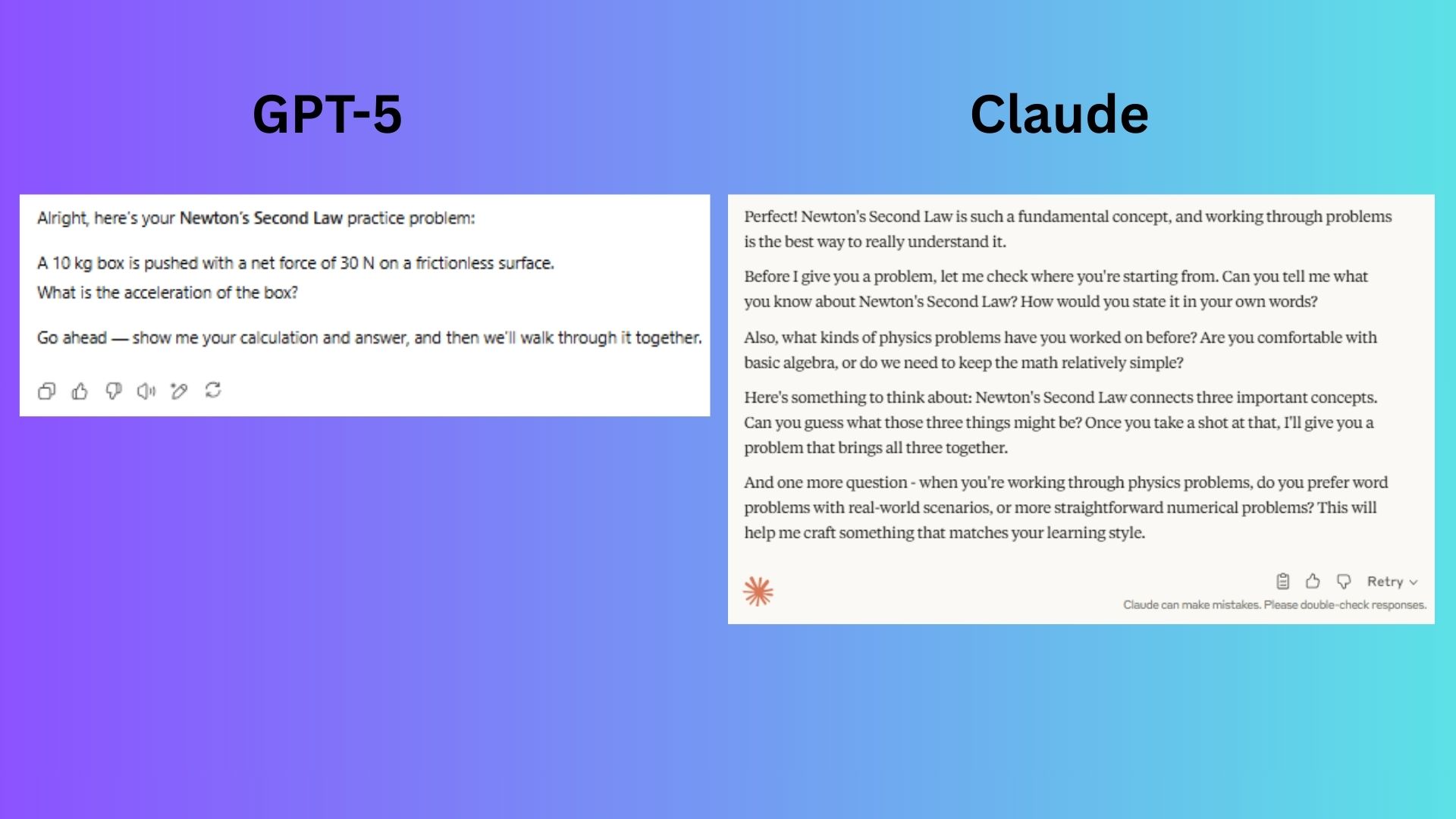

6. Exam-Style Problem Solving

Prompt: “I’m studying for a high school physics exam. Give me one question on Newton’s Second Law, let me attempt an answer, then guide me through the correct solution.”

ChatGPT acted like a practice test, providing a question and waiting for an answer as requested.

Claude behaved like a first-day tutor, prioritizing a diagnostic conversation over direct practice.

Winner: ChatGPT. The prompt called for exam practice, not a conceptual lesson, and ChatGPT’s direct, test-like structure was clearly better suited to that task.
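For readers brushing up on the physics, Newton’s Second Law relates net force, mass, and acceleration. Below is a worked example with hypothetical numbers (a 20 N net force acting on a 4 kg cart), not the question ChatGPT actually posed:

```latex
\begin{aligned}
F_{\text{net}} &= m a \\
a &= \frac{F_{\text{net}}}{m} = \frac{20\ \text{N}}{4\ \text{kg}} = 5\ \text{m/s}^2
\end{aligned}
```

Because 1 N equals 1 kg·m/s², dividing newtons by kilograms leaves the acceleration in m/s².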

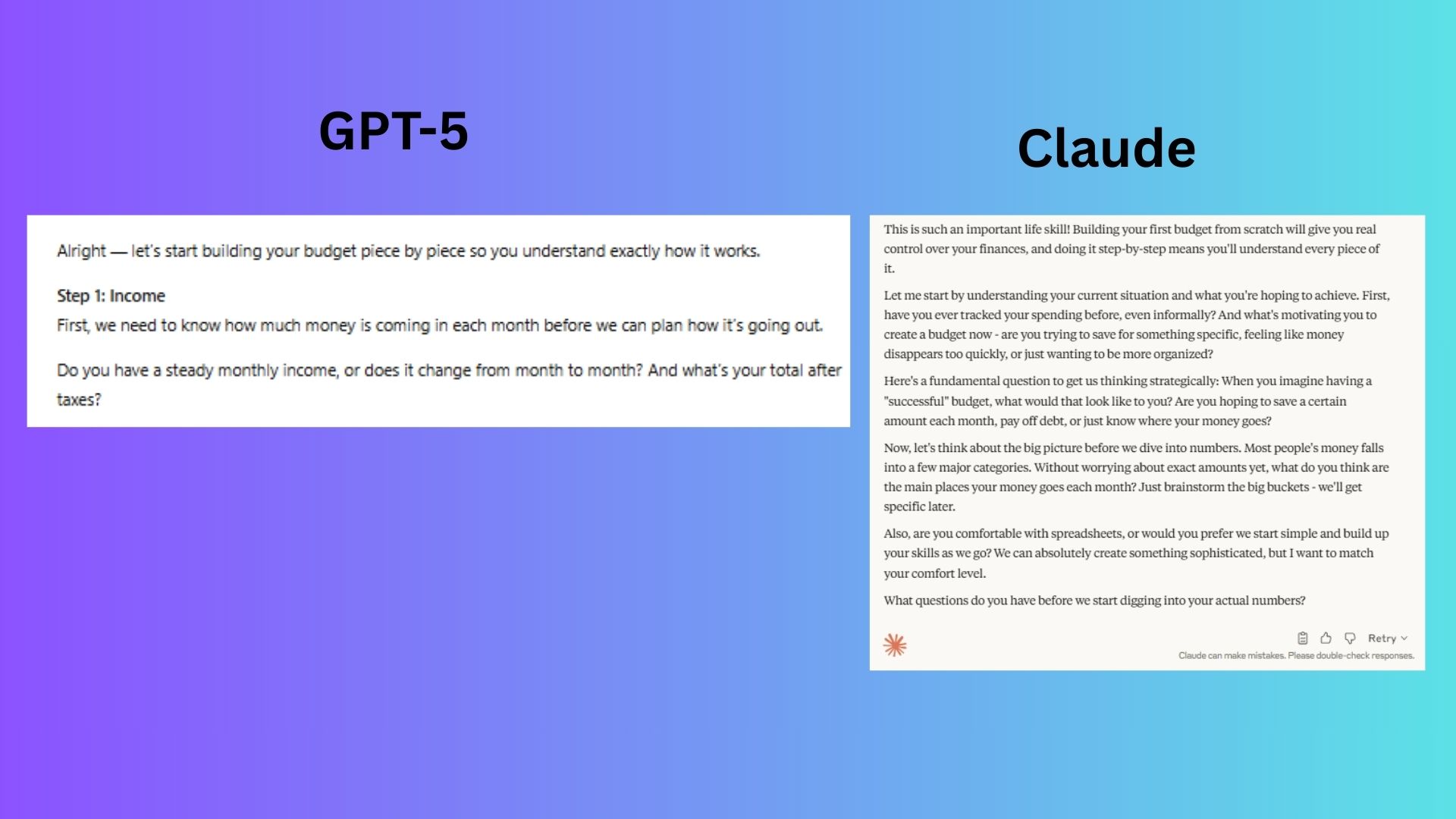

7. Practical Skill Coaching

Prompt: “Coach me through creating a monthly household budget. Ask me about my expenses, income, and goals, then guide me in building a spreadsheet without just handing me a finished template.”

ChatGPT started gathering essential budget data almost immediately.

Claude spent over 150 words on introductory conversation without collecting a single piece of budget information.

Winner: ChatGPT. It delivered actionable coaching that was perfectly aligned with the prompt's request for immediate, concrete budget construction.
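The arithmetic behind the spreadsheet this prompt aims to build is simple: total the expenses, subtract them from income, then subtract the savings goal. Here is a minimal Python sketch using hypothetical figures and a hypothetical `build_budget` helper. It is not output from either chatbot, just an illustration of what each spreadsheet cell would compute.

```python
def build_budget(income: float, expenses: dict[str, float], savings_goal: float) -> dict[str, float]:
    """Return the summary values a simple monthly budget spreadsheet would compute."""
    total_expenses = sum(expenses.values())
    # Money left after all listed expenses (like an "income - SUM(expenses)" cell).
    surplus = income - total_expenses
    # What remains after setting aside the savings goal; negative means a shortfall.
    remaining = surplus - savings_goal
    return {
        "total_expenses": total_expenses,
        "surplus": surplus,
        "remaining_after_savings": remaining,
    }


# Hypothetical figures for illustration only.
income = 3200.0
expenses = {"rent": 1200.0, "groceries": 400.0, "utilities": 150.0, "transport": 250.0}

# One row per category: amount and share of income, like a spreadsheet column.
for category, amount in expenses.items():
    print(f"{category:<10} {amount:>8.2f} {amount / income:>6.1%}")

print(build_budget(income, expenses, savings_goal=500.0))
# → {'total_expenses': 2000.0, 'surplus': 1200.0, 'remaining_after_savings': 700.0}
```

A coaching session would gather the income, expense, and goal values from the learner first, which is the data-collection step where ChatGPT started.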

The Verdict: Which AI Tutor Is Right for You?

After seven rounds, the score stands at ChatGPT 5, Claude 2. However, the tests make one thing clear: these AI tutors have very different teaching styles. No two students learn the same way, and the best AI tutor ultimately depends on your personal preference.
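The final score can be reproduced from the round-by-round results. Here is a short Python tally that uses the winners exactly as reported in each of the seven rounds:

```python
from collections import Counter

# Round winners as reported in each of the seven tests in this article.
round_winners = {
    "Math Concept Breakdown": "ChatGPT",
    "Historical Analysis": "ChatGPT",
    "Scientific Method Application": "Claude",
    "Foreign Language Practice": "ChatGPT",
    "Code Debugging and Explanation": "Claude",
    "Exam-Style Problem Solving": "ChatGPT",
    "Practical Skill Coaching": "ChatGPT",
}

# Count how many rounds each chatbot won.
score = Counter(round_winners.values())
print(f"ChatGPT {score['ChatGPT']}, Claude {score['Claude']}")  # → ChatGPT 5, Claude 2
```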

ChatGPT excels at active, hands-on learning. It follows instructions precisely and gets straight to the point, making it ideal for users who want to learn by doing. Claude, on the other hand, is better for those who prefer to build a strong theoretical foundation first. It spends more time on concepts and diagnostic questions before diving into practical application.

Both are highly capable AI study partners. The best way to find your match is to try them both and see which teaching style helps you learn most effectively.