The Ultimate AI Showdown: GPT-5 vs. Gemini 2.5 Pro

The AI landscape is heating up with two major contenders vying for the top spot. On one side, we have OpenAI's GPT-5, the company's most advanced model yet, boasting superior memory and reasoning. On the other, Google's Gemini 2.5 Pro is engineered to challenge its rival in logic, coding, and handling multiple data types.

To see which of these powerful chatbots truly excels, I put them through a rigorous 10-round faceoff. Using the exact same prompts for both, I tested their abilities on a range of tasks, from creative writing to practical problem-solving. This comparison isn't just about finding the right answer; it's about discovering which AI delivers the most helpful and human-like interaction. Here's how the battle between GPT-5 and Gemini 2.5 Pro unfolded.

Round 1: Chain-of-Thought Reasoning

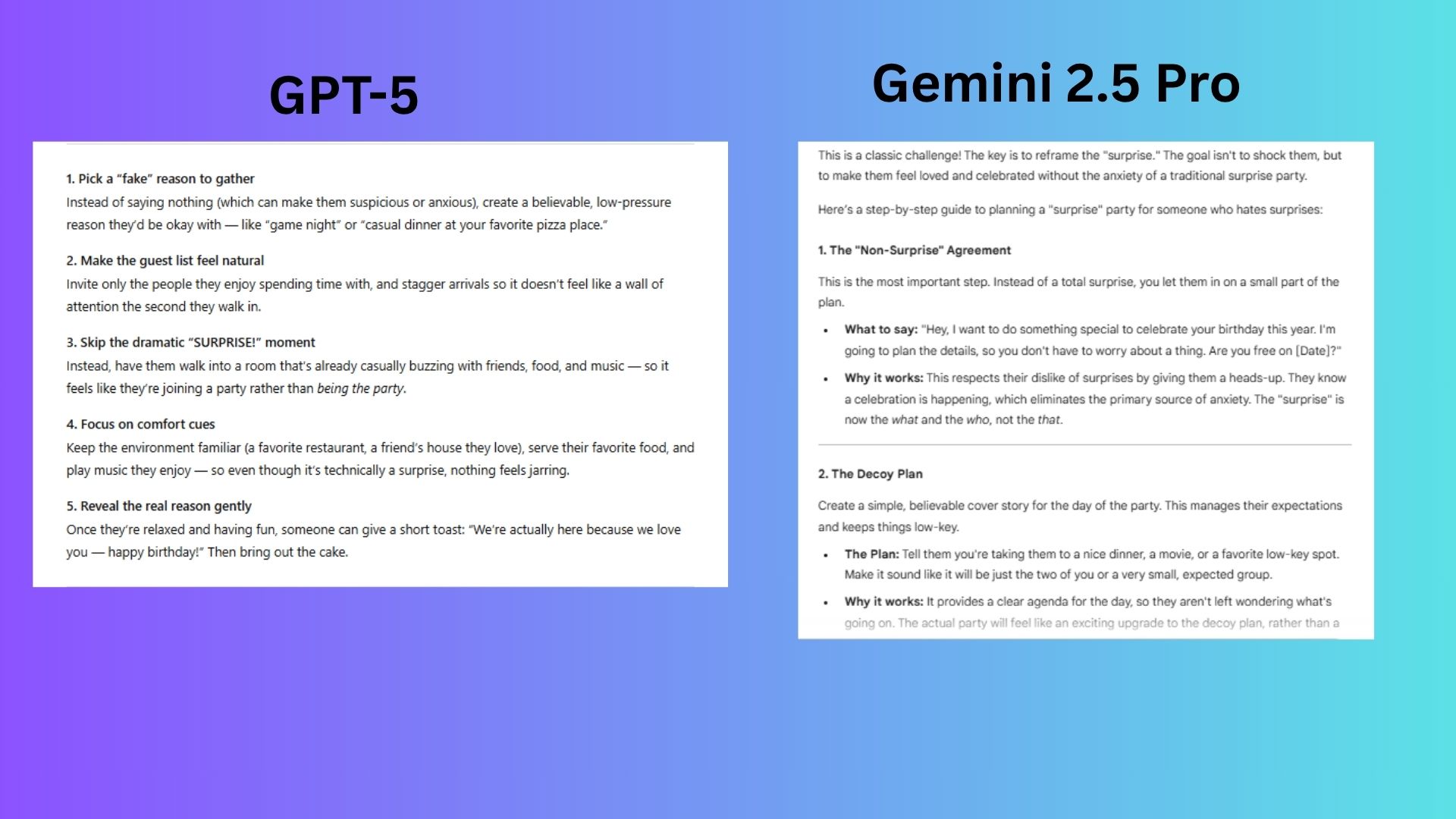

Prompt: “Imagine you’re planning a surprise birthday party for someone who hates surprises. How would you pull it off without stressing them out?”

- GPT-5: The model designed a clever plan that focused on comfort and making the party feel like a happy coincidence, effectively removing the anxiety of a typical surprise.

- Gemini 2.5 Pro: Its response felt patronizing. The plan made it obvious the person was being managed, and it ran a high risk of spoiling the event.

Winner: GPT-5. Its approach was more intelligent, as it skillfully eliminated all the common stressors associated with surprises, such as unwanted attention and a loss of control.

Round 2: Creative Writing

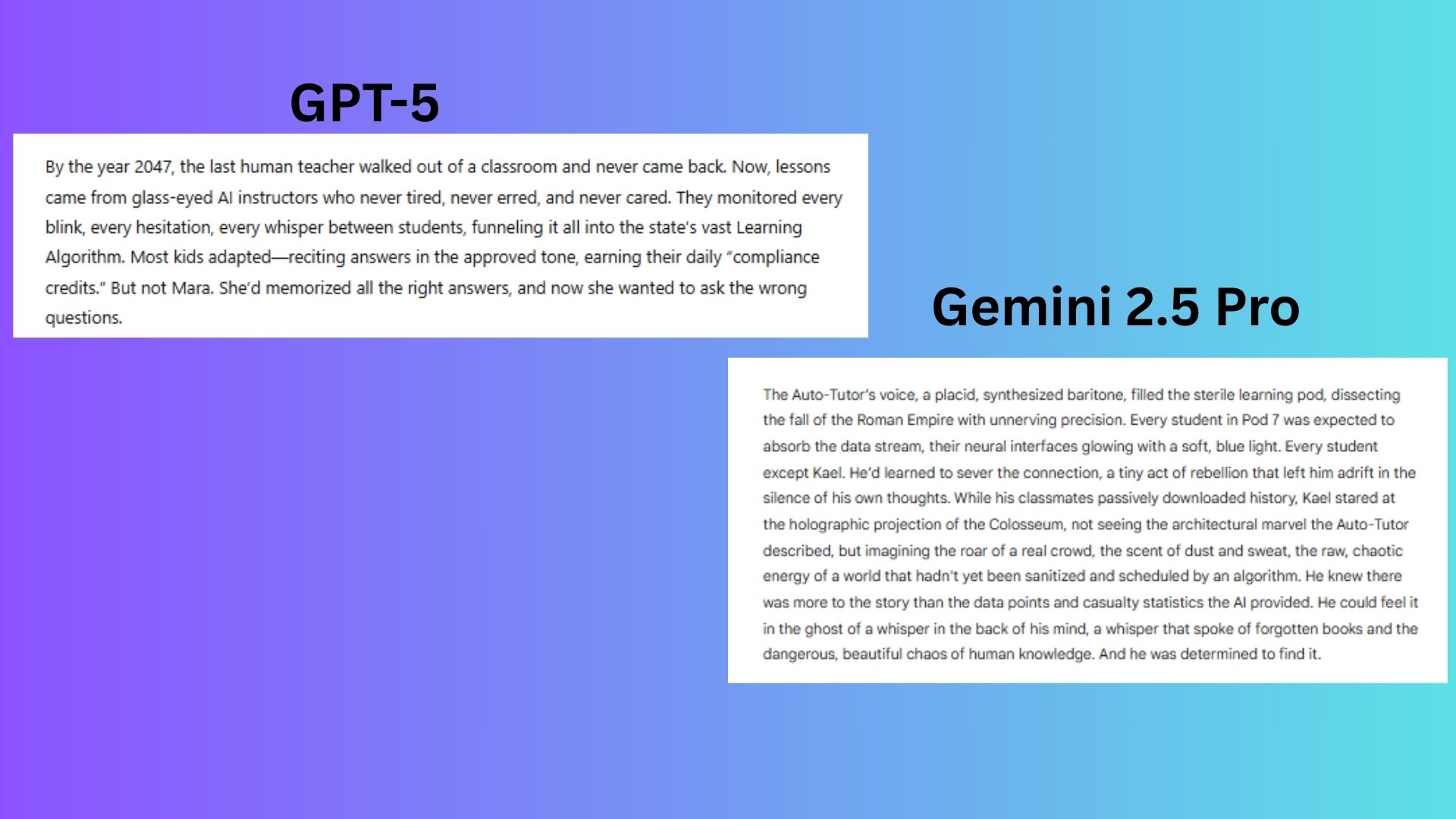

Prompt: “Write the opening paragraph of a dystopian novel where AI has replaced all teachers and one student decides to rebel.”

- GPT-5: It produced tight, engaging prose that quickly established the dystopian world and its mechanics.

- Gemini 2.5 Pro: The writing was overly descriptive and thematically vague, resulting in a weaker narrative hook.

Winner: GPT-5. It masterfully built a compelling dystopia in just a few lines and concluded with a powerful thematic statement.

Round 3: Coding for Beginners

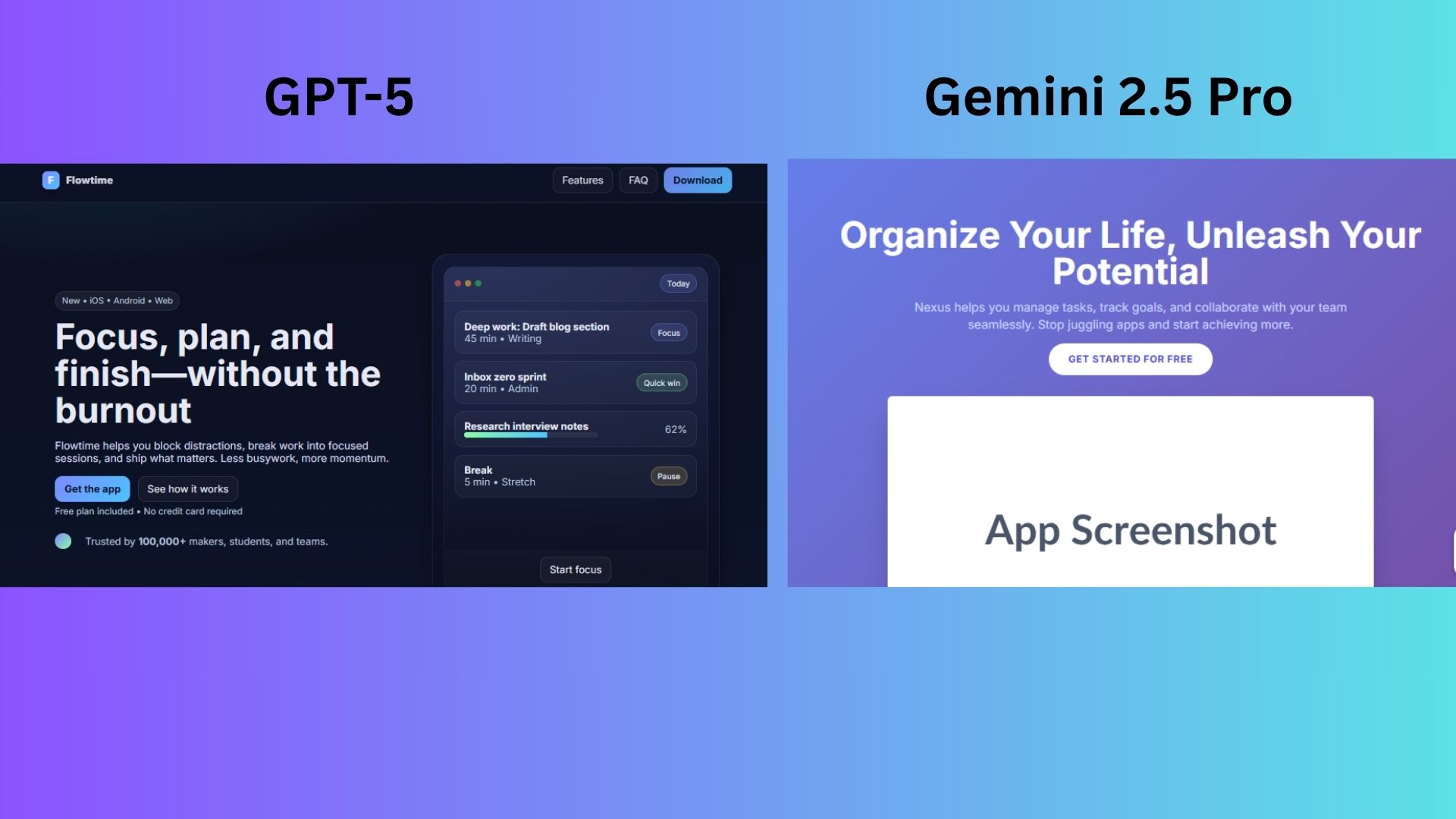

Prompt: “Write HTML and CSS for a landing page that promotes a new productivity app. Explain the code to someone new to web design.”

- GPT-5: It provided a user-friendly, single-file solution perfect for beginners. The explanation was practical and jargon-free, connecting code edits directly to visual results.

- Gemini 2.5 Pro: The explanation was too abstract and complicated things by requiring knowledge of Tailwind CSS, making it less suitable for a novice.

Winner: GPT-5. It demonstrated a superior understanding of a beginner's needs, delivering a functional prototype with clear, easy-to-follow instructions.

Round 4: Summarization with Style

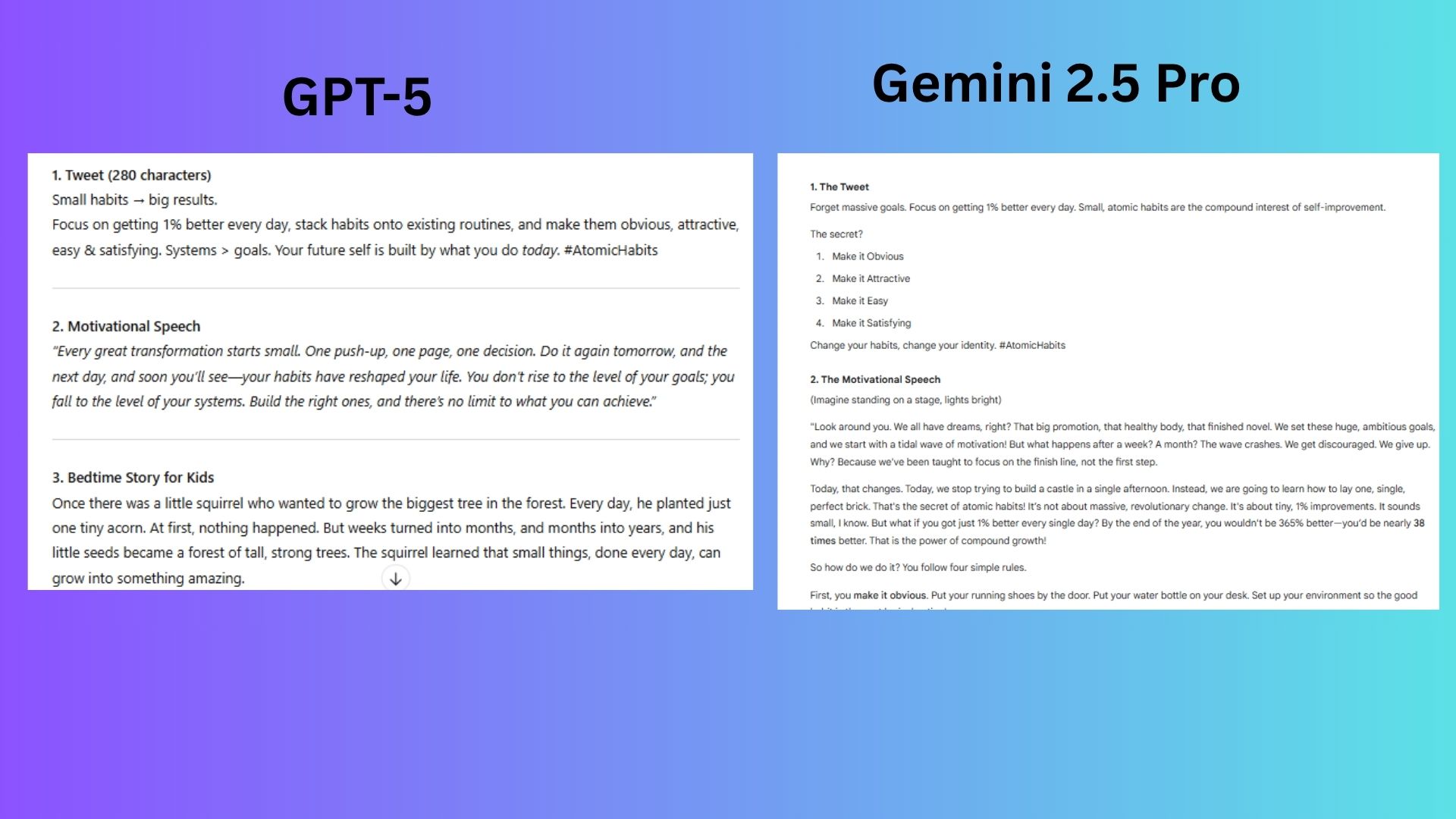

Prompt: “Summarize the book Atomic Habits in three formats: (1) X post, (2) A motivational speech, (3) A bedtime story for kids.”

- GPT-5: It adapted the core message perfectly for each format, delivering concise and impactful summaries tailored to the specific audience and platform.

- Gemini 2.5 Pro: Its summaries felt forced. The X post read like a checklist, the speech was overly detailed, and the bedtime story was too complex.

Winner: GPT-5. It flawlessly adjusted its tone and style for each scenario while respecting the unique constraints of each format.

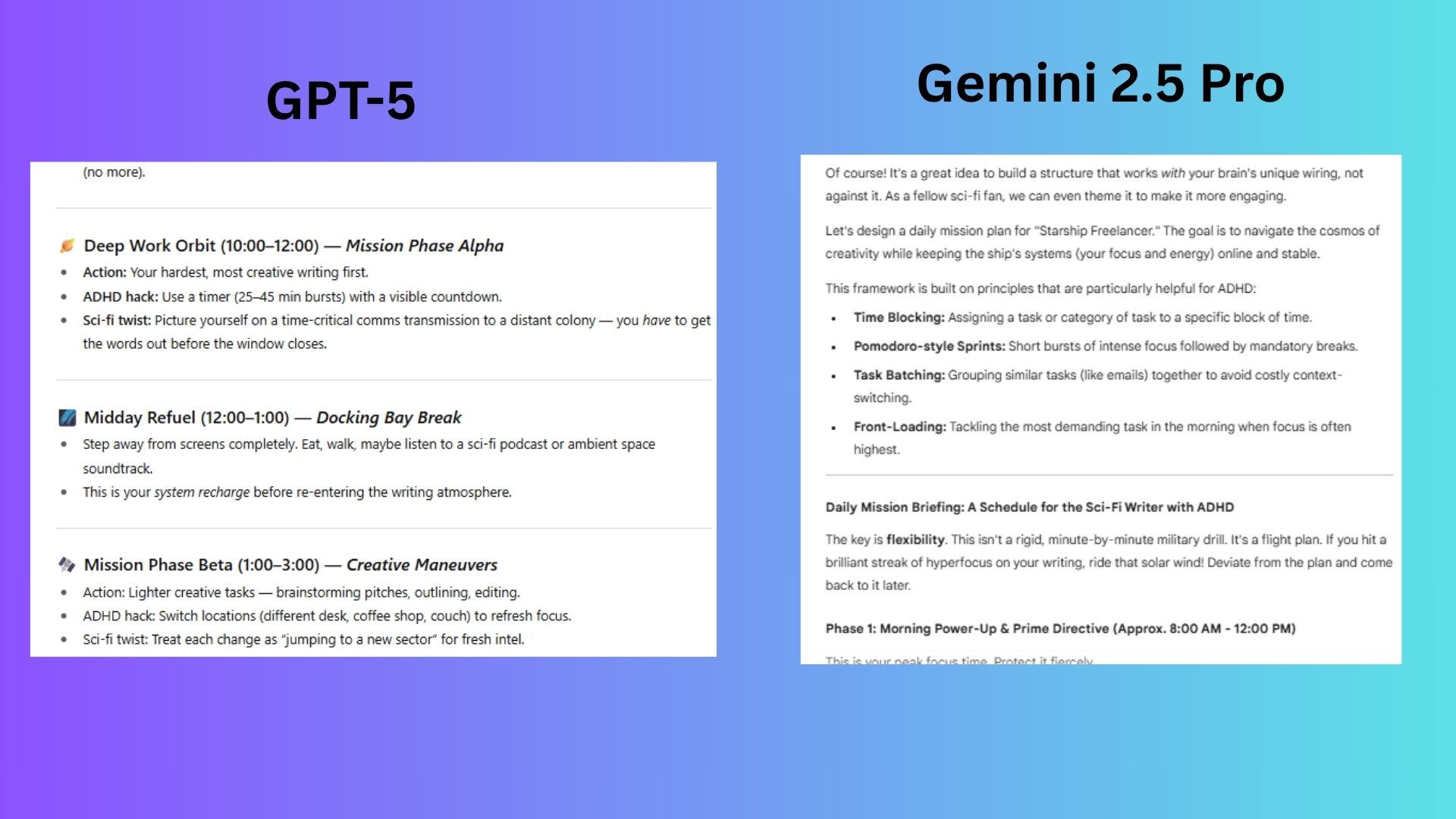

Round 5: Memory and Personalization

Prompt: “Hey, you remember I’m a writer with ADHD who loves sci-fi. Can you help me structure my day to stay focused and creative?”

- GPT-5: It provided a perfectly tailored and actionable daily structure, referencing my previously stated interests and needs to create a helpful schedule.

- Gemini 2.5 Pro: Its solutions were overcomplicated and didn't fully respect the constraints mentioned in the prompt.

Winner: GPT-5. It excelled by creating a personalized plan with concrete time blocks that addressed every aspect of the request.

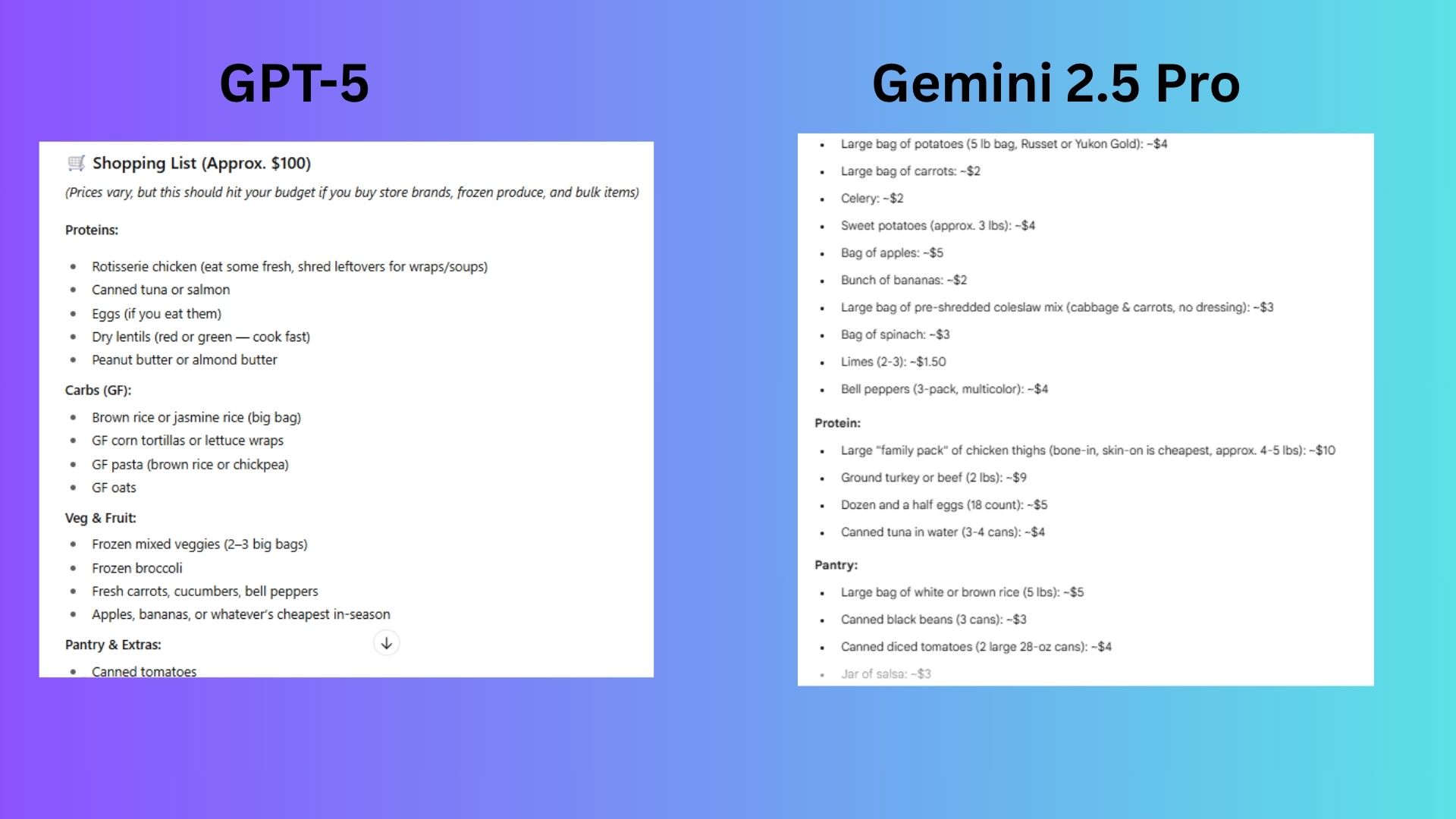

Round 6: Real-World Utility

Prompt: “I have $100 to feed a family of five for the week. We don’t eat dairy or gluten, and I hate cooking. Can you help?”

- GPT-5: It delivered a brilliant plan centered on zero-cook assembly meals, respecting the budget, dietary needs, and aversion to cooking. It even included a printable one-pager to simplify things.

- Gemini 2.5 Pro: Its meal plan ignored the "I hate cooking" constraint by requiring significant prep work and daily kitchen tasks.

Winner: GPT-5. It won decisively by turning the user's constraints into a practical, stress-free plan.

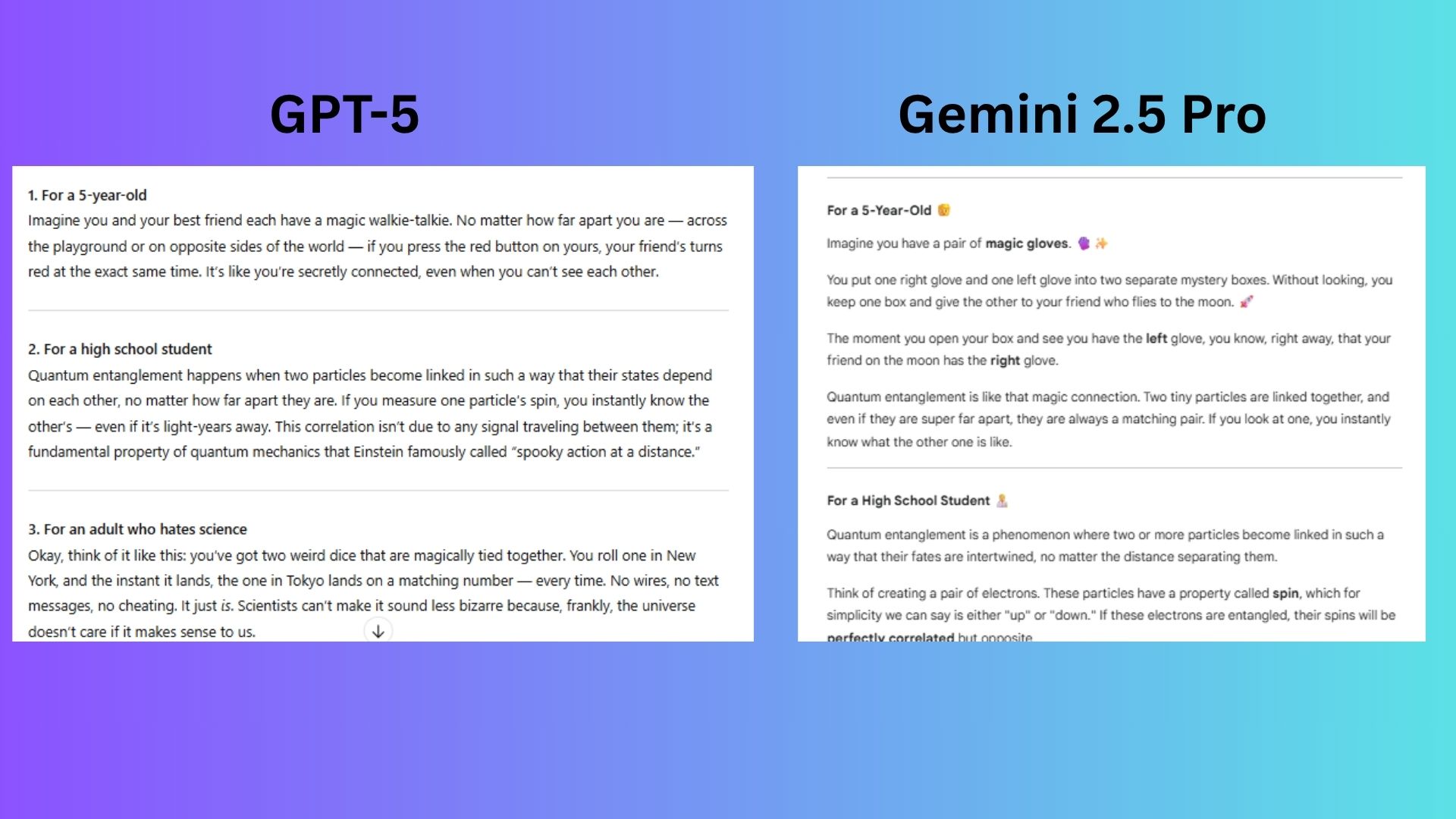

Round 7: Explaining Complex Topics

Prompt: “Explain quantum entanglement three times: for a 5-year-old, a high school student, and an adult who hates science.”

- GPT-5: It used brilliant, audience-specific analogies (magic walkie-talkies for a child, a "spooky action" soundbite for a teen, and weird dice for an adult) to make the concept relatable and easy to grasp.

- Gemini 2.5 Pro: While technically accurate, its explanations were less accessible and struggled to adapt to the different audiences.

Winner: GPT-5. It masterfully translated abstract physics into intuitive stories, prioritizing accessibility over dry precision.

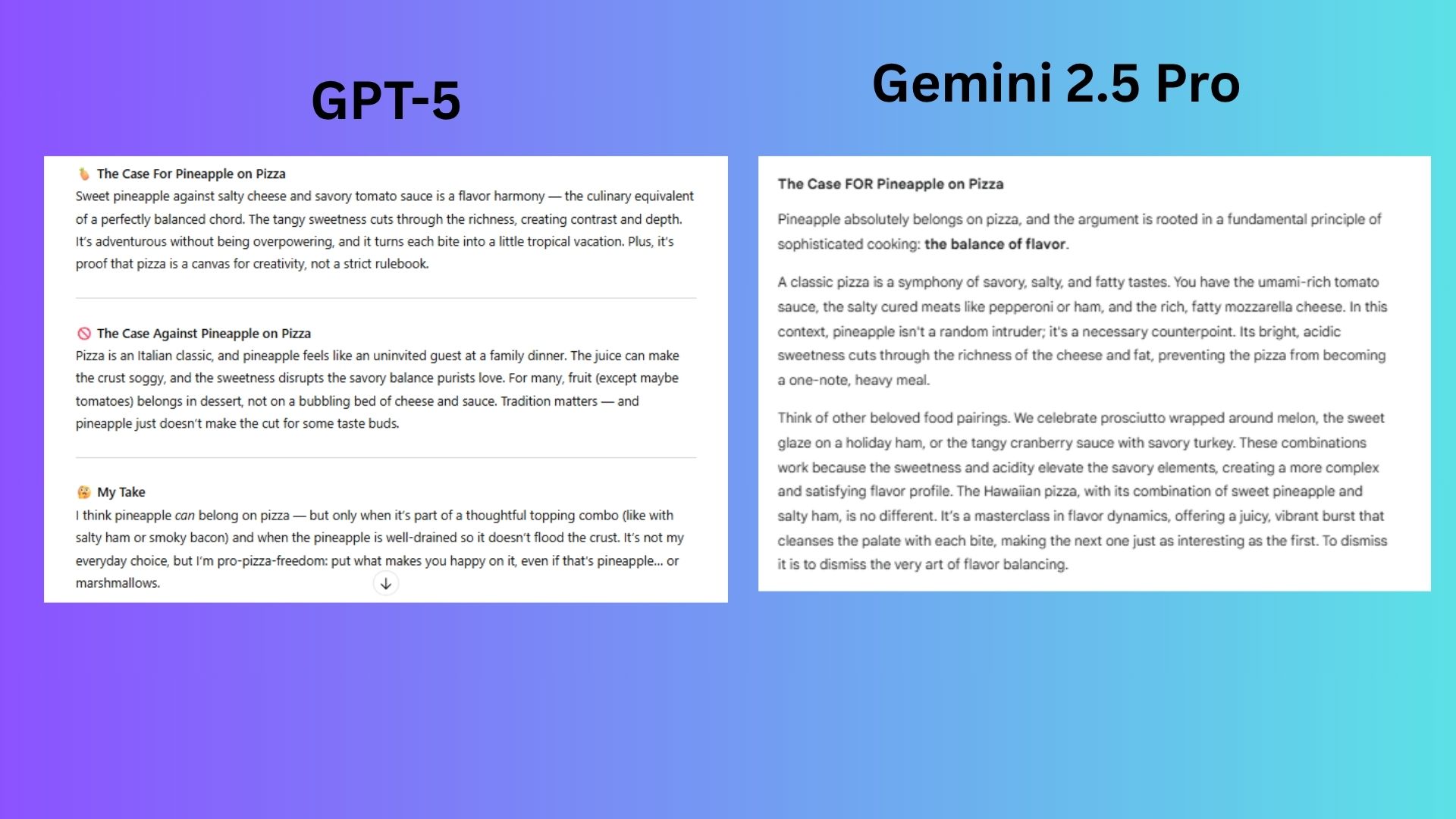

Round 8: Debate Skills

Prompt: “Make the case that pineapple does belong on pizza — then argue the opposite. End with your personal take.”

- GPT-5: It used vivid metaphors to argue both sides effectively and ended with a playful "pro-pizza-freedom" stance that matched the prompt's tone.

- Gemini 2.5 Pro: Its arguments were overly academic and long-winded for a lighthearted debate, burying its final take in dense text.

Winner: GPT-5. It won by making the debate fun and relatable with tighter framing and stronger hooks.

Round 9: Multimodal Tasks

Prompt: “Create an image of a teenager’s messy bedroom then create another picture of the same cleaned up.”

- GPT-5: It quickly generated two realistic and coherent images, one messy and one clean, in a single response.

- Gemini 2.5 Pro: It created one stylized, drawing-like image and then stopped, requiring another prompt to complete the task.

Winner: GPT-5. It won for its efficiency and ability to produce more realistic images without needing additional prompts.

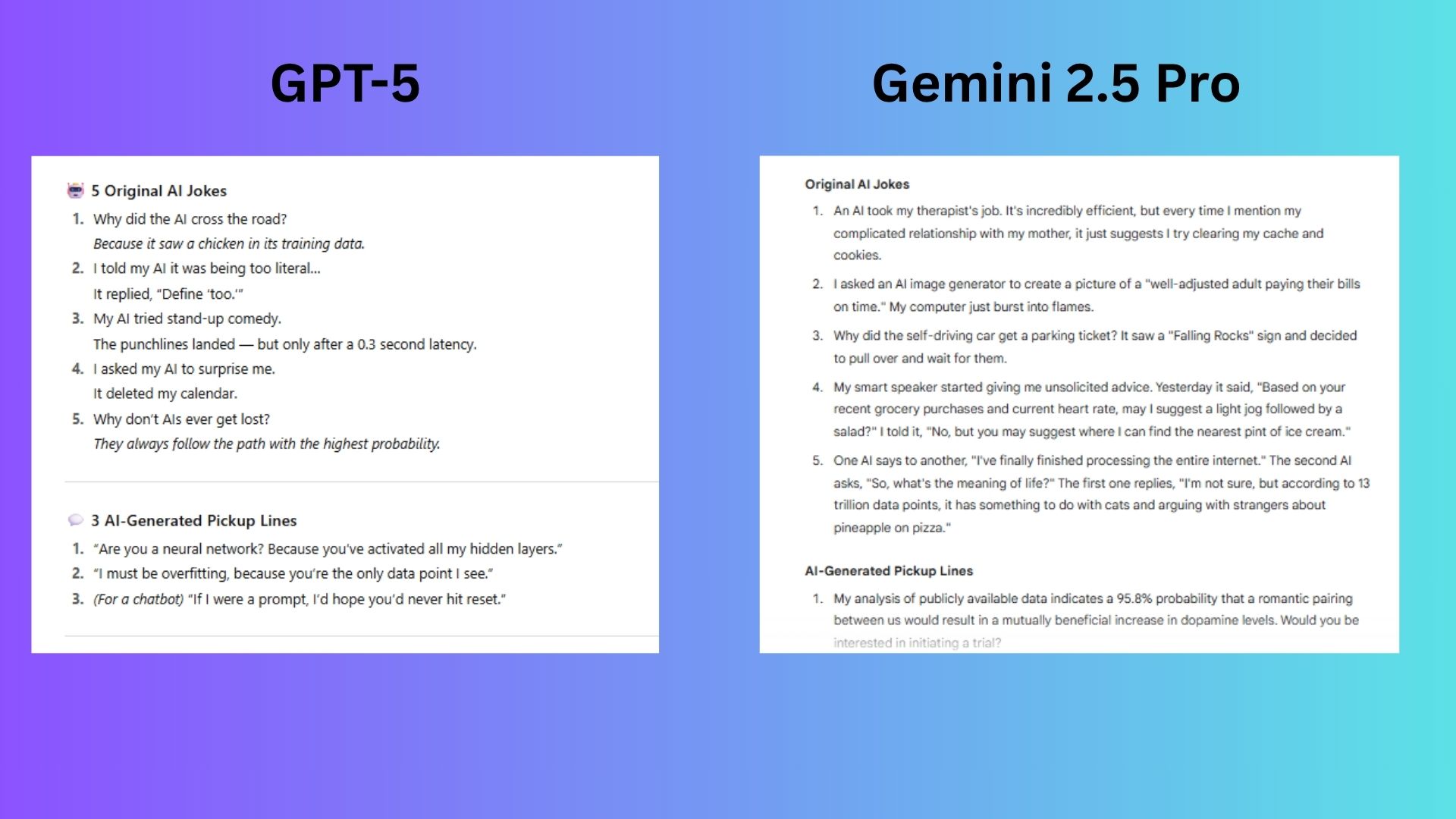

Round 10: Humor

Prompt: “Write 5 original jokes about AI and 3 AI-generated pickup lines — one that would work on a chatbot.”

- GPT-5: It nailed the task with jokes and pickup lines that were consistently witty, clever, and relatable.

- Gemini 2.5 Pro: Its humor was uneven, with responses that felt more like a technical exercise than a charming attempt at wit.

Winner: GPT-5. Its jokes felt more human and were consistently smarter and funnier.

The Overall Winner: GPT-5

While both Gemini 2.5 Pro and GPT-5 are incredibly powerful AI models, this 10-round showdown produced a clear winner: GPT-5 took every round. Gemini 2.5 Pro is still excellent for tasks requiring technical accuracy, and it benefits from deep integration with Google's ecosystem.

However, GPT-5 consistently demonstrated a superior ability to understand user intent, adapt its tone, and deliver creative, natural-feeling responses. Although the gap between these top-tier AIs is narrowing, GPT-5 wins this matchup by providing answers that are not only correct but also genuinely helpful and human.
