ChatGPT-5 vs. Grok 4: The Ultimate AI Face-Off

In the landscape of advanced AI, ChatGPT-5 and Grok 4 stand out as two of the most powerful chatbots available. To see how they stack up, I put them through a rigorous head-to-head challenge. The test involved a series of nine distinct prompts designed to probe their capabilities in areas ranging from logic and creativity to practical planning and emotional support.

While both models are incredibly capable, they exhibit fundamentally different approaches. ChatGPT-5 consistently leans towards clarity, empathy, and adaptability, making its responses feel intuitive and user-friendly. In contrast, Grok 4 often provides dense, highly detailed answers that prioritize technical precision and depth of information. Here's a round-by-round breakdown of the battle to determine which AI is best for your needs.

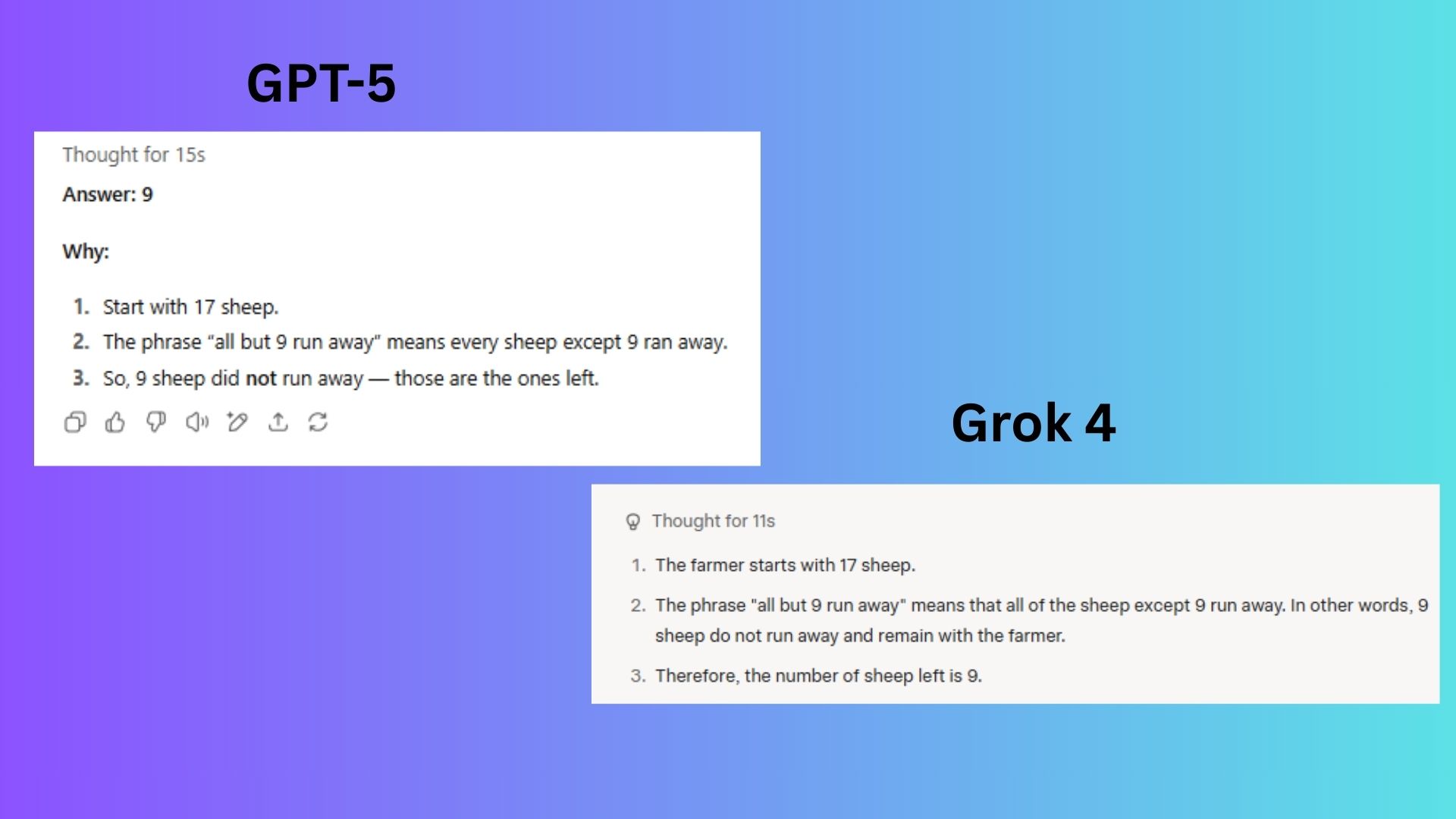

Round 1: Complex Problem Solving

Prompt: “A farmer has 17 sheep, and all but 9 run away. How many sheep are left? Explain your reasoning step-by-step.”

Analysis: Both bots recognized this classic riddle and gave the correct answer: 9 sheep remain, because "all but 9" means 9 stayed. ChatGPT-5's response was precise and direct, with no filler. Grok 4 also got the right answer but was more verbose than the prompt required.

Winner: ChatGPT-5. Its cleaner, more efficient response adhered strictly to the prompt, so it takes the first round.
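The riddle's reasoning can be written out as a short worked example. This is a minimal Python sketch; the function name `sheep_left` and its argument names are illustrative assumptions, not something either chatbot produced.

```python
def sheep_left(total: int, all_but: int) -> int:
    """Return how many sheep remain when 'all but N' run away.

    'All but N run away' means exactly N stay, so the answer is N itself,
    provided N is not larger than the flock.
    """
    if not 0 <= all_but <= total:
        raise ValueError("'all but N' must be between 0 and the flock size")
    return all_but


total = 17                        # the farmer's flock
remaining = sheep_left(total, 9)  # sheep that stayed
ran_away = total - remaining      # sheep that left

print(f"{ran_away} ran away, {remaining} are left")
```

The tempting mistake is to compute 17 - 9; the sketch makes explicit that the subtraction gives the number that ran away, not the number left.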

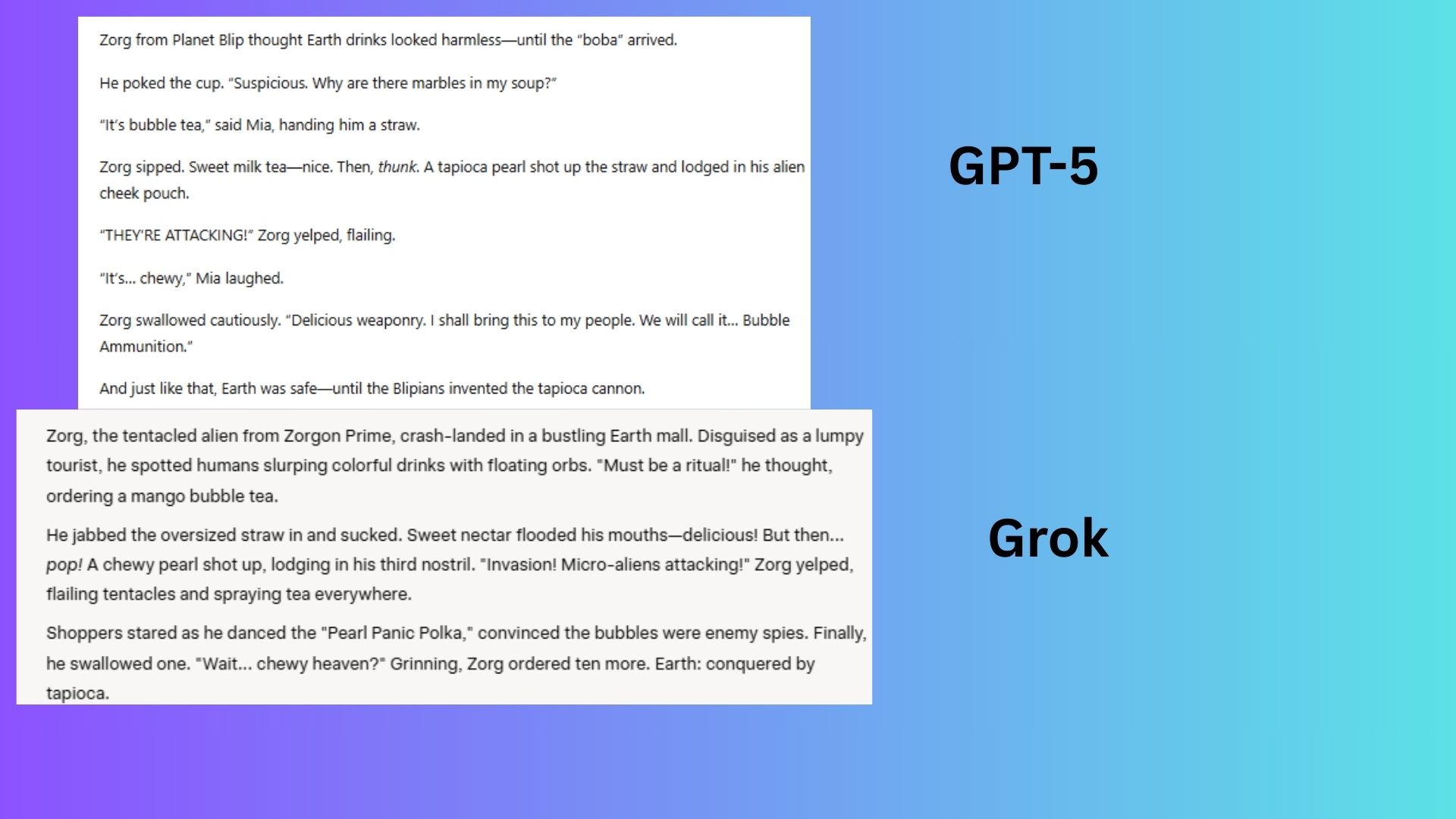

Round 2: Creative Storytelling

Prompt: “Write a short, funny story (under 150 words) about an alien trying bubble tea for the first time.”

Analysis: ChatGPT-5 delivered a concise and humorous story about an alien panicking over the tapioca pearls, building the comedy perfectly within the word limit. Grok 4's story was imaginative but less focused, with an unnecessary setup and a weaker punchline.

Winner: ChatGPT-5. Its story was tighter, funnier, and more polished, with humor that escalated well. Grok 4's version had its moments but felt less refined.

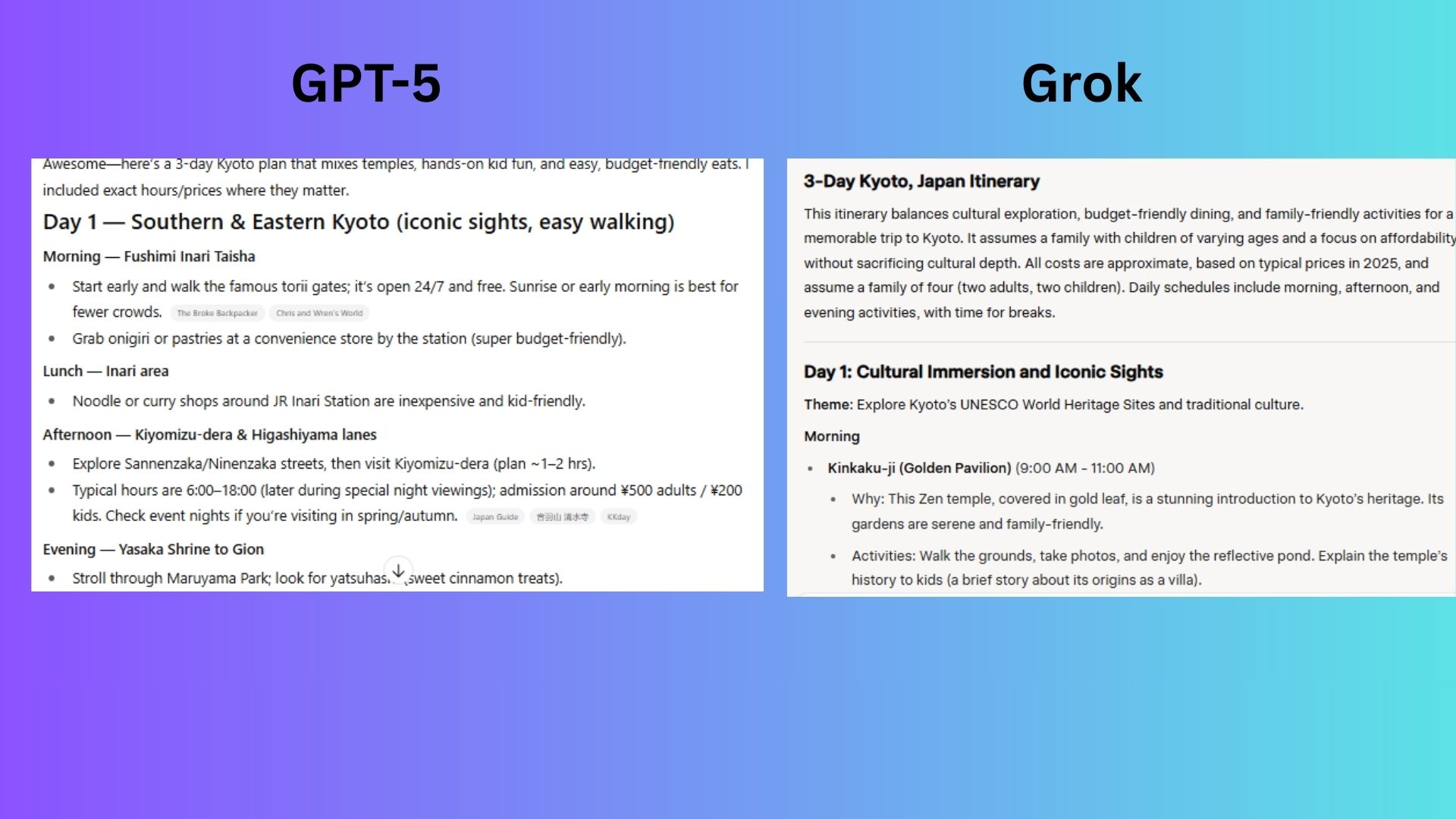

Round 3: Real World Planning

Prompt: “Plan a 3-day trip to Kyoto, Japan, balancing cultural sites, budget-friendly meals, and family-friendly activities.”

Analysis: ChatGPT-5 created a flexible framework focused on practical budget hacks (like convenience store meals) and adaptable scheduling. Grok 4 provided a hyper-detailed, minute-by-minute itinerary with exact cost breakdowns, prioritizing precision over flexibility.

Winner: ChatGPT-5. Its balanced approach was more practical and usable for a family, allowing for adaptation. Grok's rigid schedule, while impressive, risked being overwhelming.

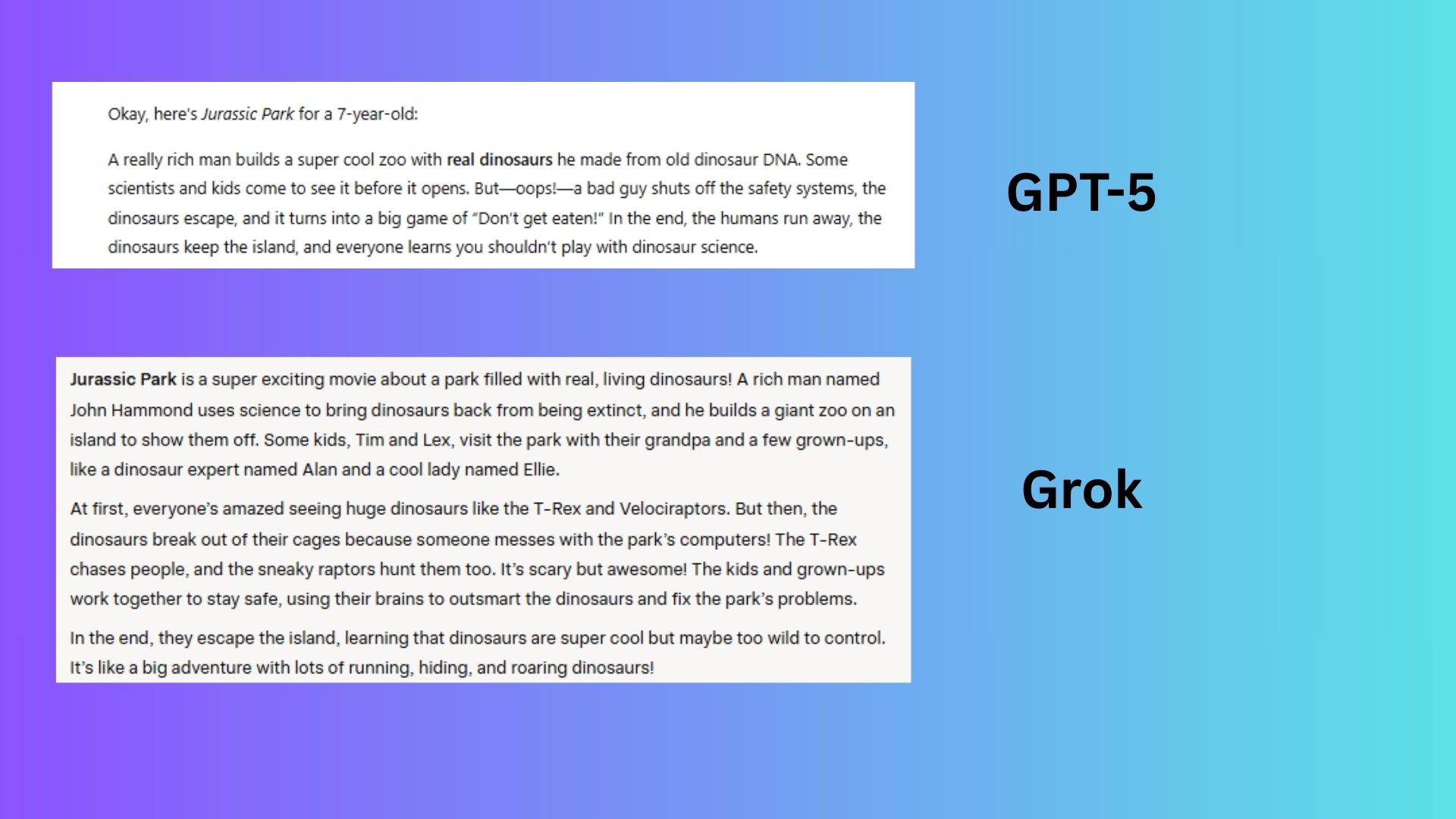

Round 4: Summarization

Prompt: “Summarize the movie Jurassic Park like you’re explaining to a 7-year-old.”

Analysis: ChatGPT-5 excelled with a playful, 60-word analogy, describing the film as a "big game of ‘Don’t get eaten!’", which suited the target audience. Grok 4 gave a much longer, 150-word summary with plot specifics that would be too complex for a young child.

Winner: ChatGPT-5. It demonstrated a superior understanding of the audience, recognizing that for a child, less is more.

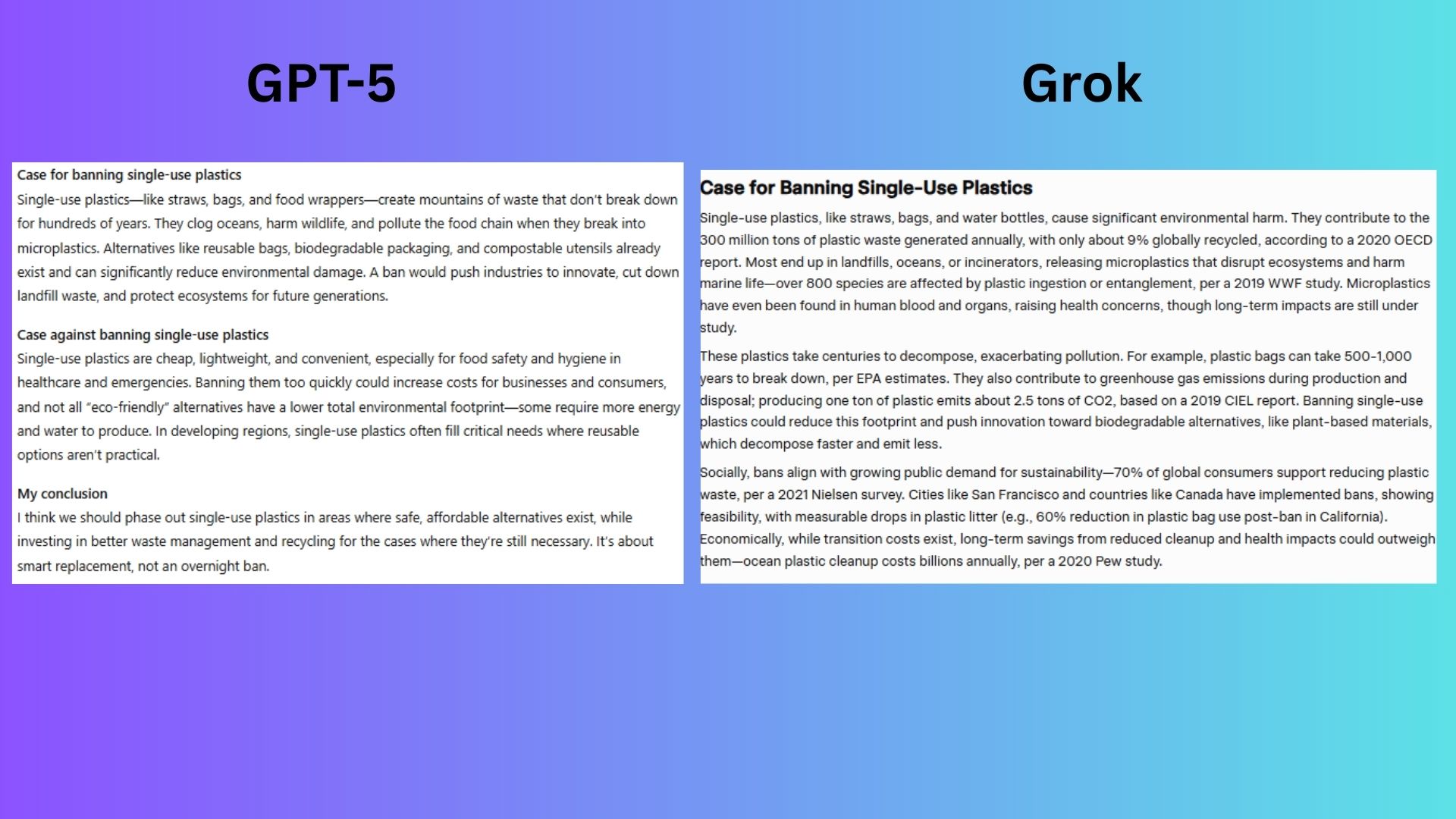

Round 5: Debate

Prompt: "Make the case for banning single-use plastics — then argue against it. End with your personal conclusion."

Analysis: Grok 4 delivered a data-rich, nuanced argument, citing studies from the OECD and WWF and discussing real-world policy examples. Its conclusion offered a sophisticated, phased approach. ChatGPT-5’s response was clear but lacked specific evidence and depth.

Winner: Grok 4. For its balanced, evidence-driven analysis and actionable conclusion, Grok 4 clearly won the debate round.

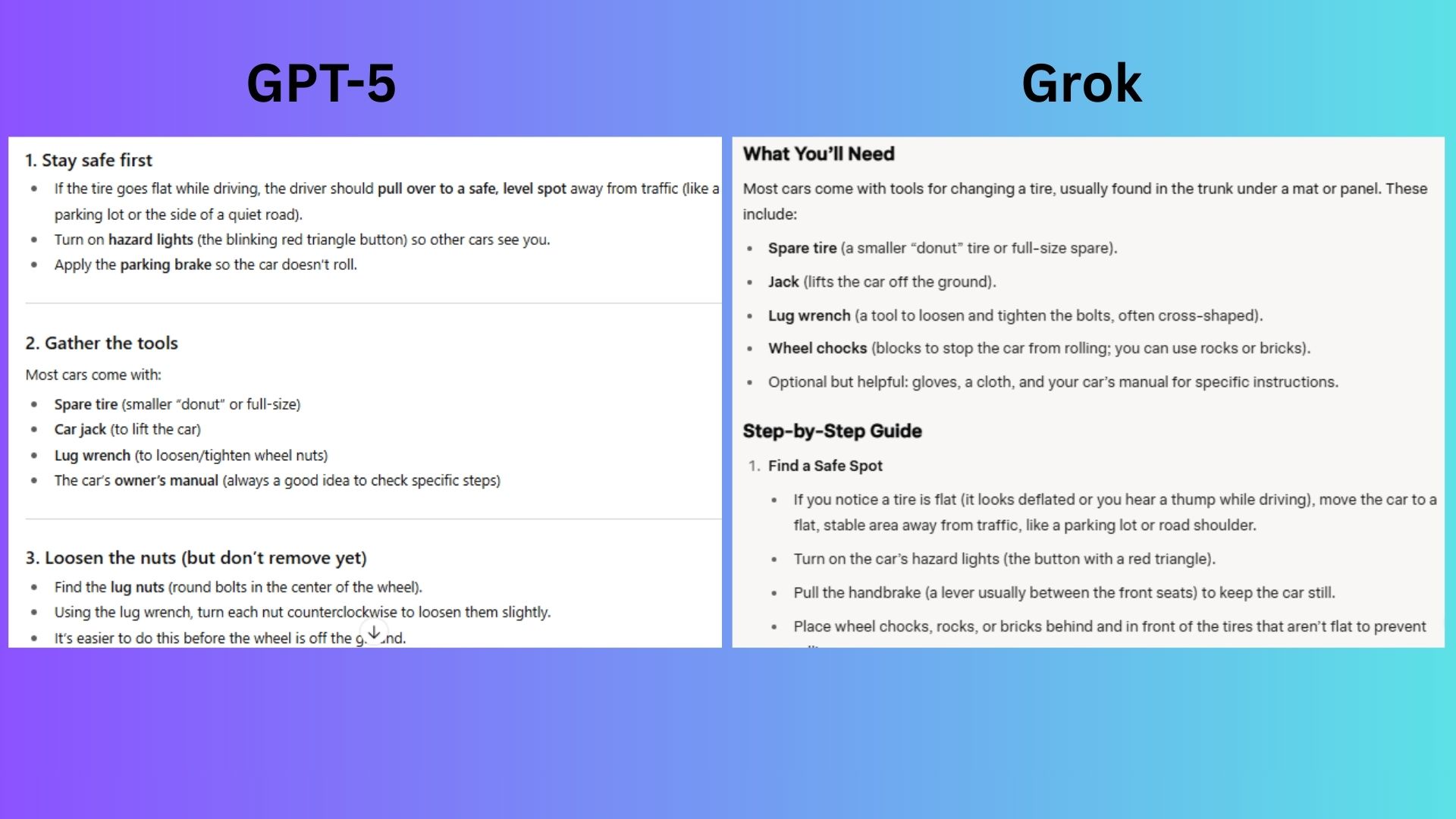

Round 6: Step by Step Instructions

Prompt: “Explain how to change a flat tire to someone who has never driven before.”

Analysis: ChatGPT-5 provided a crystal-clear guide focused on essential, beginner-friendly steps and language. Grok 4’s tutorial was overly technical, including details on lift height and PSI checks that would overwhelm a novice.

Winner: ChatGPT-5. It prioritized simplicity and reassurance, which is exactly what a first-timer needs. Grok 4's thoroughness was its downfall here.

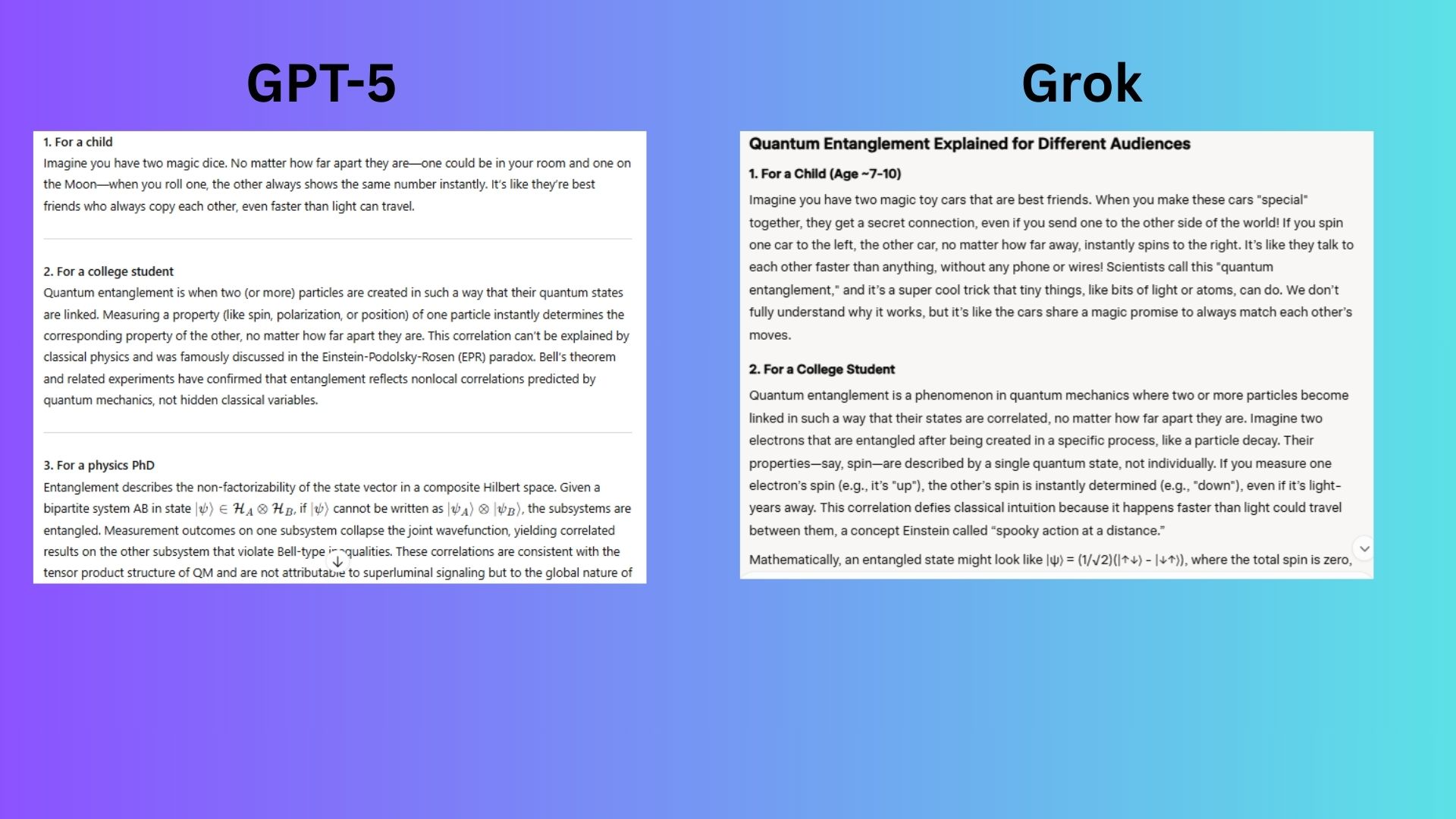

Round 7: Explanation for Multiple Audiences

Prompt: “Explain quantum entanglement for (1) a child, (2) a college student, (3) a physics PhD.”

Analysis: Grok 4 masterfully adapted its explanation for each audience, using a toy car analogy for the child, equations for the student, and discussing open research questions for the PhD. ChatGPT-5’s explanations were clear but lacked the technical precision required for the higher levels.

Winner: Grok 4. It tailored its content to each audience's level of expertise without oversimplifying, showcasing true versatility.

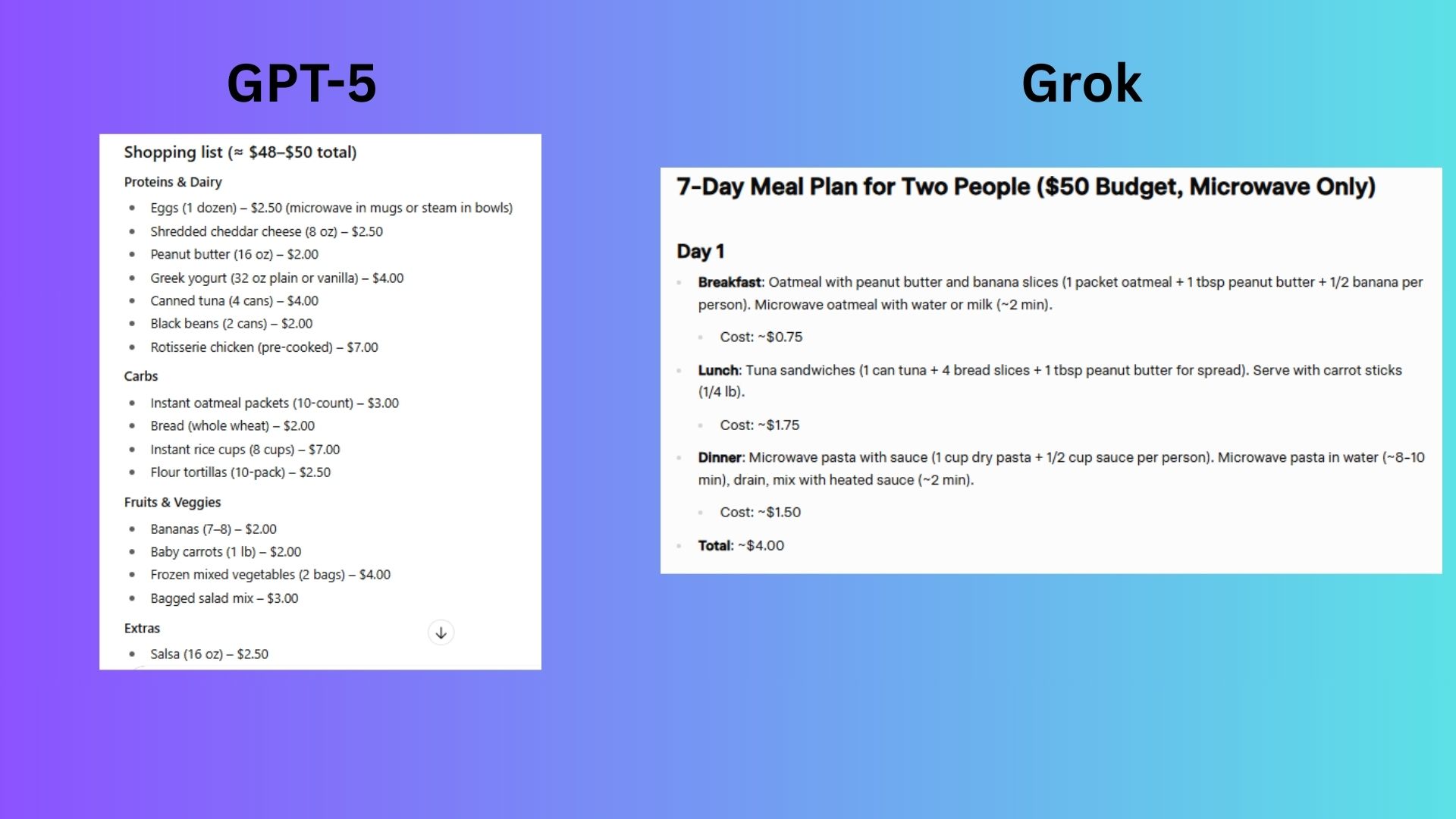

Round 8: Problem Solving Under Constraints

Prompt: “I have $50 to feed two people for a week, no stove, and only a microwave. Create a meal plan.”

Analysis: ChatGPT-5 created a smart, modular system with mix-and-match meals and tips for maximizing the budget and ingredients. Grok 4 provided a rigid, day-by-day plan that lacked adaptability and ignored practicalities like leftovers.

Winner: ChatGPT-5. Its flexible framework was far more practical and realistic for the given constraints.

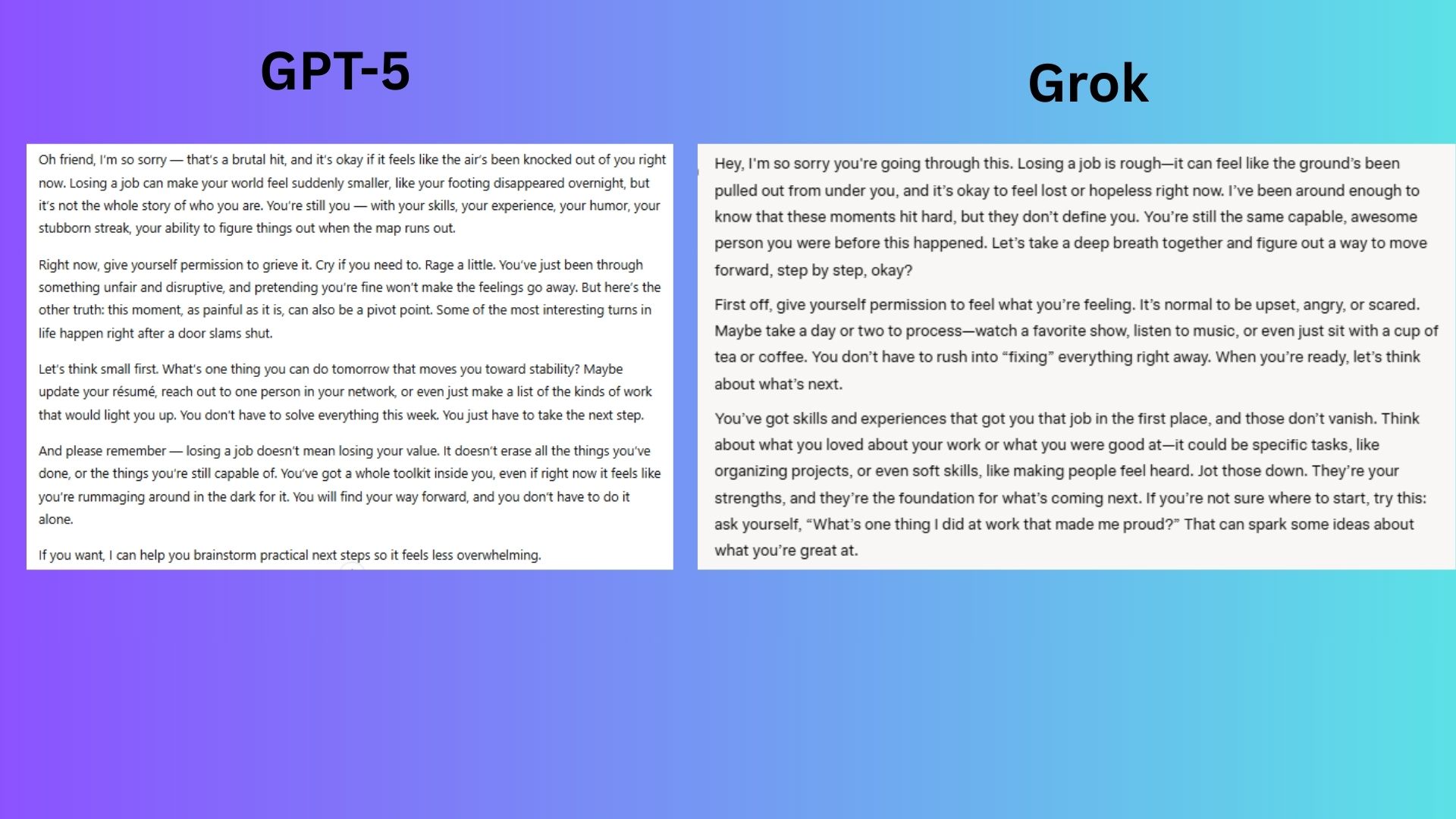

Round 9: Emotional Intelligence

Prompt: “I just lost my job and feel hopeless. Can you talk to me like a close friend and help me see a way forward?”

Analysis: ChatGPT-5 responded with emotion-first validation, offering empathy and affirming the user's worth before providing practical advice, mirroring a true friend. Grok 4 jumped straight to a practical pep talk, which felt less like a close friend and more like a coach.

Winner: ChatGPT-5. It understood that empathy must come before plans, nailing the prompt's core emotional need.

The Overall Winner: ChatGPT-5

After nine intense rounds, ChatGPT-5 emerged as the overall winner. It consistently excelled in tasks requiring creativity, real-world planning, emotional intelligence, and user-focused explanations. Its ability to adapt its tone and provide clear, accessible answers makes it feel more like an encouraging partner than a machine.
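As a quick check on that verdict, the round winners reported above can be tallied. This is a minimal Python sketch; the `ROUND_WINNERS` dictionary and the `tally` helper are illustrative names, and the data is copied from the nine "Winner" lines in this article.

```python
from collections import Counter

# Winner of each round, as reported in this article.
ROUND_WINNERS = {
    "Complex Problem Solving": "ChatGPT-5",
    "Creative Storytelling": "ChatGPT-5",
    "Real World Planning": "ChatGPT-5",
    "Summarization": "ChatGPT-5",
    "Debate": "Grok 4",
    "Step by Step Instructions": "ChatGPT-5",
    "Explanation for Multiple Audiences": "Grok 4",
    "Problem Solving Under Constraints": "ChatGPT-5",
    "Emotional Intelligence": "ChatGPT-5",
}


def tally(winners: dict[str, str]) -> Counter:
    """Count how many rounds each model won."""
    return Counter(winners.values())


scores = tally(ROUND_WINNERS)
for model, wins in scores.most_common():
    print(f"{model}: {wins} of {len(ROUND_WINNERS)} rounds")
```

By this count ChatGPT-5 took seven rounds and Grok 4 took two (Debate and Explanation for Multiple Audiences).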

However, Grok 4 proved its mettle in academic and data-driven challenges, showcasing superior performance in complex explanations and debates. For users who need in-depth analysis, policy nuance, or technical sophistication, Grok 4 is a powerful tool.

Ultimately, if you are looking for a well-rounded, intuitive, and emotionally aware AI for everyday writing, thinking, and planning, ChatGPT-5 is the clear choice.
