Run Powerful OpenAI Models Offline On Your Mac

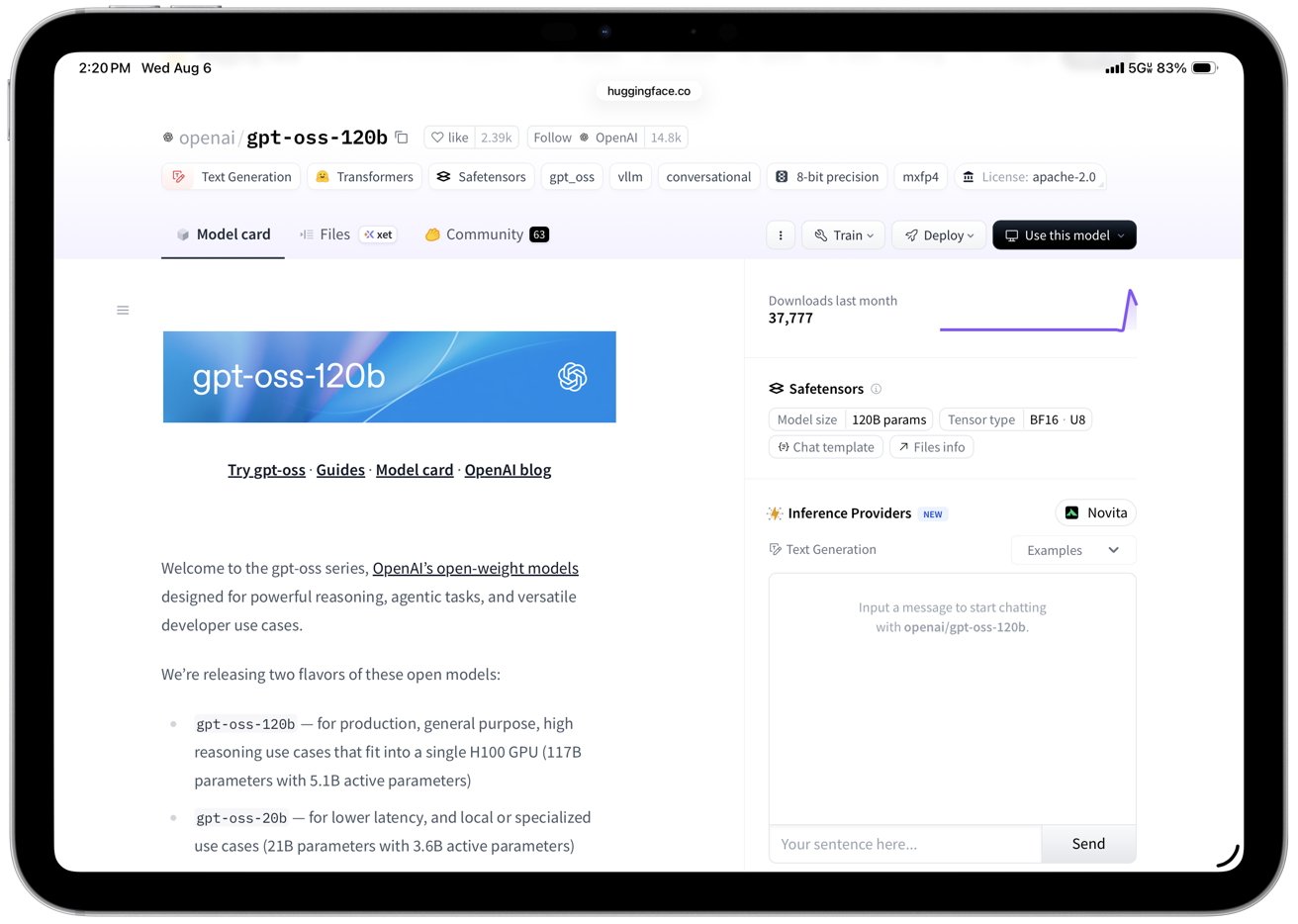

In a significant move, OpenAI is bringing ChatGPT-style reasoning power directly to your Mac, no subscription required. On August 5, 2025, the company announced two new large language models with publicly available weights: gpt-oss-20b and gpt-oss-120b. This marks its first major open-weight language model release since GPT-2 in 2019.

Both models are available under the Apache 2.0 license, which permits commercial use and modification free of charge. OpenAI's CEO, Sam Altman, has praised the smaller model as the best and most practical open model on the market, and OpenAI says its results on common benchmarks are similar to those of the company's own o3-mini.

This release comes amid growing competition from the open-source AI community, with models like Meta's Llama 3 and China's DeepSeek gaining considerable attention. OpenAI's decision appears to be a strategic effort to re-engage with this community and maintain its leadership position.

Can Your Mac Handle It? System Requirements

So, can you run these new models on your personal computer? For the smaller 20-billion-parameter model, the answer is likely yes if you have a modern Mac. OpenAI specifies that this model performs well on devices with at least 16 GB of unified memory or VRAM.

This makes it a good fit for Apple Silicon Macs configured with 16 GB or more of unified memory, such as M2 Pro or M3 Max machines. In fact, OpenAI specifically highlights Apple Silicon support as a primary use case for the 20b model.
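To check a given machine against that 16 GB guideline, a minimal sketch along the following lines may help. It assumes a POSIX system (macOS or Linux) where Python's `os.sysconf` exposes the page size and page count. The helper names and the decision to treat "16 GB" as 16 GiB are illustrative assumptions, not anything OpenAI publishes.

```python
import os

GIB = 1024 ** 3
# Threshold taken from the article's "at least 16 GB" figure (assumed to mean GiB).
REQUIRED_GIB_20B = 16


def total_memory_bytes() -> int:
    """Return installed physical memory in bytes on POSIX systems."""
    return os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES")


def meets_requirement(total_bytes: int, required_gib: int = REQUIRED_GIB_20B) -> bool:
    """True when the machine has at least `required_gib` GiB of memory."""
    return total_bytes >= required_gib * GIB


def describe(total_bytes: int) -> str:
    """Summarize installed memory against the 20b guideline."""
    verdict = "meets" if meets_requirement(total_bytes) else "falls short of"
    return (
        f"{total_bytes / GIB:.1f} GiB installed, "
        f"{verdict} the {REQUIRED_GIB_20B} GiB guideline"
    )


if __name__ == "__main__":
    print(describe(total_memory_bytes()))
```

Installed memory is only a first filter: the operating system and other apps share the same unified memory, so a machine sitting exactly at the threshold may still feel constrained.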

The larger 120-billion-parameter model is a different beast entirely. It demands a substantial 60 to 80 GB of memory, putting it far beyond the reach of standard consumer desktops or laptops. This model is intended for powerful GPU workstations or cloud-based setups, making the 20b version the go-to choice for most Mac and PC users.
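A rough back-of-the-envelope calculation shows where figures like these come from. The sketch below assumes the nominal parameter counts implied by the model names (20 billion and 120 billion; the real checkpoints may differ somewhat) and weights stored at roughly 4 bits each. It counts weights only and ignores the KV cache, activations, and any per-block scaling overhead, so treat the results as a lower bound rather than a measured requirement.

```python
def weight_footprint_gb(num_params: float, bits_per_param: float) -> float:
    """Approximate size of the weights alone, in decimal gigabytes."""
    return num_params * bits_per_param / 8 / 1e9


# Nominal parameter counts inferred from the model names (an assumption).
MODELS = {"gpt-oss-20b": 20e9, "gpt-oss-120b": 120e9}

for name, params in MODELS.items():
    four_bit = weight_footprint_gb(params, 4)
    sixteen_bit = weight_footprint_gb(params, 16)
    print(f"{name}: ~{four_bit:.0f} GB at 4 bits, ~{sixteen_bit:.0f} GB at 16 bits")
```

Under these assumptions the 20b weights come to about 10 GB and the 120b weights to about 60 GB, which is consistent with the 16 GB guideline and the lower end of the 60 to 80 GB range once runtime overhead is added.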

Performance and Developer Freedom

The gpt-oss models are packed with modern features, including chain-of-thought reasoning, function calling, and code execution. This opens the door for developers to fine-tune them, build sophisticated tools, and run everything without an internet connection. The potential for privacy-first apps, offline personal assistants, and bespoke AI workflows is immense.
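To make function calling concrete, here is a minimal sketch of the application side. It assumes the model is served through a runtime that accepts OpenAI-style tool definitions and returns tool calls with a `function.name` and `function.arguments` field. The `get_battery_level` tool, the registry, and the `dispatch` helper are hypothetical names invented for illustration and are not part of any gpt-oss tooling.

```python
import json
from typing import Any, Callable


def get_battery_level(device: str) -> dict[str, Any]:
    """Hypothetical local tool; returns canned data for illustration."""
    return {"device": device, "percent": 82}


# Tool description in the OpenAI-style JSON Schema layout (assumed to be
# what the local runtime expects).
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "get_battery_level",
            "description": "Report the battery percentage of a named device.",
            "parameters": {
                "type": "object",
                "properties": {"device": {"type": "string"}},
                "required": ["device"],
            },
        },
    }
]

# Maps tool names the model may request to local Python callables.
REGISTRY: dict[str, Callable[..., Any]] = {"get_battery_level": get_battery_level}


def dispatch(tool_call: dict[str, Any]) -> str:
    """Run the tool a model asked for and return its result as a JSON string."""
    fn = tool_call["function"]
    args = fn["arguments"]
    # Some runtimes send arguments as a JSON string, others as a parsed dict.
    if isinstance(args, str):
        args = json.loads(args)
    handler = REGISTRY.get(fn["name"])
    if handler is None:
        return json.dumps({"error": f"unknown tool: {fn['name']}"})
    return json.dumps(handler(**args))
```

In a full loop, `TOOLS` would be sent alongside the conversation, and the string returned by `dispatch` would be appended as a tool message before asking the model to continue. Because everything runs locally, the tool's data never has to leave the machine.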

OpenAI model on HuggingFace

To get developers up and running, OpenAI has provided reference implementations for several popular toolkits, including PyTorch, Transformers, Triton, vLLM, and Apple's own Metal Performance Shaders. Support is also available in third-party tools like Ollama and LM Studio, which greatly simplify the process of downloading, compressing, and interacting with the models.

For Mac users, the 20b model takes advantage of Apple's Metal framework and the shared memory architecture of Apple Silicon. The model ships already quantized to a 4-bit format (MXFP4), which reduces memory usage and speeds up inference with little loss in quality. While some technical setup is still needed, tools like LM Studio and Ollama make it more accessible than ever.
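As an illustration of how little glue code a local setup needs, the sketch below sends a prompt to a model served by Ollama using only the Python standard library. It assumes Ollama is running on its default port (11434) and that the model has already been downloaded under the tag `gpt-oss:20b`, for example with `ollama pull gpt-oss:20b`. If your installation uses a different tag or port, adjust the constants. The helper names are illustrative.

```python
import json
import urllib.request

# Assumptions: Ollama's default local endpoint and the gpt-oss:20b model tag.
OLLAMA_URL = "http://localhost:11434/api/generate"
MODEL_TAG = "gpt-oss:20b"


def build_request(
    prompt: str, model: str = MODEL_TAG, url: str = OLLAMA_URL
) -> urllib.request.Request:
    """Build a non-streaming generate request for a local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, timeout: float = 300.0) -> str:
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt), timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    return body["response"]


# Example (requires a running Ollama server with the model pulled):
# print(generate("Summarize unified memory in one sentence."))
```

Since the request goes to `localhost`, the prompt and the reply stay on the machine, which is the property that makes offline and privacy-first apps possible.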

What This Means for AI and Apple Users

OpenAI's return to open-weight models is a notable shift. The 20b model delivers impressive performance for its size and is accessible on a wide range of local hardware, especially Apple Silicon Macs. This lets developers build local AI solutions without being tied to cloud servers or API subscription fees.

While the 120b model demonstrates the cutting edge of AI, it will primarily serve as a research benchmark rather than a daily tool for most people. Still, its release under a permissive license is a major win for transparency and AI accessibility.

For Apple users, this is an exciting preview of what powerful, on-device AI can achieve. As Apple continues to integrate local-first machine learning into macOS and iOS, OpenAI's latest move fits perfectly into this evolving landscape, bringing the future of AI directly to your desktop.
