
North Korean Hackers Wield ChatGPT for Advanced Attacks

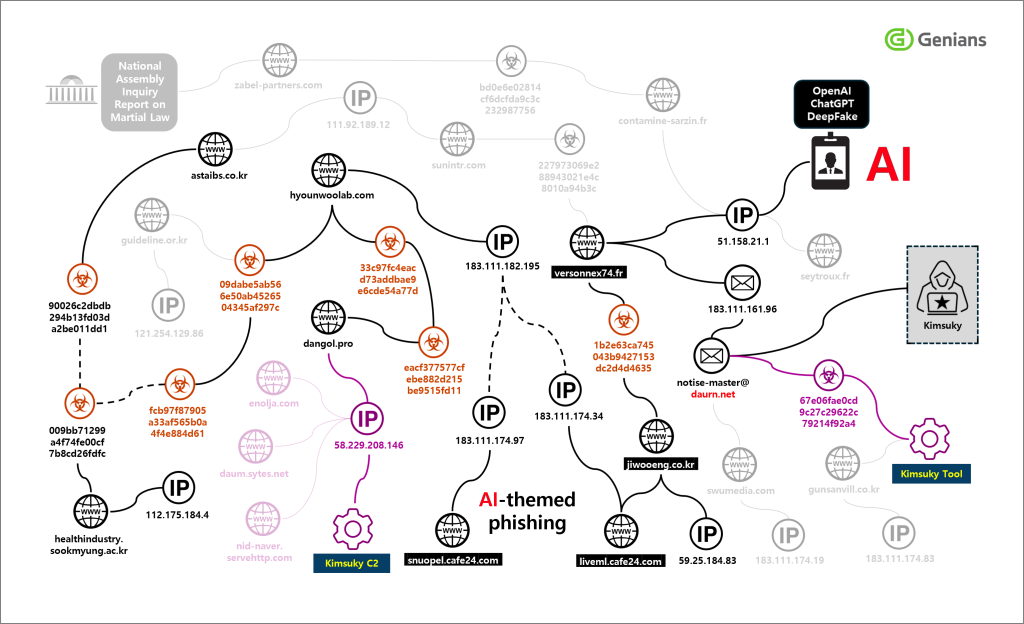

A new and alarming wave of cyber attacks demonstrates how state-sponsored actors are leveraging generative AI to create highly sophisticated and deceptive campaigns. Researchers have attributed a recent series of advanced persistent threat (APT) attacks to the North Korea-linked Kimsuky group, which is now using tools like ChatGPT to craft convincing fakes for its spear-phishing operations.

According to a report from the Genians Security Center (GSC), a campaign discovered on July 17, 2025, targeted South Korean defense-related organizations using deepfake images of military employee ID cards. This attack highlights a significant shift in cyber warfare, where AI is used not just for malware creation but for social engineering and identity forgery, all while using complex tactics to fly under the radar of conventional security tools.

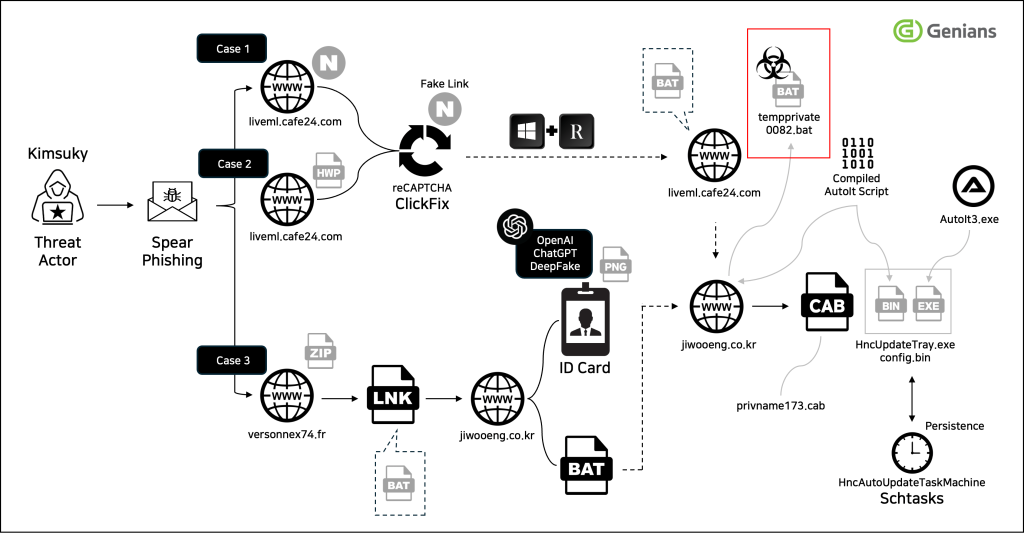

The Anatomy of an AI-Powered Attack

Investigators uncovered that the Kimsuky group used ChatGPT to generate incredibly realistic deepfake military ID cards. These forgeries were then embedded in phishing emails disguised as official government correspondence, sent from domains that mimicked South Korean defense institutions. The emails contained a compressed file named “Government_ID_Draft.zip” to lure victims.

Once a victim opened the zip file, a malicious LNK shortcut file executed obfuscated commands through cmd.exe. These commands dynamically reconstructed PowerShell scripts that were hidden in encoded environment variables. The decoded script then connected to a command-and-control (C2) server hosted on jiwooeng.co[.]kr to download the next stage of the attack.
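Defenders can match the published C2 domain against proxy or DNS logs. The Python sketch below is illustrative only: the function names are assumptions and not part of the Genians report, and the only value taken from the article is the defanged domain.

```python
import re
from urllib.parse import urlsplit

# Defanged indicator exactly as published in the article.
C2_DEFANGED = "jiwooeng.co[.]kr"


def refang(indicator: str) -> str:
    """Turn a defanged indicator ("[.]", "hxxp") back into its live form."""
    indicator = indicator.replace("[.]", ".").replace("(.)", ".")
    return re.sub(r"^hxxp", "http", indicator, flags=re.IGNORECASE)


def host_of(url_or_host: str) -> str:
    """Extract a lowercase hostname from a URL or a bare host string."""
    value = url_or_host.strip()
    if "://" not in value:
        value = "//" + value  # make urlsplit treat the input as a network location
    return (urlsplit(value).hostname or "").lower()


def matches_c2(url_or_host: str, c2: str = C2_DEFANGED) -> bool:
    """True if the host equals the C2 domain or is a subdomain of it."""
    host = host_of(refang(url_or_host))
    domain = refang(c2).lower()
    return host == domain or host.endswith("." + domain)
```

Matching on the parsed hostname rather than on a raw substring avoids false positives from lookalike hosts or from the domain appearing in a query string.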

At this point, the compromised system downloaded the AI-generated deepfake ID image along with a malicious batch file. Analysis confirmed the image was created by AI, with the TruthScan Deepfake-Detector service flagging it with a 98% probability of being a forgery. This supports the researchers' conclusion that the attackers used tailored prompts to get ChatGPT past its safety restrictions.

The executed batch script included a 7-second delay to evade automated detection before fetching its main payload—an executable disguised as a legitimate Hancom Office updater named “HncUpdateTray.exe.” This file was, in reality, malware based on AutoIt, which then deployed additional scripts to steal data and grant remote control over the infected device.

Bypassing Defenses with Advanced Obfuscation

This sophisticated campaign is an evolution of Kimsuky's earlier “ClickFix” tactics, which used phishing lures impersonating security alerts from South Korean portal companies. The core strategy remains consistent: hide the malicious execution flow from antivirus engines through heavy obfuscation, multi-stage downloads, and masquerading as legitimate software.

Researchers emphasized that traditional antivirus solutions are largely ineffective against these methods. Signature-based detection fails to catch the heavily obfuscated batch commands and cannot evaluate AI-generated social engineering content such as the forged ID image.

Why EDR is Essential in the Age of AI Threats

To counter these advanced threats, security experts stress the critical need for endpoint detection and response (EDR) solutions. Unlike traditional antivirus, an EDR platform monitors system behavior, allowing it to identify the entire malicious chain of events—from the initial .lnk shortcut execution to the hidden PowerShell and AutoIt scripts.
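The idea of correlating a chain of events can be sketched in a few lines of Python. This is a simplified illustration, not how the Genians EDR product works: the `ProcessEvent` record, the function names, and the assumption that `cmd.exe` appears as an ancestor of `powershell.exe` in the process tree are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class ProcessEvent:
    """Hypothetical, minimal process-creation record (real telemetry has more fields)."""
    pid: int
    parent_pid: Optional[int]
    image: str  # executable name, e.g. "cmd.exe"


# Assumed stage order, loosely based on the chain described in the article.
SUSPICIOUS_CHAIN = ["cmd.exe", "powershell.exe"]


def ancestry(event: ProcessEvent, by_pid: Dict[int, ProcessEvent]) -> List[str]:
    """Return image names from the oldest known ancestor down to `event`."""
    chain: List[str] = []
    seen = set()
    current: Optional[ProcessEvent] = event
    while current is not None and current.pid not in seen:
        seen.add(current.pid)  # guard against cycles in malformed data
        chain.append(current.image.lower())
        parent = current.parent_pid
        current = by_pid.get(parent) if parent is not None else None
    return list(reversed(chain))


def contains_in_order(chain: List[str], stages: List[str]) -> bool:
    """True if `stages` occur in `chain` in order, not necessarily adjacent."""
    remaining = iter(chain)
    return all(stage in remaining for stage in stages)


def flag_events(events: List[ProcessEvent],
                stages: List[str] = SUSPICIOUS_CHAIN) -> List[ProcessEvent]:
    """Flag events that complete the staged chain (PID reuse is ignored here)."""
    by_pid = {e.pid: e for e in events}
    return [
        e for e in events
        if e.image.lower() == stages[-1]
        and contains_in_order(ancestry(e, by_pid), stages)
    ]
```

A single `powershell.exe` launch is not flagged by this sketch; only one whose ancestry includes `cmd.exe` is. That is the behavioral point: the individual steps look ordinary, and the sequence is what stands out.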

By correlating this activity, EDR can detect and neutralize threats that rely on stealth and deception. The Genians report confirmed that its EDR solution successfully identified these staged intrusions, showcasing how behavior-based monitoring is essential to combat Kimsuky’s AI-powered tactics. This case marks a pivotal moment where AI-aided forgery is redefining cyber threats, making proactive EDR monitoring a necessity for all high-security organizations.

Indicators of Compromise (IoC)

MD5 Hashes:

09dabe5ab566e50ab4526504345af297

33c97fc4eacd73addbae9e6cde54a77d

143d845b6bae947998c3c8d3eb62c3af

8684e5935d9ce47df2da77af7b9d93fb

90026c2dbdb294b13fd03da2be011dd1
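As a rough sketch of how these indicators might be used, the Python below hashes files under a directory and reports any whose MD5 digest matches the list above. The function names and the directory-walking approach are assumptions for illustration. The digests are the published values, read as five 32-character MD5 hashes.

```python
import hashlib
from pathlib import Path
from typing import List

# The published MD5 indicators, one 32-hex-character digest each.
IOC_MD5 = frozenset({
    "09dabe5ab566e50ab4526504345af297",
    "33c97fc4eacd73addbae9e6cde54a77d",
    "143d845b6bae947998c3c8d3eb62c3af",
    "8684e5935d9ce47df2da77af7b9d93fb",
    "90026c2dbdb294b13fd03da2be011dd1",
})


def md5_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large files are not loaded into memory at once."""
    digest = hashlib.md5()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_matches(root: Path) -> List[Path]:
    """Return files under `root` whose MD5 digest is in the IoC set."""
    matches: List[Path] = []
    for path in Path(root).rglob("*"):
        try:
            if path.is_file() and md5_of(path) in IOC_MD5:
                matches.append(path)
        except OSError:
            continue  # skip unreadable files instead of aborting the scan
    return matches
```

Hash matching only catches byte-identical samples, so a check like this complements the behavior-based monitoring described earlier and does not replace it.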
