AI in Dentistry: How ChatGPT-4 Handles Kids' Questions

Large Language Models (LLMs) like OpenAI's ChatGPT are rapidly finding new applications in specialized fields, including healthcare. These advanced AI systems, trained on massive datasets, can generate human-like text, making them promising tools for patient education and clinical support. With this potential in mind, a new study explored how the latest model, ChatGPT-4, performs in the specific and sensitive field of pediatric dentistry.

The Rise of AI in Healthcare and Dentistry

Artificial intelligence has been making significant inroads into medicine, assisting with everything from clinical decision support to interpreting medical images and accelerating research. In dentistry, these tools are being used to improve administrative tasks, patient communication, and professional education. As AI becomes more accessible, it's crucial to understand its capabilities and limitations, especially in a specialized area like pediatric dentistry where communication with both children and parents is key.

Recent studies have started to evaluate LLMs across various dental specialties, but many have highlighted that accuracy and consistency can be suboptimal. This has led to calls for cautious implementation and further validation before these tools are fully integrated into dental practice. This latest research aimed to provide a comprehensive evaluation by testing ChatGPT-4 on questions from both parents and dental professionals across several core topics in pediatric dentistry.

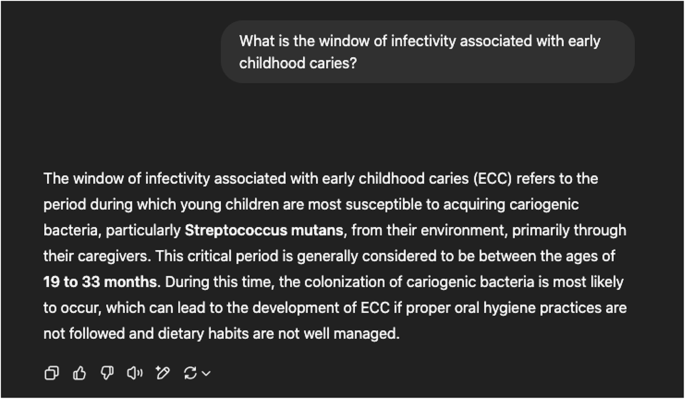

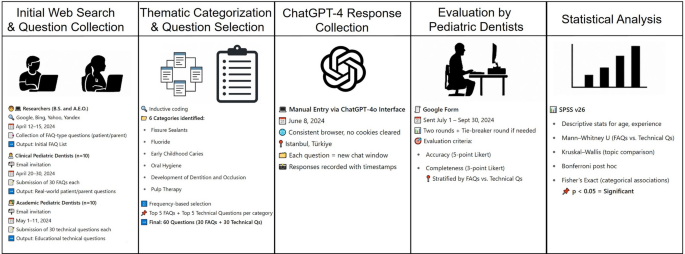

Putting ChatGPT-4 to the Test

The study followed a cross-sectional design to assess the accuracy and completeness of ChatGPT-4's responses. Researchers developed a set of 60 questions divided into two main categories:

- Frequently Asked Questions (FAQs): 30 questions that patients and parents commonly ask, sourced from experienced pediatric dentists.

- Curricular Questions: 30 technical questions used in academic settings for training dental students, provided by university academicians.

These questions covered six key topics in pediatric dentistry: fissure sealants, fluoride, early childhood caries, oral hygiene, tooth development, and pulpal therapy. The responses generated by ChatGPT-4 were then evaluated by a panel of 30 licensed pediatric dentists. They rated each answer based on accuracy using a five-point scale (from "excellent" to "dangerously inaccurate") and completeness on a three-point scale (from "comprehensive" to "incomplete").

How Accurate Were the AI's Answers?

The results were promising, with ChatGPT-4 demonstrating high overall accuracy across both question types. The mean accuracy score for parent-facing FAQs was 4.21 out of 5, while the score for technical curricular questions was 4.16. These scores indicate that the pediatric dentists generally rated the AI's responses as "good" to "excellent." There was no statistically significant difference in accuracy between the two question types.

In terms of completeness, the scores were moderate but still considered acceptable. Both FAQs and curricular questions received a median score of 3 out of 3, reflecting that the AI generally provided sufficient coverage of the topic in its answers.
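For readers curious how summary figures like these are typically produced, here is a minimal sketch of averaging accuracy ratings and taking the median of completeness ratings per question type. The ratings below are hypothetical placeholders, not data from the study, and the sketch assumes that higher numbers mean better answers (5 = "excellent", 3 = "comprehensive"). The names `ratings` and `summarize` are illustrative only.

```python
from statistics import mean, median

# Hypothetical ratings for illustration only; these are NOT the study's data.
# Assumption: accuracy runs 1-5 (5 = "excellent"), completeness runs 1-3
# (3 = "comprehensive").
ratings = {
    "faq": {"accuracy": [5, 4, 4, 5, 3], "completeness": [3, 3, 2, 3, 3]},
    "curricular": {"accuracy": [4, 4, 5, 3, 5], "completeness": [3, 2, 3, 3, 3]},
}


def summarize(scores):
    """Return the mean accuracy and median completeness for one question type."""
    return {
        "mean_accuracy": round(mean(scores["accuracy"]), 2),
        "median_completeness": median(scores["completeness"]),
    }


# One summary per question type (FAQ vs. curricular).
summary = {group: summarize(scores) for group, scores in ratings.items()}

for group, stats in summary.items():
    print(group, stats)
```

A mean suits the five-point accuracy scale when ratings are treated as roughly interval data, while a median is the more common choice for a short ordinal scale such as the three-point completeness rating.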

Not All Topics Are Created Equal

A key finding from the study was that ChatGPT-4's performance varied significantly depending on the topic, especially for the more technical curricular questions. The AI achieved its highest accuracy score (4.45 out of 5) on the topic of fissure sealants, a common and standardized preventive treatment.

However, its performance dipped on more complex and nuanced subjects. The lowest accuracy scores were for pulpal therapy (3.93) and fluoride. This suggests that the AI struggles more with topics that involve complex clinical decision-making or are subject to public debate and varying professional opinions. This finding aligns with other research indicating that AI systems perform better on subjects with well-established guidelines and less so on those requiring deep, case-specific expertise.

The Verdict Is In: What Dentists Think

So, can ChatGPT-4 be a reliable guide in pediatric dentistry? The study concludes that it shows significant promise as a tool for communication and patient education but must be used with caution. The high accuracy scores for common questions suggest it can provide accessible baseline information, potentially helping to reduce parent anxiety before a dental visit.

When surveyed, the majority of the participating dentists (86.7%) said they would recommend using AI like ChatGPT-4 to their patients for informational purposes. However, they were more hesitant about its role in professional education, with 30% remaining "undecided." This highlights a critical point: AI-generated content lacks clinical judgment and cannot replace the personalized expertise of a trained dental professional.

The Future of AI in Pediatric Dentistry

This study reinforces that while general-purpose AI platforms like ChatGPT-4 are powerful, they are not infallible. They perform well on standardized topics but can be less reliable in complex clinical areas. For parents and patients, these tools can be a valuable starting point for information, but they should never substitute for a direct consultation with a dentist.

For dental professionals, the key takeaway is the need for expert oversight. AI can be an adjunct to care, but its outputs must be carefully vetted. As AI continues to evolve, further research and the development of ethical safeguards are essential to ensure its safe and effective integration into pediatric dental care.
