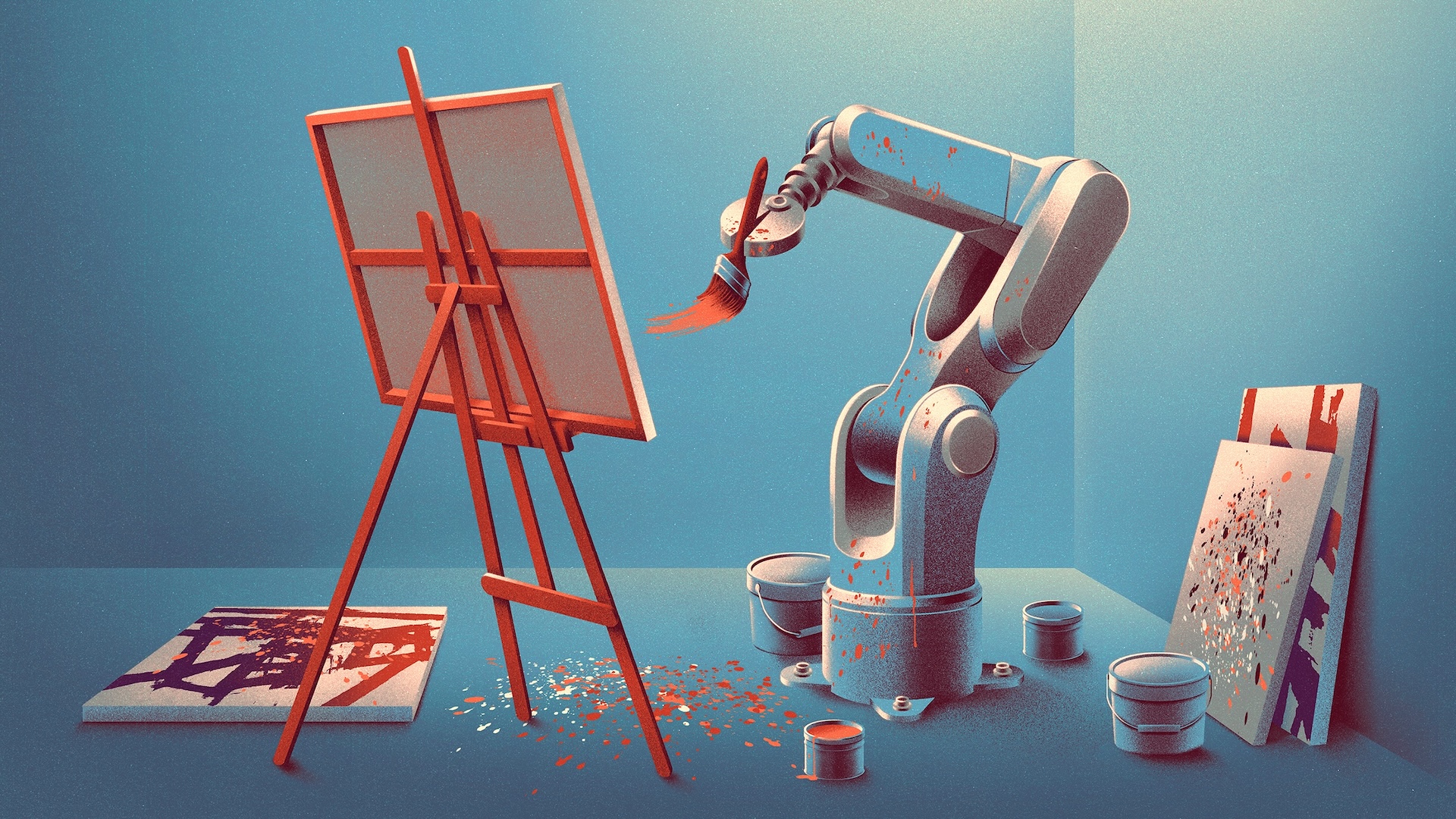

How AI Imperfections Spark Digital Creativity

One of the biggest surprises of modern technology has been what artificial intelligence turned out to be good at. We once imagined a future of self-driving cars and robot butlers; instead, we got AI systems that master complex games, analyze vast amounts of text, and even compose art. This flips our expectations: tasks that are easy for humans, like physical chores, remain hard for robots, while intellectual feats are increasingly within reach of algorithms.

Yet, within this intellectual prowess lies another mystery: the strange, emergent creativity of AI. This is especially true for diffusion models, the technology powering image generators like DALL·E and Stable Diffusion.

The Paradox of AI Creativity

Diffusion models are designed to do one thing very well: replicate the data they were trained on. In theory, if they worked perfectly, they would only produce copies of images they've already seen. But as AI researcher Giulio Biroli notes, that's not what happens. "They don't—they're actually able to produce new samples," he says. This is the paradox at the heart of AI creativity.

These models rely on a process known as denoising. During training, an image is progressively broken down into digital noise (a random collection of pixels), and the model learns to reverse each step; to generate a picture, it starts from pure noise and meticulously reassembles an image step by step. Imagine shredding a painting into fine dust and then putting it back together. But how does this process result in a completely new work of art?
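To make the "shredding" step concrete, here is a minimal sketch of the forward noising process. It assumes the standard DDPM-style parameterization, x_t = sqrt(alpha_bar) * x0 + sqrt(1 - alpha_bar) * eps with Gaussian eps, which the article itself does not specify; `noise_image` is a hypothetical helper and the 8-pixel "image" is a toy. As alpha_bar falls from 1 toward 0, the original signal fades and noise dominates.

```python
import math
import random


def noise_image(x0, alpha_bar, rng):
    """Forward (noising) step, assuming the DDPM parameterization:
    x_t = sqrt(alpha_bar) * x0 + sqrt(1 - alpha_bar) * eps, eps ~ N(0, 1)."""
    a, b = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [a * v + b * rng.gauss(0.0, 1.0) for v in x0]


rng = random.Random(0)  # fixed seed so the run is repeatable
image = [1.0, 1.0, 0.0, 0.0, 1.0, 1.0, 0.0, 0.0]  # toy 1D "image"

# alpha_bar near 1: almost clean; near 0: almost pure noise.
for alpha_bar in (0.99, 0.5, 0.01):
    print(alpha_bar, [round(v, 2) for v in noise_image(image, alpha_bar, rng)])
```

Generation runs this in reverse: a trained network starts from pure noise and repeatedly estimates a slightly cleaner image.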

Now, two physicists have proposed an answer. In a new paper, they argue that AI creativity isn't a magical, emergent property. Instead, it's a direct and inevitable result of technical imperfections built into the denoising process itself. Their work suggests that this creativity is a deterministic consequence of the model's architecture.

From Biology to AI: A New Hypothesis

Mason Kamb, a Stanford graduate student and lead author of the paper, found inspiration in an unlikely place: morphogenesis, the biological process by which living things self-assemble. In nature, patterns like those on an animal's coat, or the structure of our limbs, form through cells interacting locally, without a central blueprint. Patterns produced this way are known as Turing patterns, and the process sometimes produces errors, like an extra finger on a hand.

When Kamb saw early AI images with similar oddities, such as people with too many fingers, he suspected a similar bottom-up process was at play. He focused on two known technical shortcuts in diffusion models:

- Locality: The models process images in small, isolated patches of pixels, without considering the image as a whole.

- Translational Equivariance: The models treat every location in an image the same way. If you shift the input by a few pixels, the output shifts by exactly the same amount.
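Both properties can be illustrated with a toy 1D filter. This is a minimal sketch, not code from the paper: `local_filter` and `shift` are hypothetical names, the 3-pixel window and its weights are arbitrary, and edges wrap around (circular padding) so that shifts are exact. Each output pixel reads only its immediate neighbors (locality), and because the same weights are used at every position, filtering a shifted input gives the same result as shifting the filtered output (translational equivariance).

```python
def local_filter(signal, weights=(0.25, 0.5, 0.25)):
    """Each output pixel depends only on its 3-pixel neighborhood (locality),
    and the same weights are applied at every position (equivariance)."""
    n = len(signal)
    r = len(weights) // 2
    return [
        sum(w * signal[(i + k - r) % n] for k, w in enumerate(weights))
        for i in range(n)
    ]


def shift(signal, s):
    """Circularly shift a 1D signal right by s pixels."""
    return signal[-s:] + signal[:-s]


x = [0, 0, 1, 3, 0, 0, 0, 0]
print(local_filter(x))

# Translational equivariance: filter-then-shift equals shift-then-filter.
assert local_filter(shift(x, 2)) == shift(local_filter(x), 2)

# Locality: changing a distant pixel (index 6) leaves output pixel 2 untouched.
y = x[:]
y[6] = 5
assert local_filter(y)[2] == local_filter(x)[2]
```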

For a long time, researchers saw these features as mere limitations. Kamb and his co-author, Surya Ganguli, hypothesized that these very limitations were, in fact, the source of the model's creativity.

Putting the Theory to the Test

To test their hypothesis, Kamb and Ganguli built a mathematical system called the equivariant local score (ELS) machine. The ELS machine isn't a trained AI; it's a set of equations designed purely to simulate the effects of locality and equivariance.
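The core idea can be sketched in a few lines. This is a simplified illustration in the spirit of the ELS machine, not Kamb and Ganguli's implementation: it assumes the standard DDPM noise parameterization, 1D toy "images" with wrap-around edges, a 3-pixel receptive field, and a single denoising step at one noise level, and all function names are hypothetical. `ideal_denoiser` compares the noisy input against whole training images, so it can only return a blend of images it has seen. `els_denoiser` denoises each pixel from its own small patch (locality) and compares that patch against training patches taken from every position (equivariance).

```python
import math


def softmax_weights(logits):
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(logits)
    exps = [math.exp(l - m) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]


def circular_patch(image, center, radius):
    """Pixels within `radius` of `center`, wrapping around at the edges."""
    n = len(image)
    return [image[(center + k) % n] for k in range(-radius, radius + 1)]


def ideal_denoiser(x_t, train, alpha_bar):
    """Posterior-mean estimate of the clean image under the empirical training
    distribution: a softmax-weighted average of WHOLE training images."""
    var, scale = 1.0 - alpha_bar, math.sqrt(alpha_bar)
    logits = [
        -sum((a - scale * b) ** 2 for a, b in zip(x_t, img)) / (2 * var)
        for img in train
    ]
    w = softmax_weights(logits)
    return [sum(wi * img[i] for wi, img in zip(w, train)) for i in range(len(x_t))]


def els_denoiser(x_t, train, alpha_bar, radius=1):
    """ELS-style estimate (hypothetical sketch): each pixel is denoised from its
    own patch, weighted against training patches pooled from all positions."""
    var, scale = 1.0 - alpha_bar, math.sqrt(alpha_bar)
    # Equivariance: a patch may come from any location in any training image.
    bank = [circular_patch(img, c, radius) for img in train for c in range(len(img))]
    out = []
    for i in range(len(x_t)):
        local = circular_patch(x_t, i, radius)  # locality: only nearby pixels
        logits = [
            -sum((a - scale * b) ** 2 for a, b in zip(local, p)) / (2 * var)
            for p in bank
        ]
        w = softmax_weights(logits)
        out.append(sum(wi * p[radius] for wi, p in zip(w, bank)))  # center pixels
    return out


train = [[1, 1, 1, 1, 0, 0, 0, 0]]  # a single toy training "image"
target = [1, 1, 0, 0, 1, 1, 0, 0]   # NOT in the training set
alpha_bar = 0.99                    # low noise level
# Noise sample set to zero so the demo is deterministic.
x_t = [math.sqrt(alpha_bar) * v for v in target]

print([round(v) for v in ideal_denoiser(x_t, train, alpha_bar)])
print([round(v) for v in els_denoiser(x_t, train, alpha_bar)])
```

In this toy run, the whole-image denoiser snaps back to the only training image, while the local one keeps an arrangement that never appears in the training set, because every 3-pixel patch of it does appear somewhere. That kind of patch-level stitching is what lets a locally constrained model produce novel combinations.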

They then took images that had been converted to noise and ran them through both their ELS machine and powerful, fully trained diffusion models. The results were, in Ganguli's words, "shocking." The simple ELS machine was able to predict and match the outputs of the complex AI models with an average accuracy of 90%.
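The article does not say how the 90% figure was computed. As one plausible, hypothetical way to score agreement between two generated images, the coefficient of determination (r²) compares per-pixel differences against the variance of a reference output. `r_squared` is an illustrative helper, and the pixel values below are made up, not data from the study.

```python
def r_squared(reference, prediction):
    """Coefficient of determination: 1 - SS_res / SS_tot, where SS_tot is the
    variance of the reference around its own mean (1.0 = perfect match)."""
    mean = sum(reference) / len(reference)
    ss_tot = sum((r - mean) ** 2 for r in reference)
    ss_res = sum((r - p) ** 2 for r, p in zip(reference, prediction))
    return 1.0 - ss_res / ss_tot


# Made-up pixel values standing in for a trained model's output and a
# simple model's prediction of it.
reference = [0.0, 0.5, 1.0, 0.5]
prediction = [0.1, 0.4, 0.9, 0.6]
print(round(r_squared(reference, prediction), 2))  # 0.92
```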

This stunning result appears to confirm their theory. "As soon as you impose locality, [creativity] was automatic; it fell out of the dynamics completely naturally," Kamb explained. The very constraints that force the AI to focus on small patches without seeing the big picture are what enable it to generate novel combinations and, occasionally, bizarre artifacts like extra fingers.

What This Means for AI and Us

This research provides the first clear, mathematical explanation for how creativity arises in diffusion models. It demystifies the process, showing it to be a predictable byproduct of the system's architecture rather than an inexplicable phenomenon. While it doesn't explain creativity in other systems like large language models, it's a major step forward in understanding the inner workings of generative AI.

Interestingly, this work may also shed light on our own minds. As machine learning researcher Benjamin Hoover suggests, human and AI creativity might not be so different. We also create by assembling building blocks from our experiences and knowledge. "Both human and artificial creativity," he notes, "could be fundamentally rooted in an incomplete understanding of the world." Perhaps what we call creativity, in both humans and machines, is simply the novel and valuable result of trying to fill in the gaps of what we know.
