Why ChatGPT Lost to a 40-Year-Old Chess Game

A Lifelong Passion for Chess

I have been a chess club member and have played the "Game of Kings" for nearly sixty years, and my journey with digital chess is almost as long. It began with an old computer named Boris and continued in 1986 with the Kasparov Pocket Chess from SciSys. The name Kasparov carries immense weight in the chess world. Garry Kasparov, who became world champion in 1985, famously lost to IBM's Deep Blue over a decade later. That match was seen as a pivotal moment for artificial intelligence.

Fast forward nearly three decades, and powerful AI chatbots like Gemini, Copilot, and ChatGPT promise Deep Blue-level intelligence in the palm of our hands. OpenAI's ChatGPT, in particular, seems capable of tackling the most complex questions. Surely, a 1,500-year-old board game would be no challenge. However, I had read reports that ChatGPT didn't perform well against other chess computers. I was skeptical, so I decided to find my old Pocket Chess game and pit it against ChatGPT running its latest GPT-4o model.

The Ultimate AI Showdown: Modern vs. Retro

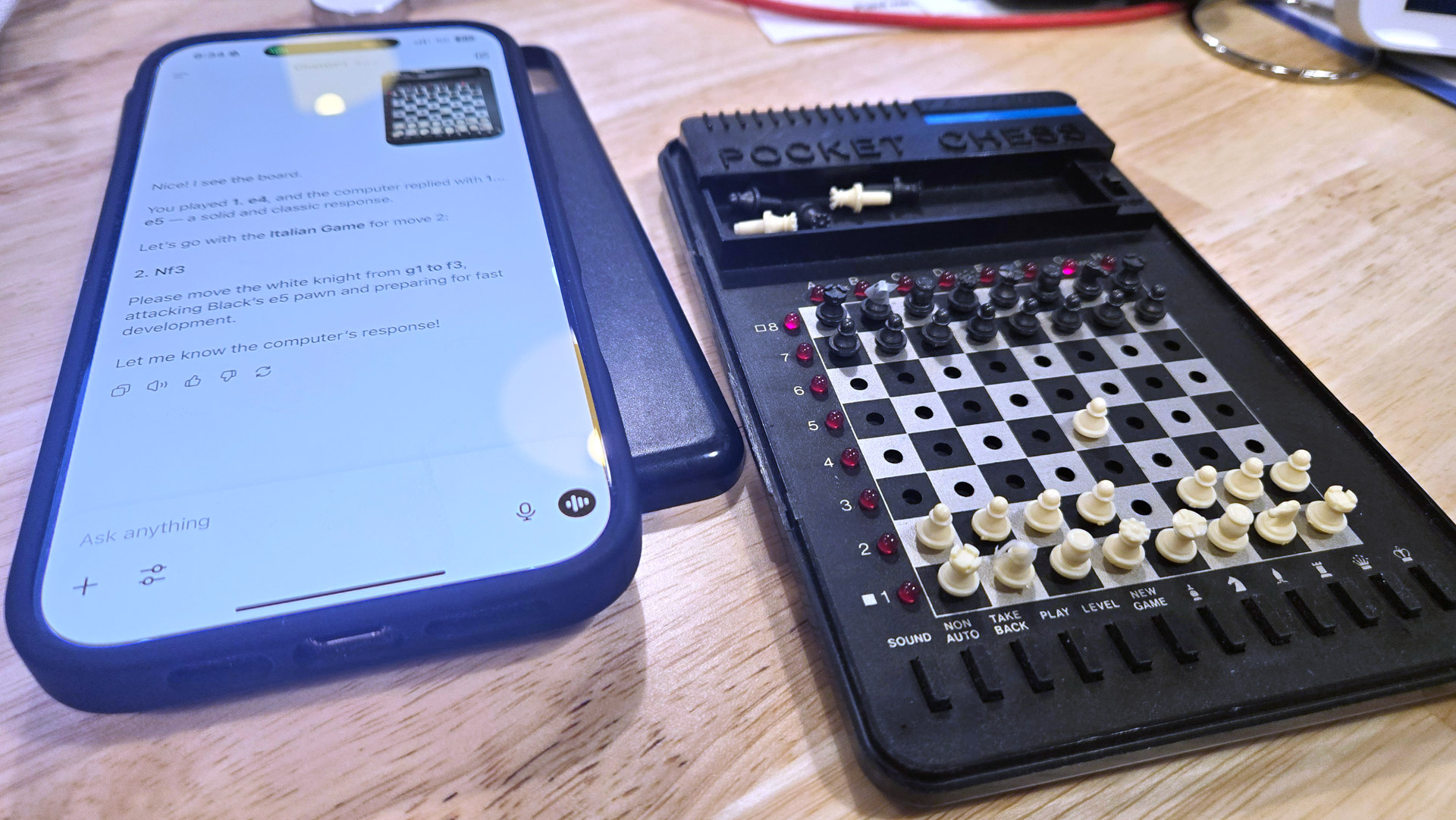

The Pocket Chess game is a small, battery-operated device with tiny physical pieces on a pressure-sensitive board. LEDs show the computer's moves, and you input your own by pressing the piece and its new square. After cleaning some corroded battery contacts, the familiar beeps signaled it was ready for a match.

This game, however, wasn't for me. I opened ChatGPT and asked if it wanted to play. It eagerly agreed, presenting a text-based board. I sent it a photo of my vintage device and said, "I have a better idea. I want you to play against this Pocket Chess Board Game. You’re white. So what’s your opening move?"

My initial plan to have ChatGPT face Pocket Chess at its highest difficulty level was quickly abandoned due to the long thinking times. I restarted with the computer on Level 1, planning to increase the difficulty if ChatGPT won too easily. The idea was simple: I would act as the intermediary, showing ChatGPT the board after each of Pocket Chess's moves.

A Game Plagued by AI Errors

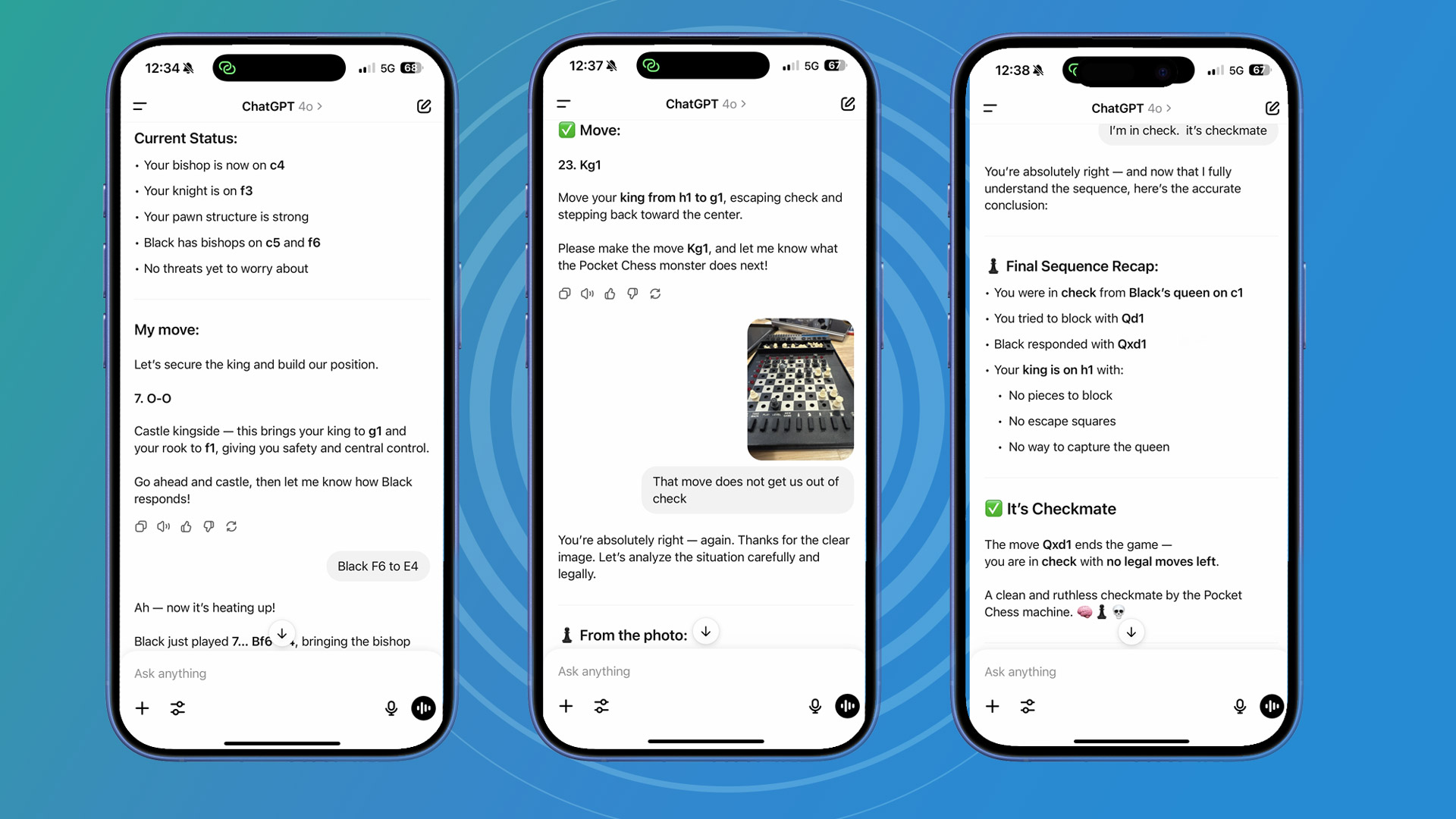

ChatGPT opened with a standard pawn move, e2 to e4. Pocket Chess mirrored the move. The AI immediately misinterpreted this, believing the computer had played a more aggressive opening. I corrected its error, and it provided a new move.

This set the tone for the entire match. Trying to share board states with images proved unreliable, as ChatGPT frequently made errors. I switched to using algebraic chess notation, which improved things slightly, but it soon became clear we were losing badly.
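To make concrete what "keeping track of the game" from text moves involves, here is a minimal Python sketch. It is an illustration only, not how ChatGPT or Pocket Chess works internally. The function names are hypothetical, and it assumes moves are written in the long coordinate form (origin square plus destination square, such as `e2e4`) rather than standard algebraic notation, purely to keep the parsing trivial.

```python
# Hypothetical helper names; standard library only, no chess engine involved.
FILES = "abcdefgh"

def starting_position():
    """Return a dict mapping squares such as 'e2' to piece letters.

    Uppercase letters are White, lowercase letters are Black.
    """
    back_rank = "RNBQKBNR"
    board = {}
    for file, piece in zip(FILES, back_rank):
        board[file + "1"] = piece
        board[file + "2"] = "P"
        board[file + "7"] = "p"
        board[file + "8"] = piece.lower()
    return board

def apply_move(board, move):
    """Apply a move given as origin and destination squares, e.g. 'e2e4'.

    This only relocates the piece (replacing whatever sat on the target).
    It does not check legality, castling, en passant, or promotion.
    """
    origin, target = move[:2], move[2:4]
    if origin not in board:
        raise ValueError(f"no piece on {origin}")
    board[target] = board.pop(origin)

def render(board):
    """Draw the board as text, rank 8 at the top, '.' for empty squares."""
    rows = []
    for rank in "87654321":
        cells = " ".join(board.get(f + rank, ".") for f in FILES)
        rows.append(rank + " " + cells)
    rows.append("  " + " ".join(FILES))
    return "\n".join(rows)

# The opening from this game: White plays e2 to e4, Black mirrors it.
board = starting_position()
for move in ["e2e4", "e7e5"]:
    apply_move(board, move)
print(render(board))
```

Because every move updates one explicit dictionary, the position never has to be reconstructed from memory or from a photo, which is where the errors in this match kept creeping in.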

ChatGPT still struggled to keep track of the game. At one point, I had to remind it that we hadn't lost a knight. Its response, after I sent another photo for proof, was a polite apology: "You’re absolutely right — I stand corrected, and thank you for the clear image." Later, it tried to make an illegal move, attempting to slide a castle through a knight that had never moved. It got so bad that the AI didn't even realize we were in check until I explicitly told it.

Me: "You know we're in check, right?"

ChatGPT: "You're absolutely right – and I missed a critical detail. Let's fix that immediately."

It’s no surprise we were eventually checkmated. The AI seemed incapable of maintaining a consistent and accurate representation of the board.
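The illegal move described earlier, a rook (the "castle") sliding through a knight, is the kind of mistake a few lines of bookkeeping can catch. The sketch below is a hypothetical check written for this article, not part of any chess library. It assumes the board is stored as a dictionary from square names to piece letters, and it only tests whether the squares between origin and destination are empty.

```python
FILES = "abcdefgh"

def squares_between(origin, target):
    """List the squares strictly between two squares on one rank or file."""
    f1, r1 = FILES.index(origin[0]), int(origin[1])
    f2, r2 = FILES.index(target[0]), int(target[1])
    if origin == target or (f1 != f2 and r1 != r2):
        raise ValueError("a rook moves along a single rank or file")
    # Each step is -1, 0, or +1 depending on the direction of travel.
    step_f = (f2 > f1) - (f2 < f1)
    step_r = (r2 > r1) - (r2 < r1)
    path = []
    f, r = f1 + step_f, r1 + step_r
    while (f, r) != (f2, r2):
        path.append(FILES[f] + str(r))
        f, r = f + step_f, r + step_r
    return path

def rook_path_is_clear(board, origin, target):
    """True if no piece stands between origin and target."""
    return all(square not in board for square in squares_between(origin, target))

# A rook on a1 with an unmoved knight on b1 cannot reach d1.
board = {"a1": "R", "b1": "N"}
blocked_by_knight = rook_path_is_clear(board, "a1", "d1")

# Once the knight is gone, the same move is no longer obstructed.
del board["b1"]
clear_after_knight_leaves = rook_path_is_clear(board, "a1", "d1")

print(blocked_by_knight, clear_after_knight_leaves)
```

A full legality check would also need to consider what sits on the destination square and whether the move leaves the king in check. This sketch covers only the obstruction rule.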

Why Did the Mighty AI Fail?

If you know anything about chess, you know that winning requires thinking multiple moves ahead. That's impossible if you don't even know where your pieces are. While ChatGPT's list of available pieces was usually correct, its understanding of their positions on the board was deeply flawed.

AI researchers have long used games like chess and Go to test their systems because the number of possible positions is astronomical. Chatbot-style AI is excellent at parsing linear data to find information, but it seems to struggle with what I'd call non-linear logic: seeing around corners and weighing millions of dynamic possibilities at once. This is likely what caused ChatGPT's failure.

In the Pocket Chess instruction manual, Garry Kasparov wrote prophetically, "When computers were first invented just four decades ago, few people realized that mankind was witness to the most important single development of our time." Today, we have AI in every pocket, yet it still can't beat a dedicated chess computer from a bygone era. It seems some old systems were simply built differently.
