Effortless AI Image Editing With FLUX.1 and NVIDIA NIM

The world of AI-powered image creation just became more accessible. Black Forest Labs’ innovative FLUX.1 Kontext [dev] image editing model is now available as a user-friendly NVIDIA NIM microservice, streamlining the process for creators and developers.

Traditionally, deploying powerful AI models like FLUX.1 involved a complex series of steps: managing data, quantizing the model to reduce memory usage, and converting it for optimized inference backends. The new FLUX.1 Kontext [dev] NIM microservice removes these hurdles. It's a pre-packaged, optimized solution designed for RTX AI PCs that enables faster and more intuitive generative AI workflows.

For a deeper dive into what NVIDIA NIM microservices offer, watch the overview video "What Are NVIDIA NIM Microservices and AI Blueprints for RTX AI PCs?"

Generative AI in Kontext

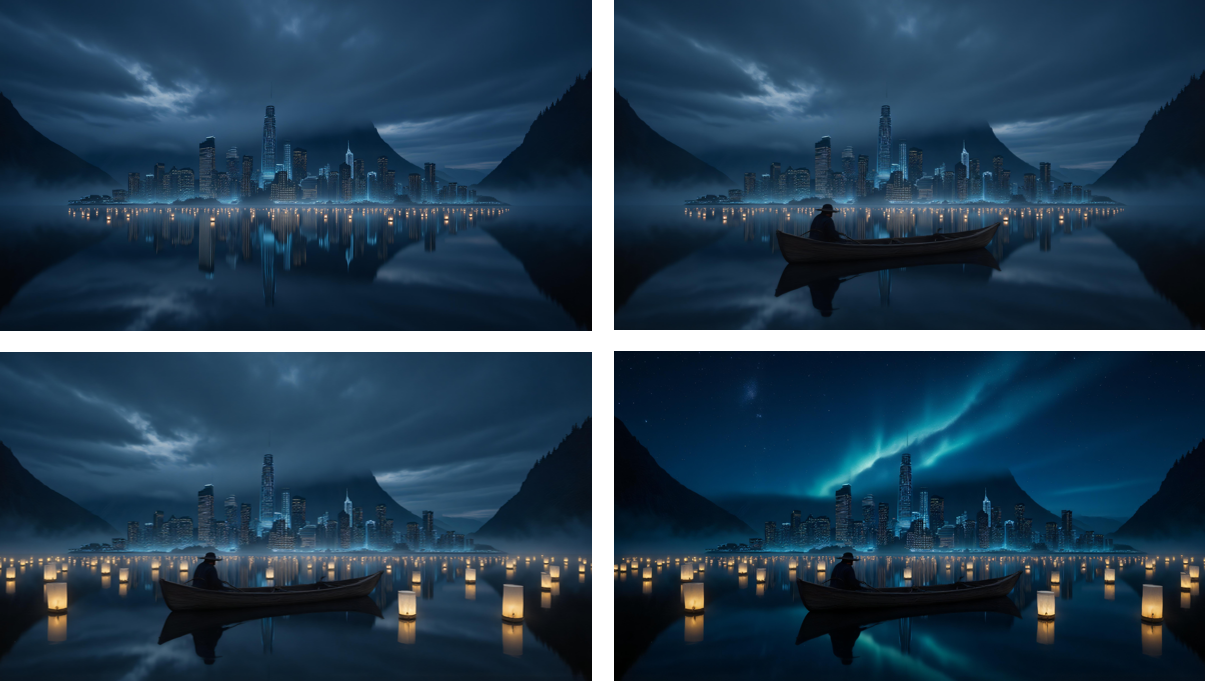

The FLUX.1 Kontext [dev] model is an open-weight generative model purpose-built for image editing. Its standout feature is a guided, step-by-step generation process that gives users precise control over how an image evolves, whether they're tweaking small details or transforming an entire scene. The model accepts both text and image inputs: you provide a visual concept as an image, then steer its evolution with simple natural-language instructions.

![An image of a colorful bird generated by FLUX.1 Kontext [dev] with a simple text prompt.](https://blogs.nvidia.com/wp-content/uploads/2025/08/flux-bird-1680x934.png)

This dual-input method results in coherent, high-quality edits that remain faithful to the original concept without needing complex fine-tuning.

The FLUX.1 Kontext [dev] NIM microservice delivers these capabilities in a one-click download through ComfyUI NIM nodes, making it incredibly easy to get started.

Unlocking Peak Performance on RTX GPUs

A key part of this release is the optimization work done by NVIDIA and Black Forest Labs. They quantized the FLUX.1 Kontext [dev] model, substantially reducing its size and memory requirements. The model shrank from 24GB in its original BF16 form to:

- 12GB for FP8 precision, optimized for NVIDIA Ada Generation GPUs like the GeForce RTX 40 Series.

- 7GB for FP4 precision, optimized for NVIDIA Blackwell architecture GPUs like the GeForce RTX 50 Series, using a quantization method called SVDQuant that preserves image quality while reducing size.
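To make "FP4 precision" more concrete, here is a simplified Python sketch of block-scaled 4-bit floating-point (E2M1) quantization. It is not SVDQuant and not NVIDIA's implementation: the block size of 16, the plain float scale per block, and round-to-nearest are assumptions chosen for illustration. SVDQuant, as published, additionally absorbs outliers into a small 16-bit low-rank branch and quantizes only the remaining residual to 4 bits, which is how it preserves quality.

```python
# Simplified block-scaled FP4 (E2M1) quantization, for illustration only.
# Assumptions: one float scale per block of 16 values, round-to-nearest.
# Real formats store the scale itself in low precision, and SVDQuant also
# moves outliers into a higher-precision low-rank branch before quantizing.

# Non-negative magnitudes representable by a 4-bit E2M1 float (sign bit separate).
E2M1_GRID = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]
BLOCK_SIZE = 16


def quantize_block(values):
    """Map a block of floats to (scale, list of signed E2M1 grid values)."""
    max_abs = max(abs(v) for v in values)
    # Scale so the largest magnitude in the block lands on the top grid value (6.0).
    scale = max_abs / 6.0 if max_abs > 0 else 1.0
    codes = []
    for v in values:
        target = abs(v) / scale
        nearest = min(E2M1_GRID, key=lambda g: abs(g - target))
        codes.append(nearest if v >= 0 else -nearest)
    return scale, codes


def dequantize_block(scale, codes):
    """Recover approximate floats from one quantized block."""
    return [c * scale for c in codes]


def quantize(values):
    """Split values into blocks of BLOCK_SIZE and quantize each independently."""
    return [
        quantize_block(values[start:start + BLOCK_SIZE])
        for start in range(0, len(values), BLOCK_SIZE)
    ]


def dequantize(blocks):
    """Concatenate the dequantized contents of all blocks."""
    out = []
    for scale, codes in blocks:
        out.extend(dequantize_block(scale, codes))
    return out


if __name__ == "__main__":
    weights = [0.12, -0.5, 0.03, 0.9, -0.27, 0.6, 0.0, -0.08]
    restored = dequantize(quantize(weights))
    for w, r in zip(weights, restored):
        print(f"{w:+.3f} -> {r:+.3f}")
```

Every restored value is one of a handful of signed levels multiplied by its block's scale, so a single large outlier stretches the scale and coarsens every other value in the block. That is the problem SVDQuant's low-rank branch is designed to address.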

![A chart showing the speedup compared with BF16 GPU and memory usage required to run FLUX.1 Kontext [dev] in different precisions.](https://blogs.nvidia.com/wp-content/uploads/2025/08/flux-chart.png)
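The memory figures in the list above follow largely from bytes per parameter. The sketch below assumes roughly 12 billion parameters (FLUX.1 Kontext [dev] is publicly described as a 12B-parameter model; this article doesn't state the count) and counts only raw weight storage, ignoring activations, text encoders, and the VAE.

```python
# Back-of-the-envelope weight-memory estimate for FLUX.1 Kontext [dev].
# Assumption: about 12 billion parameters; the article does not state this.
# Only raw weight storage is counted (no activations, text encoders, or VAE).

PARAMS = 12e9  # assumed parameter count

BYTES_PER_PARAM = {
    "BF16": 2.0,  # 16 bits per weight
    "FP8": 1.0,   # 8 bits per weight
    "FP4": 0.5,   # 4 bits per weight
}


def weight_gb(params, precision):
    """Raw weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for precision in ("BF16", "FP8", "FP4"):
        print(f"{precision}: {weight_gb(PARAMS, precision):.1f} GB")
```

Running it prints 24.0 GB for BF16, 12.0 GB for FP8, and 6.0 GB for FP4. The BF16 and FP8 results line up with the 24GB and 12GB quoted above; the quoted 7GB for FP4 sits about 1GB above the raw estimate, which is plausibly the per-block scales plus SVDQuant's higher-precision low-rank branch (our interpretation, not a figure from the article).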

Furthermore, by using NVIDIA TensorRT, a framework that taps into the Tensor Cores in RTX GPUs, the model runs more than 2x faster than the original BF16 model in PyTorch. Performance gains like these were once reserved for AI experts; the NIM microservice now makes them accessible to any enthusiast.

Get NIMble: How to Get Started

The FLUX.1 Kontext [dev] model is available on Hugging Face with all the TensorRT optimizations and is ready to use with ComfyUI. Here’s how to start using it:

- Install NVIDIA AI Workbench.

- Get ComfyUI.

- Install the NIM nodes via the ComfyUI Manager.

- Accept the model license on Black Forest Labs’ FLUX.1 Kontext [dev] Hugging Face page.

- The node then automatically prepares the workflow and helps you download all necessary models.
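Before downloading, it can help to know which of the quantized variants described earlier matches your GPU. The hypothetical `pick_variant` helper below is not part of the NIM nodes or any NVIDIA API. It encodes the article's pairing of FP8 with Ada and FP4 with Blackwell using CUDA compute capability numbers; its handling of Hopper and older GPUs is an assumption.

```python
# Hypothetical helper: map a GPU's CUDA compute capability (major, minor) to the
# FLUX.1 Kontext [dev] precision the article pairs with that architecture.
# Not part of the NIM nodes or any NVIDIA API.


def pick_variant(major, minor):
    """Return a suggested precision ("FP4", "FP8", or "BF16")."""
    if major >= 10:
        # Blackwell (10.x data center parts, 12.x GeForce RTX 50 Series): FP4 variant.
        return "FP4"
    if (major, minor) >= (8, 9):
        # Ada (8.9), e.g. GeForce RTX 40 Series: FP8 variant.
        # Assumption: Hopper (9.0) also has FP8 Tensor Cores, so it lands here too.
        return "FP8"
    # Older architectures: fall back to the original BF16 weights (assumption).
    return "BF16"
```

With PyTorch and a CUDA GPU available, `pick_variant(*torch.cuda.get_device_capability())` returns a suggestion for the local card; for a GeForce RTX 4090 (compute capability 8.9) it returns `"FP8"`.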

For a quick guide to deploying NVIDIA NIM, watch the video "How to Deploy NVIDIA NIM in 5 Minutes."

NIM microservices are optimized for GeForce RTX and RTX PRO GPUs. You can explore more NIM microservices on GitHub and at build.nvidia.com.

Stay Connected

This project is featured in the RTX AI Garage blog series, which highlights community-driven AI innovations. To stay updated on AI agents, creative workflows, and more for AI PCs, follow NVIDIA AI PC on Facebook, Instagram, TikTok, and X. You can also subscribe to the RTX AI PC newsletter and join the community Discord server.