
How AI Is Automating Anti-Muslim Hate in India

The Alarming Rise of AI-Fueled Hate in India

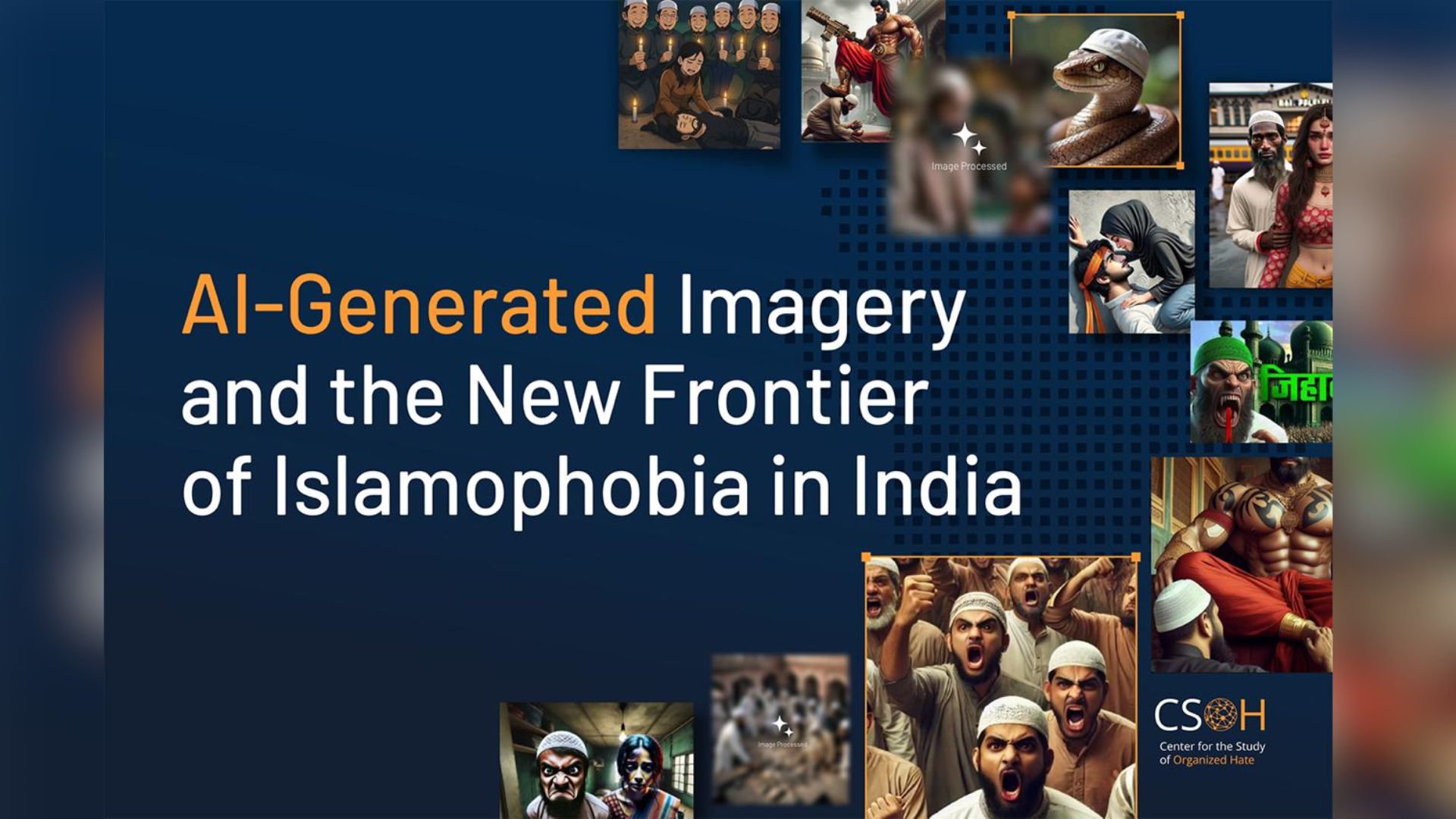

A recent report from the Centre for the Study of Organised Hate (CSOH) has uncovered a disturbing trend on Indian social media: the escalating use of AI-generated images to promote Islamophobic narratives. Authored by Nabiya Khan, Aishik Saha, and Zenith Khan, the study provides a critical analysis of how artificial intelligence is being weaponized to create and spread visual hate content targeting the Muslim community.

The research team analyzed 1,326 publicly available, AI-generated Islamophobic posts from 297 different social media accounts between May 2023 and May 2025. This content demonstrated significant user engagement, highlighting the wide-reaching impact of this new form of digital hate.

Four Key Themes of Digital Islamophobia

The report identifies four primary categories of AI-generated hate content being circulated:

- Sexualization of Muslim women: Creating degrading and objectifying depictions.

- Exclusionary and dehumanizing rhetoric: Portraying Muslims as outsiders or subhuman.

- Conspiratorial narratives: Spreading baseless theories to incite fear and mistrust.

- Aestheticization of violence: Glorifying or normalizing violence against Muslims.

AI as an Amplifier for Pre-existing Bigotry

A key insight from the report is that AI is not necessarily creating new forms of hatred but is instead “automating existing hate.” Far-right networks are using these powerful tools to scale up their production of Islamophobic content.

Nabiya Khan, a co-researcher on the report, explained that AI acts as an “amplifier.” She noted, “These tools made old prejudices scalable, are faster and cheaper, and even harder to trace. Existing laws are insufficient for governing or regulating this content.” With an estimated 22 million AI users in India, these images have become a potent weapon for targeting religious minorities.

The Dangerous Tropes: Dehumanization and Sexualization

The AI-generated visuals consistently frame Muslims as “inherently violent.” One example cited involves images of snakes wearing skull caps, a tactic used to portray Muslims as deceptive, dangerous, and worthy of elimination. To evade detection and moderation, some of this content is disguised using popular art styles, like the Ghibli aesthetic on Instagram, or is shared under comedy hashtags to pass as a harmless joke. This blurs the line between fact and fiction, making the propaganda more insidious.

The most engaged-with category of hate was the sexualized depiction of Muslim women, which garnered 6.7 million interactions. The report states, “This category… revealing the gendered character of much Islamophobic propaganda, which fuses misogyny with anti-Muslim hate.” These images aim to dehumanize Muslim men as predators and portray Muslim families as morally corrupt.

Fueling Conspiracy Theories and Evading Accountability

AI imagery is also being used to visually bolster dangerous conspiracy theories like “love jihad,” “population jihad,” and “rail jihad.” These narratives frame Muslims as a coordinated threat to Hindu national security. The report points out that “such imagery allows Hindu nationalist actors in the political arena to agitate hateful narratives without being fact-checked,” as the fictional nature of the images makes them difficult to debunk.

A Call for Critical Awareness

To combat this growing threat, Khan emphasized the need for greater public awareness. She argued that people need critical thinking skills to analyze these images and question the motives behind them by asking, “Who benefits from me believing this?”
