
Why AI-Generated Images Look So Strange

Have you ever wondered how computers see the world? It's a fundamentally different process from the way we humans perceive our surroundings.

With the rapid rise of generative artificial intelligence (AI), we can now ask AI tools to describe an image for us or even create a new one from a text prompt. As these tools become more integrated into our daily lives, understanding the difference between computer vision and human vision is more important than ever.

A fascinating new study published in Visual Communication explores this very question. By using AI to generate descriptions of images and then recreate images from those descriptions, the research shows how AI models "see": a bright, sensational, and generic world that stands in stark contrast to our own visual realm.

How Human Vision and AI Perception Differ

For humans, seeing is a biological process. Light enters our eyes, is converted into electrical signals by the retina, and our brains interpret these signals into the images we experience. Our vision prioritizes key elements like color, shape, movement, and depth, allowing us to navigate our environment and identify important changes.

Computers, on the other hand, process images algorithmically. They standardize visual data, analyze metadata (like time and location), and compare new images against patterns learned from vast collections of previously seen images. Instead of taking in a holistic picture, AI focuses on technical elements like edges, corners, and textures, constantly looking for patterns it can use to classify objects.
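To make "looking for edges" a little more concrete, here is a minimal sketch of one classic, hand-designed edge detector, the Sobel operator, applied to a tiny grayscale grid. Treat it as a simplified illustration under stated assumptions: modern AI vision models typically learn their own filters during training rather than using Sobel directly, and the function name `sobel_magnitude` and the sample image are hypothetical, not taken from the study.

```python
import math

# Sobel kernels (a classic hand-designed filter, used here only as an illustration):
# GX responds to left-to-right brightness changes, GY to top-to-bottom changes.
GX = [[-1, 0, 1],
      [-2, 0, 2],
      [-1, 0, 1]]
GY = [[-1, -2, -1],
      [ 0,  0,  0],
      [ 1,  2,  1]]


def sobel_magnitude(image):
    """Return the gradient magnitude for each interior pixel of a 2D grayscale grid.

    Hypothetical helper for illustration. Border pixels stay at 0.0 because
    the 3x3 kernel does not fit around them.
    """
    rows, cols = len(image), len(image[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            gx = gy = 0
            # Slide the 3x3 kernels over the neighborhood centered on (r, c).
            for i in range(3):
                for j in range(3):
                    pixel = image[r + i - 1][c + j - 1]
                    gx += GX[i][j] * pixel
                    gy += GY[i][j] * pixel
            # Combine both directions into a single edge strength.
            out[r][c] = math.hypot(gx, gy)
    return out


# Hypothetical 5x5 image: a dark left region (0) next to a bright right region (10).
image = [[0, 0, 10, 10, 10] for _ in range(5)]
edges = sobel_magnitude(image)
for row in edges:
    print(row)
```

Each interior row comes out as `[0.0, 40.0, 40.0, 0.0, 0.0]`: the filter responds strongly only where dark meets bright and stays silent in the uniform regions. A detector like this registers brightness changes, not the meaning of what it is looking at.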

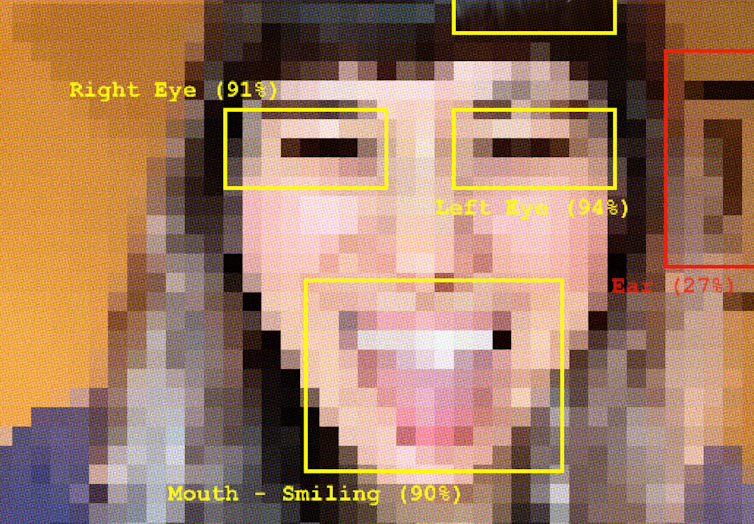

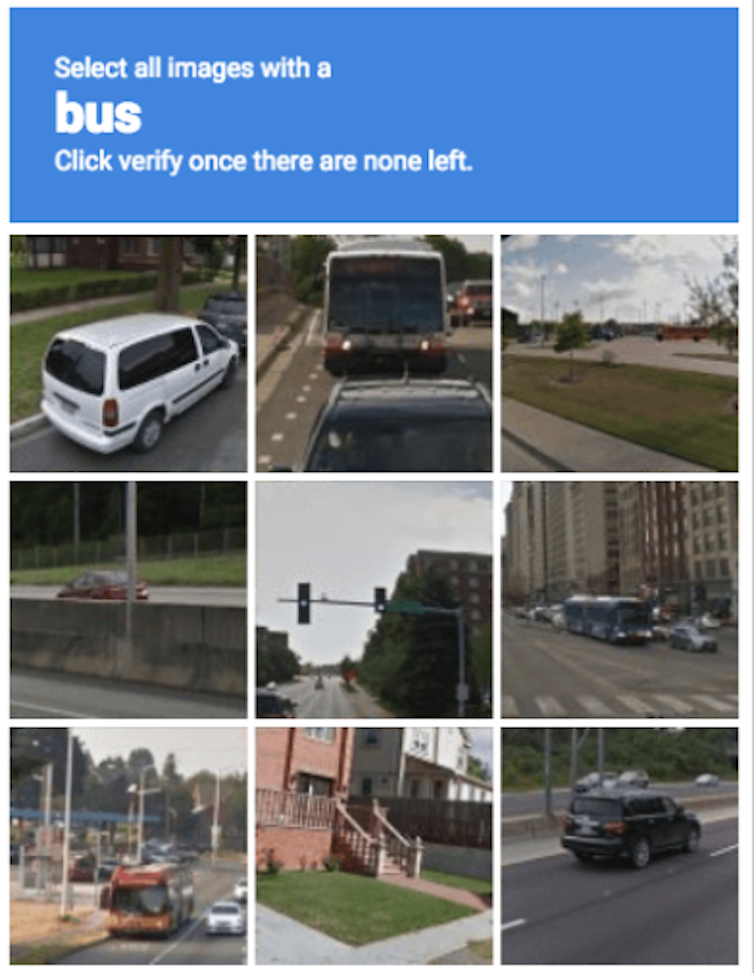

If you've ever completed an online CAPTCHA test, you've actively participated in training AI to "see." When you click on all the images with a bus, you're not just proving you're human; you're teaching a machine learning algorithm to recognize what a bus looks like.

An Experiment in AI Vision

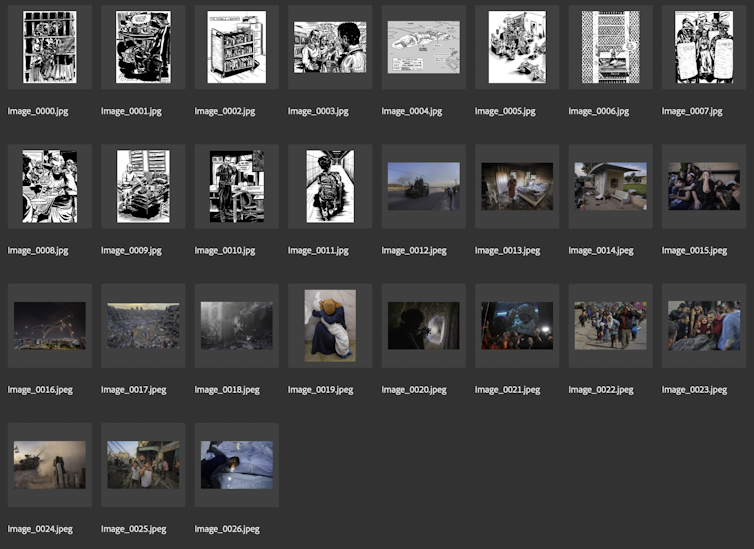

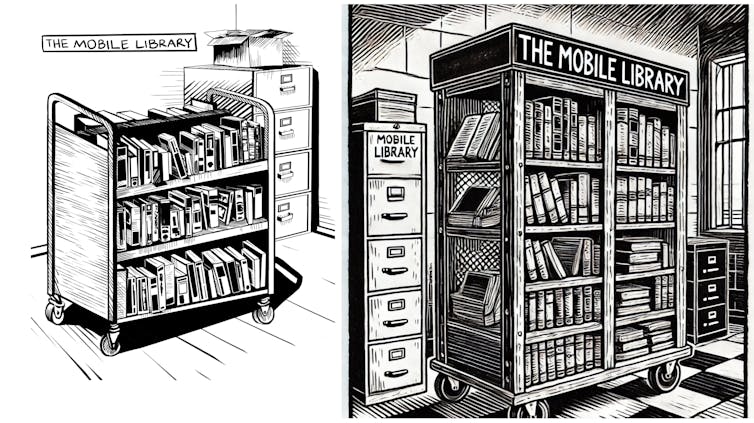

The recent research put this digital perception to the test. A large language model was asked to describe two distinct sets of human-made images: one containing hand-drawn illustrations and the other consisting of photographs.

Next, those AI-generated descriptions were fed back into an AI image generator to create new visuals. By comparing the original human art with the AI's reinterpretation, several key differences emerged.

What AI Actually Sees: A Garish, Generic World

The experiment revealed a distinct AI bias. The AI descriptions noted that the hand-drawn images were "illustrations" but failed to identify the others as "photographs." This suggests AI models treat photorealism as the default visual style.

Key human-centric details were consistently missed:

- Cultural Context: The AI could not infer cultural context from elements like Arabic or Hebrew writing, which likely reflects the dominance of English in its training data.

- Visual Nuance: Important aspects like color, depth, and perspective were largely ignored in the AI's descriptions.

- Form and Shape: The AI-generated images were noticeably more "boxy" and rigid compared to the organic shapes of the human illustrations.

Furthermore, the AI's world is a sensationalized one. The generated images were far more saturated, with brighter, more vivid colors, likely because the models were trained on high-contrast stock photography. The AI also tended to exaggerate details; for instance, a single car in an original photo became a long convoy of vehicles in the AI version.

While this generic and sensational style allows AI images to be used in many contexts, their lack of specificity can make them feel less authentic and engaging to audiences.

Human vs. AI Vision: Choosing the Right Tool

This research confirms that humans and computers see the world in profoundly different ways. Understanding when to rely on each can be a powerful advantage.

AI-generated images can be vibrant and eye-catching, but they often feel hollow and lack the emotional depth that resonates with people. For tasks requiring an authentic connection, audiences will likely find human-created images that reflect specific, real-world conditions far more compelling.

However, AI's unique capabilities make it an excellent tool for rapidly labeling and categorizing massive datasets, assisting humans in processing information at scale.

Ultimately, there is a place for both human and AI vision. By understanding the limits and opportunities of each, we can become safer, more productive, and more effective communicators in our increasingly digital world.
