Chinese Medical Students Use ChatGPT Amid Hopes and Fears

Study Overview: Chinese Medical Students and ChatGPT

Background: The use of ChatGPT in education is growing rapidly, and many students now turn to it for academic tasks. Although numerous studies have examined ChatGPT use among medical students in various settings, research on medical students in China is lacking.

Methods: Researchers surveyed 1,133 medical students from different colleges in Sichuan Province, China, between May and November 2024. The goal was to understand their awareness of and attitudes towards ChatGPT. The study used descriptive statistics, chi-square tests, and multiple regression analysis to identify factors associated with positive attitudes towards ChatGPT's future use.

Results: The study found that 62.9% of students had used ChatGPT for their medical studies, and 16.5% had even used it in a published article. The main uses were searching for information (84.4%) and completing academic assignments (60.4%). However, students also had concerns: 76.9% worried about ChatGPT spreading misinformation, and 65.4% were concerned about plagiarism. Despite these worries, 64.4% said they would use ChatGPT for help with learning problems. Overall, 60.7% had a positive view of ChatGPT's future in medicine.

Conclusion: The research indicates that most medical students see ChatGPT as a helpful tool for study and research but are cautious about its risks, especially misinformation and plagiarism. Despite these concerns, a majority are willing to use ChatGPT for problem-solving and are optimistic about its integration into medical education and clinical practice. The study suggests a need to improve students' AI literacy, create clear usage guidelines, and establish support systems to prepare medical students for AI integration.

The Rise of ChatGPT in Medical Education

Chat Generative Pretrained Transformer (ChatGPT) is a groundbreaking artificial intelligence (AI) language model that uses deep learning to create human-like responses to natural language. Since its launch, ChatGPT has gained significant attention and has been widely adopted in diverse fields like software development, journalism, and literary creation, showcasing its versatility, as noted in various studies (e.g., Kim 2023, George Pallivathukal et al. 2024). It has also shown unique value in the medical field by enabling new ways for patients and healthcare providers to interact, and by supporting medical decision-making, education, and research (Sallam 2023).

In medical education, ChatGPT can synthesize and use basic medical knowledge effectively. It can meet or come close to the passing mark in all three exams of the United States Medical Licensing Examination (USMLE) without formal training, performing similarly to third-year medical students. The tool also showed high concordance and insight in its explanations. These findings suggest ChatGPT could play a role in medical education and possibly help with clinical decision-making (Gilson et al. 2023, Wartman & Combs 2019).

Although ChatGPT offers a personalized learning experience for medical students and residents with immediate feedback in a safe environment (Kung et al. 2023), it also faces skepticism due to concerns about potential inaccuracies and ethical issues like bias, plagiarism, and copyright infringement (King 2023, Kitamura 2023). Therefore, it's crucial to empirically investigate this technology's potential for transformative innovation and assess its risks.

ChatGPT is being integrated into education at an unprecedented rate, with a growing trend of students using it for academic work (Eysenbach 2023). This study is one of the first to systematically investigate the awareness, usage patterns, and perceptions of ChatGPT among medical students in China. While previous research has explored AI adoption in medical education globally, the unique academic, cultural, and regulatory context of China remains underexamined. This study aims to fill this gap by providing data on how Chinese medical students use ChatGPT, their concerns, and their optimism about AI's future in medicine. The main objective was to evaluate medical students' perceptions of ChatGPT, analyze their usage behaviors, and investigate their attitudes towards this technology.

How the Study Was Conducted

Study Design and Ethics

Data from survey respondents were kept anonymous, and no personally identifying information was collected. The Institutional Review Board of West China Second Hospital, Sichuan University, deemed the study exempt (Approval No. 2024-083, Date: June 12, 2024). A password-protected survey platform ensured data security. The survey targeted medical students in Sichuan Province. Participation was voluntary and uncompensated.

This study used a convenience sampling method and was carried out from May 2024 to November 2024 across medical colleges in Sichuan Province, China. Participants scanned a QR code to access an introduction page and voluntarily join the survey. The introduction assured confidentiality and anonymity and confirmed voluntary participation. Participants then completed an informed consent form stating, "You acknowledge and agree that the data collected may be used for research analysis," before proceeding. Given the study's exploratory nature and the need for rapid data collection amid evolving ChatGPT adoption, convenience sampling allowed efficient access to medical students. Despite this, participants were recruited from multiple medical schools and year groups to capture diverse perspectives. While convenience sampling might limit generalizability, it enabled a timely assessment of ChatGPT's real-world use patterns among Chinese medical students, a group underrepresented in existing literature.

Questionnaire Development

The questionnaire was developed from a comprehensive literature review on related topics and adapted to the local context in China. Before the main survey, a pilot test was conducted. Six experts evaluated content validity: the Item-Content Validity Index (I-CVI) was ≥ 0.80 for all items, and the Scale-Content Validity Index/Average (S-CVI/Ave) was ≥ 0.90. Based on expert suggestions, ChatGPT usage purposes were categorized into work assistance, academic research, and clinical application. Ten students from different clinical disciplines (equal male-female ratio, including undergraduates, postgraduates, and PhD students) completed the questionnaire, timed themselves, and identified issues. The questionnaire was refined based on their feedback, including the addition of questions about challenges in using ChatGPT. Pilot test responses were excluded from the final analysis.
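For readers unfamiliar with these indices, the sketch below shows how they are conventionally computed. It assumes the usual procedure, which the source does not spell out: each expert rates each item's relevance on a 4-point scale, I-CVI is the share of experts rating the item 3 or 4, and S-CVI/Ave is the mean of the item-level values. The item names and ratings are hypothetical. With six experts, an I-CVI of at least 0.80 means at least five of the six rated the item relevant (5/6 ≈ 0.83).

```python
from statistics import mean

# Hypothetical relevance ratings (1-4 scale) from six experts; keys are items.
ratings = {
    "Q1": [4, 4, 3, 4, 4, 3],
    "Q2": [4, 3, 4, 4, 2, 4],
    "Q3": [3, 4, 4, 4, 4, 4],
}

def i_cvi(item_ratings, relevant_threshold=3):
    """Item-level CVI: proportion of experts rating the item 3 or 4 (assumed convention)."""
    relevant = sum(1 for r in item_ratings if r >= relevant_threshold)
    return relevant / len(item_ratings)

def s_cvi_ave(all_ratings):
    """Scale-level CVI, averaging method: mean of the item-level CVIs."""
    return mean(i_cvi(r) for r in all_ratings.values())

item_scores = {item: i_cvi(r) for item, r in ratings.items()}
for item, score in item_scores.items():
    print(f"{item}: I-CVI = {score:.2f}")
print(f"S-CVI/Ave = {s_cvi_ave(ratings):.2f}")
```

With these hypothetical ratings, every item clears the 0.80 item-level threshold (Q2 sits at 5/6) and the scale average clears 0.90.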

The final 20-question questionnaire was organized into four sections: demographic characteristics, academic research engagement, ChatGPT usage, and attitudes towards ChatGPT in medicine. It was published on the WJX platform. Participants were asked if they had used ChatGPT. Users completed the full questionnaire, while non-users selected reasons for not using it and then ended the survey.

Survey Distribution

The questionnaire was distributed via WeChat. Team members contacted doctors and university teachers from various medical schools, informed them of the survey's purpose, and had them forward the questionnaire to student WeChat groups. Up to three reminder messages were sent to eligible non-respondents.

Data Analysis

After data collection, responses were screened and excluded for excessive missing data, uniform answers, or unusually fast completion (less than 60 seconds for the full survey, or less than 30 seconds after question 11 for partial completions). These time thresholds were based on the pilot test.
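The exclusion rules can be expressed as a simple filter. This is a sketch under stated assumptions: the 60-second and 30-second speed thresholds come from the study, but the record fields (`duration_s`, `completed_full`, `answers`), the 20% missing-data cutoff, and the reading of "uniform answers" as every answer being identical are hypothetical, since the source does not define them. The 30-second rule is applied here to the total time of a partial completion.

```python
from dataclasses import dataclass
from typing import List, Optional

# Speed thresholds reported in the study (derived from its pilot test).
MIN_SECONDS_FULL = 60      # full questionnaire (ChatGPT users)
MIN_SECONDS_PARTIAL = 30   # partial completion ending after question 11 (non-users)
# Hypothetical cutoff: the study does not state its missing-data threshold.
MAX_MISSING_FRACTION = 0.2

@dataclass
class Response:
    duration_s: float
    completed_full: bool
    # Answers to the questions this respondent was shown; None marks a missing answer.
    answers: List[Optional[str]]

def is_valid(resp: Response) -> bool:
    """Return True if the response passes all (partly hypothetical) cleaning rules."""
    if not resp.answers:
        return False
    # Rule 1: unusually fast completion, with a lower bar for partial completions.
    min_time = MIN_SECONDS_FULL if resp.completed_full else MIN_SECONDS_PARTIAL
    if resp.duration_s < min_time:
        return False
    # Rule 2: excessive missing data.
    missing = sum(1 for a in resp.answers if a is None)
    if missing / len(resp.answers) > MAX_MISSING_FRACTION:
        return False
    # Rule 3: uniform answers (straight-lining), assumed to mean all given answers are identical.
    given = [a for a in resp.answers if a is not None]
    if len(given) > 1 and len(set(given)) == 1:
        return False
    return True

responses = [
    Response(45, True, ["A", "B", "C"]),    # too fast for the full survey
    Response(120, True, ["A", "B", "C"]),   # passes all rules
    Response(40, False, ["A", "B"]),        # partial completion above 30 s
    Response(90, True, ["B", "B", "B"]),    # uniform answers
    Response(90, True, ["A", None, None]),  # two of three answers missing
]
cleaned = [r for r in responses if is_valid(r)]
print(f"{len(cleaned)} of {len(responses)} responses retained")
```

Keeping each rule as a separate early return makes it easy to count how many responses each criterion removes, which is useful when reporting a cleaning flowchart.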

Responses were analyzed using SPSS software (version 27). Attitudes towards future ChatGPT use in academic research were categorized as positive, neutral, or negative. Descriptive statistics were used to tabulate variable frequencies, and chi-square tests and multiple regression analysis were used to examine factors associated with positive attitudes towards future ChatGPT use. A P-value < 0.05 was considered statistically significant.
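As an illustration of the chi-square step, the sketch below computes Pearson's chi-square test of independence (without Yates' continuity correction) on a 2×2 table, for example gender by positive versus not-positive attitude, using only the standard library. The counts are invented and are not the study's data, and collapsing "neutral" and "negative" into "not positive" is an assumption about how a binary outcome might be coded. With one degree of freedom, the statistic is compared with the 0.05 critical value of 3.841.

```python
# Hypothetical 2x2 contingency table (NOT the study's data):
# rows = gender (male, female); columns = attitude (positive, not positive)
observed_counts = [
    [60, 40],
    [45, 55],
]

CRITICAL_VALUE_DF1_P05 = 3.841  # chi-square critical value for df = 1, alpha = 0.05

def pearson_chi_square(observed):
    """Pearson chi-square statistic and degrees of freedom for an r x c table."""
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(col) for col in zip(*observed)]
    grand_total = sum(row_totals)
    chi2 = 0.0
    for i, row in enumerate(observed):
        for j, obs in enumerate(row):
            # Expected count under independence: row total * column total / N.
            expected = row_totals[i] * col_totals[j] / grand_total
            chi2 += (obs - expected) ** 2 / expected
    dof = (len(observed) - 1) * (len(observed[0]) - 1)
    return chi2, dof

chi2, dof = pearson_chi_square(observed_counts)
# The critical value above is only valid for a 2x2 table (df = 1).
significant = dof == 1 and chi2 > CRITICAL_VALUE_DF1_P05
print(f"chi2 = {chi2:.3f}, df = {dof}, significant at 0.05: {significant}")
```

A chi-square test like this checks one factor at a time; the regression step then estimates each factor's association while adjusting for the others.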

What the Survey Revealed About ChatGPT Use

Participant Demographics

The survey included 1,133 participants: 432 male (38.1%) and 701 female (61.9%). Just over half (53.8%) held a bachelor's degree, while 10.1% held a doctoral degree. Most respondents (55.7%) were majoring in clinical medicine, and the most common primary research area was clinical research (54.3%).
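The descriptive percentages are simple proportions of all respondents. As a quick check, the sketch below tabulates the reported gender counts (432 male, 701 female) and reproduces the 38.1% and 61.9% figures; the helper name `frequency_table` is illustrative only.

```python
from collections import Counter

def frequency_table(values):
    """Return {category: (count, percent)}, with percentages rounded to one decimal."""
    counts = Counter(values)
    total = sum(counts.values())
    return {cat: (n, round(100 * n / total, 1)) for cat, n in counts.items()}

# Gender counts reported in the study: 432 male, 701 female (N = 1,133).
gender = ["male"] * 432 + ["female"] * 701
gender_table = frequency_table(gender)
for category, (n, pct) in gender_table.items():
    print(f"{category}: {n} ({pct}%)")
```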

Awareness and Initial Exposure to ChatGPT

Among respondents, 90.1% had heard of ChatGPT, and 29.7% considered it useful for researchers in their field (Figure 1A). Most participants had not published any work in the past three years (Figure 1B). Although 62.9% had used ChatGPT, only 16.5% had incorporated it into published articles (Figure 1C). The 37.1% who had not used ChatGPT most often cited dependence on the technology (42.4%), lack of originality in assignments or research (42.4%), and plagiarism allegations (41.2%) as their concerns (Figure 1D).

How Medical Students Are Using ChatGPT

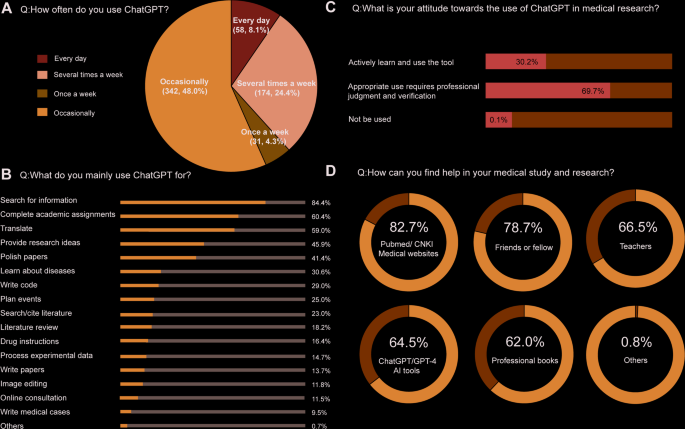

Regular use was uncommon: only 8.1% reported using ChatGPT daily in their work, while 48% reported occasional use (Figure 2A). The most common applications were searching for information (84.4%), completing academic assignments (60.4%), translation (59.0%), generating research ideas (45.9%), and polishing papers (41.4%) (Figure 2B). Despite this enthusiasm, most respondents (69.7%) held a neutral stance, agreeing that ChatGPT can be used but that its output requires professional judgment and verification (Figure 2C). Notably, when facing difficulties in medical studies and research, 64.5% still chose to turn to AI tools such as ChatGPT and GPT-4 for support (Figure 2D).

Student Attitudes: Benefits and Concerns

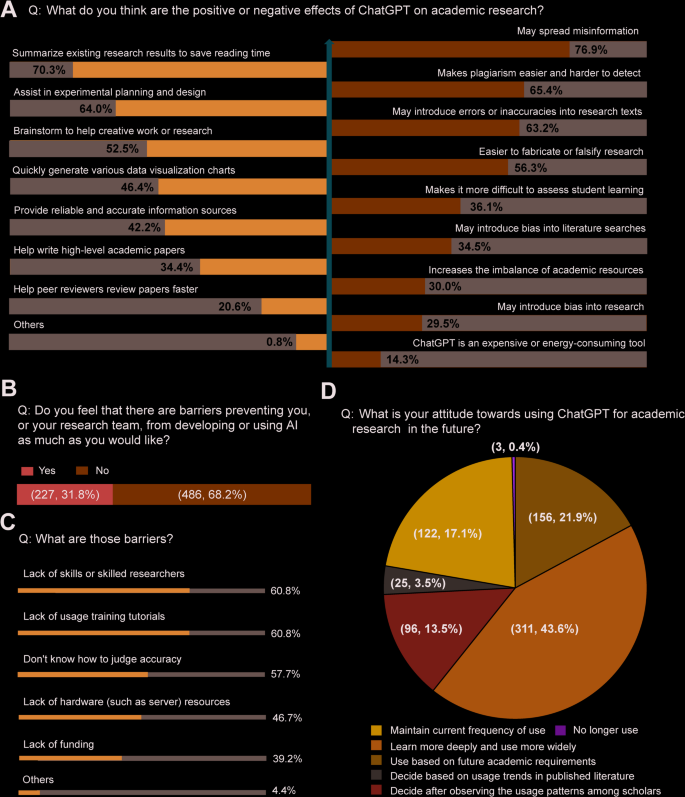

Respondents acknowledged that ChatGPT could effectively summarize existing research to save reading time (70.3%), assist in experimental planning (64.0%), and support brainstorming for creative work (52.5%). However, they also raised concerns about ChatGPT spreading misinformation (76.9%), facilitating plagiarism (65.4%), and enabling falsified research (63.2%) (Figure 3A). Only 31.8% reported encountering obstacles in developing or using AI (Figure 3B), most often a shortage of skilled researchers (60.8%) and inadequate training courses (60.8%). Despite these mixed views, 60.7% expressed a positive outlook on future ChatGPT use (Figure 3D). Perceived negative impacts and obstacles were not significantly associated with medical students' attitudes towards its future use.

Factors Influencing ChatGPT Acceptance

Male students were more likely than female students to hold a positive attitude (odds ratio [OR] = 1.69, 95% confidence interval [CI]: 1.22–2.35, P = 0.003). High-frequency users (several times a week) were also more likely than low-frequency users to hold a positive attitude (OR = 4.75, 95% CI: 2.71–9.00, P < 0.001).
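To help read these odds ratios, the sketch below shows how an OR and its 95% CI are formed. The study's ORs come from its multivariable regression model and are adjusted for other covariates, so the crude 2×2 calculation here will not reproduce them, and its counts are hypothetical. It uses the standard Wald interval on the log scale, exp(ln OR ± 1.96 × SE) with SE = sqrt(1/a + 1/b + 1/c + 1/d). Because such an interval is symmetric on the log scale, a reported CI also implies an SE; the helper `se_from_ci` (a hypothetical name) backs it out, and applied to the gender result it recovers bounds that round to the reported 1.22 and 2.35.

```python
import math

Z_95 = 1.959964  # standard normal quantile for a two-sided 95% interval

def odds_ratio_wald(a, b, c, d, z=Z_95):
    """Crude odds ratio and Wald 95% CI for a 2x2 table (assumes no zero cells).

    a = exposed with outcome, b = exposed without outcome,
    c = unexposed with outcome, d = unexposed without outcome.
    """
    or_ = (a * d) / (b * c)
    se_log = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    lo = math.exp(math.log(or_) - z * se_log)
    hi = math.exp(math.log(or_) + z * se_log)
    return or_, lo, hi

def se_from_ci(lo, hi, z=Z_95):
    """Standard error of ln(OR) implied by a CI that is symmetric on the log scale."""
    return (math.log(hi) - math.log(lo)) / (2 * z)

# Hypothetical counts (NOT the study's data): positive vs. not-positive attitude
# among high-frequency users (a, b) and low-frequency users (c, d).
or_, lo, hi = odds_ratio_wald(a=20, b=10, c=10, d=20)
print(f"crude OR = {or_:.2f}, 95% CI {lo:.2f}-{hi:.2f}")

# Consistency check on the reported gender result: OR 1.69, 95% CI 1.22-2.35.
se = se_from_ci(1.22, 2.35)
lo_g = math.exp(math.log(1.69) - Z_95 * se)
hi_g = math.exp(math.log(1.69) + Z_95 * se)
print(f"implied SE of ln(OR) = {se:.3f}; reconstructed CI {lo_g:.2f}-{hi_g:.2f}")
```

An interval that excludes 1, as both reported intervals do, corresponds to a two-sided P < 0.05 for that factor.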

Understanding the Findings: ChatGPT's Role and Risks

The study's findings improve our understanding of medical students' perceptions of and attitudes towards ChatGPT in medical education and research. The observed adoption and usage patterns can be contextualized using the Technology Acceptance Model (TAM) and Diffusion of Innovations Theory. Most participants had used ChatGPT in their medical studies or research, some in published work, and a majority (60.7%) expressed a favorable attitude towards its future use. The high adoption rate and positive outlook align with TAM's concepts of perceived usefulness and perceived ease of use, as reflected in students' primary use of ChatGPT for information searching and academic tasks.

The data also show that male students and high-frequency users are more likely to hold a favorable attitude. The gender disparity might stem from sociocultural factors, such as traditional gender roles that shape technological self-efficacy. Male participants often show more confidence in their technical skills, possibly owing to earlier exposure to programming and AI curricula, whereas female participants showed greater awareness of and concern about potential technological risks. From a Diffusion of Innovations perspective, these gender-based differences suggest that male and female students occupy different positions on the innovator-laggard continuum, shaped by sociocultural factors rather than education level, since the divergence persists regardless of educational attainment. Concerns about misinformation and plagiarism reflect perceived risk, a construct frequently added to TAM, and may dampen adoption. These findings suggest that successful AI integration in medical education requires demographically tailored strategies that address perceived risks and sociocultural barriers while leveraging perceived benefits, with an emphasis on experiential learning to foster positive attitudes across all user groups and promote equitable AI integration.

Cross-sectional studies provide initial insights into ChatGPT's application in medical education. Most early research focused on student and educator perceptions of and experiences with ChatGPT, and on factors influencing students' intention to use it. In this study, 62.9% of participants had a working understanding of ChatGPT and had used it for various academic tasks, and 64.5% turned to it when facing learning challenges. These results show widespread adoption and highlight the need for structured incorporation into medical curricula. Possible approaches include embedding ChatGPT modules in medical information retrieval courses, developing comparative teaching units, demonstrating how ChatGPT complements professional medical databases such as UpToDate, and designing ChatGPT-facilitated clinical reasoning exercises that foster productive interaction between AI tools and medical education.

While cross-sectional studies identify correlations, randomized controlled trials (RCTs) can establish causality. Studies have shown that AI significantly enhanced students' self-directed learning and critical thinking compared with traditional methods; as a virtual assistant, AI improved students' knowledge and surgical skills; and as an auxiliary medical tool, it markedly improved the quality of, and satisfaction with, clinical decision-making. However, other research has shown adverse effects, such as reduced reliability of reporting when AI was used. Given these mixed outcomes, and this study's finding that medical students use ChatGPT for academic work while also worrying about its negative impacts, further research is needed to resolve the contradictions. Guidelines and policies are also needed to regulate the ethical use of AI in education.

Several institutions and countries have incorporated AI into medical education. Stanford University established an AI lab to integrate AI into clinical practice, and Japan developed a comprehensive AI general education curriculum. China has accelerated AI education through policy interventions: in 2020, the State Council endorsed integrating AI with medical disciplines; the 2022 national curriculum reform embedded AI fundamentals in compulsory education; and by 2023, 498 universities had launched AI undergraduate programs and 28 state-approved innovation pilot zones had been established. These measures reflect China's strategy to build a multi-tiered AI talent ecosystem.

In Sichuan Province, high-quality AI medical resources are concentrated in Chengdu, and medical colleges in more remote areas have limited access, which could create disparities in AI literacy. Sichuan's “Medicine + AI” interdisciplinary pilot program may raise competencies in some institutions, but this policy advantage might not be replicated elsewhere. The traditional view in Sichuan medical education, which treats AI as a tool rather than a decision-maker, might discourage critical thinking about AI's ethical risks, whereas coastal regions place more emphasis on supervising AI decision-making. Investigating medical students, the primary cohort of future medical AI users, is therefore relevant to the digital transformation of medical education both regionally and nationally. However, extrapolating these findings requires adjusting for variations in local medical ecosystems.

Study Limitations to Consider

Several limitations should be considered. Convenience sampling might have introduced selection bias by overrepresenting participants from tertiary medical institutions with advanced resources, while medical colleges in prefecture-level cities with limited AI infrastructure might be underrepresented. Senior medical students on clinical rotations may have had difficulty participating, which could reduce response rates from this group. AI literacy and usage among students in non-clinical specialties (public health, basic medical sciences) are insufficiently documented, limiting generalizability. Self-selection bias might also have influenced the results, as students favorable to AI might have been more likely to participate, potentially inflating estimates of positive attitudes.

The Future of ChatGPT in Medical Training: Recommendations

ChatGPT, as an innovative AI tool, has diverse and impactful potential applications in medicine. This study found that participants predominantly use ChatGPT for academic tasks in medical research, especially literature retrieval and completing scholarly assignments. Multivariate analysis identified significant demographic and behavioral patterns in technology acceptance: male participants and high-frequency users were more receptive to ChatGPT. These results suggest an association between technological proficiency, depth of research engagement, and ChatGPT acceptance. Given concerns about risks such as the spread of misinformation and academic integrity violations, three strategic interventions are proposed: implementing comprehensive educational programs on the appropriate use of ChatGPT, establishing robust guidelines for its application in academic and clinical settings, and developing an integrated support framework to nurture digital competencies among emerging healthcare professionals.
