
The Big Problem Holding Back Google’s AI Tools

In the race to dominate the generative AI space, sparked by the rise of ChatGPT, Google has emerged as a formidable contender. Since declaring a "code red" in late 2022, the tech giant has launched a powerful and wide-reaching suite of AI tools that deliver real value to users.

Circle to Search, for one, has been hailed as one of Google's best software innovations in years. The AI-enhanced Recorder app on Pixel devices has been a game-changer for many, and the Gemini chatbot stands as a strong competitor to ChatGPT. I personally find myself using Gemini far more often than ChatGPT.

However, Google's frantic pace to outdo the competition has created a significant side effect: an organizational mess. This fragmentation is a critical issue that the company must address if it wants to maintain its leadership position in the AI revolution.

Google’s AI Tools Need a Centralized Hub

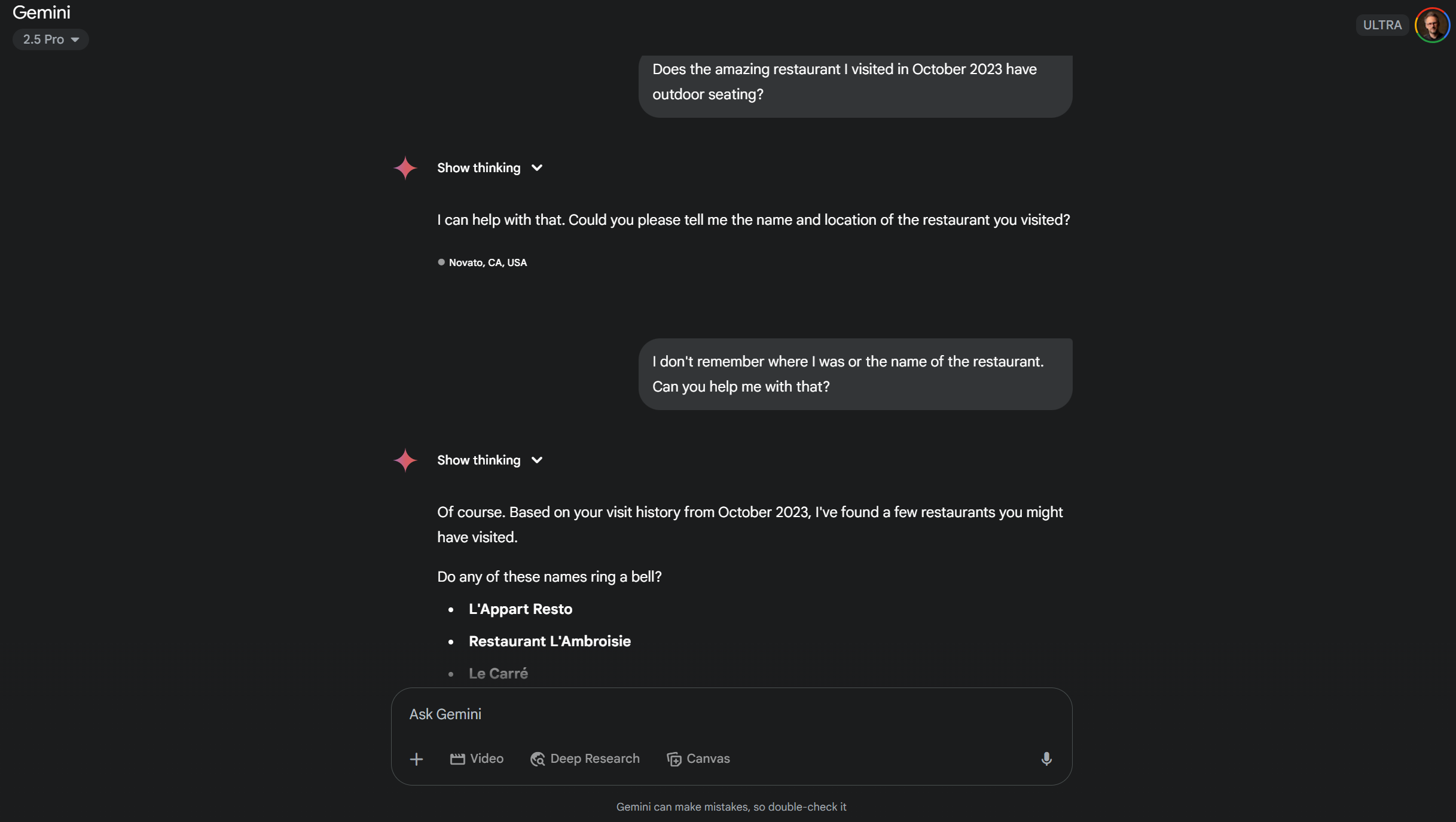

To understand the problem, consider a simple question: Does that amazing restaurant you went to last October have outdoor seating? If you have a poor memory like me, finding the answer becomes a surprisingly complex task.

First, I need to figure out where I was. Google Maps Timeline is no longer an option for me, as the feature has changed and I switch phones too often. My next stop would be Google Photos, where I could use its AI search to find food pictures from October 2023 to pinpoint a location. With a general area identified, I'd then have to jump over to Google Maps, hope a restaurant name jogs my memory, and then finally perform another search there to check for outdoor seating.

AI is supposed to make things faster and easier, but a seemingly simple question about a restaurant I visited still takes multiple app searches to answer.

This multi-step process is exactly what a single, universal search bar should handle. Yet no such tool exists. Neither Google.com nor the Google app can access my personal information to answer the query. Even the main Gemini app, despite being linked to my Gmail, Calendar, and Maps accounts, couldn't help. Instead of using my data, it hallucinated three French restaurants I had never visited.

What's the point of granting Gemini access to my personal data if it doesn't use it for practical tasks? This disconnect highlights a fundamental flaw: I have to bounce between apps just as I did before the generative AI boom.

Why are there numerous AI search tools in Google's repertoire but not one 'universal' search bar that does everything?

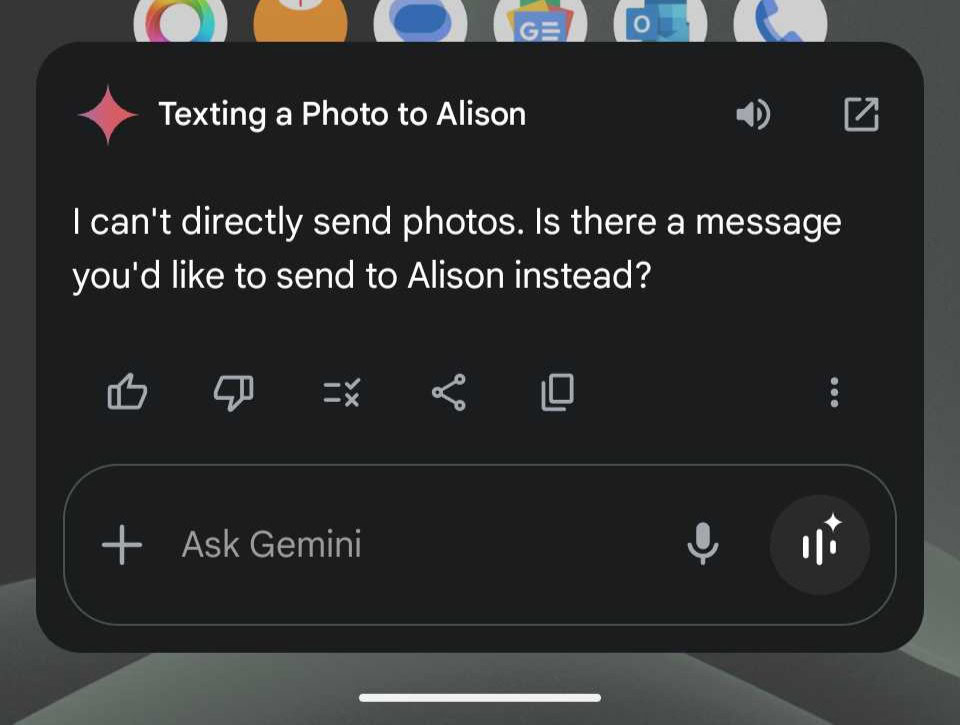

Here’s another example of this scattershot approach. On Android, you can ask Gemini to find a specific photo with a request such as “Find a photo of me and my dad at the zoo last year,” and it works brilliantly. You can also ask it to “Text my wife to let her know I am on my way home.” But if you try to combine these actions by asking it to find a photo and send it to your wife, Gemini fails. The function that searches Photos and the one that controls Messages don't communicate with each other.

Beyond functionality, the branding itself is a maze. On my phone, Gemini is a chatbot inside the Gemini app, powered by the Gemini 2.5 Pro language model. That’s three separate things with the same name. Add Gemini Live and Gemini 2.5 Flash to the mix, and the confusion grows.

Google's branding for its AI tools is also very confusing, and AI features in Google Search aren't replicated in Gemini.

This confusion extends to Google Search, where you can try an "AI Mode" that is different from the "AI Overviews" that sometimes appear at the top of search results. Neither of these features is accessible within the Gemini app, even though both are powered by the same underlying Gemini LLM. Instead of simplifying tasks, Google has created a confusing collection of tools that don't work well together.

A Familiar Problem Across the Tech Landscape

Google isn't alone in this struggle. Samsung's Galaxy AI suite, while impressive, suffers from the same fragmentation. For instance, Galaxy phones have an AI-powered search within the Settings app that lets you use natural language to find options. You can say, “My screen is too bright,” and it will surface the relevant display controls.

Samsung is making similar mistakes in this realm, with Galaxy AI features only appearing in certain areas and not working well with others.

The Settings search is a fantastic feature, especially for less tech-savvy users. However, it only works in that specific search bar. If you ask Gemini the same question, it can't access your phone's settings. Relying on the user to know where to ask for help defeats the purpose of an intuitive, all-encompassing AI assistant.

Can This Ever Really Be Fixed?

Google has good reason to be cautious here. A truly universal search that indexes personal data alongside web results has historically sparked privacy fears. Google Desktop, a tool from 2004 that indexed a user's local files, was eventually retired after users worried their private information was being exposed to the public web. A similar feature in the Android search widget around 2016, which searched across apps, was also removed due to user confusion and privacy concerns.

Even if Google can never truly solve this fragmentation issue, it can still do a whole lot better than it is right now.

That history of privacy backlash likely keeps Google from turning Google.com into the all-in-one search I'm imagining. However, the Gemini app could be the perfect place for it. Users today are more accustomed to data aggregation within specific apps. They might be more willing to accept that the Gemini app knows everything about them, without fearing that their data is publicly accessible.

Even without a single universal tool, Google can improve the current situation. For the AI revolution to be more than just a marketing slogan, the walls between these powerful but isolated tools must come down. We need one place that can access information across all of Google's platforms and execute complex commands. Until then, we're left with a dozen scattered AI gadgets, not the seamless future we were promised.
