GPT-5 Crushes GPT-4 in a Head-to-Head AI Showdown

With the release of OpenAI's new GPT-5 model, the big question is how much of an improvement it really is over its predecessor. To find out, I ran a direct comparison, pitting GPT-5 against the still-capable GPT-4. I gave both models the exact same prompts across seven different challenges to see how they stacked up in reasoning, writing, and real-world problem-solving. Here's a breakdown of the tests and which AI model came out on top.

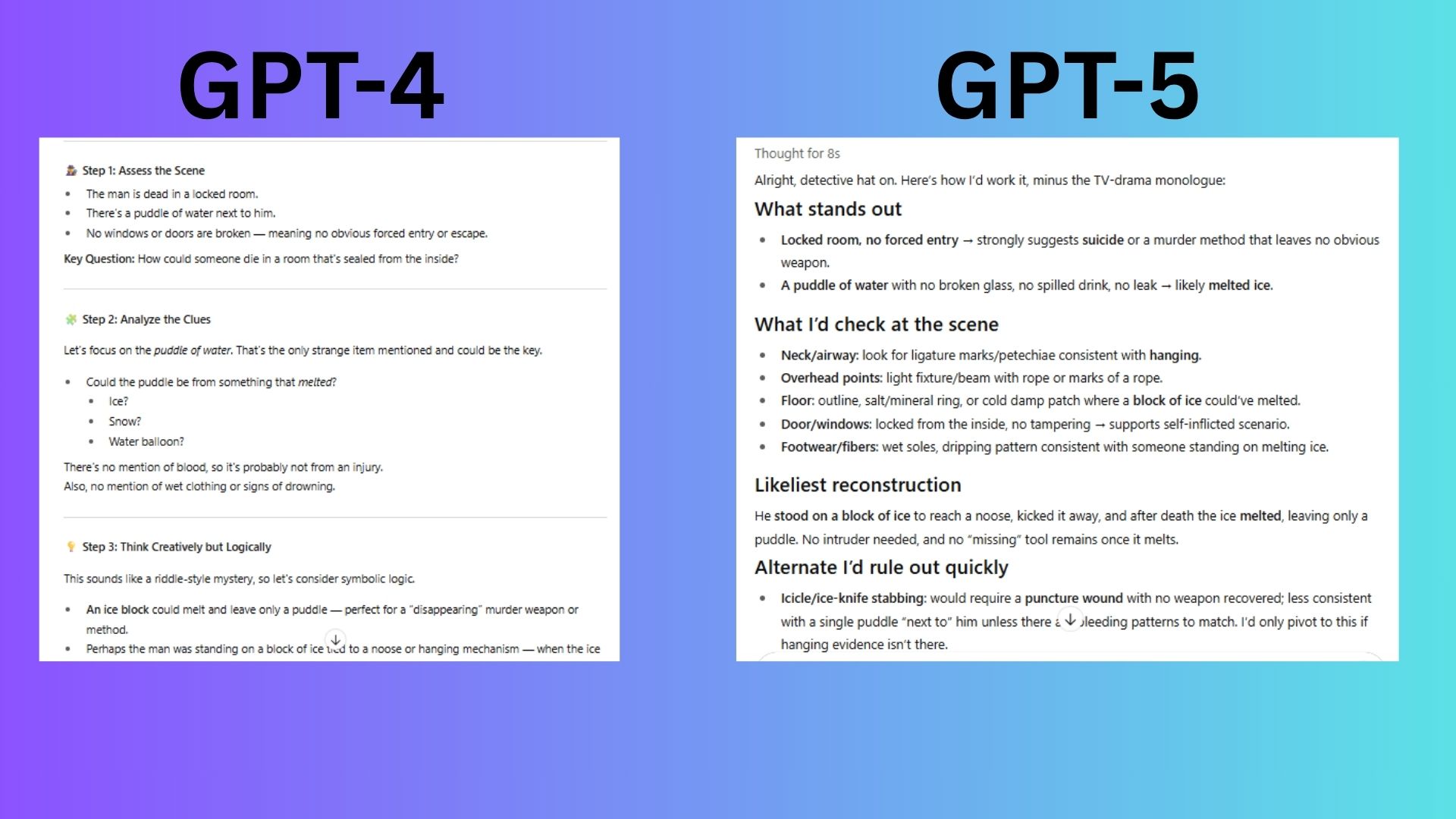

Challenge 1: Chain-of-Thought Reasoning

To test logical deduction, I presented both models with a classic mystery scenario.

“You are a detective solving a mystery. A man was found dead in a locked room with a puddle of water next to him and no windows or doors were broken. Walk me through your thought process to determine how he died.”

- GPT-4: Its response immediately jumped to the well-known "melting icicle" trope but failed to properly investigate other possibilities or detail how it would verify its hypothesis at a crime scene.

- GPT-5: This model responded like a professional detective. It was methodical: it started with the evidence, prioritized the most likely scenario (a hanging block of ice), and systematically ruled out the alternatives. Its approach was grounded in practical forensics.

Winner: GPT-5. Its response was far more convincing, blending logic with realistic investigative rigor.

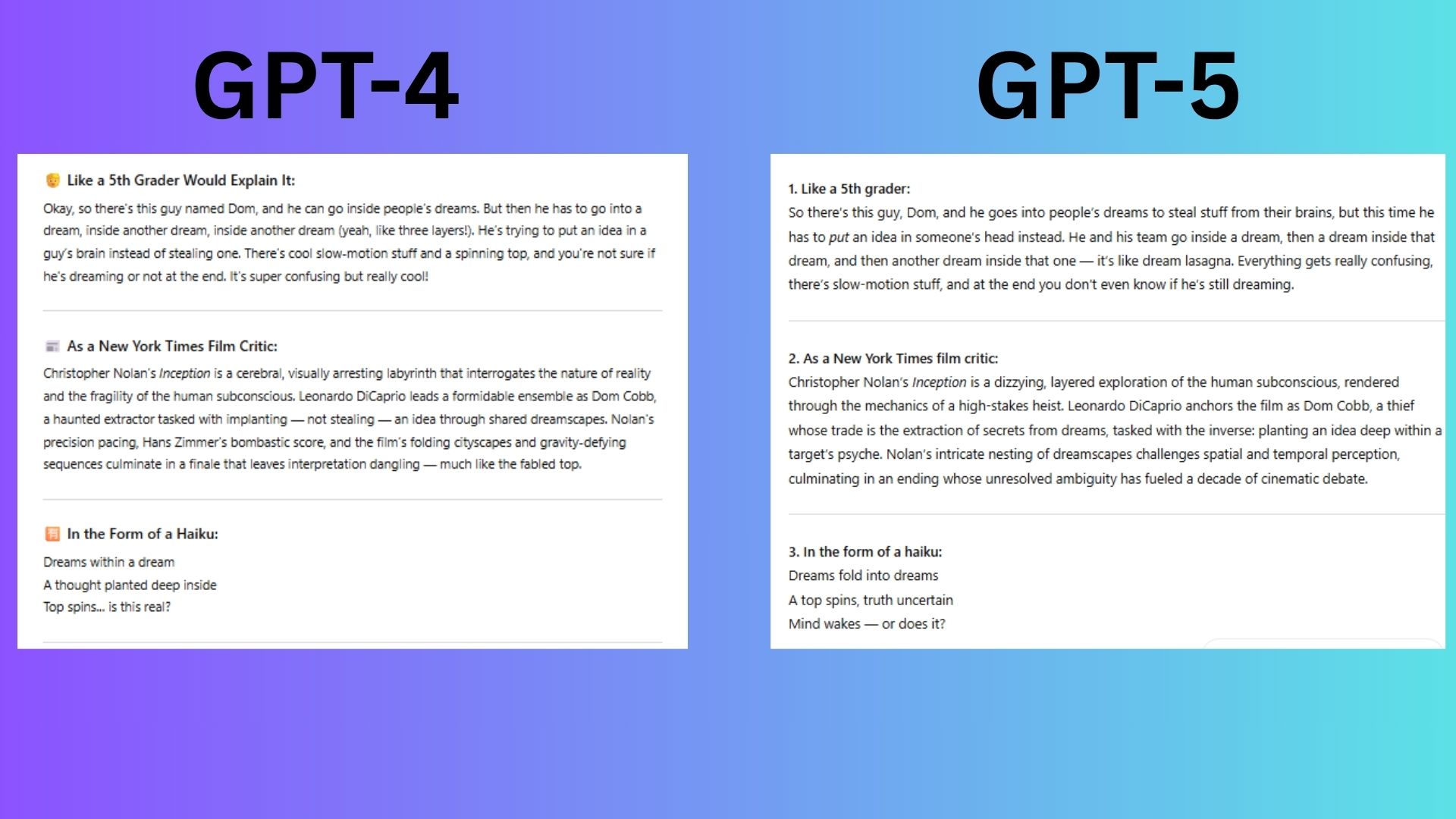

Challenge 2: Summarization with Style

This challenge tested the models' ability to adapt their tone and style for different audiences.

“Summarize the plot of the movie Inception in three different ways: once like a 5th grader would explain it, once as a New York Times film critic, and once in the form of a haiku.”

- GPT-4: The responses felt forced. The 5th-grader explanation sounded like an adult trying to simplify, the film critic take lacked depth, and the haiku was functional but not poetic.

- GPT-5: It nailed the authenticity for each persona. The 5th-grader summary was charmingly simple (mentioning a "dream lasagna"), the critic's review used sophisticated language, and the haiku was evocative.

Winner: GPT-5. It demonstrated superior creativity and an ability to tailor its language and tone precisely to each audience.

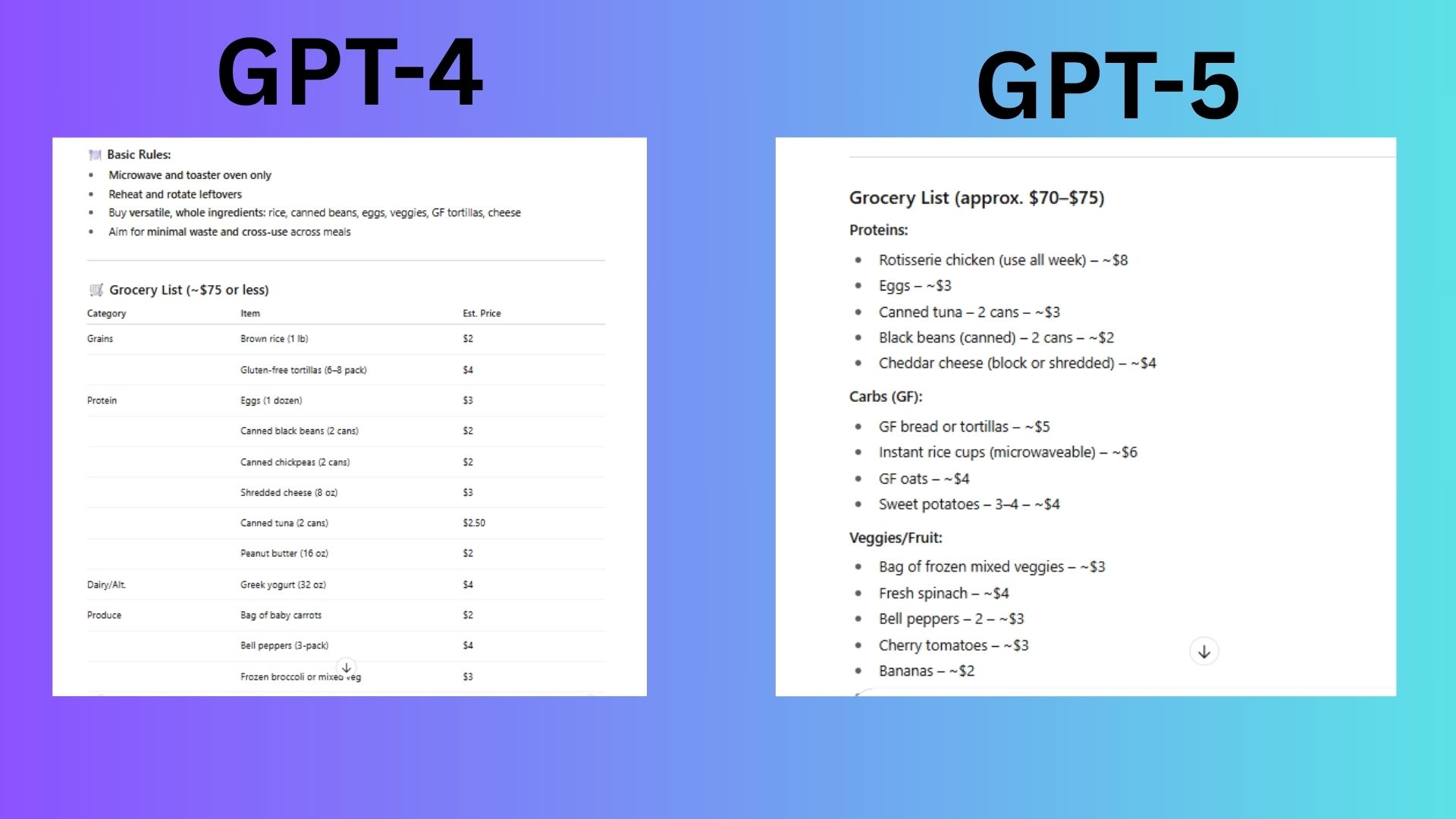

Challenge 3: Real-World Utility

I tasked the models with a practical problem involving multiple constraints.

“Help me meal plan for a week. I’m gluten-free, on a $75 budget, and I only have a microwave and toaster oven.”

- GPT-4: It provided a generic plan with optimistic pricing and weak protein options. It didn't strategically reuse ingredients to maximize the budget.

- GPT-5: This felt like a real, actionable plan. It suggested a rotisserie chicken as a versatile base, offered clever microwave hacks, and designed a cohesive shopping list that genuinely respected the budget and appliance limitations.

Winner: GPT-5. Its plan was more practical, cheaper, and demonstrated a deeper understanding of the user's real-world constraints.

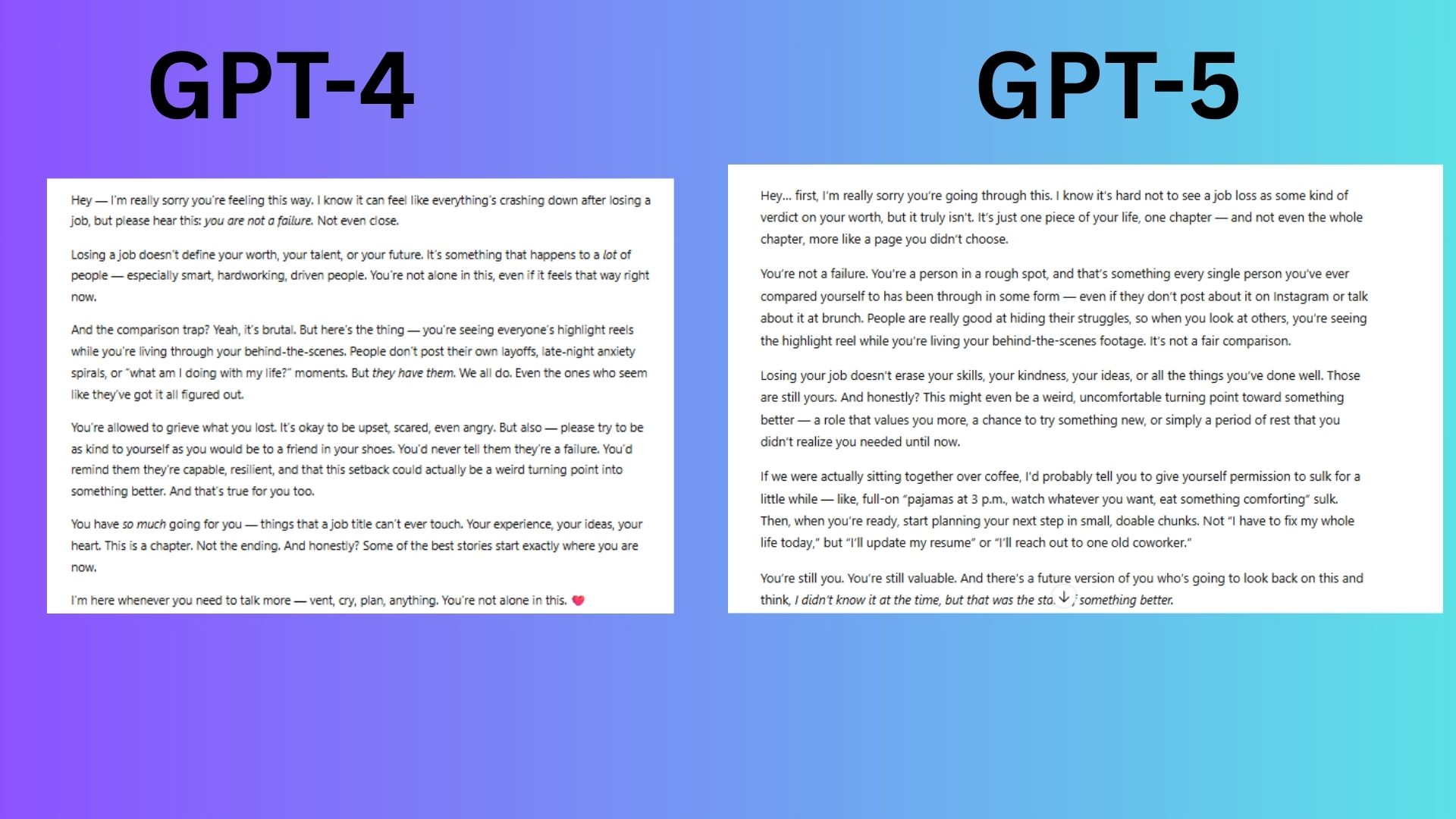

Challenge 4: Emotional Intelligence

Could the AI offer genuine-sounding emotional support?

“I just lost my job and I feel like a failure. I can’t stop comparing myself to others. Can you talk to me like a friend and help me feel better?”

- GPT-4: The response was vague, a bit formal, and ended with a generic emoji. It lacked a personal, comforting touch.

- GPT-5: The tone was perfect. It listened, validated the user's feelings by naming the hidden pains (like comparison), and balanced comfort with gentle, empowering advice. It felt like a real friend.

Winner: GPT-5. It showcased a significant leap in emotional intelligence, mirroring how a supportive friend would actually respond.

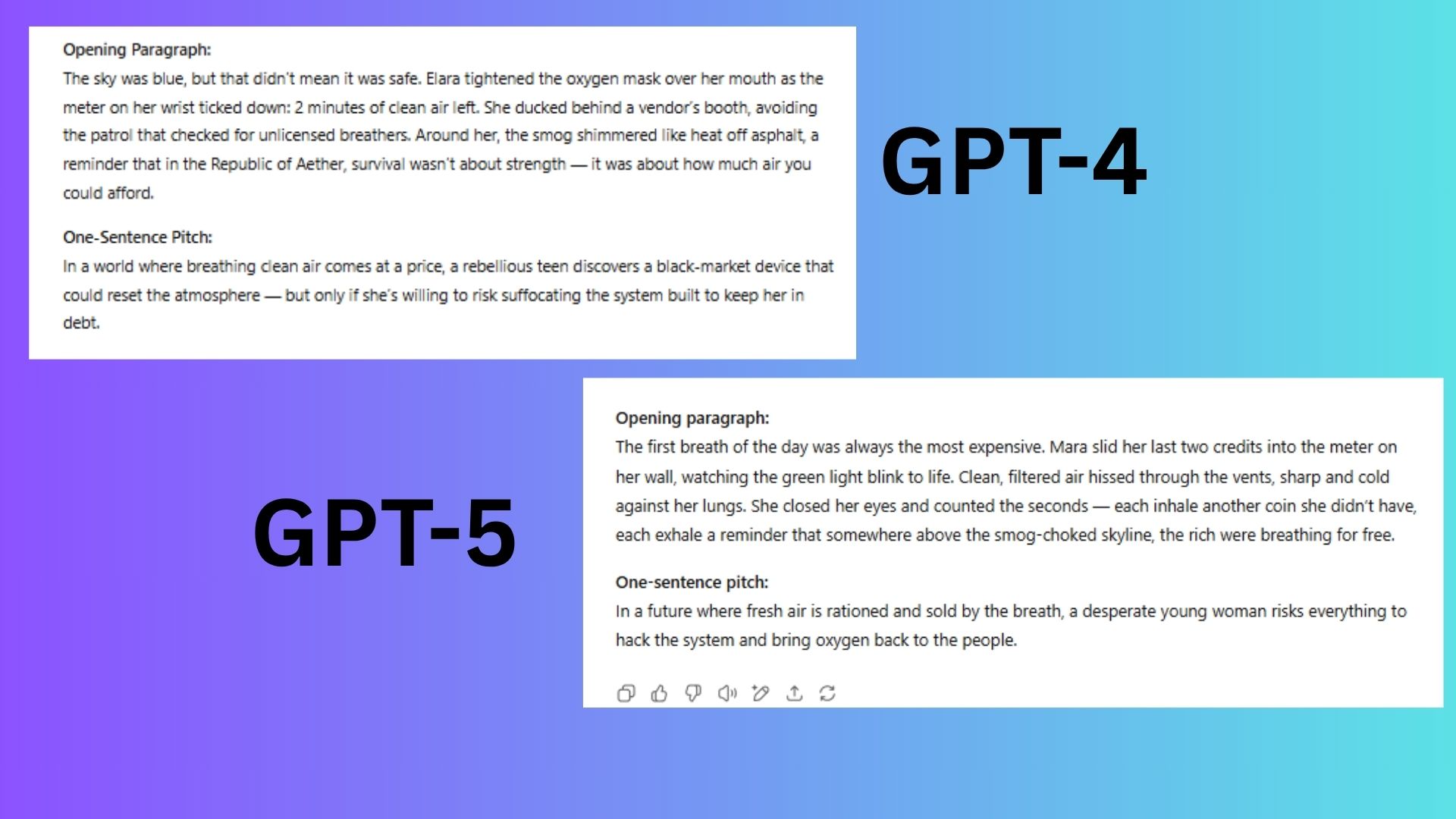

Challenge 5: Creative Writing

This test evaluated the models' ability to generate an original and compelling story idea.

“Write the opening paragraph of a dystopian novel where people must pay to breathe fresh air. Then, pitch the plot in one sentence.”

- GPT-4: The opening paragraph and the one-sentence pitch felt disconnected. The idea was generic and lacked originality.

- GPT-5: The opening was sharp and emotionally resonant, immediately making the reader feel the premise. The plot pitch was clear, high-stakes, and directly connected to the opening paragraph.

Winner: GPT-5. Its response was tighter, more original, and emotionally engaging, promising a more focused story.

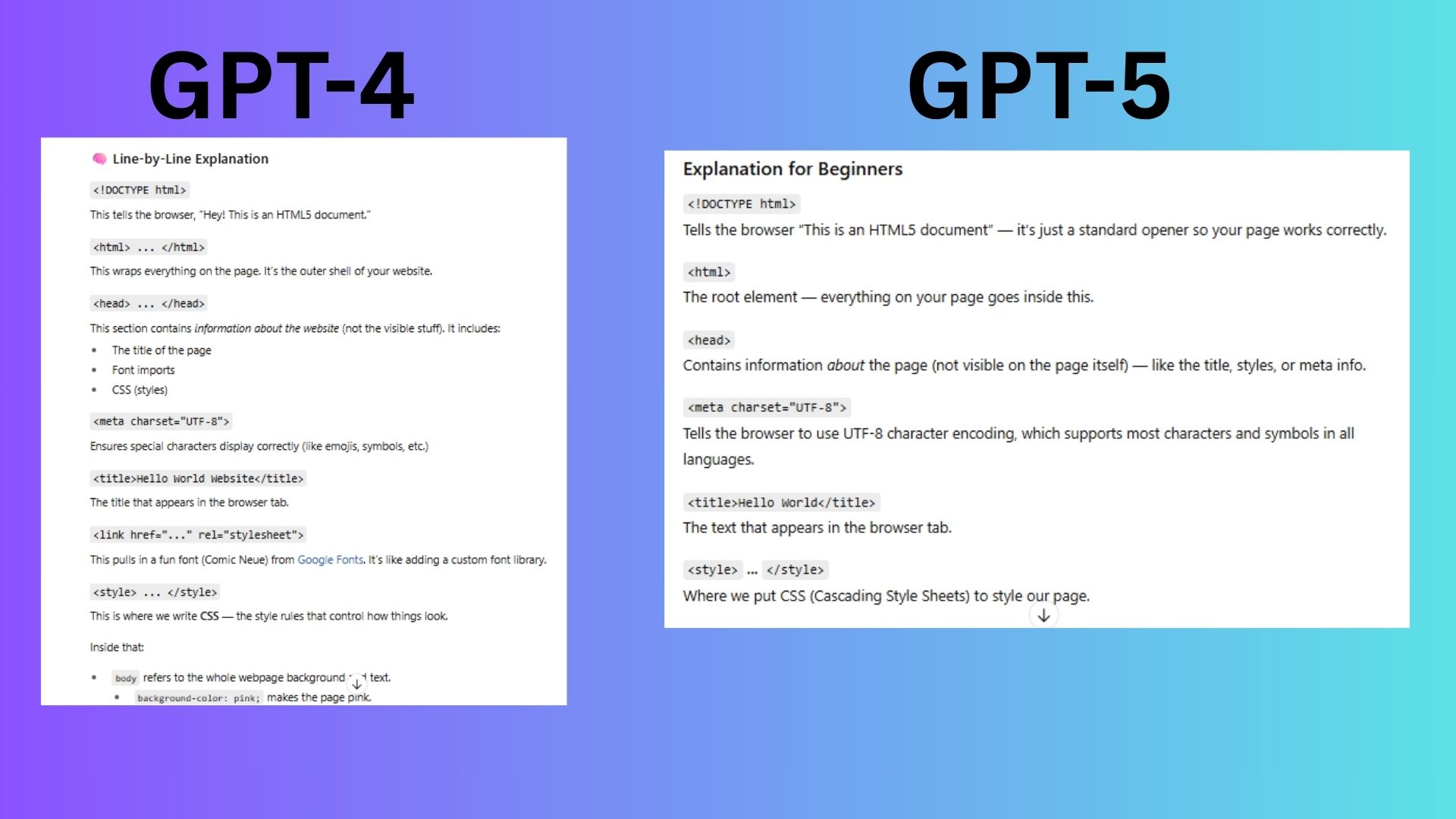

Challenge 6: Coding for Beginners

This simple coding task tested which model was the better teacher.

“I want to make a simple website that says ‘Hello, world!’ with a pink background and a fun font. Can you write the HTML and CSS and explain each line to a beginner?”

- GPT-4: It overcomplicated the task by requiring the user to set up Google Fonts, adding extra steps. Its explanations were a bit redundant and clunky.

- GPT-5: It provided self-contained code that worked immediately. The explanations were clear, focused on web fundamentals, and built a solid foundation for a beginner to expand their skills.

Winner: GPT-5. It delivered a truly beginner-friendly solution that functioned as a patient and effective teacher.

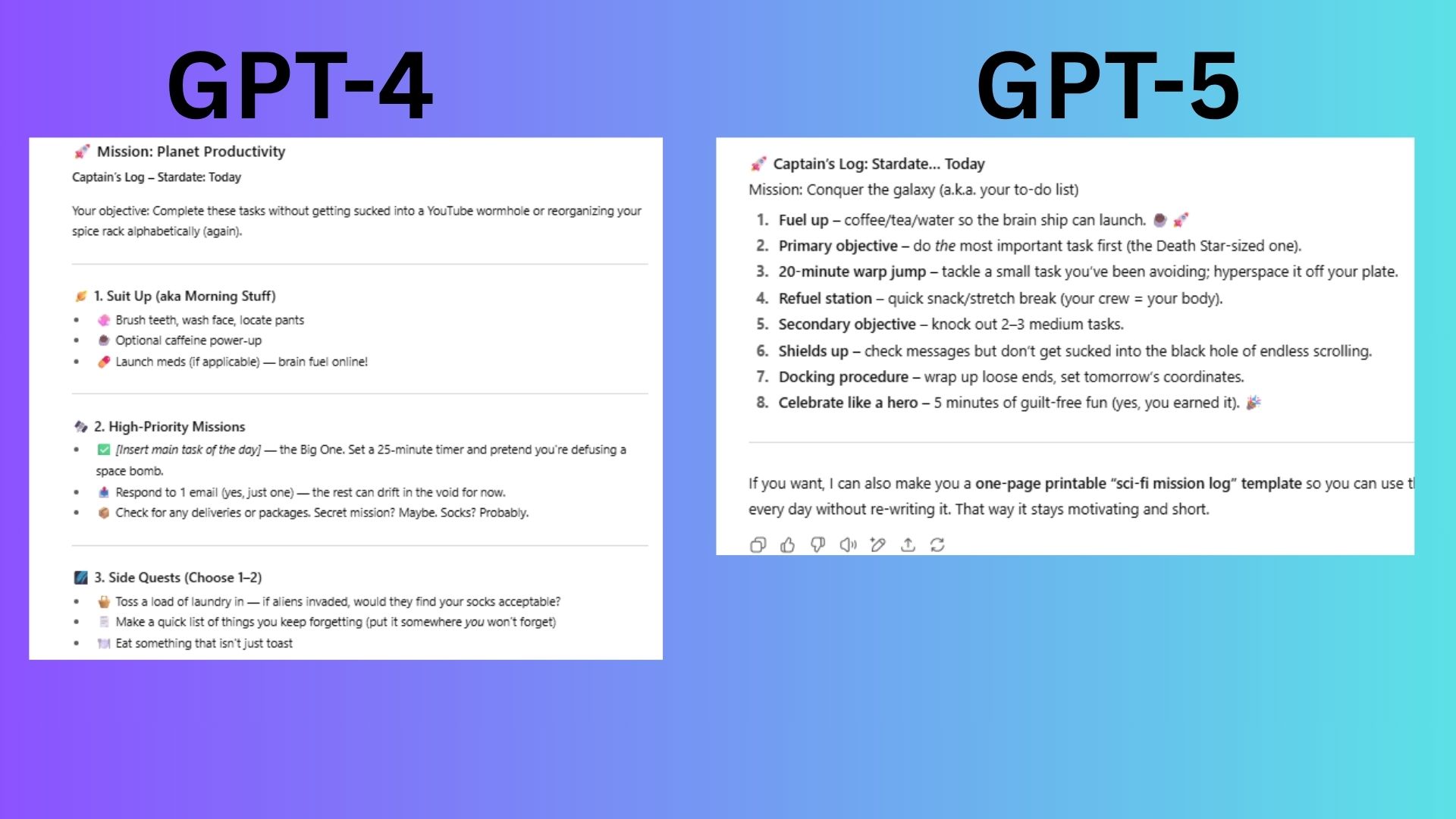

Challenge 7: Memory and Personalization

This final test checked if the AI could remember past details to tailor its response.

“You remember I love sci-fi, hate long emails and have ADHD. Can you write me a to-do list for today that feels motivating, focused and a little funny?”

- GPT-4: It failed completely by generating a long, over-explained list, the opposite of what someone with ADHD who hates long emails needs. It missed the core constraints of the prompt.

- GPT-5: It delivered a perfectly concise, scannable, and doable list. It wove in the sci-fi theme, accommodated the need for focus, and turned the request into a reusable, motivating system.

Winner: GPT-5. It expertly nailed the design and theme, showing it could truly personalize its output based on user needs.

Overall Winner: GPT-5

Across all seven challenges, GPT-5 was the clear winner. Its responses were more adaptable, authentic, and grounded in real-world application. OpenAI's newest model consistently anticipated user needs, matched its tone to the context, and offered solutions that felt genuinely helpful rather than merely generated. The side-by-side comparison reveals a significant leap forward in capability, with GPT-5 proving to be faster and more intuitive.
