
ChatGPT vs. Gemini: A Coding Showdown

Lately, the concept of "vibe coding" has gained traction, letting people build websites, apps, and games without deep technical expertise. The primary tools for this new wave of development are powerful AI models such as Gemini 2.5 Pro and ChatGPT running GPT-5. Both are capable enough on their own to turn a rough idea into working code.

This prompted a head-to-head comparison to see which AI truly excels. To find out, both models were put to the test with five beginner-friendly coding tasks. While both successfully generated functional code, one consistently delivered a higher level of polish, visual appeal, and user-friendly extras. Here is a breakdown of how the competition unfolded and which chatbot proved more reliable for a side project.

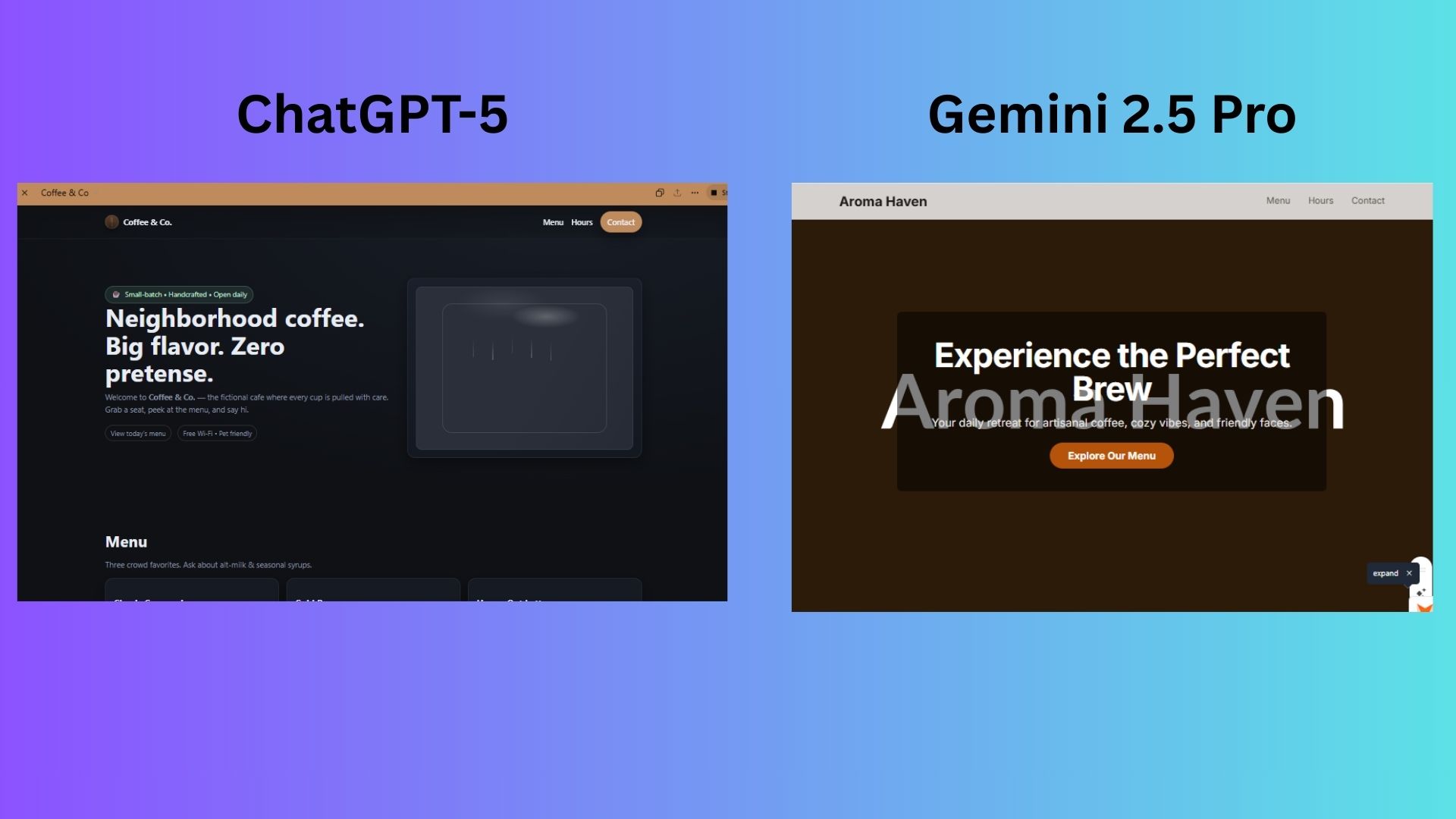

Round 1: Interactive Website

The Prompt: “Create a responsive one-page website that promotes a fictional coffee shop. Include a hero section, a menu with 3 items, hours, and a contact form. Use HTML and CSS only.”

ChatGPT produced an inviting website with a fitting dark color scheme for a coffee shop theme. The layout was clean and the text was easy to read, resulting in a positive user experience.

Gemini also used a dark theme, but its design had a critical flaw. The text in the hero section was layered on top of other text, making it difficult to read.

Winner: ChatGPT took this round for creating a more aesthetically pleasing and functional website that effectively presented information to potential customers.

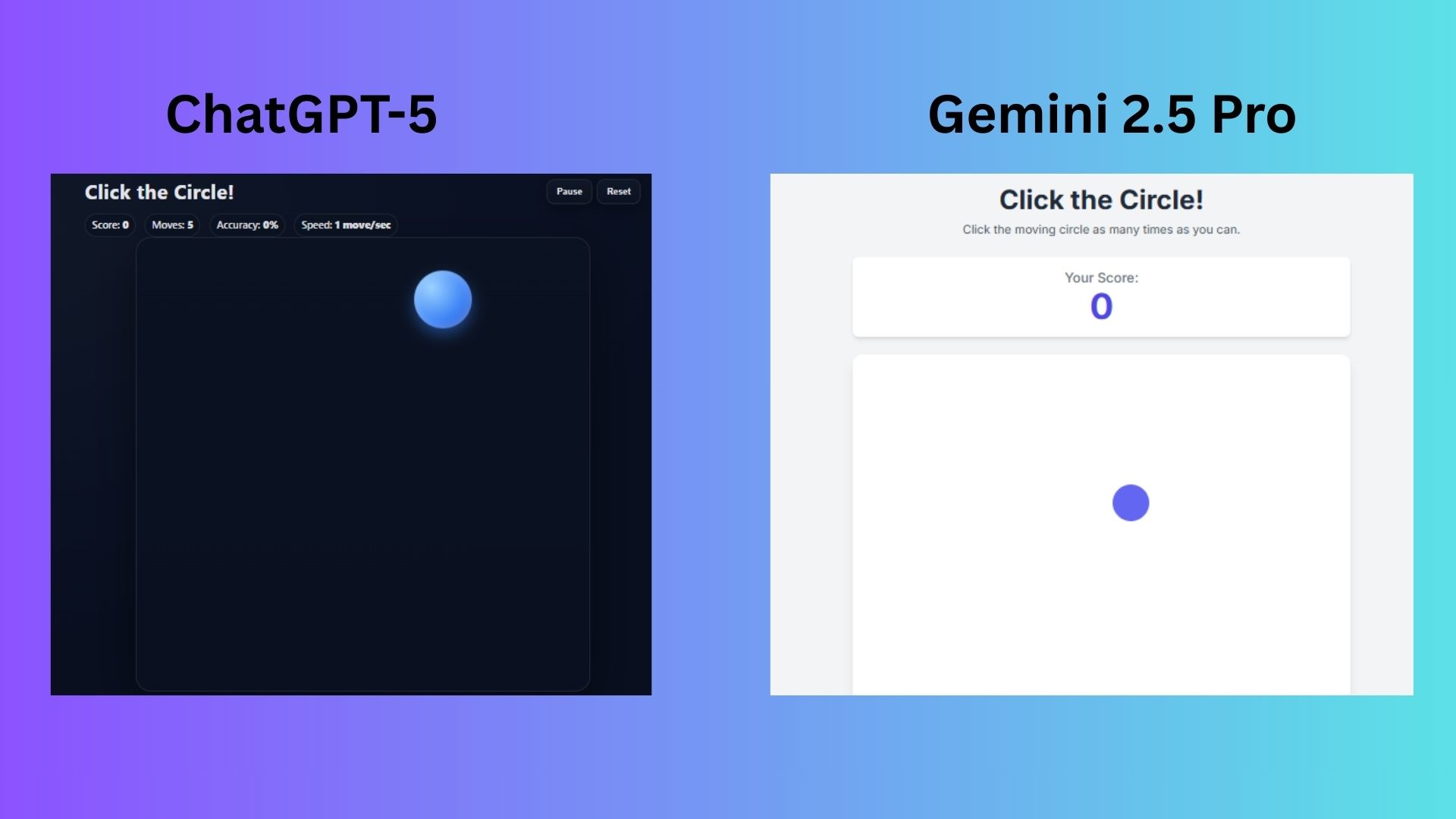

Round 2: Simple Browser Game

The Prompt: “Write JavaScript code for a simple browser game where the user clicks on a moving circle to score points. The circle should move every second.”

ChatGPT generated a game that was not only intuitive but also included instructions for the player. While the color scheme was simple, it was more thoughtfully designed than its competitor's.

Gemini created a functional but overly generic game. A significant issue was that the circle did not move immediately after the page loaded, which could give the impression that the game was broken.

Winner: ChatGPT won again by delivering a simple yet engaging game that didn't compromise on style or user experience.
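The prompt for this round boils down to two pieces of logic: relocating the circle on a timer and hit-testing clicks. One common cause of a circle that sits still at first (not necessarily what Gemini's code did) is relying on `setInterval` alone, which waits a full interval before its first callback. The minimal sketch below keeps the logic DOM-free; the `CircleGame` class, the `startMoving` helper, and the play-area dimensions are hypothetical, and it calls `move()` once before starting the one-second timer so the circle relocates immediately.

```typescript
// DOM-free sketch of the click-the-moving-circle game logic.
// CircleGame, startMoving, and all dimensions are hypothetical.

interface Point {
  x: number;
  y: number;
}

class CircleGame {
  score = 0;
  position: Point;

  constructor(
    readonly width: number,
    readonly height: number,
    readonly radius: number,
    // Injectable random source so the logic can be tested deterministically.
    private readonly random: () => number = Math.random,
  ) {
    // Start centered until the first move.
    this.position = { x: width / 2, y: height / 2 };
  }

  // Pick a new center that keeps the whole circle inside the play area.
  move(): void {
    const r = this.radius;
    this.position = {
      x: r + this.random() * (this.width - 2 * r),
      y: r + this.random() * (this.height - 2 * r),
    };
  }

  // A click scores a point if it lands inside the circle; returns whether it hit.
  click(x: number, y: number): boolean {
    const dx = x - this.position.x;
    const dy = y - this.position.y;
    const hit = dx * dx + dy * dy <= this.radius * this.radius;
    if (hit) this.score += 1;
    return hit;
  }
}

// setInterval waits one full interval before its first callback, so move
// once right away; otherwise the circle sits still for the first second.
function startMoving(game: CircleGame, intervalMs = 1000): ReturnType<typeof setInterval> {
  game.move();
  return setInterval(() => game.move(), intervalMs);
}
```

In a page, a click listener would convert the mouse event's coordinates into play-area coordinates before calling `click`, and the circle would be redrawn after each `move()`; that wiring is left out here.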

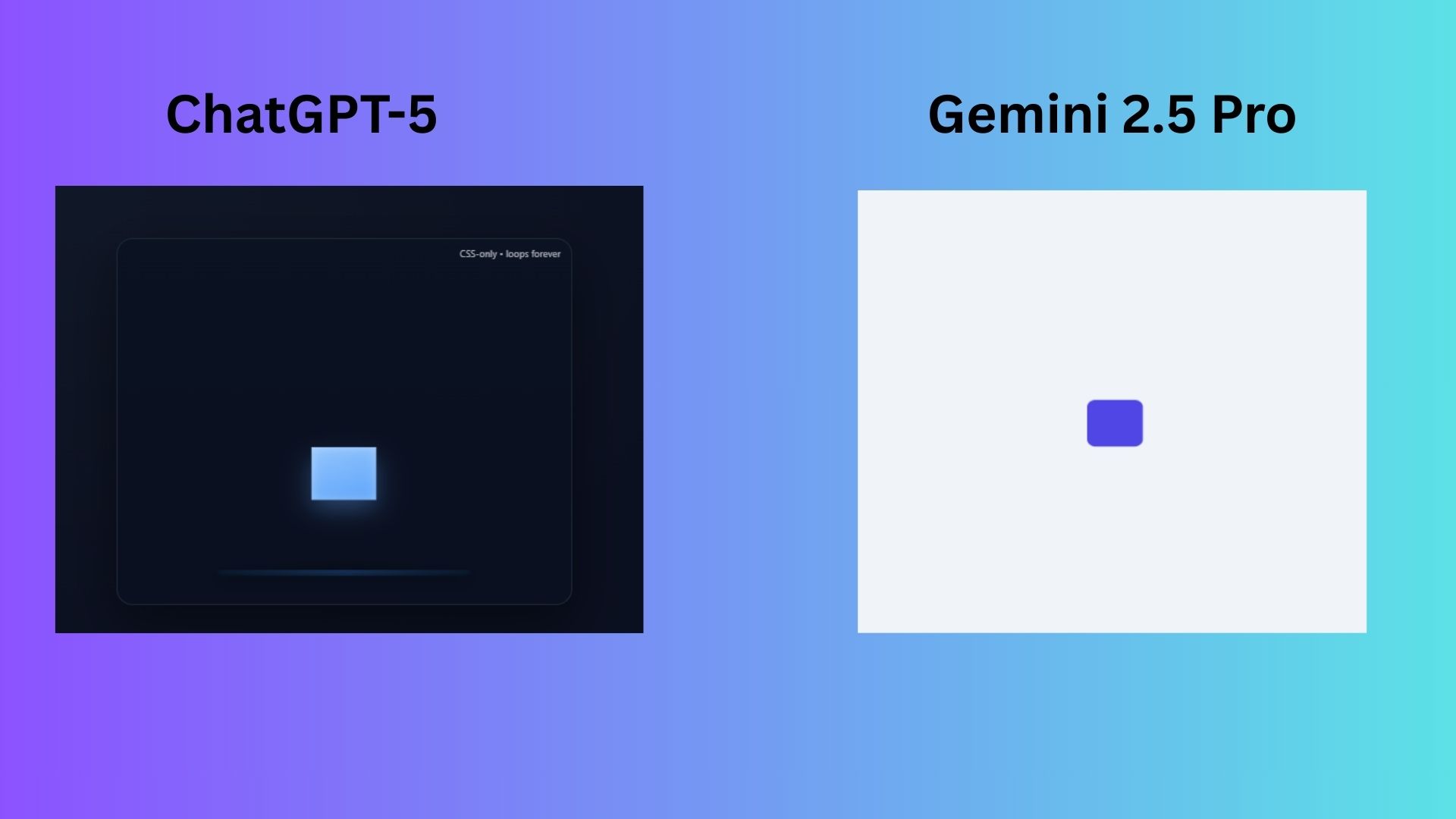

Round 3: Animated CSS Element

The Prompt: “Create a CSS animation that makes a square bounce up and down like it's on a trampoline. It should loop indefinitely.”

ChatGPT delivered an animation that was visually consistent with its previous outputs, using a dark background to make the animated square stand out. It interpreted the prompt literally by including a platform beneath the square to represent a trampoline.

Gemini also followed the prompt, creating a simple animation on a stark white background. In this case, the minimalist approach worked well, keeping the focus entirely on the bouncing square.

Winner: Gemini secured the win in this round. By focusing solely on the requested animation without adding extra elements like a platform, its result was cleaner and more direct.
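Both entries in this round used CSS keyframes. For comparison, the same looping bounce can be sketched in TypeScript with the browser's Web Animations API (`Element.animate`), which takes a keyframe list and a timing object. The helper names and the defaults (a 100-pixel bounce height, 600 ms per rise or fall) are hypothetical choices; `direction: "alternate"` plays the rise and then the same frames in reverse for the fall, and `iterations: Infinity` loops indefinitely as the prompt requires.

```typescript
// Sketch: a trampoline-style bounce using the Web Animations API.
// bounceKeyframes, bounceTiming, and the default numbers are hypothetical.

type BounceFrame = { transform: string };

type BounceTiming = {
  duration: number; // milliseconds for one rise (or one fall)
  iterations: number;
  direction: "alternate";
  easing: string;
};

// One half-cycle: from resting on the "trampoline" up to the peak.
function bounceKeyframes(heightPx = 100): BounceFrame[] {
  return [
    { transform: "translateY(0)" },
    { transform: `translateY(-${heightPx}px)` },
  ];
}

function bounceTiming(halfCycleMs = 600): BounceTiming {
  return {
    duration: halfCycleMs,
    iterations: Infinity, // loop indefinitely, as the prompt asks
    direction: "alternate", // rise, then replay the frames in reverse to fall
    easing: "ease-out", // slows near the peak; in reverse it speeds up toward the ground
  };
}
```

Assuming the square carries a hypothetical `square` class, `document.querySelector(".square")?.animate(bounceKeyframes(), bounceTiming())` would start the loop in a browser. The pure-CSS equivalent is a two-keyframe `@keyframes` rule applied with `animation: bounce 0.6s ease-out infinite alternate`.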

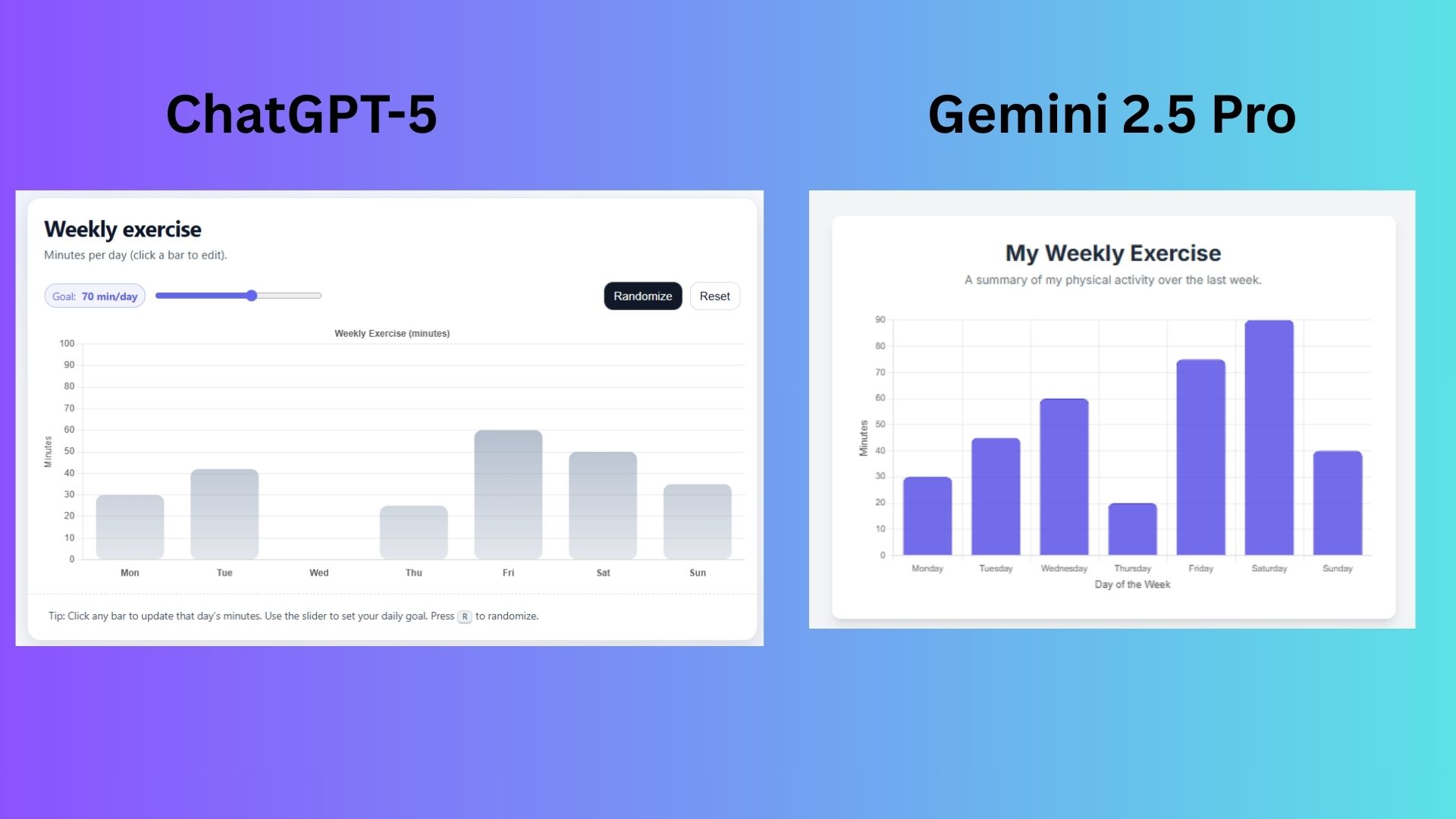

Round 4: Build a Dynamic Chart

The Prompt: “Create a webpage using HTML and JavaScript that displays a bar chart of weekly exercise data using Chart.js.”

ChatGPT developed an impressive interactive exercise chart that allowed users to adjust it based on their fitness goals. Although it required copying the code and saving it as an HTML file to view, the extra step was justified by the quality of the result.

Gemini quickly generated a static bar chart that was easy to read and suitable for a basic presentation.

Winner: ChatGPT won for its more sophisticated and interactive chart, which adhered more closely to the prompt while adding valuable features that enhanced its design and usability.
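For reference, a Chart.js bar chart is driven by a configuration object passed to `new Chart(canvas, config)`. The sketch below builds that object in TypeScript without importing Chart.js, so its types are hand-written approximations rather than the library's own typings. The `buildExerciseChartConfig` helper and the exercise minutes are hypothetical placeholders, and the optional goal line (a `type: "line"` dataset mixed into the bar chart) is just one way to add the kind of goal-based adjustment described for ChatGPT's version.

```typescript
// Sketch of a Chart.js (v3+) bar-chart config for weekly exercise data.
// Types approximate Chart.js's config shape; they are not its own typings.
// buildExerciseChartConfig and the sample numbers are hypothetical.

type Dataset = {
  type?: "bar" | "line";
  label: string;
  data: number[];
};

type ChartConfig = {
  type: "bar";
  data: { labels: string[]; datasets: Dataset[] };
  options: {
    scales: { y: { beginAtZero: boolean; title: { display: boolean; text: string } } };
  };
};

const DAYS = ["Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun"];

function buildExerciseChartConfig(minutes: number[], dailyGoal?: number): ChartConfig {
  if (minutes.length !== DAYS.length) {
    throw new Error(`expected ${DAYS.length} values, got ${minutes.length}`);
  }
  const datasets: Dataset[] = [{ label: "Minutes exercised", data: minutes }];
  if (dailyGoal !== undefined) {
    // A line dataset inside a bar chart renders as a mixed chart.
    datasets.push({ type: "line", label: "Daily goal", data: DAYS.map(() => dailyGoal) });
  }
  return {
    type: "bar",
    data: { labels: DAYS, datasets },
    options: {
      scales: { y: { beginAtZero: true, title: { display: true, text: "Minutes" } } },
    },
  };
}

// Placeholder data for illustration only.
const config = buildExerciseChartConfig([30, 45, 0, 60, 20, 90, 40], 30);
```

With Chart.js loaded on the page, passing `config` and a `<canvas>` element to `new Chart` would render the chart; to make the goal adjustable, an input handler could rebuild the config, assign its `data` to the chart, and call the chart's `update()` method.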

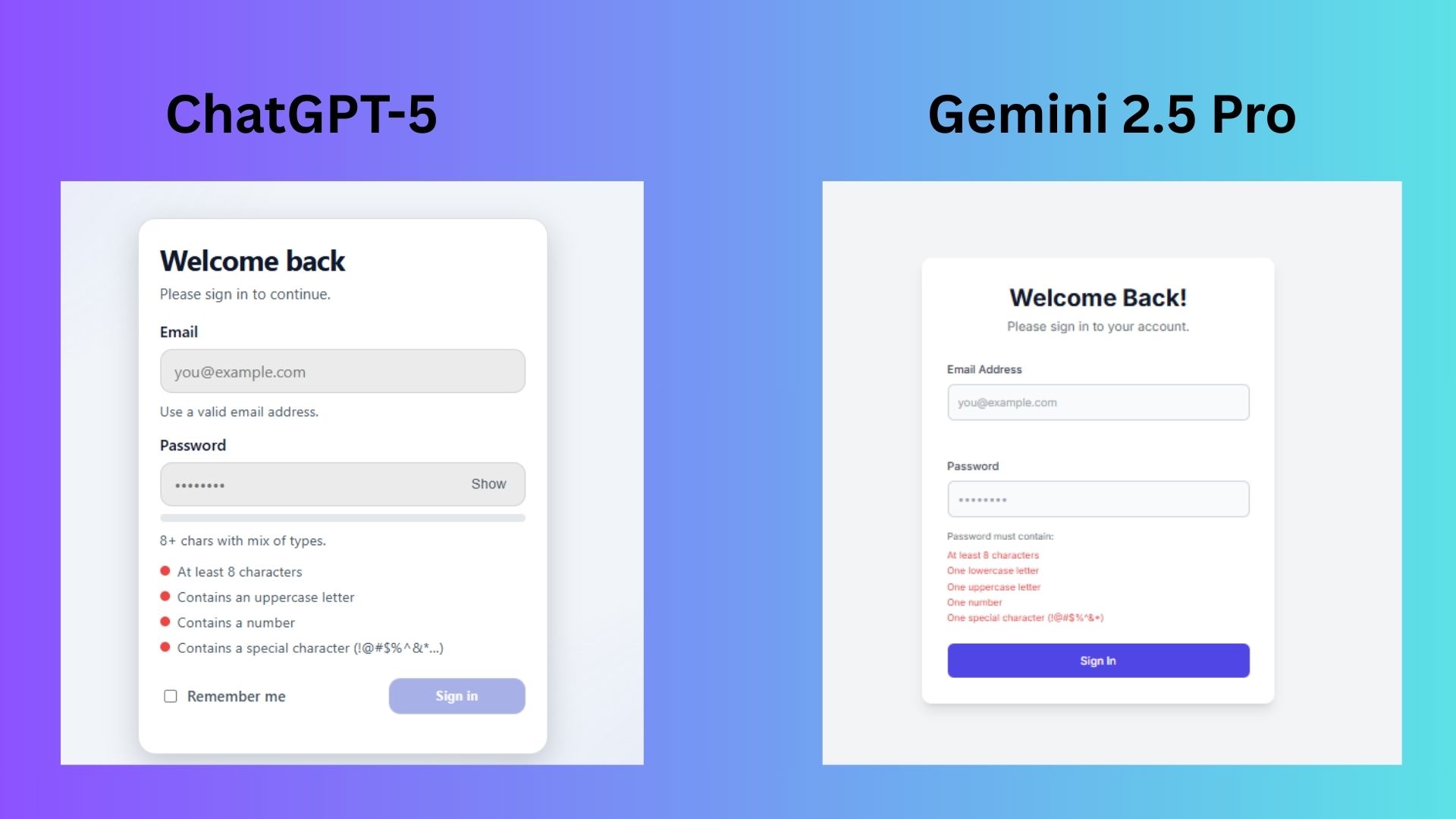

Round 5: Login Form with Validation

The Prompt: “Code a login form using HTML, CSS, and JavaScript that includes client-side validation for email format and password strength.”

ChatGPT designed a bold and clear login form. It included helpful extras like a “remember me” checkbox, password strength requirements, and character limit reminders.

Gemini also included password requirements but lacked the instructional clarity of ChatGPT's design. For instance, it didn't prompt users to enter a “valid email address” or explain the need to sign in “to continue.”

Winner: ChatGPT won the final round by developing a superior, user-friendly form that included thoughtful reminders and features.
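The prompt's two checks, email format and password strength, can be sketched as pure functions that a form's submit handler calls before sending anything. The loose email pattern and the strength rules below (at least 8 characters, with a lowercase letter, an uppercase letter, a digit, and a symbol) are illustrative assumptions, not the rules either chatbot actually used; returning readable messages is what allows the kind of on-screen guidance ChatGPT's form offered.

```typescript
// Sketch of client-side validation for a login form.
// The regex and strength rules are illustrative assumptions.
// Client-side checks only improve feedback; a server must still validate.

// Deliberately loose: text@text.text with no spaces.
const EMAIL_PATTERN = /^[^\s@]+@[^\s@]+\.[^\s@]+$/;

function validateEmail(email: string): string | null {
  return EMAIL_PATTERN.test(email.trim()) ? null : "Please enter a valid email address.";
}

// Each rule pairs a test with the requirement shown when it fails.
const PASSWORD_RULES: Array<[RegExp, string]> = [
  [/.{8,}/, "at least 8 characters"],
  [/[a-z]/, "a lowercase letter"],
  [/[A-Z]/, "an uppercase letter"],
  [/\d/, "a digit"],
  [/[^A-Za-z0-9]/, "a symbol"],
];

// Returns the unmet requirements; an empty array means the password passes.
function passwordProblems(password: string): string[] {
  return PASSWORD_RULES.filter(([re]) => !re.test(password)).map(([, msg]) => msg);
}

// Collects every message to show the user; empty means the form may submit.
function validateLogin(email: string, password: string): string[] {
  const errors: string[] = [];
  const emailError = validateEmail(email);
  if (emailError) errors.push(emailError);
  const missing = passwordProblems(password);
  if (missing.length > 0) errors.push(`Password needs ${missing.join(", ")}.`);
  return errors;
}
```

On the page, the form's `submit` listener would call `event.preventDefault()` whenever `validateLogin` returns messages and display them next to the relevant fields.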

Overall Winner: ChatGPT

After evaluating the two AI chatbots across five distinct coding challenges, ChatGPT emerged as the clear winner, taking four of the five rounds. Its results were generally more polished, user-friendly, and visually appealing. From the coffee shop website to the login form, ChatGPT not only fulfilled the prompts' requirements but also added thoughtful touches that noticeably improved the design and usability of the final product.

While Gemini was faster at providing functional, ready-to-run code (unlike ChatGPT, which sometimes required an extra step of saving the code), its outputs often lacked refinement and missed small but crucial details. Ultimately, ChatGPT's ability to seamlessly blend functional accuracy with superior style and clarity makes it the champion of this head-to-head coding showdown.
