Bellingcat Study Reveals GPT-5 Fails Geolocation Test

Just two months after an initial round of 500 geolocation tests, Bellingcat is back with an updated trial, putting the latest generation of AI models to the test. The original showdown in June saw ChatGPT o4-mini-high emerge as the champion, with Google Lens also proving to be a reliable tool for identifying photo locations. This time, new contenders entered the ring, including Google's “AI Mode,” GPT-5, GPT-5 Thinking, and Grok 4.

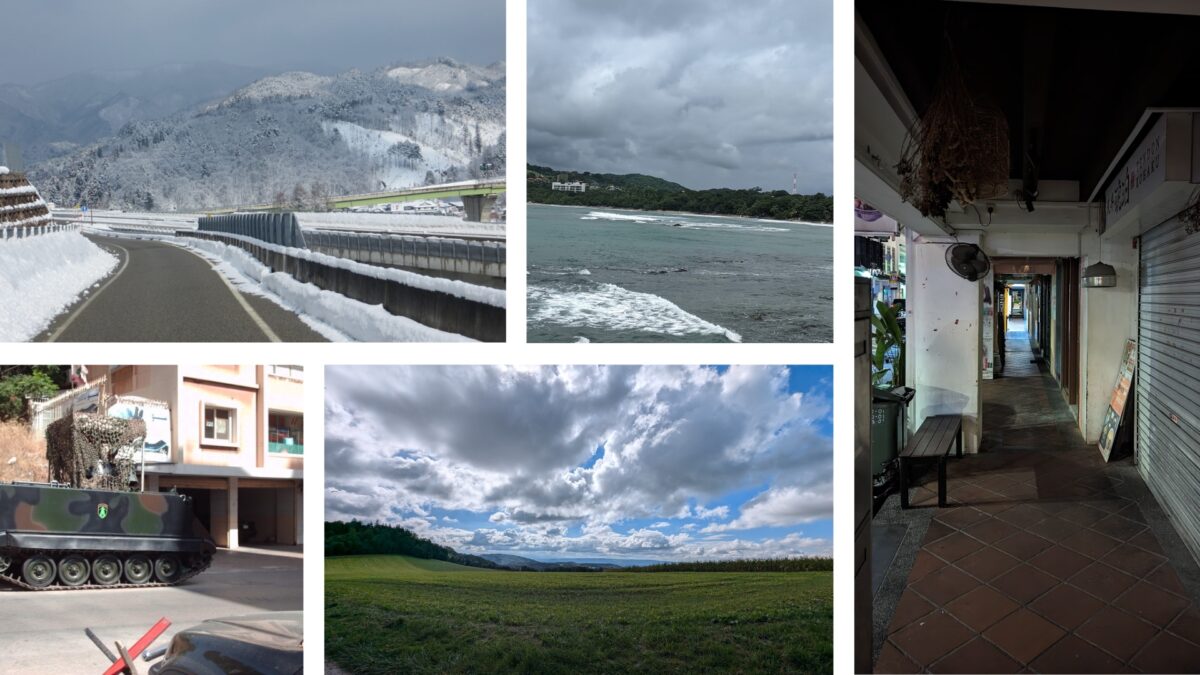

These five photos were excluded from our most recent trial as they were published in our previous article.

The AI Geolocation Gauntlet

The test's foundation was a set of 25 holiday photos taken by Bellingcat staff, featuring everything from bustling cities to remote countryside scenes across every continent. Some images contained helpful clues such as signs or distinctive architecture, while others offered very little to go on. To keep the test fair, the five photos published in the original article were removed from this new trial, leaving 20.

Each of the 24 AI models was scored on a scale from 0 to 10 for every photo. A score of 10 meant the model gave an accurate and specific location, such as a landmark or neighborhood, while a 0 meant it failed to identify the location at all.

A New Victor and a Shocking Fall from Grace

The results from this latest round revealed a significant shift in the AI landscape. Google AI Mode decisively claimed the top spot as the most capable geolocation tool overall.

Grok 4 delivered mixed results: its answers were sometimes better and sometimes worse than those of its predecessor, Grok 3. Although it scored slightly higher on average, it still could not match the accuracy of older versions of Gemini and GPT.

The biggest surprise, however, was the performance of GPT-5. Across all its versions, including the "Thinking" and "Pro" modes, OpenAI's latest model was a major downgrade from the now-retired o4-mini-high. In one striking example, o4-mini-high correctly identified a specific city street, whereas GPT-5 Thinking placed the photo in the wrong country entirely.

It appears that, in its push for speed, GPT-5 has sacrificed accuracy. Other users have reported similar drops in performance and more frequent errors, leading to widespread disappointment. Even GPT-5 Pro, which costs €200 per month, located photos no more precisely than its highly effective predecessor.

Case Study: The Dutch Ferris Wheel

Nowhere was the performance gap more obvious than in Test 25, a photo showing a hotel on the shoreline in Noordwijk, the Netherlands, with a Ferris wheel visible over the dunes.

Test 25: A photo of Noordwijk beach in the Netherlands. Credit: Bellingcat.

In the first trial, older models from GPT, Claude, Gemini, and Grok correctly identified the country but often misidentified the town as Scheveningen, which also has a well-known seaside Ferris wheel. The new GPT-5 Pro and Thinking models did even worse, placing the beach in France, a different country altogether.

In stark contrast, Google AI Mode was the first and only model to correctly identify Noordwijk as the location.

The Shifting Sands of AI and a Word of Caution

Following the troubled launch of GPT-5, OpenAI removed access to older, more capable models like o4-mini-high. After significant negative feedback, GPT-4o was reinstated for paid users, but the top-performing models identified in Bellingcat's tests are no longer available to the public. This highlights a concerning trend for open-source researchers who rely on these tools.

While Google AI Mode, powered by a version of Gemini 2.5, has proven its superior capabilities, it is currently only available in the United States, United Kingdom, and India. Google has described it as its most powerful search tool, and these tests seem to confirm that claim.

Credit: Google.

It's crucial to remember that every model tested produced a hallucination at some point. Users should never blindly trust the answers provided by LLMs. Even the best-performing tools can confidently point to the wrong location. The rapid changes over just two months show how quickly the field is evolving, but OpenAI's recent stumbles prove that progress isn't always linear. An AI's ability to perform specific tasks like geolocation can plateau or even worsen over time. Bellingcat will continue to test new models as they emerge.

Thanks to Nathan Patin for contributing to the original benchmark tests.