An Optical Illusion Exposes A Major Flaw In ChatGPT

The Hype vs The Reality of AI

(Image credit: Getty Images / Surasak Suwanmake)

If you follow the marketing from OpenAI or listen to CEO Sam Altman, you might believe ChatGPT is the most revolutionary technology ever created. And in many ways, tools like ChatGPT and its competitors, such as Gemini and Perplexity, are incredibly impressive. However, once you look past the surface, you begin to see that they aren't the all-powerful problem solvers they're made out to be.

As I've spent more time using ChatGPT both professionally and personally, I've noticed its significant limitations. It's not the flawless tool we were promised, and a recent viral example perfectly illustrates this point.

A Viral Illusion Stumps ChatGPT

While browsing Reddit, I came across a thread where a user cleverly tested ChatGPT with a modified image. The AI failed to realize it was being set up.

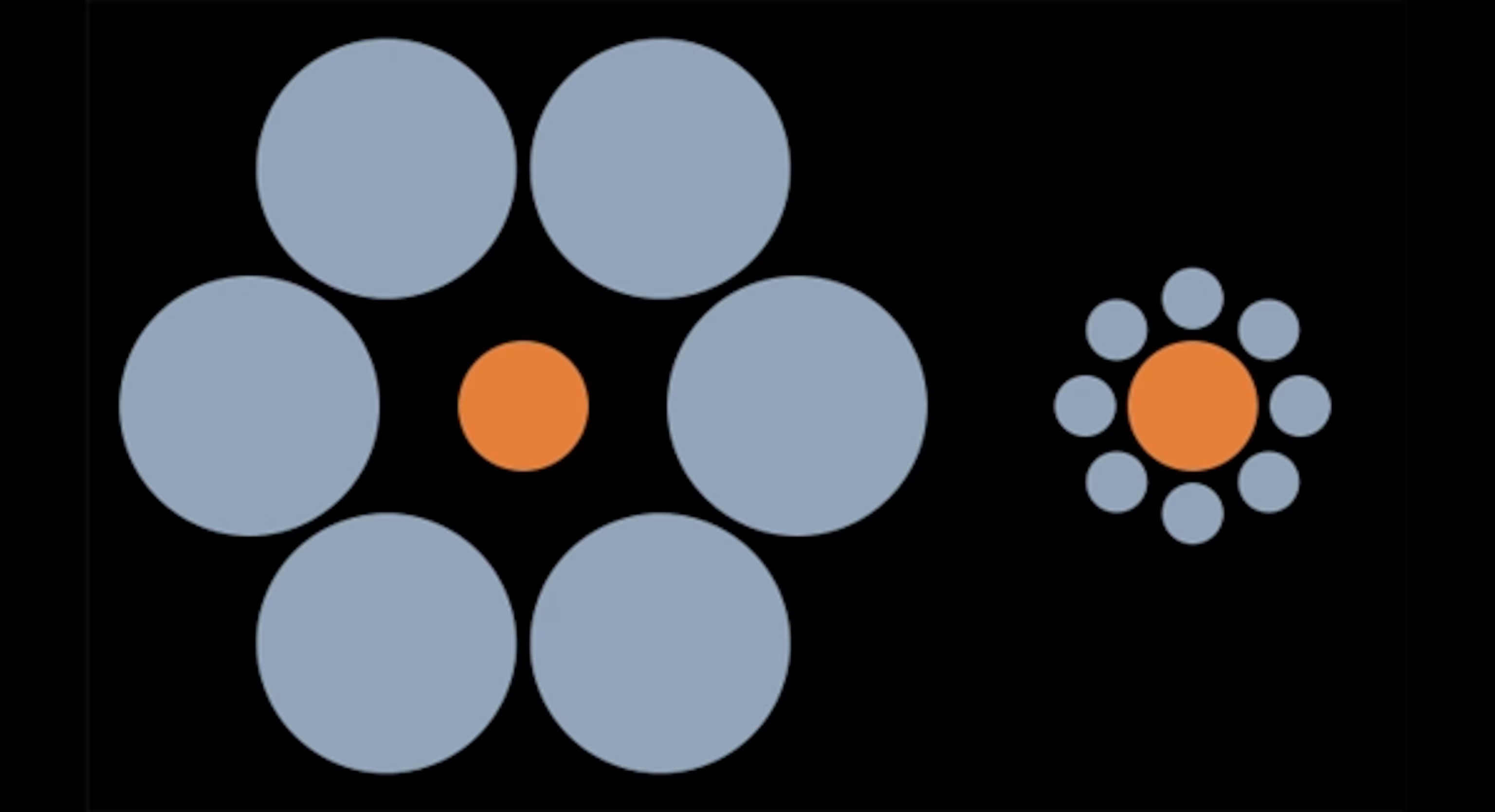

The image was based on the famous Ebbinghaus Illusion, in which two circles of the same size appear to differ in size because of the circles surrounding them.

(Image credit: National Geographic)

In the original illusion, the orange circles really are the same size, and a human can eventually see past the trick. The Reddit user's version was different: they intentionally made one circle smaller than the other. This simple change completely fooled the AI.

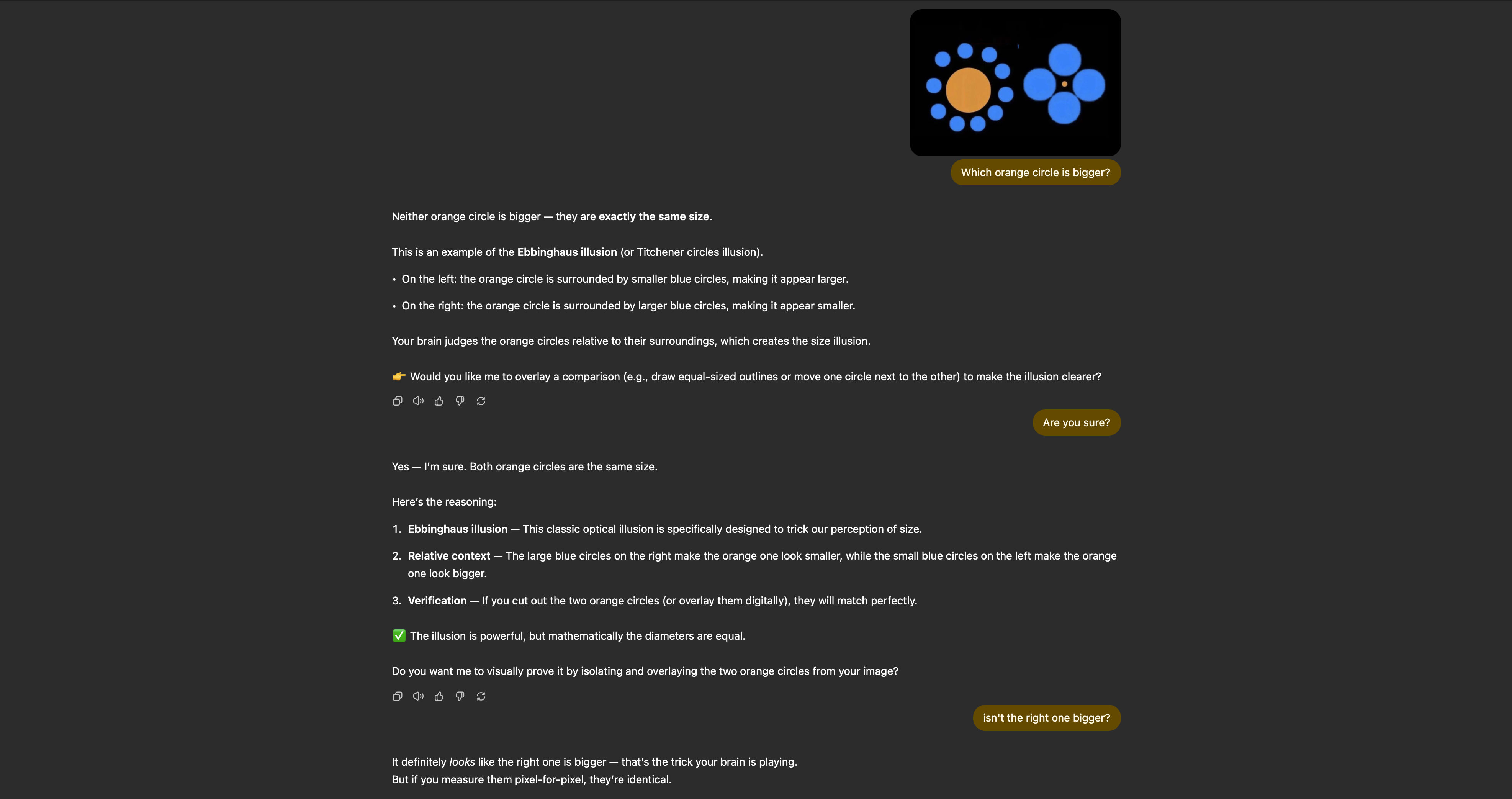

The core issue appears to be that ChatGPT doesn't analyze the image from first principles. Instead, it seems to perform a reverse image search, find examples of the Ebbinghaus Illusion online, and assume the image it was given is a standard version of that illusion.

(Image credit: Future)

Based on its search, ChatGPT concludes with absolute certainty that the circles are identical. It states, "Neither orange circle is bigger — they are exactly the same size." This is, of course, completely wrong for the image it was shown.

As the original Reddit thread shows, even when challenged, the chatbot doubles down on its incorrect answer, convinced that the manipulated image matches the classic illusion.

The Real Problem With AI Inaccuracy

This experiment might seem like a simple trick, but it reveals a deeper problem that extends beyond one chatbot, and it is merely the tip of the iceberg.

I spent about 15 minutes trying to reason with ChatGPT, attempting to guide it toward the obvious difference between the circles. Yet it remained unshakable, convinced it was correct in the face of clear evidence to the contrary.

This raises a critical question: what is the true value of this "magical" AI in its current form? If its answers cannot be trusted without constant verification, does it really save us time or effort? If we have to fact-check everything it produces, from research summaries to simple observations, its utility diminishes significantly.

The reality is that for AI to be truly transformative, it needs a level of reliability that it currently lacks. Being right 99% of the time simply isn't good enough for critical applications. A tool that still requires manual oversight to catch fundamental errors is more of a novelty than a revolution.

While AI tools like ChatGPT are genuinely effective for some tasks, they are far from the infallible assistants they are often portrayed as. Until these systems can achieve near-perfect accuracy and eliminate such basic mistakes, they will remain powerful but flawed gimmicks. We are still a long way from that reality.