Five Key Advantages Of Using Local AI Tools

When you hear the term AI, your mind likely jumps to big names like ChatGPT, Copilot, or Google Gemini. These online chatbots dominate the headlines, and for good reason. But the world of artificial intelligence extends far beyond these cloud-based giants. From Copilot+ PCs to video editing and running your own Large Language Model (LLM), many powerful AI tasks can be performed right on your personal computer without relying on an internet connection.

Here are five compelling reasons why you should consider exploring the world of local AI over its online counterparts.

A Quick Note on Hardware Requirements

Before we dive in, it's important to address the elephant in the room: hardware. To run the latest local LLMs, you need a capable machine. The impressive local features of Copilot+ PCs, for example, are powered by their integrated NPUs (Neural Processing Units). While not all local AI requires an NPU, the processing power has to come from somewhere, often a powerful GPU. Always check the system requirements before attempting to run a new local AI tool.
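As a rough way to sanity-check whether a model might fit on your hardware, you can estimate the memory its weights alone need from the parameter count and the precision they are stored at. The sketch below is a back-of-the-envelope calculation, not a substitute for a tool's published requirements: the function name and the example figures are illustrative assumptions, and it ignores the extra memory needed for the context cache and runtime overhead.

```python
def estimate_weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights only, in GB (1 GB = 10^9 bytes)."""
    bytes_per_weight = bits_per_weight / 8
    # billions of parameters x bytes per parameter = billions of bytes = GB
    return params_billion * bytes_per_weight


# Hypothetical 20-billion-parameter model at two common precisions.
for bits in (16, 4):
    print(f"{bits}-bit weights: ~{estimate_weight_memory_gb(20, bits):.0f} GB")
```

Under these assumptions, the same model needs roughly 40 GB at 16-bit precision and roughly 10 GB at 4-bit, which is why quantized versions are usually the ones that fit on consumer GPUs.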

1. Unplug and Go: The Freedom of Offline Access

This is the most obvious, yet most powerful, benefit. Local AI runs on your PC, meaning it doesn't need an internet connection to function. While services like ChatGPT and Copilot are powerful, they are useless without a stable web connection.

You could be on a plane, in a remote area, or simply experiencing an internet outage, and a local model like OpenAI's gpt-oss:20b will still work perfectly. The same applies to other tools like Stable Diffusion for image generation or the AI features in video editors like DaVinci Resolve. This approach gives you true ownership and portability, freeing you from concerns about server capacity, stability, or sudden changes to terms of service. You also aren't at the mercy of companies retiring older models you might prefer, a current point of contention for users as services move towards GPT-5.

2. Your Data Stays Yours: Superior Privacy and Control

When you use an online AI tool, you are sending your data to a server in the cloud. This raises significant privacy concerns, as you lose direct control over how your information is handled. With local AI, your data never leaves your machine. This is absolutely critical if you work with confidential or sensitive information where security and privacy are non-negotiable.

While services like ChatGPT offer privacy options such as temporary chats, the data still has to travel from your computer to their servers. Running AI locally removes that transfer altogether, which makes it much easier to comply with regional data protection regulations. Your information stays on your machine and under your control.

3. Smarter Spending and a Greener Footprint

Powering massive cloud-based LLMs requires an immense amount of energy, which carries a significant environmental cost. While running AI on your PC also uses power, you have far more control over your personal energy consumption and its source.

From a financial perspective, local AI can be much more economical. The free tiers of online tools are often limited, pushing users towards paid plans, and ChatGPT Pro costs $200 per month. In contrast, if you already own a capable gaming PC with a modern graphics card like an RTX 5080, you can run powerful, open-source LLMs with no subscription fee, paying only for the electricity your machine uses. If you have the hardware, why pay a recurring fee to someone else?
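Running a model on hardware you already own still consumes electricity, so a fair comparison sets that running cost against the subscription price. The sketch below shows the arithmetic only. The power draw, daily usage, and electricity tariff are hypothetical placeholders rather than measurements, and the function name is made up for this example; the $200 figure is the ChatGPT Pro price quoted above.

```python
def monthly_electricity_cost(watts: float, hours_per_day: float,
                             price_per_kwh: float, days: int = 30) -> float:
    """Energy cost of running a machine at a given power draw for a month."""
    kwh = watts / 1000 * hours_per_day * days  # watts -> kilowatt-hours
    return kwh * price_per_kwh


SUBSCRIPTION_PER_MONTH = 200.0  # ChatGPT Pro price quoted in the article

# Hypothetical inputs: replace with your own measured draw, usage, and tariff.
local_cost = monthly_electricity_cost(watts=400, hours_per_day=2, price_per_kwh=0.30)
print(f"Local electricity: ${local_cost:.2f}/month "
      f"vs subscription: ${SUBSCRIPTION_PER_MONTH:.2f}/month")
```

Plugging in your own figures gives a quick sense of how much headroom there is before local inference stops being the cheaper option.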

4. Tailor-Made AI for Your Personal Workflow

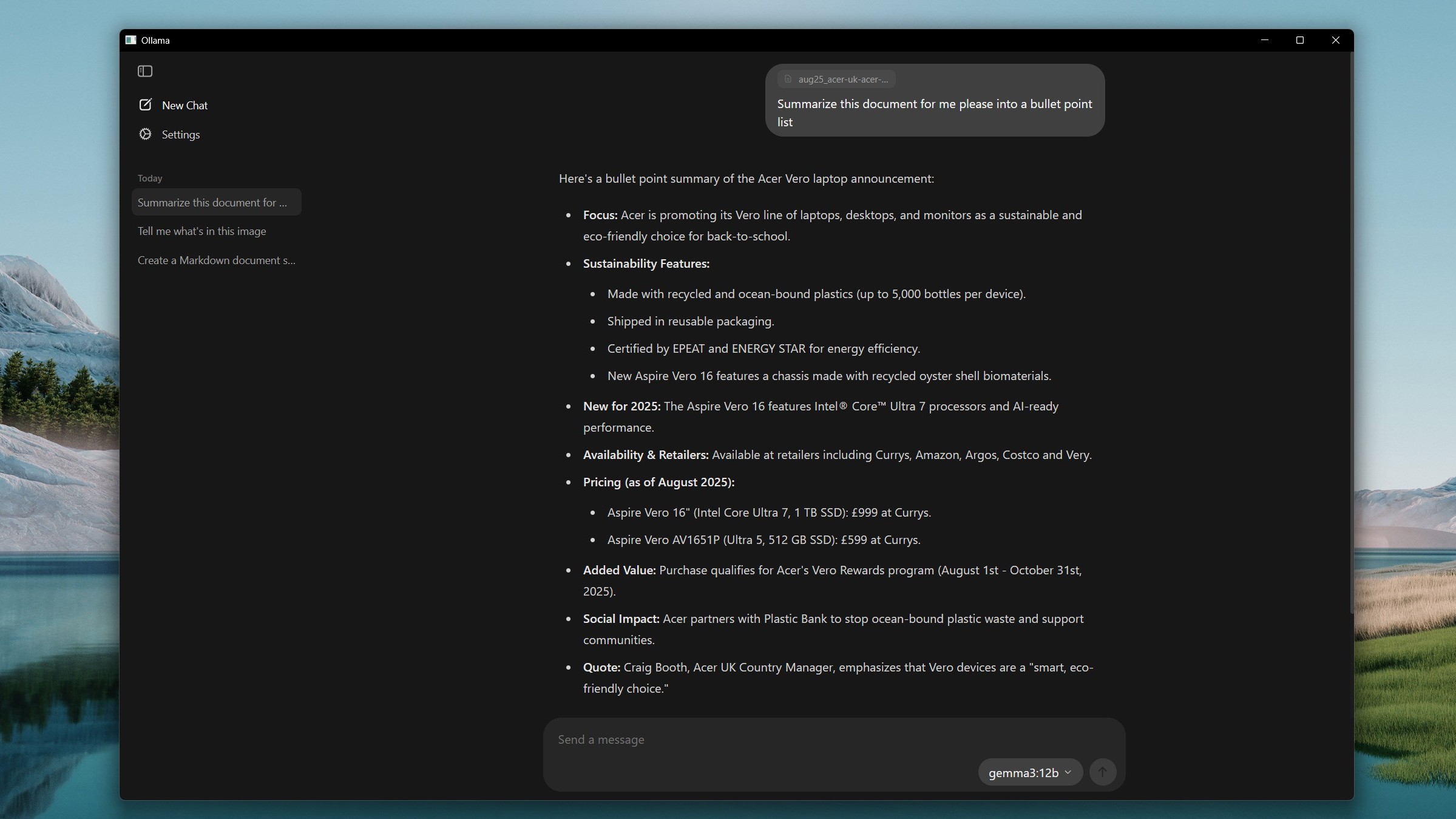

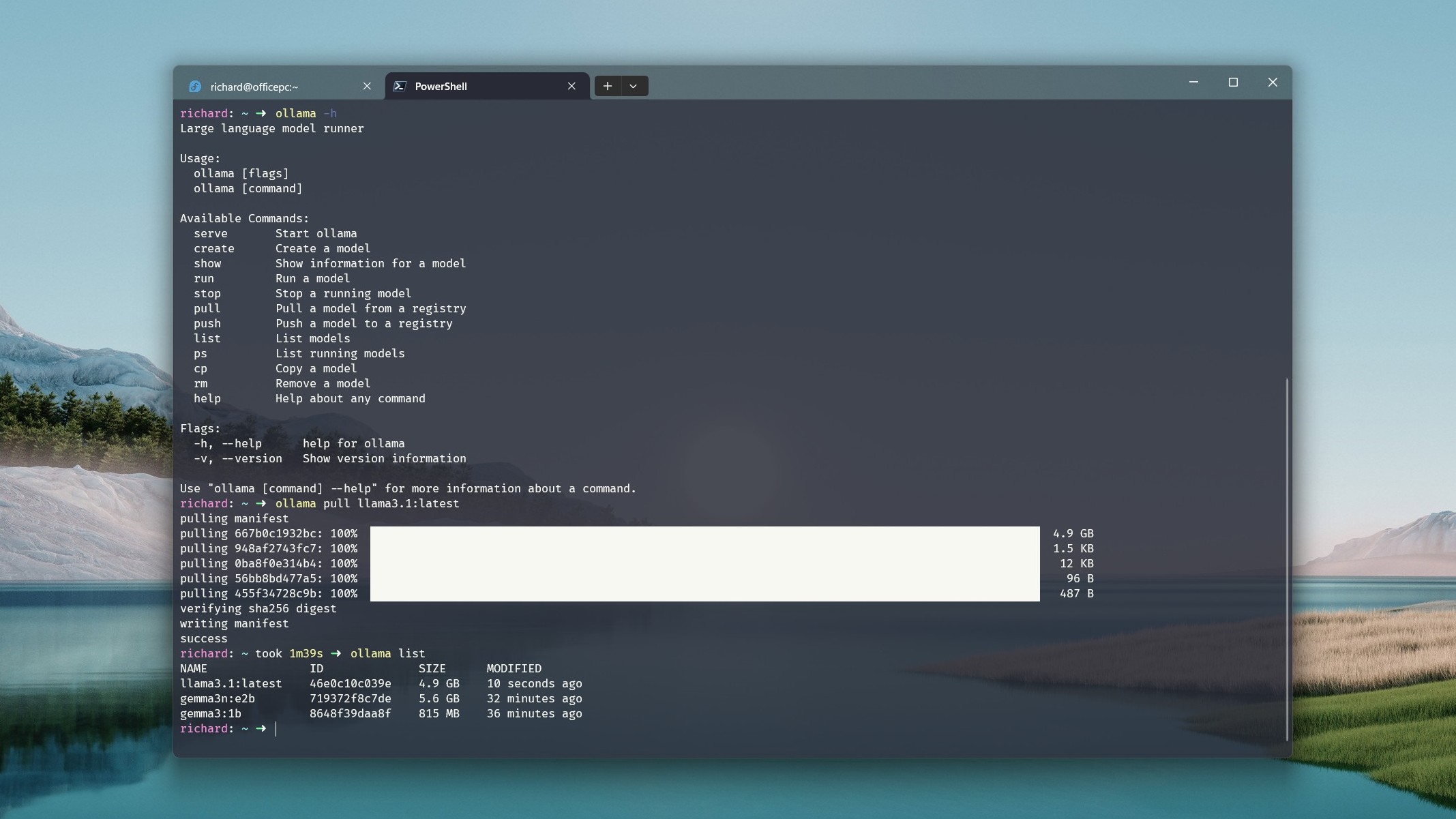

Local AI offers unparalleled opportunities for customization and integration. Using a tool like Ollama, you can integrate an open-source LLM directly into applications like VS Code, creating your own personal coding assistant that runs entirely offline.

This approach allows you to choose models that are specifically fine-tuned for your needs, whether it's coding, writing, or data analysis. Unlike the one-size-fits-all models presented by online chatbots, you have the freedom to select, modify, and fine-tune your own tools to build a workflow perfectly tailored to your requirements.
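To make the integration idea concrete, here is a minimal sketch of how your own script or tool could talk to a locally running Ollama server over its HTTP API. It assumes Ollama is installed and listening on its default port (11434) and that the model tag used here, `gpt-oss:20b`, has already been pulled. The helper names `build_request` and `generate` are invented for this example.

```python
import json
import urllib.request

# Default address of a local Ollama server (assumes a standard install).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "gpt-oss:20b") -> urllib.request.Request:
    """Build a POST request asking the local model for a single, non-streamed reply."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "gpt-oss:20b", timeout: float = 120) -> str:
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))["response"]
```

With the server running, a call such as `generate("Write a docstring for a function that reverses a string.")` returns the model's reply as a string. Swapping the `model` argument for a coding-focused or writing-focused model is all it takes to try a different specialist, and nothing in the exchange leaves your machine.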

5. A Hands-On Way to Master AI

Beyond practical applications, using local AI is an incredible educational experience. It allows you to move past the 'magic' of simply typing into a browser and learn how the underlying technology actually works. You can experiment with different models, understand their hardware requirements, and even try fine-tuning an open-source LLM yourself.

AI is here to stay, and setting up your own local playground is one of the best ways to develop valuable skills. It gives you the freedom to experiment with your own data without being locked into a single company's ecosystem or subscription model.

Of course, there are drawbacks. Performance on a home PC, even a powerful one, won't match the speed of OpenAI's massive server farms. Running the largest open-source models is still beyond the reach of most consumer gaming PCs. However, despite these limitations, the compelling advantages of privacy, cost, and control make local AI an exciting frontier that is well worth exploring. If you have the hardware, why not give it a try?
